Category: VXLAN

Lab: Routing Between VXLAN Segments

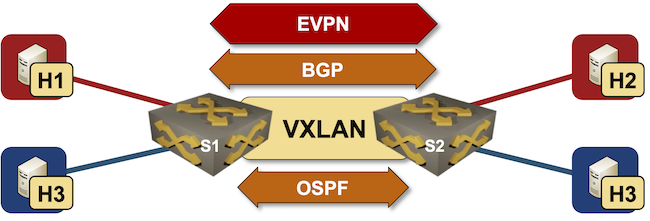

In the previous EVPN/VXLAN lab exercises, we covered the basics of Ethernet bridging over VXLAN and the use of the EVPN control plane to build layer-2 segments.

It’s time to move up the protocol stack. Let’s see how you can route between VXLAN segments, this time using unique unicast IP addresses on the layer-3 switches.

You can run the lab on your own netlab-enabled infrastructure (more details), but also within a free GitHub Codespace or even on your Apple-silicon Mac (installation, using Arista cEOS container, using VXLAN/EVPN labs).

Lab: VXLAN Bridging with EVPN Control Plane

In the previous VXLAN labs, we covered the basics of Ethernet bridging over VXLAN and a more complex scenario with multiple VLANs.

Now let’s add the EVPN control plane into the mix. The data plane (VLANs mapped into VXLAN-over-IPv4) will remain unchanged, but we’ll use EVPN (a BGP address family) to build the ingress replication lists and MAC-to-VTEP mappings.
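As a rough illustration of what the control plane is doing, here is a minimal Python sketch of how a VTEP could turn received EVPN routes into the two tables mentioned above. It assumes the standard EVPN route types (type 3, Inclusive Multicast Ethernet Tag, for ingress replication; type 2, MAC/IP Advertisement, for MAC reachability). The class names, the `build_tables` function, and all addresses and VNIs are made up for this example and do not come from any particular implementation.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImetRoute:
    """Simplified EVPN route type 3 (Inclusive Multicast Ethernet Tag)."""
    vni: int
    vtep: str


@dataclass(frozen=True)
class MacIpRoute:
    """Simplified EVPN route type 2 (MAC/IP Advertisement)."""
    vni: int
    mac: str
    vtep: str


def build_tables(local_vtep, routes):
    """Derive the ingress replication lists and the MAC-to-VTEP map."""
    flood_list = defaultdict(set)   # VNI -> set of remote VTEPs
    mac_table = {}                  # (VNI, MAC) -> remote VTEP
    for route in routes:
        if route.vtep == local_vtep:
            continue                # ignore our own advertisements
        if isinstance(route, ImetRoute):
            flood_list[route.vni].add(route.vtep)
        elif isinstance(route, MacIpRoute):
            mac_table[(route.vni, route.mac)] = route.vtep
    return dict(flood_list), mac_table


# Hypothetical routes as seen by the VTEP 10.0.0.1
routes = [
    ImetRoute(10100, "10.0.0.1"),
    ImetRoute(10100, "10.0.0.2"),
    ImetRoute(10100, "10.0.0.3"),
    ImetRoute(10200, "10.0.0.3"),
    MacIpRoute(10100, "52:54:00:00:00:02", "10.0.0.2"),
]
flood_list, mac_table = build_tables("10.0.0.1", routes)
print(flood_list)
print(mac_table)
```

The data plane is not modeled at all; the sketch only shows that both tables can be derived from BGP updates instead of being configured by hand.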

Lab: More Complex VXLAN Deployment Scenario

In the first VXLAN lab, we covered the very basics. Now it’s time for a few essential concepts (before introducing the EVPN control plane or integrated routing and bridging):

- Each VXLAN segment could have a different set of VTEPs (used to build the BUM flooding list)

- While the VXLAN Network Identifier (VNI) must be unique across the participating VTEPs, you could map different VLAN IDs into a single VNI (allowing you to merge two VLAN segments over VXLAN)

- Neither VXLAN VNI nor VLAN ID has to be globally unique (but it helps to make them unique to remain sane)
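
The first two concepts can be sketched in a few lines of Python. The switch names, VLAN IDs, and VNIs below are hypothetical, and `flood_lists` is just an illustrative helper: each VTEP carries its own VLAN-to-VNI map, and the BUM flooding list for a VNI is simply the set of other VTEPs that participate in that VNI.

```python
from collections import defaultdict

# Hypothetical per-VTEP configuration: local VLAN ID -> VNI
vlan_to_vni = {
    "s1": {100: 10100, 200: 10200},
    "s2": {100: 10100},
    "s3": {300: 10100, 200: 10200},  # VLAN 300 on s3 merges with VLAN 100 elsewhere
}


def flood_lists(vlan_to_vni):
    """Return {vtep: {vni: [remote VTEPs]}} for static ingress replication."""
    members = defaultdict(set)      # VNI -> VTEPs participating in it
    for vtep, mapping in vlan_to_vni.items():
        for vni in mapping.values():
            members[vni].add(vtep)
    return {
        vtep: {vni: sorted(members[vni] - {vtep}) for vni in mapping.values()}
        for vtep, mapping in vlan_to_vni.items()
    }


lists = flood_lists(vlan_to_vni)
for vtep, per_vni in lists.items():
    print(vtep, per_vni)
```

In this sketch, VNI 10100 spans all three switches while VNI 10200 spans only s1 and s3, and VLAN 100 on s1 and s2 ends up in the same segment as VLAN 300 on s3.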

New Project: Open-Source VXLAN/EVPN Labs

After launching the BGP labs in 2023 and IS-IS labs in 2024, it was time to start another project that was quietly sitting on the back burner for ages: open-source (and free) VXLAN/EVPN labs.

The first lab exercise is already online and expects you to extend a single VLAN segment across an IP underlay network using VXLAN encapsulation with static ingress replication.

EVPN Designs: Multi-Pod with IP-Only WAN Routers

In the multi-pod EVPN design, I described a simple way to merge two EVPN fabrics into a single end-to-end fabric. Here are a few highlights of that design:

- Each fabric is running OSPF and IBGP, with core (spine) devices being route reflectors

- There’s an EBGP session between the WAN edge routers (sometimes called border leaf switches)

- Every BGP session carries IPv4 (underlay) and EVPN (overlay) routes.

In that design, the WAN edge routers have to support EVPN (at least in the control plane) and carry all EVPN routes for both fabrics. Today, we’ll change the design to use simpler WAN edge routers that support only IP forwarding.

EVPN Designs: Multi-Pod Fabrics

In EVPN Designs: Layer-3 Inter-AS Option A, I described the simplest multi-site design in which the WAN edge routers exchange IP routes in individual VRFs, resulting in two isolated layer-2 fabrics connected with a layer-3 link.

Today, let’s explore a design that will excite the True Believers in end-to-end layer-2 networks: two EVPN fabrics connected with an EBGP session to form a unified, larger EVPN fabric. We’ll use the same “physical” topology as the previous example; the only modification is that the WA-WB link is now part of the underlay IP network.

… updated on Sunday, September 28, 2025 11:23 +0200

EVPN Designs: Layer-3 Inter-AS Option A

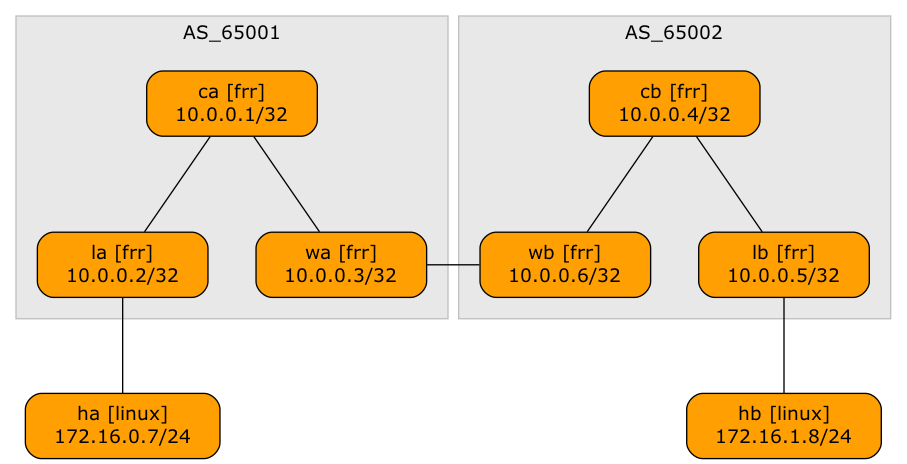

A netlab user wanted to explore a multi-site design where every site runs an independent EVPN fabric, and the inter-site link is either a layer-2 or a layer-3 interconnect (DCI). Let’s start with the easiest scenario: a layer-3 DCI with a separate (virtual) link for every tenant (in the MPLS/VPN world, we’d call that Inter-AS Option A).

Lab topology

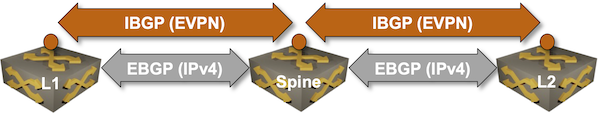

EVPN Designs: EVPN IBGP over IPv4 EBGP

We’ll conclude the EVPN designs saga with the “most creative” design promoted by some networking vendors: running an IBGP session (carrying EVPN address family) between loopbacks advertised with EBGP IPv4 address family.

Oversimplified IBGP-over-EBGP design

There’s just a tiny gotcha in the above “Works Best in PowerPoint” diagram. IBGP assumes the BGP neighbors are in the same autonomous system, while EBGP assumes they are in different autonomous systems. The usual way out of that “OMG, I painted myself into a corner” situation is to use BGP local AS functionality on the underlay EBGP session:
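
The same-AS versus different-AS check, and the effect of a local AS override on it, can be modeled with a small Python sketch. This is a simplified model, not any vendor's implementation: the `Speaker` class, the `session_type` helper, and the AS numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Speaker:
    """One end of a BGP session (hypothetical, simplified model)."""
    name: str
    bgp_as: int                      # the router's real AS number
    local_as: Optional[int] = None   # AS presented on this session, if overridden

    def presented_as(self) -> int:
        return self.local_as if self.local_as is not None else self.bgp_as


def session_type(a: Speaker, b: Speaker) -> str:
    """A session is IBGP when both ends present the same AS, EBGP otherwise."""
    return "ibgp" if a.presented_as() == b.presented_as() else "ebgp"


# Overlay session between loopbacks: both routers use their real AS
overlay = session_type(Speaker("leaf", 65000), Speaker("spine", 65000))

# Underlay session on the physical link: each end presents a different local AS
underlay = session_type(
    Speaker("leaf", 65000, local_as=65101),
    Speaker("spine", 65000, local_as=65100),
)
print(overlay, underlay)
```

Both routers keep the same real AS number, so the overlay session between them stays IBGP, while the local AS override makes the underlay session look like EBGP.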

EVPN Designs: EVPN EBGP over IPv4 EBGP

In the previous blog posts, we explored three fundamental EVPN designs: we don’t need EVPN, IBGP EVPN AF over IGP-advertised loopbacks (the way EVPN was designed to be used) and EBGP-only EVPN (running the EVPN AF in parallel with the IPv4 AF).

Now we’re entering Wonderland: the somewhat unusual things vendors do to make their existing stuff work while also pretending to look cool. We’ll start with EBGP-over-EBGP, and to understand why someone would want to do something like that, we have to go back to the basics.

… updated on Thursday, October 10, 2024 18:04 +0200

EVPN Designs: EBGP Everywhere

In the previous blog posts, we explored the simplest possible IBGP-based EVPN design and made it scalable with BGP route reflectors.

Now, imagine someone persuaded you that EBGP is better than any IGP (OSPF or IS-IS) when building a data center fabric. You’re running EBGP sessions between the leaf- and the spine switches and exchanging IPv4 and IPv6 prefixes over those EBGP sessions. Can you use the same EBGP sessions for EVPN?

TL&DR: It depends™.

EVPN Designs: Scaling IBGP with Route Reflectors

In the previous blog posts, we explored the simplest possible IBGP-based EVPN design and tried to figure out whether BGP route reflectors do more harm than good. Ignoring that tiny detail for the moment, let’s see how we could add route reflectors to our leaf-and-spine fabric.

As before, this is the fabric we’re working with:

Response: The Usability of VXLAN

Wes made an interesting comment to the Migrating a Data Center Fabric to VXLAN blog post:

The benefit of VXLAN is mostly scalability, so if your enterprise network is not scaling… just don’t. The migration path from VLANs is to just keep using VLANs. The (vendor-driven) networking industry has a huge blind spot about this.

Paraphrasing Dinesh Dutt’s famous Autocon1 remark: I couldn’t disagree with you more.

Building Layer-3-Only EVPN Lab

A few weeks ago, Roman Dodin mentioned layer-3-only EVPNs: a layer-3 VPN design with no stretched VLANs in which EVPN is used to transport VRF IP prefixes.

The reality is a bit muddier (in the VXLAN world) as we still need transit VLANs and router MAC addresses; the best way to explore what’s going on behind the scenes is to build a simple lab.

Migrating a Data Center Fabric to VXLAN

Darko Petrovic made an excellent remark on one of my LinkedIn posts:

The majority of the networks running now in the Enterprise are on traditional VLANs, and the migration paths are limited. Really limited. How will a business transition from traditional to whatever is next?

The only sane choice I found so far in the data center environment (and I know it has been embraced by many organizations facing that conundrum) is to build a parallel fabric (preferably when the organization is doing a server refresh) and connect the new fabric with the old one with a layer-3 link (in the ideal world) or an MLAG link bundle.

BGP, EVPN, VXLAN, or SRv6?

Daniel Dib asked an interesting question on LinkedIn when considering an RT5-only EVPN design:

I’m curious what EVPN provides if all you need is L3. For example, you could run pure L3 BGP fabric if you don’t need VRFs or a limited amount of them. If many VRFs are needed, there is MPLS/VPN, SR-MPLS, and SRv6.

I received a similar question numerous times in my previous life as a consultant. It’s usually caused by vendor marketing polluting PowerPoint slide decks with acronyms without explaining the fundamentals. Let’s fix that.