Blog Posts in September 2010

Introduction to 802.1Qaz (Enhanced Transmission Selection – ETS)

Enhanced Transmission Selection (ETS) is the second part of the Data Center Bridging puzzle (I’ve already described Priority Flow Control). It specifies two different technologies:

- Queuing mechanisms in bridges

- Data Center Bridging eXchange protocol: a Control/Negotiation protocol that allows bridges and hosts to negotiate QoS parameters in a bridged network.

Although some bridges from some vendors supported numerous QoS mechanisms in the past, 802.1Qaz is the first attempt to standardize a richer set of QoS behaviors than the strict priority queuing defined in 802.1p.
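To see why anything beyond strict priority queuing matters, here is a minimal sketch that compares strict priority with percentage-based bandwidth sharing on a congested link. It is a toy model, not the scheduler specified in 802.1Qaz: the function names, the traffic class names and the share values are all made up for illustration.

```python
def strict_priority(demand, link):
    """Serve classes in dict order (highest priority first)."""
    grants = {}
    remaining = float(link)
    for name, wanted in demand.items():
        grants[name] = min(wanted, remaining)
        remaining -= grants[name]
    return grants


def bandwidth_sharing(demand, shares, link):
    """Split the link by configured shares (hypothetical percentages);
    bandwidth a class does not use is re-offered to the others."""
    grants = {name: 0.0 for name in demand}
    active = set(demand)
    remaining = float(link)
    while active and remaining > 1e-9:
        total = sum(shares[n] for n in active)
        satisfied = set()
        spent = 0.0
        for n in active:
            offer = remaining * shares[n] / total
            take = min(offer, demand[n] - grants[n])
            grants[n] += take
            spent += take
            if demand[n] - grants[n] <= 1e-9:
                satisfied.add(n)
        remaining -= spent
        if not satisfied:
            break  # everyone left took a full offer; the link is used up
        active -= satisfied
    return grants


# Offered load in Gbps on a 10 Gbps link (illustrative numbers only)
demand = {"storage": 8, "lan": 6, "mgmt": 1}
shares = {"storage": 50, "lan": 40, "mgmt": 10}
print(strict_priority(demand, 10))
print(bandwidth_sharing(demand, shares, 10))
```

With strict priority the lowest class is starved as soon as the higher classes fill the link; with shares, every class gets its configured slice and can borrow whatever the others leave unused.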

FCoE Quote-of-the-Day

“Use FC where you feel you need to, use Ethernet (NFS, CIFS, iSCSI) everywhere else -- and save money and effort in the process”

Etherealmind and I have been singing this song for quite some time (probably upsetting a few people working for my favorite vendor), but this time it comes straight from EMC’s CTO. And he didn’t even mention FCoE in his list of storage protocols.

What exactly is a Nexus 4000?

Someone mentioned a while ago in a comment to one of my blog posts that the Nexus 4000 switch already supports multihop FCoE. Now that we know what multihop FCoE really is, let’s see how Nexus 4000 fits into the picture.

The Cisco Nexus 4000 Series Design Guide starts with a confusing set of claims:

- The Cisco Nexus 4000 Series Switches provide the Fibre Channel Forwarder (FCF) function.

- Nexus 4000 is an FCoE Initialization Protocol (FIP) snooping bridge.

ATAoE: response from Coraid

A few days after writing my ATAoE post I got a very nice e-mail from Sam Hopkins from Coraid responding to every single point I raised in my post. I have to admit I missed the tag field in the ATAoE packets, which does allow parallel requests between a server and a storage array, solving some of the sequencing/fragmentation issues. I’m still not convinced, but here is the whole e-mail (I only did some slight formatting) with no further comments from my side.

DMVPN: Non-Unique NHRP Registrations

During my last DMVPN webinar, one of the students mentioned the need for non-unique NHRP registrations in environments where the public IP address of a DMVPN spoke site changes due to DHCP lease expiration or PPPoE session termination. Finally I found some time to recreate the scenario in my DMVPN lab; here are the results.
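The underlying problem can be sketched with a toy model of the hub's NHRP cache. This is a simplification under stated assumptions: the class and method names are hypothetical, the addresses are documentation examples, and hold-time expiration is not modeled at all. It only shows why a registration flagged as unique gets in the way when the spoke comes back with a new public address.

```python
class NhrpCache:
    """Toy hub-side cache: tunnel IP -> (public/NBMA IP, unique flag)."""

    def __init__(self):
        self.entries = {}

    def register(self, tunnel_ip, nbma_ip, unique=True):
        existing = self.entries.get(tunnel_ip)
        if existing is not None:
            old_nbma, old_unique = existing
            if old_unique and old_nbma != nbma_ip:
                # A unique mapping cannot be overwritten with a
                # different public address while it is still cached.
                return False
        self.entries[tunnel_ip] = (nbma_ip, unique)
        return True


hub = NhrpCache()
print(hub.register("10.0.0.2", "192.0.2.10"))                 # first registration
print(hub.register("10.0.0.2", "192.0.2.99"))                 # new DHCP address, rejected

hub = NhrpCache()
print(hub.register("10.0.0.2", "192.0.2.10", unique=False))
print(hub.register("10.0.0.2", "192.0.2.99", unique=False))   # accepted, mapping updated
```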

Hiding documentation ... will they never learn?

One of the best presentations we had last week during the Net Field Day 2010 was given by Doug Gourlay from Arista. Their products have numerous highly interesting features; Terry liked their use of TDR and I was particularly delighted by the VM Tracer and decided to write about it as soon as I find some time (read: today).

2012-09-29: To keep the record straight: a few months after I wrote this blog post, Arista made most of the EOS documentation available online (as of today, it's the latest version only, with no release notes).

Setting access lists with RADIUS

Chris sent me an interesting challenge a few days ago: he wanted to set inbound access lists on virtual access interfaces with RADIUS but somehow couldn’t get this feature to work.

Uncle Google quickly provided two documents on Cisco.com: an older one (explaining the IETF attributes, vendor-specific attributes and AV-pairs) and the most recent one (with more attributes and less useful information) covering every Cisco IOS software release up to 12.2 (yeah, it looks like the RADIUS attributes haven’t been touched in a long time). According to the documentation, attribute #11 as well as AV-pairs ip:inacl/ip:outacl and lcp:interface-config should work, but the access list did not appear in the interface configuration.

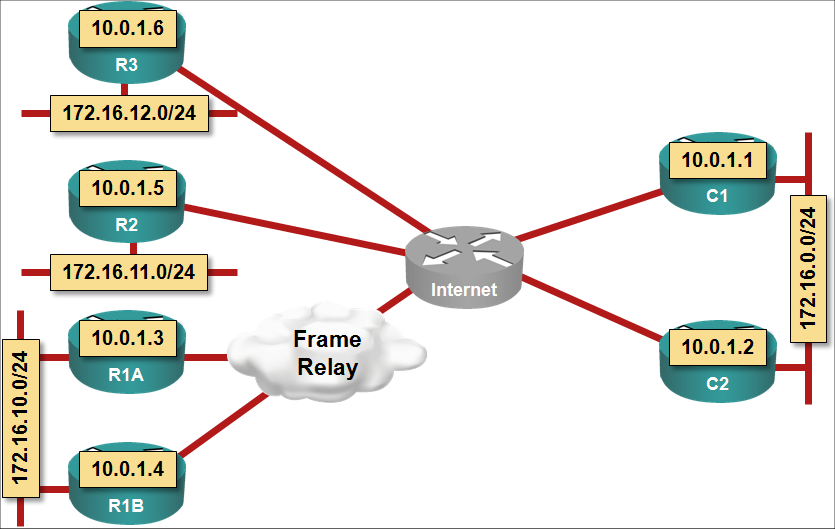

Advanced DMVPN Webinar: Router Configurations

I included 12 sets of complete router configurations with the DMVPN webinar covering every single design scenario described in the webinar. The seven-router lab topology emulates an enterprise DMVPN deployment with a redundant central site, a redundant remote site (with two routers) and two non-redundant remote sites (using two uplinks in a few scenarios). The seventh router emulates the Internet. The configurations can be used on any hardware (real or otherwise) supporting recent Cisco IOS software, allowing you to test and modify the design scenarios discussed in the webinar.

Multihop FCoE 102: VN_port proxy and FIP snooping

A few weeks ago I wrote about the multihop FCoE basics and the two fundamentally different ways an FCoE network could be designed: FCoE on every switch or FCoE on the edges with DCB-extended bridging in the middle.

There are two other configurations you’ll likely see in access parts of an FCoE network: FCoE VN_port proxying and FIP snooping.

Virtualization links (2010-09-19)

A medley of virtualization/access network links:

Access layer virtualization: VN-Tag and VEPA. A nice summary of what Cisco and HP are trying to sell us as VN-Tag and VEPA. The truth might be a bit different (definitely not Joe’s fault).

UCS Network Adapter Options Overview. A nice summary of three NIC architectures with a reasonable answer to the question: “why would I need virtual NICs in my server?” applicable to a generic Data Center environment.

Great Minds Think Alike – Cisco and VMware Agree On Sharing vs. Limiting. Another fantastic introductory QoS post. It looks like we have to repeat the “rate-limiting is bad, queuing is good” mantra ad nauseam hoping everyone eventually gets it. Bonus: people addicted to GUI might finally get it due to the illustrative highway analogies.

Speaking of illustrative, Brad Hedlund published a huge VMware 10GE QoS Design Deep Dive with Cisco UCS, Nexus article (no, I haven’t read it all yet ... my flight home from #TechFieldDay took only 11 hours).

Net Field Day 2010 – first impressions

I just spent three frantic days in San Jose with a dozen fellow bloggers attending the Net Field Day 2010 event masterfully organized by Stephen Foskett and Claire Chaplais (thank you both for a truly outstanding experience!). I can’t tell you how delighted I was when they selected me as one of the participants, more so as this event finally allowed me to get in touch with a number of people I was regularly meeting in vSpace. However, the whole point of the Net Field Day is to talk with the vendors and figure out what they’re doing, so let’s start with my first impressions.

The sorry state of the industry. My first impression: real networking innovation is gone.

ATAoE for Converged Data Center Networks? No Way

When I started writing about the storage industry and its attempts to tweak Ethernet to its needs, someone mentioned ATAoE. I read the ATAoE Wikipedia article and concluded that this dinky technology probably makes sense in a small home office… and then I stumbled across an article in The Register that claimed you could run a 9000-user Exchange server on ATAoE storage. It was time to deep-dive into this “interesting” L2+7 protocol. As expected, there are numerous good reasons you won’t hear about ATAoE in my Data Center 3.0 for Networking Engineers webinar.

Storage networking is like SNA

I’m writing this post while travelling to the Net Field Day 2010, the successor to the awesome Tech Field Day 2010 during which the FCoTR technology was launched. It’s thus only fair to extend that fantastic merger of two technologies we all love, look at the bigger picture and compare storage networking with SNA.

Notes:

- If you’re too young to understand what I’m talking about, don’t worry. Yes, you’ve missed all the beauties of RSRB/DLSw, CIP, APPN/APPI and the like, but major technology shifts happen every other decade or so, so you’ll be able to use FC/FCoE/iSCSI analogies the next time (and look like a dinosaur to the rookies). Make sure, though, that you read the summary.

- I’ll use present tense throughout the post when comparing both environments although SNA should be mostly history by now.

Long-distance vMotion and the traffic trombone

A few days ago I wrote about the impact of vMotion on a Data Center network and the traffic flow issues. Now let’s walk through what happens when you move a running virtual machine (VM) between two data centers (long-distance vMotion). Imagine we’re moving a web server that is:

- Serving a few Internet clients (with firewall/NAT and/or load balancing somewhere in the path);

- Getting most of its data from a database server sitting nearby;

- Reading and writing to a local disk.

The traffic flows are shown in the following diagram:

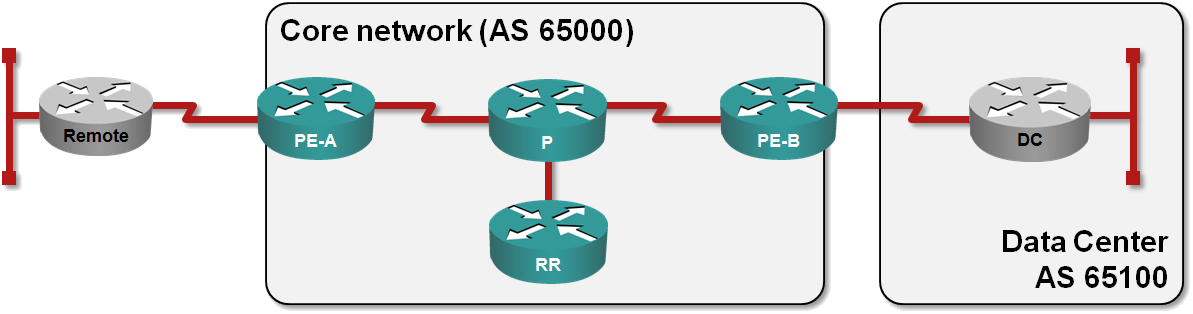

IPv6 SP Core webinar: router configurations

The attendees of my Building IPv6 Service Provider Core webinar get several sets of complete router configurations for a six-router lab that emulates a typical Service Provider network with a residential customer and an enterprise BGP customer. The configurations can be used on any hardware (real or otherwise) supporting recent Cisco IOS software, allowing you to test and modify the design scenarios discussed in the webinar.

vMotion: an elephant in the Data Center room

A while ago I had a chat with a fellow CCIE (working in a large enterprise network with a reasonably-sized Data Center) and briefly described vMotion to him. His response: “Interesting, I didn’t know that.” ... and “Ouch” a few seconds later as he realized what vMotion means from bandwidth consumption and routing perspectives. Before going into the painful details, let’s cover the basics.

Introduction to LISP

I’ve mentioned LISP several times during the last few months. It seems to be the only viable solution to the global IP routing table explosion. All other proposals require modifying layers above IP and while that’s where the problem should have been solved, expecting those layers to change any time soon is like waiting for Godot.

If you’re interested in LISP, start with the introduction to LISP I wrote for Search Telecom, continue with the LISP tutorial from NANOG 45 and (for the grand finale) listen to three Google Talks from Dino (almost four hours).

Introduction to 802.1Qbb (Priority-based Flow Control — PFC)

Yesterday I wrote that you don’t need DCB technologies to implement FCoE in your network. The FC-BB-5 standard is quite explicit (it also says that 802.1Qbb is the other option):

Lossless Ethernet may be implemented through the use of some Ethernet extensions. A possible Ethernet extension to implement Lossless Ethernet is the PAUSE mechanism defined in IEEE 802.3-2008.

The PAUSE mechanism (802.3x) gives you lossless behavior, but results in undesired side effects when you run LAN and SAN traffic across a converged Ethernet infrastructure.
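The difference is easy to show with a toy model: an 802.3x PAUSE frame stops everything on the link, while PFC pauses individual 802.1p priorities. The class below is a hypothetical sketch, and the choice of priority 3 for storage traffic is only an assumption made for the example.

```python
class Link:
    """Toy model of one direction of an Ethernet link with 8 priorities."""

    PRIORITIES = range(8)

    def __init__(self):
        self.paused = set()

    def pause_8023x(self):
        # 802.3x PAUSE has no notion of priority: all traffic stops.
        self.paused = set(self.PRIORITIES)

    def pause_pfc(self, priorities):
        # PFC pauses only the listed priorities.
        self.paused |= set(priorities)

    def can_send(self, priority):
        return priority not in self.paused


STORAGE, LAN = 3, 0  # assumed priority values, for illustration only

legacy = Link()
legacy.pause_8023x()
print(legacy.can_send(STORAGE), legacy.can_send(LAN))  # both blocked

pfc = Link()
pfc.pause_pfc([STORAGE])
print(pfc.can_send(STORAGE), pfc.can_send(LAN))        # only storage blocked
```

In the first case a congested storage target also stalls unrelated LAN traffic sharing the link, which is exactly the side effect you don't want on a converged infrastructure.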

DCB and TRILL have nothing in common

The emerging Ethernet bridging technologies have been hyped to an extent where the lines between them have completely blurred, resulting in statements like “we need DCB and TRILL for FCoE”. Actually, none of that is true, but let’s focus on DCB and TRILL first.

Virtual aggregation: a quick fix for FIB/TCAM overflow

During the Big Hot and Heavy Switches podcast, Dan Hughes complained that the Nexus 7000 switch cannot take the full BGP table. The reason is simple: its TCAM (FIB) has only 56,000 entries and the BGP table has almost 350,000 routes.

Nexus 7000 is a Data Center switch, so the TCAM size is not really a limitation (it would usually have a default route toward the WAN core), but the same problem is experienced by Service Providers all over the world – the TCAM/FIB size of their high-speed routers is limited.

… updated on Saturday, December 26, 2020 13:51 UTC

RIBs and FIBs (aka IP Routing Table and CEF Table)

Every now and then, I’m asked about the difference between the Routing Information Base (RIB), also known as the IP Routing Table, and the Forwarding Information Base (FIB), also known as the CEF table (on Cisco’s devices) or IP forwarding table.
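As a rough sketch of the distinction (heavily simplified, with made-up prefixes and next hops): the RIB keeps the candidate routes collected from routing protocols, while the FIB holds one forwarding entry per prefix and is what the longest-prefix-match lookup runs against. The administrative distance values are the usual Cisco IOS defaults; the data structures and function names are hypothetical.

```python
import ipaddress

# RIB: prefix -> list of (administrative distance, source, next hop)
rib = {
    "10.0.0.0/8": [(110, "ospf", "192.0.2.1"), (20, "ebgp", "192.0.2.2")],
    "10.1.0.0/16": [(110, "ospf", "192.0.2.3")],
    "0.0.0.0/0": [(1, "static", "192.0.2.254")],
}


def build_fib(rib):
    """Install the best candidate (lowest distance) for every prefix."""
    fib = {}
    for prefix, candidates in rib.items():
        best = min(candidates, key=lambda route: route[0])
        fib[ipaddress.ip_network(prefix)] = best[2]
    return fib


def lookup(fib, address):
    """Longest-prefix match, done with a linear scan for clarity."""
    addr = ipaddress.ip_address(address)
    matches = [net for net in fib if addr in net]
    if not matches:
        return None
    return fib[max(matches, key=lambda net: net.prefixlen)]


fib = build_fib(rib)
for destination in ("10.1.2.3", "10.2.0.1", "198.51.100.7"):
    print(destination, "->", lookup(fib, destination))
```

A real FIB also resolves recursive next hops and outgoing interfaces and uses a lookup structure far faster than a linear scan; none of that is modeled here.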

Let’s start with an overview picture (which does tell you more than the next thousand words I’ll write):

The IPv6 “experts” strike again

IT World Canada has recently published an interesting “Disband the ITU's IPv6 Group, says expert” article. I can’t agree more with the title or the first message of the article: there is no reason for the IPv6 ITU group to exist. However, as my long-time readers know, that’s old news ... and the article is unfortunately so full of technical misinformation and myths that I hardly know where to begin. Trying to be constructive, let’s start with the points I agree with.

IPv6 was designed to meet the operational needs that existed 20 years ago. Absolutely true. See my IPv6 myths for more details.

ITU-T has spun up two groups that are needlessly consuming international institutional resources. Absolutely in agreement (but still old news). I also deeply agree with all the subsequent remarks about ITU-T and needless politics (not to mention the dire need of most of ITU-T to find some reason to continue existing). That part of the article should become required reading for any standardization body.

And now for (some of) the points I disagree with:

2019-09-01: Stumbled upon this old blog post and fixed the "IAB adoption of CLNP" part. Would love to know more about what was going on, but couldn't find any details apart from a few vague mentions.