Category: design

IBGP Is the Better EBGP

Whenever I was explaining how one could build EBGP-only data center fabrics, someone would inevitably ask, “But could you do that with IBGP?”

TL&DR: Of course, but that does not mean you should.

Anyway, leaving behind the land of sane designs, let’s trot down the rabbit trail of IBGP-only networks.

Comparing IGP and BGP Data Center Convergence

A Thought Leader1 recently published a LinkedIn article comparing IGP and BGP convergence in data center fabrics2. In it, they3 claimed that:

iBGP designs would require route reflectors and additional processing, which could result in slightly slower convergence.

Let’s see whether that claim makes any sense.

TL&DR: No. If you’re building a simple leaf-and-spine fabric, the choice of the routing protocol does not matter (but you already knew that if you read this blog).

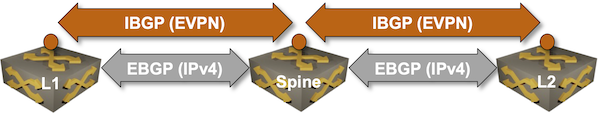

EVPN Designs: EVPN IBGP over IPv4 EBGP

We’ll conclude the EVPN designs saga with the “most creative” design promoted by some networking vendors: running an IBGP session (carrying EVPN address family) between loopbacks advertised with EBGP IPv4 address family.

Oversimplified IBGP-over-EBGP design

There’s just a tiny gotcha in the above Works Best in PowerPoint diagram. IBGP assumes the BGP neighbors are in the same autonomous system while EBGP assumes they are in different autonomous systems. The usual way out of that OMG, I painted myself into a corner situation is to use BGP local AS functionality on the underlay EBGP session:

EVPN Designs: EVPN EBGP over IPv4 EBGP

In the previous blog posts, we explored three fundamental EVPN designs: we don’t need EVPN, IBGP EVPN AF over IGP-advertised loopbacks (the way EVPN was designed to be used) and EBGP-only EVPN (running the EVPN AF in parallel with the IPv4 AF).

Now we’re entering Wonderland: the somewhat unusual1 things vendors do to make their existing stuff work while also pretending to look cool2. We’ll start with EBGP-over-EBGP, and to understand why someone would want to do something like that, we have to go back to the basics.

… updated on Thursday, October 10, 2024 18:04 +0200

EVPN Designs: EBGP Everywhere

In the previous blog posts, we explored the simplest possible IBGP-based EVPN design and made it scalable with BGP route reflectors.

Now, imagine someone persuaded you that EBGP is better than any IGP (OSPF or IS-IS) when building a data center fabric. You’re running EBGP sessions between the leaf- and the spine switches and exchanging IPv4 and IPv6 prefixes over those EBGP sessions. Can you use the same EBGP sessions for EVPN?

TL&DR: It depends™.

One-Arm Hub-and-Spoke VPN with MPLS/VPN

All our previous designs of the hub-and-spoke VPN (single PE, EVPN) used two VRFs for the hub device (ingress VRF and egress VRF). Is it possible to build a one-arm hub-and-spoke VPN where the hub device exchanges traffic with the PE router over a single link?

TL&DR: Yes, but only on some devices (for example, Cisco IOS or FRRouting) when using MPLS transport.

Here’s a high-level diagram of what we’d like to achieve:

EVPN Hub-and-Spoke Layer-3 VPN

Now that we figured out how to implement a hub-and-spoke VPN design on a single PE-router, let’s do the same thing with EVPN. It turns out to be trivial:

- We’ll split the single PE router into three PE devices (pe_a, pe_b, and pe_h)

- We’ll add a core router (p) and connect it with all three PE devices.

As we want to use EVPN and have a larger core network, we’ll also have to enable VLANs, VXLAN, BGP, and OSPF on the PE devices.

This is the topology of our expanded lab:

Hub-and-Spoke VPN on a Single PE-Router

Yesterday’s blog post discussed the traffic flow and the routing information flow in a hub-and-spoke VPN design (a design in which all traffic between spokes flows through the hub site). It’s time to implement and test it, starting with the simplest possible scenario: a single PE router using inter-VRF route leaking to connect the VRFs.

Hub-and-Spoke VPN Topology

Hub-and-spoke topology is by far the most complex topology I’ve ever encountered in the MPLS/VPN (and now EVPN) world. It’s used when you want to push all the traffic between sites attached to a VPN (spokes) through a central site (hub), for example, when using a central firewall.

You get the following diagram when you model the traffic flow requirements with VRFs. The forward traffic uses light yellow arrows, and the return traffic uses dark orange ones.

EVPN Designs: Scaling IBGP with Route Reflectors

In the previous blog posts, we explored the simplest possible IBGP-based EVPN design and tried to figure out whether BGP route reflectors do more harm than good. Ignoring that tiny detail for the moment, let’s see how we could add route reflectors to our leaf-and-spine fabric.

As before, this is the fabric we’re working with:

Migrating a Data Center Fabric to VXLAN

Darko Petrovic made an excellent remark on one of my LinkedIn posts:

The majority of the networks running now in the Enterprise are on traditional VLANs, and the migration paths are limited. Really limited. How will a business transition from traditional to whatever is next?

The only sane choice I found so far in the data center environment (and I know it has been embraced by many organizations facing that conundrum) is to build a parallel fabric (preferably when the organization is doing a server refresh) and connect the new fabric with the old one with a layer-3 link (in the ideal world) or an MLAG link bundle.

EVPN Designs: IBGP Full Mesh Between Leaf Switches

In the previous blog post in the EVPN Designs series, we explored the simplest possible VXLAN-based fabric design: static ingress replication without any L2VPN control plane. This time, we’ll add the simplest possible EVPN control plane: a full mesh of IBGP sessions between the leaf switches.

Repost: Think About the 99% of the Users

Daniel left a very relevant comment on my Data Center Fabric Designs: Size Matters blog post, describing how everyone rushes to sell the newest gizmos and technologies to the unsuspecting (and sometimes too-awed) users1:

Absolutely right. I’m working at an MSP, and we do a lot of project work for enterprises with between 500 and 2000 people. That means the IT department is not that big; it’s usually just a cost center for them.

EVPN Designs: VXLAN Leaf-and-Spine Fabric

In this series of blog posts, we’ll explore numerous routing protocol designs that can be used to implement EVPN-with-VXLAN L2VPNs in a leaf-and-spine data center fabric. Every design will come with a companion netlab topology you can use to create a lab and explore the behavior of leaf- and spine switches.

Our leaf-and-spine fabric will have four leaves and two spines (but feel free to adjust the lab topology fabric parameters to build larger fabrics). The fabric will provide layer-2 connectivity to orange and blue VLANs. Two hosts will be connected to each VLAN to check end-to-end connectivity.

Repost: EBGP-Mostly Service Provider Network

Daryll Swer left a long comment describing how he designed a Service Provider network running in numerous private autonomous systems. While I might not agree with everything he wrote, it’s an interesting idea and conceptually pretty similar to what we did 25 years ago (IBGP without IGP, running across physical interfaces, with every router being a route-reflector client of every other router), or how some very large networks were using BGP confederations.

Just remember (as someone from Cisco TAC told me in those days) that “you might be the only one in the world doing it and might hit bugs no one has seen before.”