… updated on Thursday, October 10, 2024 18:04 +0200

EVPN Designs: EBGP Everywhere

In the previous blog posts, we explored the simplest possible IBGP-based EVPN design and made it scalable with BGP route reflectors.

Now, imagine someone persuaded you that EBGP is better than any IGP (OSPF or IS-IS) when building a data center fabric. You’re running EBGP sessions between the leaf- and the spine switches and exchanging IPv4 and IPv6 prefixes over those EBGP sessions. Can you use the same EBGP sessions for EVPN?

TL&DR: It depends™.

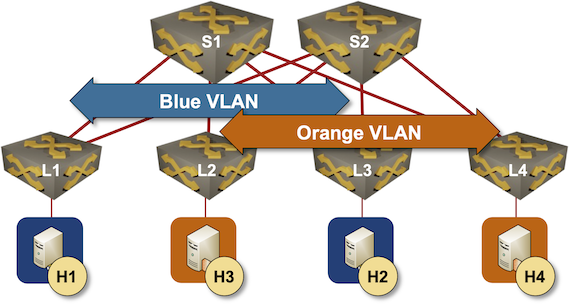

We’ll yet again work with a simple leaf-and-spine fabric:

Leaf-and-spine fabric with two VLANs

However, this time:

- We’ll be running EBGP and no IGP between leaf- and spine switches.

- We’ll try to run EBGP sessions over IPv6 link-local addresses (more details) to reduce the overhead of managing IPv4 subnets.

- The EBGP sessions will transport IPv4 and EVPN address families.

What could possibly go wrong? Starting with the EBGP-as-better-IGP idea:

- OSPF or IS-IS configuration is trivial compared to EBGP configuration unless your fabric has hundreds of switches, forcing you to deploy OSPF areas or multi-level IS-IS1.

- BGP needs way more configuration state than OSPF or IS-IS. You must keep track of BGP neighbors, their IP addresses (unless you can use IPv6 LLA EBGP sessions), and their AS numbers2. In OSPF, you can use a one-liner: network 0.0.0.0/0 area 03

- You could simplify BGP configuration and use the same AS number on all spine switches (recommended to prevent path hunting) and another AS number on all leaf switches. Still, then you’d have to manipulate AS path, turn off AS-path-based loop prevention checks, or use default routing4.

The only control-plane stack that makes EBGP as easy to deploy as IGP is still FRRouting. Multiple vendors support IPv6 LLA EBGP sessions, but most of them expect you to navigate the unexpected configuration requirements like we have to define a peer group for interface EBGP sessions.

Now for the EVPN address family considerations:

- The EVPN next hop (VTEP) should not change across the data center fabric; you wouldn’t want intermediate nodes to do VXLAN-to-VXLAN bridging5. The spine switches, thus, should not change the BGP next hop on EBGP sessions, but that’s not how EBGP works. Some vendors tweak the default EBGP behavior in the EVPN address family and leave the BGP next-hop unchanged. Others require a configuration nerd knob.

- EVPN has an excellent auto RT functionality that automatically sets the EVPN route targets based on the device’s BGP AS number and VLAN ID. That does not work across multiple autonomous systems unless the vendor (like Cumulus Linux) decides it’s OK to ignore the AS number part of EVPN route targets6

Finally, the elephant in the room. Some vendors seem to have suboptimal EVPN implementations that struggle with EVPN churn or a lost EVPN BGP session. Those vendors will invent all sorts of reasons why it makes perfect sense to run EVPN IBGP sessions between endpoints advertised with underlay IPv4 EBGP, or (even better) why it’s best to run EVPN EBGP sessions between loopbacks advertised through a different set of IPv4 EBGP sessions.

We’ll leave those discussions for another time and explore the more straightforward scenario of running the IPv4 and EVPN address families on the same EBGP sessions. We’ll use a lab setup similar to the IBGP Full Mesh Between Leaf Switches; read that blog post as well as the Creating the Lab Environment section of the first blog post in this series to get more details.

Leaf-and-Spine EBGP-Everywhere Lab Topology

This is the netlab lab topology description we’ll use to set up IPv4+EVPN EBGP sessions between leaf and spine switches.

defaults.device: eosprovider: clabaddressing.p2p.ipv4: Trueevpn.as: 65000evpn.session: [ ebgp ]bgp.community.ebgp: [ standard, extended ]bgp.sessions.ipv4: [ ebgp ]plugin: [ fabric ]fabric:spines: 2leafs: 4spine.bgp.as: 65100leaf.bgp.as: '{ 65000 + count }'groups:_auto_create: Trueleafs:module: [ bgp, vlan, vxlan, evpn ]spines:module: [ bgp, evpn ]hosts:members: [ H1, H2, H3, H4 ]device: linuxvlan.mode: bridgevlans:orange:links: [ H1-L1, H2-L3 ]blue:links: [ H3-L2, H4-L4 ]tools:graphite:

The VXLAN Leaf-and-Spine Fabric blog post explains most of the topology file. We had to make these changes to implement the EBGP-everywhere scenario:

- Line 4: We’re using unnumbered point-to-point links (remove this line if your device does not support interface EBGP sessions)

- Line 5: We need a global AS number to set the route targets for EVPN layer-2 segments7

- Line 6: EVPN has to be enabled on EBGP sessions

- Line 7: Switches must send extended BGP communities on EBGP sessions

- Line 8: We don’t need an IBGP session between S1 and S2 (by default, netlab tries to build IBGP sessions between routers in the same autonomous system). The fabric has only EBGP sessions.

- Line 14: The BGP AS number on the spine switches is set to 65100

- Line 16: The BGP AS number on the individual leaf switches is set to 65000 + switch ID (more details, example)

- Line 20: Leaf switches are running VLANs, VXLAN, BGP, and EVPN

- Line 22: Spine switches are running BGP and EVPN

Assuming you already did the previous homework, it’s time to start the lab with the netlab up command. You can also start the lab in a GitHub Codespace (the directory is EVPN/ebgp); you’ll still have to import the Arista cEOS container, though.

Behind the Scenes

This is the FRRouting BGP configuration of L1. As you can see, it’s as concise as it can get. The spine configuration is almost identical; it has more EBGP neighbors but no additional nerd knobs.

router bgp 65001bgp router-id 10.0.0.1no bgp default ipv4-unicastbgp bestpath as-path multipath-relaxneighbor eth1 interface remote-as 65100neighbor eth1 description S1neighbor eth2 interface remote-as 65100neighbor eth2 description S2!address-family ipv4 unicastnetwork 10.0.0.1/32neighbor eth1 activateneighbor eth2 activateexit-address-family!address-family l2vpn evpnneighbor eth1 activateneighbor eth2 activateadvertise-all-vnivni 101000rd 10.0.0.1:1000route-target import 65000:1000route-target export 65000:1000exit-vniadvertise-svi-ipadvertise ipv4 unicastexit-address-familyexit

As this is the first FRRouting configuration in this series, let’s walk through it:

- Lines 2-4: Defaults

- Lines 5-8: Configuring interface EBGP neighbors. We could use neighbor remote-as external in a manually-crafted configuration.

- Lines 10-14: We decided to configure an explicit IPv4 address family, so we must activate the EBGP neighbors.

- Lines 17-18: We must activate the EVPN address family for the EBGP neighbors.

- Lines 20-24: Defining a layer-2 VXLAN segment. Route distinguisher and route targets have static values.

- Line 25: The router should advertise its IP address in an EVPN update (not relevant for this lab)

- Line 26: The router should redistribute IPv4 unicast prefixes into EVPN type-5 routing updates (irrelevant for this lab).

And this is the functionally equivalent L1 configuration for Arista EOS. The spine configuration is almost identical; Arista EOS requires no extra nerd knobs for EBGP EVPN sessions.

router bgp 65001router-id 10.0.0.1no bgp default ipv4-unicastbgp advertise-inactiveneighbor ebgp_intf_Ethernet1 peer groupneighbor ebgp_intf_Ethernet1 remote-as 65100neighbor ebgp_intf_Ethernet1 description S1neighbor ebgp_intf_Ethernet1 send-community standard extended largeneighbor ebgp_intf_Ethernet2 peer groupneighbor ebgp_intf_Ethernet2 remote-as 65100neighbor ebgp_intf_Ethernet2 description S2neighbor ebgp_intf_Ethernet2 send-community standard extended largeneighbor interface Et1 peer-group ebgp_intf_Ethernet1neighbor interface Et2 peer-group ebgp_intf_Ethernet2!vlan 1000rd 10.0.0.1:1000route-target import 65000:1000route-target export 65000:1000redistribute learned!address-family evpnneighbor ebgp_intf_Ethernet1 activateneighbor ebgp_intf_Ethernet2 activate!address-family ipv4neighbor ebgp_intf_Ethernet1 activateneighbor ebgp_intf_Ethernet1 next-hop address-family ipv6 originateneighbor ebgp_intf_Ethernet2 activateneighbor ebgp_intf_Ethernet2 next-hop address-family ipv6 originatenetwork 10.0.0.1/32

Let’s walk through the extra configuration we had to make:

- Lines 5-12: We must create peer groups for interface EBGP sessions. A single peer group would be good enough; netlab creates a different peer group for every EBGP peer to be able to apply per-peer routing policies.

- Lines 13-14: We create interface peers

- Lines 28,30: IPv4 address family will use IPv6 next hops (interface EBGP sessions use RFC 8950).

The Arista EOS configuration is a bit more verbose than FRRouting, but not too bad.

You can view complete configurations for all switches on GitHub.

Does It Work?

Of course, it does, or I would be fixing configuration templates instead of writing a blog post. The EVPN updates sent from L1 to S1/S2 are forwarded almost intact8 to the other leaf switches.

The following printout shows L2’s view of one of the EVPN routes advertised from L1. Note that we have two identical EVPN routes in the BGP table; L1 is advertising its routes to S1 and S2, and they forward them to L2.

BGP routing table information for VRF defaultRouter identifier 10.0.0.2, local AS number 65002BGP routing table entry for mac-ip aac1.ab83.733e, Route Distinguisher: 10.0.0.1:1000Paths: 2 available65100 6500110.0.0.1 from fe80::50dc:caff:fefe:602%Et2 (10.0.0.6)Origin IGP, metric -, localpref 100, weight 0, tag 0, valid, external, ECMP head, ECMP, best, ECMP contributorExtended Community: Route-Target-AS:65000:1000 TunnelEncap:tunnelTypeVxlanVNI: 101000 ESI: 0000:0000:0000:0000:000065100 6500110.0.0.1 from fe80::50dc:caff:fefe:502%Et1 (10.0.0.5)Origin IGP, metric -, localpref 100, weight 0, tag 0, valid, external, ECMP, ECMP contributorExtended Community: Route-Target-AS:65000:1000 TunnelEncap:tunnelTypeVxlanVNI: 101000 ESI: 0000:0000:0000:0000:0000

The only significant change from the IBGP case is the BGP next-hop information (lines 7 and 12):

- The next hop is the L1 VTEP (10.0.0.1)

- The router advertising the route has an IPv6 link-local address

- The router ID of the router advertising the router is the loopback interface of S1/S2.

Was It Worth the Effort?

TL&DR: Meh. The only “benefit” claimed by people who like this design is a single routing protocol.

I would use this design with a device using the FRRouting control plane. I might use it with other devices if the vendor rep can point me to a relevant “validated design” and the configuration is not too cumbersome (Arista EOS is OK).

Caveats9? Extra nerd knobs? Run away and use IBGP-over-IGP.

Next: EVPN EBGP over IPv4 EBGP Continue

Revision History

- 2024-10-10

- Removed the unnecessary IBGP session between S1 and S2 based on the feedback by AW.

-

In which case, I hope you’re reading this blog post solely for its entertainment value ;) ↩︎

-

Unless you’re using neighbor remote-as external FRRouting configuration command ↩︎

-

Or whatever your loopback prefix range is ↩︎

-

Please don’t unless you want a fun troubleshooting exercise after a leaf-to-spine link failure. The details are left as an exercise for the reader. ↩︎

-

Due to hardware limitations, most of them wouldn’t be able to do that anyway. ↩︎

-

Not always a good idea, but you already know there’s a tradeoff lurking wherever you look. ↩︎

-

netlab is not using automatic EVPN route targets or route distinguishers. ↩︎

-

Apart from a longer AS path ↩︎

-

Cisco Nexus OS documentation still claims that “In a VXLAN EVPN setup that has 2K VNI scale configuration, the control plane downtime may take more than 200 seconds. To avoid potential BGP flap, extend the graceful restart time to 300 seconds.” I’m unsure whether that would apply to an EBGP session restart due to a link flap, but it might explain why they’re talking about EVPN EBGP sessions between loopback interfaces. ↩︎

Lab is great! I'm confused by the iBGP peer between the spines. Is this just an artifact of config generation? I can't think of why it would be necessary or useful in this design but could be missing something.

The IBGP session is the side effect of how netlab sets up BGP sessions. It assumes there should be an IBGP session between routers in the same AS (which is usually correct).

Will fix the lab topology and the blog post. Thank you!

For ebgp only evpn, I assume frr and arista eos uses single ebgp process for overlay and underlay from your explanation. Do you ever encountered vendors that spawn different bgp process for underlay and overlay for ebgp only evpn that makes it a concern ?

Also for spine config for arista I assume the difference with ibgp overlay is just next-hop-unchanged, in your opinion is it better than other vendors like nxos or srlinux? Or the "nerd knob" that we need for this ebgp only fabric to work, is mostly on the leaf side?

Arista is one of those vendors that realized you SHOULD NOT change the BGP next hop on EBGP sessions BY DEFAULT. It does not need next-hop-unchanged (start the lab and check it out).

As for the rest: https://blog.ipspace.net/2021/11/multi-threaded-routing-daemons/

Hope this helps, Ivan