Resiliency of VM NIC firewalls

Dmitry Kalintsev left a great comment on my security paradigm changing post:

I have not yet seen redundant VNIC-level firewall implementations, which stopped me from using [...] them. One could argue that vSwitches are also non-redundant, but a vSwitch usually has to do stuff much less complex than what a firewall would, meaning chances of things going south are lower.

As always, things are not purely black-and-white and depend a lot on the product architecture and implementation.

VM NIC firewalls implemented in VMs

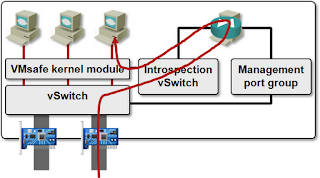

Products like vShield App/Zones implement the VM NIC firewall functionality in a separate VM running on the same host as the protected VM – all the traffic sent and received by the protected VM has to traverse a non-redundant firewall VM:

In vShield release 5 and later you can configure what happens when the firewall VM crashes – it’s either fail-open or fail-closed, but neither one is a good option. A vSphere HA cluster doesn’t react to firewall VM crashes (why should it?), leaving the VMs residing on the same host either unprotected or isolated from the network.
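The trade-off between the two failure modes can be sketched in a few lines of Python. This is a toy model and not vShield code: `FailMode`, `FirewallVM` and `forward` are hypothetical names made up for this illustration, and the "firewall" is reduced to a list of allowed destination ports.

```python
from enum import Enum


class FailMode(Enum):
    OPEN = "fail-open"      # forward everything while the firewall VM is down
    CLOSED = "fail-closed"  # drop everything while the firewall VM is down


class FirewallVM:
    """Toy stand-in for a per-host firewall VM (hypothetical, not a vShield API)."""

    def __init__(self, allowed_ports):
        self.allowed_ports = set(allowed_ports)
        self.alive = True

    def permits(self, dst_port):
        if not self.alive:
            raise RuntimeError("firewall VM is down")
        return dst_port in self.allowed_ports


def forward(firewall, dst_port, fail_mode):
    """Return True if a packet to dst_port reaches the protected VM."""
    try:
        return firewall.permits(dst_port)
    except RuntimeError:
        # The firewall VM crashed: the configured fail mode decides the outcome.
        return fail_mode is FailMode.OPEN


fw = FirewallVM(allowed_ports=[80, 443])
print(forward(fw, 22, FailMode.CLOSED))   # healthy firewall: SSH is blocked
fw.alive = False
print(forward(fw, 22, FailMode.OPEN))     # crashed, fail-open: SSH gets through
print(forward(fw, 443, FailMode.CLOSED))  # crashed, fail-closed: HTTPS is dropped too
```

With the firewall VM down, fail-open lets through traffic the policy would have blocked, while fail-closed drops traffic the policy would have allowed, which is exactly the unprotected-or-isolated choice described above.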

vShield Edge is a totally different beast – the latest release has high-availability features including stateful failover ... but then it’s a routed firewall, not a VM NIC firewall.

Cisco’s VSG is a totally different story – it has full high-availability with stateful failover.

VM NIC firewalls implemented in the hypervisor kernel

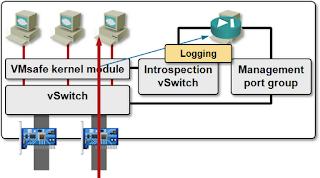

Although Juniper’s vGW uses the same API as VMware’s vShield App firewall, its implementation is totally different – all the firewalling functionality is implemented in a loadable vSphere kernel module. The control VM (still running on the same vSphere host) is used solely for configuration, monitoring and logging purposes, and you can have an HA pair of them (on each vSphere host) in mission-critical environments.

I can only guess what happens if the vGW kernel module crashes, but hopefully it brings down the whole vSphere host, in which case vSphere HA kicks in and restarts the VMs on other hosts in the same cluster.

Firewall impact on hypervisor host

Dmitry raised another valid point: “There's a non-insubstantial risk of firewall module consuming too much of the host resources, and either slowing down network for collocated VMs, or starving them of CPU (or both).”

Obviously that can always happen (although I would be more concerned about the explosion of connection tables), but physical firewalls fare no better (see also this comment). You have to choose your poison: do you want a single vSphere host to be hosed (DRS would probably save the day in VMware environments and spread the load across the cluster) or do you want your Internet-facing firewall to be hosed and bring down the whole data center? Obviously you can also decide not to have firewalls at all and rely solely on hardened hosts ;)

More information

The Virtual Firewalls webinar has a more detailed overview of virtual firewall solutions (including VM NIC firewalls) and descriptions of individual products for VMware, Hyper-V and Linux environments.

Advantages and drawbacks of virtual firewalls are also one of the topics of my Network Infrastructure for Cloud Computing workshop @ Interop Las Vegas, so make sure you drop by if you plan to attend Interop.

Thanks for the detailed response. :) I probably should have been a bit clearer in my first comment - I meant kernel module firewalls, rather than simply VNIC-level ones. In addition to Juniper's vGW, there is one from Checkpoint (Security Gateway Virtual Edition, which can be deployed either in a VM, which can't do VNIC-level filtering IIRC, or as a kernel module, which I'm almost certain can).

When talking about the host resource consumption, another clarification is probably in order: I'm not too fussed about VM-based firewalls, because you can explicitly control host resources available to VMs; however when it comes to kernel modules, I'm much less sure and comfortable. I'm also not quite sure what DRS can do if a kernel module gets "hot", starving the host.

Now, here's another consideration: if I understand it correctly, VNIC-level firewalls are deployed one or a redundant pair per host, and have to serve *all* VMs running on that host. On the other hand, "regular", non-VNIC firewalls can be deployed in multitude (one or more per customer, spread across different hosts, and potentially shuffled around by DRS), which in my eyes is a more scalable way of doing things, plus if one of them is hosed, it only affects one customer.

Hope this makes sense..

Thanks so much for the link!

Not true with Cisco's VSG at least.

If yes, it doesn't change the premise of my comment then. If not, could you please clarify?

-- Dmitri

VSG policies are then applied to port-profiles on the Nexus 1000V which are then applied to virtual machines (to a VMware admin this is just picking the network label in the drop-down to assign it to a particular network).

The actual traffic is switched locally by the hypervisor (the Nexus 1000V VEM module built into ESX to be specific), only the first packet of a new conversation is checked against the VSG, then a rule is basically cached on the local host and processing continues without involving VSG (until a policy change occurs on the VSG or the cached copy times out).
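The fast-path behavior described above could be modeled roughly as follows. This is a sketch of the general flow-caching idea, assuming a 5-tuple flow key, a single policy version counter and an idle timeout; `PolicyEngine` and `FlowCache` are hypothetical names and do not reflect actual Nexus 1000V or VSG internals.

```python
import time


class PolicyEngine:
    """Hypothetical stand-in for the central firewall that owns the rule set."""

    def __init__(self, allowed_ports):
        self.allowed_ports = set(allowed_ports)
        self.version = 1   # bumped on every policy change
        self.lookups = 0   # how many packets needed a full policy check

    def decide(self, flow):
        self.lookups += 1
        return flow[3] in self.allowed_ports  # flow[3] is the destination port

    def update(self, allowed_ports):
        self.allowed_ports = set(allowed_ports)
        self.version += 1


class FlowCache:
    """Per-host cache: only the first packet of a flow reaches the policy engine."""

    def __init__(self, engine, timeout=30.0, clock=time.monotonic):
        self.engine = engine
        self.timeout = timeout
        self.clock = clock
        self.entries = {}  # flow -> (verdict, policy version, last-seen time)

    def permit(self, flow):
        now = self.clock()
        entry = self.entries.get(flow)
        if entry is not None:
            verdict, version, last_seen = entry
            fresh = now - last_seen <= self.timeout
            if fresh and version == self.engine.version:
                self.entries[flow] = (verdict, version, now)
                return verdict  # fast path: no policy engine involved
        # First packet, expired entry, or policy change: ask the policy engine.
        verdict = self.engine.decide(flow)
        self.entries[flow] = (verdict, self.engine.version, now)
        return verdict


engine = PolicyEngine(allowed_ports=[443])
cache = FlowCache(engine)
flow = ("10.0.0.1", 40000, "10.0.0.2", 443, "tcp")
for _ in range(5):
    cache.permit(flow)
print(engine.lookups)                      # 1: only the first packet was checked
engine.update(allowed_ports=[80])
print(cache.permit(flow), engine.lookups)  # False 2: policy change forced a recheck
```

In this model the cached verdict is all the local host ever enforces for an established flow, so any check that needs to look at later packets would have to live outside the cache.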

VNMC (the centralized management console) can manage up to 128 VSGs (or 600 hosts).

I've had the VSG in production since August 2011 and it's been extremely solid for us. We've only encountered one significant issue that we're still forced to work around; overall it's been a great solution (and way better than private VLANs, etc.).

Thanks for the clarification. Looks like the availability of the VSG services depends on the N1KV kernel module. Also, I imagine that with only the first packet going through the VSG, more complex firewall tasks requiring packet reassembly, etc. are probably not possible.

Firewalls like Cisco ASA, Checkpoint, Netscreen & the virtual counterparts like Juniper vGW, vShield Edge & vShield App Distributed Firewall all do many checks in the connection tracking area, see some of the NSS & ICSA criteria around those checks.

Ivan - it would be interesting to do a blog around where ACLs stop and Firewalls begin and their intersection in the virtualization space....

You probably have something like this in mind: http://blog.ioshints.info/2013/03/the-spectrum-of-firewall-statefulness.html

If the above description of VSG is correct, then it's definitely a reflexive ACL firewall. Also, I don't think VEM does IP or TCP reassembly to check validity of TCP segments (one more argument for reflexive ACL classification).

On the other hand, it would be interesting to hear what vShield App does that's more than that. Anything you can share?

Thank you!

Ivan