Category: fabric

Mythbusting: NFV Data Center Fabric Buffering Requirements

Every now and then I stumble upon an article or a comment explaining how Network Function Virtualization (NFV) introduces new data center fabric buffering requirements. Here’s a recent example:

For Telco/carrier Cloud environments, where NFVs (which are much slower than hardware SGW) get used a lot, latency is higher with a lot of jitter due to the nature of software and the varying link speeds, so DC-level near-zero buffer is not applicable.

It seems to me we’re dealing with another myth. Starting with the basics:

Using Unequal-Cost Multipath to Cope with Leaf-and-Spine Fabric Failures

Scott submitted an interesting comment to my Does Unequal-Cost Multipath (UCMP) Make Sense blog post:

How about even Large CLOS networks with the same interface capacity, but accounting for things to fail; fabric cards, links or nodes in disaggregated units. You can either UCMP or drain large parts of your network to get the most out of ECMP.

Before I managed to write a reply (sometimes it takes months while an idea is simmering somewhere in my subconscious) Jeff Tantsura pointed me to an excellent article by Erico Vanini that describes the types of asymmetries you might encounter in a leaf-and-spine fabric: an ideal starting point for this discussion.

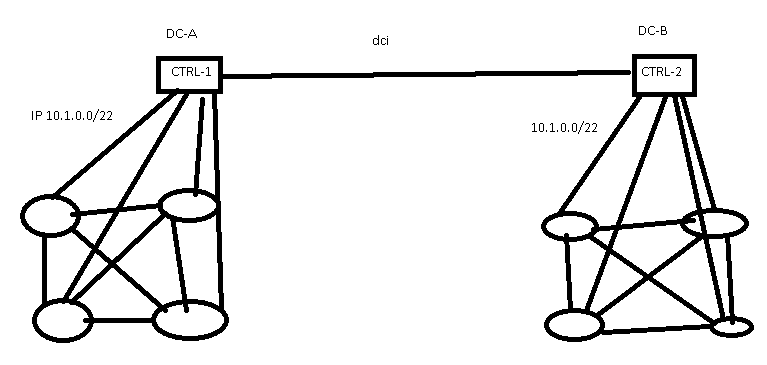
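To make the ECMP-versus-UCMP trade-off in Scott's comment concrete, here is a minimal sketch under invented assumptions: a leaf reaches a remote leaf through two spines, each path normally offers 200 Gbps, and a link failure cuts the path through the second spine to 100 Gbps. The spine names, capacities, and the `max_throughput` helper are hypothetical and come from neither the blog post nor the article it mentions.

```python
def max_throughput(path_capacity, weights):
    """Largest aggregate load (Gbps) that fits when traffic is split
    across paths in proportion to the given weights.

    A path with weight w carries load * w / sum(weights), so the load is
    capped by capacity * sum(weights) / w on the most constrained path.
    """
    total = sum(weights.values())
    return min(
        path_capacity[path] * total / weight
        for path, weight in weights.items()
        if weight > 0
    )


# Hypothetical post-failure capacities (Gbps) of the two spine paths.
capacity = {"spine1": 200, "spine2": 100}

ecmp = {"spine1": 1, "spine2": 1}   # equal split, ignores the failure
ucmp = {"spine1": 2, "spine2": 1}   # weights proportional to capacity

print(max_throughput(capacity, ecmp))  # 200.0
print(max_throughput(capacity, ucmp))  # 300.0
```

In this toy model the equal split is limited by the degraded path, while capacity-proportional weights use everything that is left. The alternative Scott mentions, draining the degraded spine, would leave only the 200 Gbps of the healthy path.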

Impact of Centralized Control Plane Partitioning

A long-time reader sent me a series of questions about the impact of WAN partitioning on an SDN-based network spanning multiple locations after watching the Architectures part of the Data Center Fabrics webinar. He focused on the specific case of a centralized control plane (read: an equivalent of a stackable switch) with a distributed controller cluster (read: a switch stack spread across multiple locations).

SDN controllers spread across multiple data centers

Worth Reading: Understanding Table Sizes on the Arista 7050QX-32

Arista published a blog post describing the details of forwarding table sizes on 7050QX-series switches. The description includes the base mode (fixed tables), unified forwarding tables and even the IPv6 LPM details, and dives deep into what happens when the switch runs out of forwarding table entries.

Too bad they’re describing an ancient Trident-2 ASIC (I last mentioned switches using it in the 2017 Data Center Fabrics update). Did the NDA expire on that one?

Redundant Server Connectivity in Layer-3-Only Fabrics (Part 2)

In June 2020 I published the first part of the Redundant Server Connectivity in Layer-3-Only Fabrics article, describing the target design and application-layer requirements.

During the summer I added the details of multi-subnet server and client connectivity and a few conclusions.

Redundant Server Connectivity in Layer-3-Only Fabrics

A long while ago I decided to write an article explaining how you could run VMware NSX on ESXi servers with redundant connections to two top-of-rack switches on top of a layer-3-only fabric (a fabric with IP subnets and VLANs limited to a single top-of-rack switch). Turns out that’s Mission Impossible, so I put the article on the back burner and slowly forgot about it.

Well, not exactly. Every now and then my subconsciousness would kick it up and I’d figure out yet-another reason why it’s REALLY hard to do it right. After a while, I decided to try again, and completely rewrote the article. The first part is already online, more details coming (hopefully) soon.

Worth Exploring: Arista EVPN-Based Automation Virtual Lab

David Varnum created a fantastic leaf-and-spine fabric of vEOS switches running with GNS3 and automated with Ansible playbooks.

Not only that - his blog post includes detailed setup instructions, and the corresponding GitHub repository contains all the source code you need to get it up and running.

BGP AS Numbers on MLAG Members

I got this question about the use of AS numbers on data center leaf switches participating in an MLAG cluster:

In the Leaf-and-Spine Fabric Architectures you made the recommendation to have the same AS number on all members of an MLAG cluster and run iBGP between them. In the Autonomous Systems and AS Numbers article you discuss the option of having different AS number per leaf. Which one should I use… and do I still need the EBGP peering between the leaf pair?

As always, there’s a bit of a gap between theory and practice ;), but let’s start with a leaf-and-spine fabric diagram illustrating both concepts:
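The two numbering options from the question can also be sketched in a few lines of Python. This is only an illustration: the leaf names, the starting AS number, and the `assign_asns` and `session_type` helpers are invented for this example, and the AS numbers are taken from the 16-bit private range (64512 to 65534).

```python
def session_type(asn_a, asn_b):
    """BGP sessions within one AS are iBGP; sessions between ASes are EBGP."""
    return "iBGP" if asn_a == asn_b else "EBGP"


def assign_asns(mlag_pairs, first_asn=65001, per_pair=True):
    """Assign AS numbers to leaf switches.

    per_pair=True  -> both members of an MLAG pair share one AS number
    per_pair=False -> every leaf gets its own AS number
    """
    asns = {}
    next_asn = first_asn
    for pair in mlag_pairs:
        for leaf in pair:
            asns[leaf] = next_asn
            if not per_pair:
                next_asn += 1   # a new AS for every leaf
        if per_pair:
            next_asn += 1       # a new AS for every MLAG pair
    return asns


# Hypothetical fabric with two MLAG pairs.
pairs = [("leaf1", "leaf2"), ("leaf3", "leaf4")]

shared = assign_asns(pairs, per_pair=True)
unique = assign_asns(pairs, per_pair=False)

# Session type on the peer link between leaf1 and leaf2 in each scheme.
print(session_type(shared["leaf1"], shared["leaf2"]))  # iBGP
print(session_type(unique["leaf1"], unique["leaf2"]))  # EBGP
```

The sketch only shows how the choice of numbering scheme determines the type of the session between the MLAG members; it says nothing about which option is preferable.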

What Data Center Switches Should I Buy with VMware NSX?

Another interesting question I got from an ipSpace.net subscriber:

Assuming we can simplify the physical network when using overlay virtual network solutions like VMware NSX, do we really need datacenter switches (example: Cisco Nexus instead of Catalyst product line) to implement the underlay?

Let’s recap what we really need to run VMware NSX:

Should I Go with VXLAN or MLAG with STP?

TL&DR: It’s 2020, and VXLAN with EVPN is all the rage. Thank you, you can stop reading.

On a more serious note, I got this question from Johannes Spanier after he read my do we need complex data center switches for NSX underlay blog post:

Would you agree that for smaller NSX designs (~100 hypervisors) a much simpler Layer2 based access-distribution design with MLAGs is feasible? One would have two distribution switches and redundant access switches MLAGed together.

I would still prefer VXLAN for a number of reasons:

Must Read: Impact of Tomahawk-4 on Data Center Fabric Designs

Dinesh Dutt, a pragmatic IP routing guru, the mastermind behind great concepts like simplified BGP configuration, and one of the best ipSpace.net authors, finally decided to start blogging. His first article describes the impact of having 256 100GE ports in a single ASIC (Tomahawk 4). Hope you’ll enjoy his musings as much as I did ;)

Do We Need Complex Data Center Switches for VMware NSX Underlay

Got this question from one of the ipSpace.net subscribers:

Do we really need those intelligent datacenter switches for underlay now that we have NSX in our datacenter? Now that we have taken a lot of the intelligence out of our underlying network, what must the underlying network really provide?

Reading the marketing white papers the answer would be IP connectivity… but keep in mind that building your infrastructure based on information from vendor white papers usually gives you the results your gullibility deserves.

Getting More Bang for Your VXLAN Bucks

A little while ago I explained why you can’t use more than 4K VXLAN segments on a ToR switch (at least with most ASICs out there). Does that mean that you’re limited to a total of 4K virtual ethernet segments?

Of course not.

You could implement overlay virtual networks in software (on hypervisors or container hosts), although even there the enterprise products rarely give you more than a few thousand logical switches (to use NSX terminology)… but that’s a product limitation, not a technology limitation. Large public cloud providers use the same (or similar) technology to run gazillions of tenant segments.

Building Fabric Infrastructure for an OpenStack Private Cloud

An attendee in my Building Next-Generation Data Center online course was asked to deploy numerous relatively small OpenStack cloud instances and wanted to select the optimum virtual networking technology. Not surprisingly, every $vendor had just the right answer, including Arista:

We’re considering moving from hypervisor-based overlays to ToR-based overlays using Arista’s CVX for approximately 2000 VLANs.

As I explained in Overlay Virtual Networking, Networking in Private and Public Clouds and Designing Private Cloud Infrastructure (plus several presentations) you have three options to implement virtual networking in private clouds:

Commentary: We’re stuck with 40 years old technology

One of my readers sent me this email after reading my Loop Avoidance in VXLAN Networks blog post:

Not much has changed really! It’s still a flood/learn bridged network, at least in parts. We count 2019 and talk a lot about “fabrics” but have 1980’s networks still.

The networking fundamentals haven’t changed in the last 40 years. We still use IP (sometimes with larger addresses and augmentations that make it harder to use and more vulnerable), stream-based transport protocol on top of that, leak addresses up and down the protocol stack, and rely on technology that was designed to run on 500 meters of thick yellow cable.