Category: data center

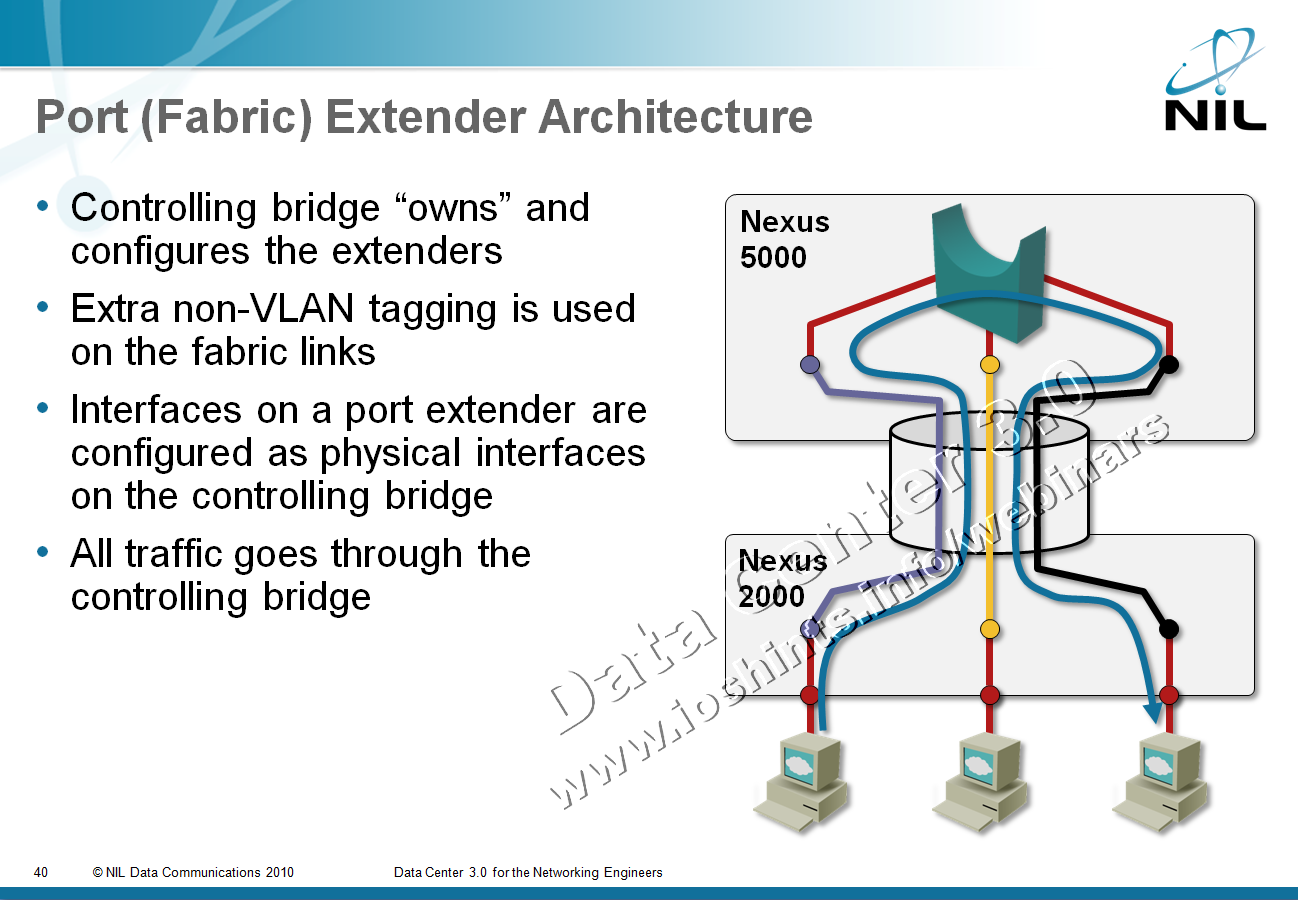

Port or Fabric Extenders?

Among other topics discussed during the Big Hot and Heavy Switches (Part 1) podcast (if you haven’t listened to it yet, it’s high time you do), we’ve mentioned port extenders. As our virtual whiteboard is not always clearly visible during the podcast (although we scribble heavily on it), here’s the big-picture architecture:

After the podcast I wanted to dig into a few minor technical details and stumbled into a veritable confusopoly.

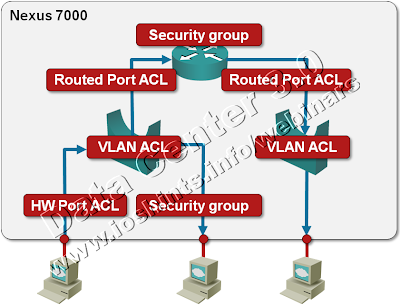

Packet Filters on a Nexus 7000

We’re always quick to criticize ... and usually quiet when we should praise. I’d like to fix one of my omissions: a few days ago I was trying to figure out whether Nexus 7000 supports IPv6 access lists (one of the presentations I was looking at while researching the details for my upcoming Data Center webinar implied there might be a problem) and was pleasantly surprised by the breadth of packet filters offered on this platform. Let’s start with a diagram.

How many large-scale bridging standards do we need?

Someone had a “borderless data center mobility” dream a few years ago and managed to infect a few other people, resulting in a networking industry pandemic that is usually exhibited by the following “facts”:

- Unhindered Virtual Machine mobility across the globe is the absolute prerequisite for any business agility. Wrong. There are other field-proven solutions and although inter-site VM mobility has been demonstrated, it’s still a half-baked idea and has many caveats.

- You can only reach that Holy Grail by extending your layer-2 domains across vast distances. Totally wrong. It would be easier to fix L3 routing and signaling protocols than to invent completely new technologies trying to fix L2 problems. Users of Microsoft NLB might disagree ... in which case I wish them luck in scaling their architecture.

- Large-scale bridging is absolutely mandatory if you want to build cloud solutions with tens of thousands of servers. Not sure about that. Google is there, Facebook, Twitter and Amazon are (at least) close, large web hosting providers have been around for years ... and yet they somehow managed to survive with existing technologies and good network designs.

Just today XKCD published a very relevant comic, so I can skip my usually sarcastic comments and focus on the plethora of emerging large-scale bridging standards and implementations. Let’s walk through them:

Look beyond your cubicle

The Packet Pushers Episode 11 (If You Can’t Be Replaced, You Can’t Be Promoted) contains numerous highly valuable pieces of career advice. I won’t spoil the fun by telling you what they are (listen to the podcast if you haven’t done so already); I’ll just add one to their long list: always look beyond what you’re doing at the moment. For example, a networking engineer working anywhere near a Data Center environment should be very familiar with the server and storage technologies.

Read more ... (this time @ etherealmind.com)

TRILL and 802.1aq are like apples and oranges

A comment by Brad Hedlund sent me studying the differences between TRILL and 802.1aq, and one of the first articles I stumbled upon was a nice overview which claimed that the protocols are very similar (as they both use IS-IS to select the shortest path across the network). After studying whatever sparse information there is on 802.1aq and the obligatory headache, I figured out that the two proposals have completely different forwarding paradigms. To claim they’re similar is the same as saying DECnet phase V and MPLS Traffic Engineering are similar because they both use IS-IS.

Use BitTorrent to update software in your Data Center

Stretch (@packetlife) shared an interesting link in a comment to my P2P traffic is bad for the network post: Facebook and Twitter use BitTorrent to distribute software updates across hundreds (or thousands) of servers ... another proof that no technology is good or bad by itself (Greg Ferro might have a different opinion about FCoE).

Shortly after I tweeted about @packetlife’s link, @sevanjaniyan replied with an even better link to a presentation by Larry Gadea (infrastructure engineer @ Twitter) in which Larry describes Murder, Twitter’s implementation of software distribution on top of the BitTornado library.

If you have a data center running a large number of servers that have to be updated simultaneously, you should definitely watch the whole presentation; here’s a short spoiler for everyone else:

Bridging and Routing, Part II

Based on the readers’ comments on my “Bridging and Routing: is there a difference?” post (thank you!), here are a few more differences between bridging and routing:

Cost. Layer-2 switches are almost always cheaper than layer-3 (usually combined layer-2/3) switches. There are numerous reasons for the cost difference, including:

Why Is TRILL Not Routing at Layer-2

Peter John Hill made an interesting observation in a comment to one of my blog posts; he wrote “TRILL really is routing at layer 2.”

He’s partially right – TRILL uses a routing protocol (IS-IS) and the TRILL protocol used to forward Ethernet frames (TRILL data frames) definitely has all the attributes of a layer-3 protocol:

- TRILL data frames have layer-3 addresses (RBridge nickname);

- They have a hop count;

- Layer-2 next-hop is always the MAC address of the next-hop RBridge;

- As the TRILL data frames are propagated between RBridges, the outer MAC header changes.
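
The attributes listed above can be illustrated with a minimal Python sketch of a single forwarding hop. It is a simplified model, not a TRILL implementation: the `TrillFrame` fields, the `forward` helper and the MAC strings are hypothetical names chosen for illustration, and the hop-count handling is reduced to "drop when exhausted, otherwise decrement".

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class TrillFrame:
    """Simplified model of a TRILL data frame (illustrative field names)."""
    outer_dst_mac: str     # MAC address of the next-hop RBridge
    outer_src_mac: str     # MAC address of the transmitting RBridge
    ingress_nickname: int  # nickname of the RBridge where the frame entered
    egress_nickname: int   # nickname of the RBridge where the frame will exit
    hop_count: int         # decremented on every RBridge hop
    inner_frame: bytes     # original Ethernet frame, carried unchanged


def forward(frame: TrillFrame, my_mac: str, next_hop_mac: str) -> Optional[TrillFrame]:
    """Forward the frame one hop the way a transit RBridge would (simplified)."""
    if frame.hop_count <= 0:
        return None  # hop count exhausted: drop the frame
    # The outer MAC header is rewritten on every hop; the nicknames and the
    # inner frame stay untouched end-to-end.
    return replace(
        frame,
        outer_src_mac=my_mac,
        outer_dst_mac=next_hop_mac,
        hop_count=frame.hop_count - 1,
    )


# Hypothetical path RB1 -> RB2 -> RB3
frame = TrillFrame("mac-rb2", "mac-rb1", 1, 3, 2, b"original frame")
second_hop = forward(frame, "mac-rb2", "mac-rb3")
```

Note how the nicknames behave like layer-3 addresses (they never change in transit), while the outer MAC addresses behave like the layer-2 next-hop information in a routed network.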

Server Virtualization Has Totally Changed the Data Center Networking

There’s an extremely good reason Brad Hedlund mentioned server virtualization in his career advice: it has fundamentally changed Data Center networking.

Years ago, we treated servers as oversized IP hosts. From the networking perspective, they were no different from other IP hosts. Some of them had weird clustering requirements, some of them had multiple uplinks that had to be managed somehow, but those were just minor details. Server virtualization is a completely different beast.

Bridging and Routing: Is There a Difference?

In his comment to one of my TRILL posts, Petr Lapukhov asked the fundamental question: “how is bridging different from routing?”. It’s impossible to give a concise answer (let alone something as succinct as 42) as the various kludges and workarounds (including bridges and their IBM variants) have totally muddied the waters. However, let’s be pragmatic and compare Ethernet bridging with IP (or CLNS) routing. Throughout this article, bridging refers to transparent bridging as defined by the IEEE 802.1 series of standards.

Design scope. IP was designed to support global packet switching network infrastructure. Ethernet bridging was designed to emulate a single shared cable. Various design decisions made in IP or Ethernet bridging were always skewed by these perspectives: scalability versus transparency.
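
To make the "transparency versus scalability" contrast a bit more tangible, here's a rough Python sketch of the two forwarding decisions. It is a toy model under stated assumptions: `LearningBridge` and `route_lookup` are hypothetical names, the bridge ignores aging, VLANs and spanning tree, and the routing table is a plain dictionary of prefixes rather than anything a real router would use.

```python
import ipaddress


class LearningBridge:
    """Toy transparent bridge: learn source MACs, flood unknown destinations."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port where it was last seen

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is sent out of."""
        self.mac_table[src_mac] = in_port       # learn where the sender lives
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: behave like a shared cable and flood
            return sorted(self.ports - {in_port})
        if out_port == in_port:
            return []                           # destination is on the same segment
        return [out_port]


def route_lookup(table, dst):
    """Toy IP lookup: longest-prefix match; no match means the packet is dropped."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, next_hop) for net, next_hop in table.items() if addr in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


bridge = LearningBridge([1, 2, 3])
routes = {
    ipaddress.ip_network("10.0.0.0/8"): "r1",
    ipaddress.ip_network("10.1.0.0/16"): "r2",
}
```

The bridge never refuses to deliver a frame (an unknown destination is flooded everywhere), while the router aggregates destinations into prefixes and drops whatever it cannot match.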

Easy deployment of IPv6 content

During the last Google IPv6 Implementors Conference Donn Lee from Facebook showed how easy it is to make your content available over IPv6 and LISP ... if you happen to have the right load balancer that supports IPv6 (to view his presentation, click the slides link next to his name in the conference agenda). I would say all the excuses why your content cannot be possibly made available over IPv6 are gone (and one can only hope that a certain vendor I’m often mentioning will finally realize IPv6 is needed on more boxes than just routers and switches).

Hat tip to Andrej Kobal who sent me the link to the FB presentation.

Where would you need bridging in the Data Center

In recent months, there’s been a lot of buzz about next-generation Data Center bridging, including the Earth Is Flat rediscovery from Brocade (I thought that was settled in the Middle Ages) and a TRILL article in SearchNetworking (which quoted both Greg and me as being on the opposite sides of the TRILL debate).

The more I think about this problem, the more I’m wondering whether we really need large-scale bridging in data centers (it looks like Google can live quite happily without it). We definitely need some bridging, but a generic large-scale inter-site monstrosity? I doubt it.

Please try to help me: forget all the “this is how we do it” presumptions, figure out a scenario where you absolutely need bridging and describe it in the comments.

Worse is Better

My long-time friend Anne Johnson has published a link to Vijay Gill’s keynote presentation in her short summary of NANOG 49. Vijay has an interesting problem: Google’s infrastructure is so huge that he has no time for fancy toys or complex solutions; keeping the simple stuff running is hard enough. I love his rephrasing of the KISS principle (renamed to Worse is Better):

You see, everybody else is too afraid of looking stupid because they just can’t keep enough facts in their head at once to make multiple inheritance, … or multithreading, or any of that stuff work.

So they sheepishly go along with whatever faddish programming network craziness has come down from the architecture astronauts who speak at conferences and write books and articles and are so much smarter than us that they don’t realize that the stuff that they’re promoting is too hard for us.

His Guiding Principles are also excellent (and oft repeated by the old-timers who have learned their lessons the hard way):

- Important not to try to be all things to all people

- Don't build infrastructure just for its own sake

- Don't imagine unlikely potential needs that aren't really there

I would strongly suggest you browse through the rest of his presentation.

Interconnecting two core switches

Ethan Banks has a great article @ PACKETattack: in Assembly Required – Interconnecting 2 Ethernet Chassis Switches he describes various options you have when you want to connect your redundant core switches. Using more than one physical link is the obvious choice; most people are careful enough to use at least two linecards, but the true magic begins when you start considering the bandwidth allocation to individual linecards and port groups within linecards.

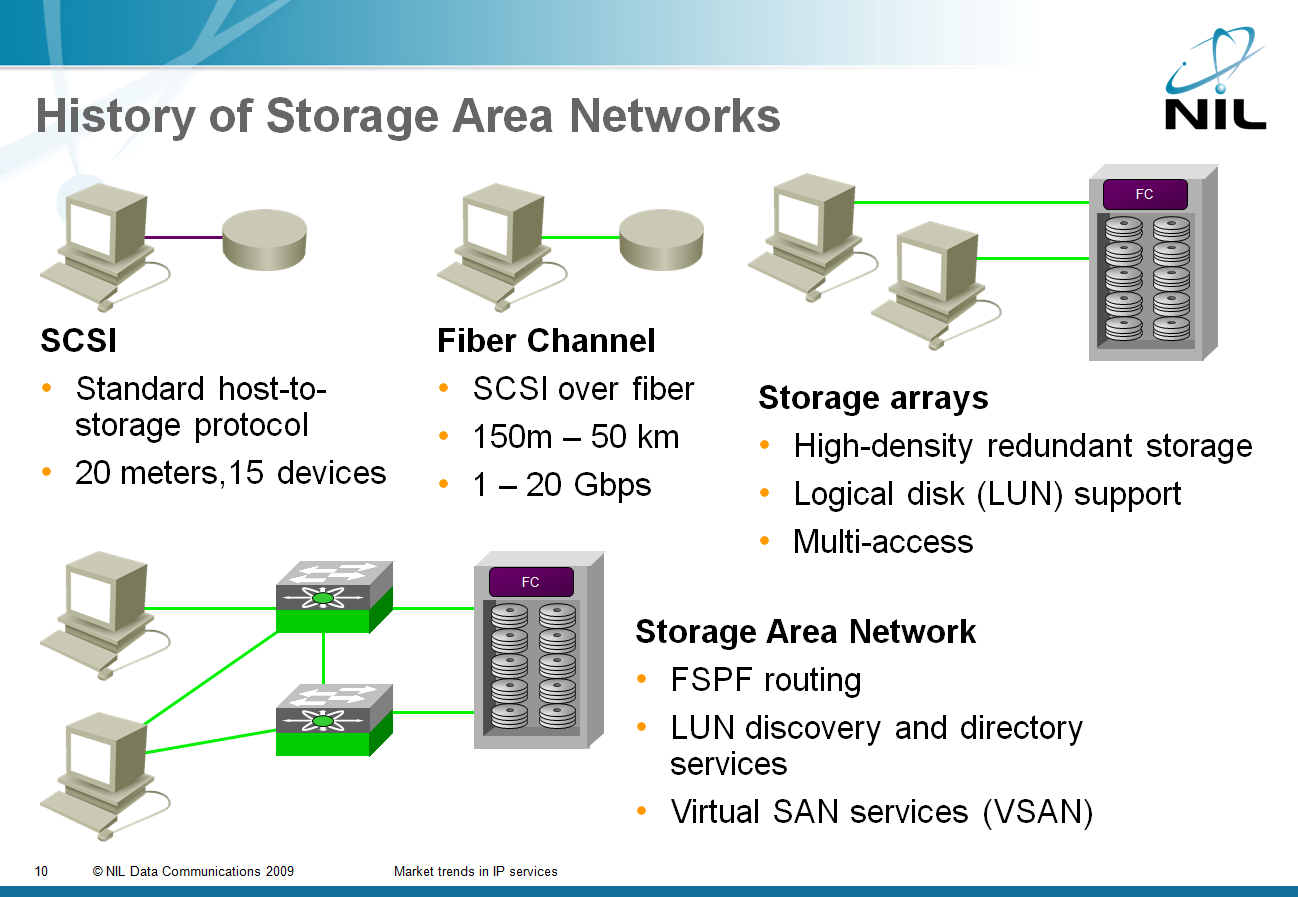

iSCSI and SAN: Two Decades of Rigid Mindsets

Greg Ferro asked a very valid question in his blog: “Why does iSCSI use TCP as the underlying transport protocol”? It’s possible to design a storage-focused protocol that uses connectionless lower layers (NFS comes to mind), but the storage industry has been too focused on its past to see beyond the artificial obstacles it has set up itself.