Category: data center

MPLS/VPN in the Data Center? Maybe not in the hypervisors

A while ago I wrote that the hypervisor vendors should consider turning the virtual switches into PE-routers. We all know that’s never going to happen due to religious objections from everyone who thinks VLANs are the greatest thing ever invented and MP-BGP is pure evil, but there are at least two good technical reasons why putting MPLS/VPN (as we know it today) in the hypervisors might not be the best idea in very large data centers.

Do we really need Stateless Transport Tunneling (STT)?

The first question everyone asked after Nicira had published yet another MAC-over-IP tunneling draft was probably “do we really need yet another encapsulation scheme? Aren’t VXLAN or NVGRE enough?” Bruce Davie tried to answer that question in his blog post (and provided more details in another one), and I’ll try to make the answer a bit more graphical.

VXLAN and EVB questions

Wim (@fracske) De Smet sent me a whole set of very good VXLAN- and EVB-related questions that might be relevant to a wider audience.

If I understand you correctly, you think that VXLAN will win over EVB?

I wouldn’t say they are competing directly from the technology perspective. There are two ways you can design your virtual networks: (a) smart core with simple edge (see also: voice and Frame Relay switches) or (b) smart edge with simple core (see also: Internet). EVB makes option (a) more viable, VXLAN is an early attempt at implementing option (b).

OpenFlow: A perfect tool to build SMB data center

When I was writing about the NEC+IBM OpenFlow trials, I figured out a perfect use case for OpenFlow-controlled network forwarding: SMB data centers that need fewer than a few hundred physical servers – be it bare-metal servers or hypervisor hosts (hat tip to Brad Hedlund for nudging me in the right direction a while ago).

Does CCIE still make sense?

A reader of my blog sent me this question:

I am a Telecommunication Engineer currently preparing for the CCIE exam. Do you think that in a near future it will be worth to be a CCIE, due to the recent developments like Nicira? What will be the future of Cisco IOS, and protocols like OSPF or BGP? I am totally disoriented about my career.

Well, although I wholeheartedly agree with the recent post from Derick Winkworth, the sky is not falling (yet):

Edge Virtual Bridging (802.1Qbg) – a Technology Refusing to Die

I thought Edge Virtual Bridging (EVB) would be the technology transforming the kludgy vendor-specific VM-aware networking solutions into a properly designed architecture, but the launch of L2-over-IP solutions for VMware and Xen hypervisors is making EVB obsolete before it ever made it through the IEEE doors.

Updated Webinar Roadmaps

I finally found just the right set of tools to draw and update webinar roadmaps without too much hassle, and updated all of them to include the webinars developed during late 2011 and planned for 2012:

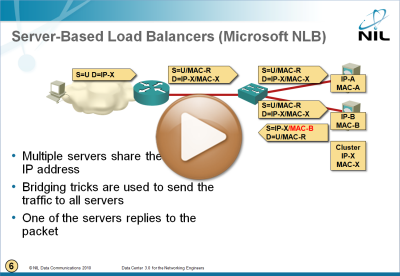

Microsoft Network Load Balancing Behind the Scenes

I figured out I wrote a lot about Microsoft Network Load Balancing (NLB) without ever explaining how that marvel of engineering works. To fix that omission, here’s a short video taken from the Data Center 3.0 webinar.

FIB Update Challenges in OpenFlow Networks

Last week I described the problems high-end service provider routers (or layer-3 switches if you prefer that terminology) face when they have to update a large number of entries in the forwarding tables (FIBs). Will these problems go away when we introduce OpenFlow into our networks? Absolutely not. OpenFlow is just another mechanism to download forwarding entries (this time from an external controller), not a laws-of-physics-changing miracle.

VXLAN runs over UDP – does it matter?

Scott Lowe asked a very good question in his Technology Short Take #20:

VXLAN uses UDP for its encapsulation. What about dropped packets, lack of sequencing, etc., that is possible with UDP? What impact is that going to have on the “inner protocol” that’s wrapped inside the VXLAN UDP packets? Or is this not an issue in modern networks any longer?

Short answer: No problem.
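One way to see why the lack of reliability is unlikely to matter is to look at what VXLAN actually adds to the packet. The sketch below builds and parses the 8-byte VXLAN header, assuming the layout from RFC 7348 (flags, 24-bit VNI, reserved bits) and the IANA-assigned UDP port 4789. The function names are made up for illustration. Note that the header carries no sequence number or acknowledgment field: like plain Ethernet, the tunnel is best-effort, and loss or reordering is left to the inner protocol (TCP, for example).

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789          # IANA-assigned destination port (assumption: RFC 7348 deployment)
VXLAN_FLAG_VNI_VALID = 0x08    # "I" flag: the VNI field carries a valid value


def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Prepend the 8-byte VXLAN header to an inner Ethernet frame (hypothetical helper)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> Tuple[int, bytes]:
    """Return (vni, inner_frame) from a VXLAN UDP payload (hypothetical helper)."""
    if len(payload) < 8:
        raise ValueError("payload shorter than the VXLAN header")
    word1, word2 = struct.unpack("!II", payload[:8])
    if not (word1 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return word2 >> 8, payload[8:]
```

The result of `vxlan_encap` would be sent as the payload of a UDP datagram to `VXLAN_UDP_PORT`; nothing in it lets the receiving tunnel endpoint detect a missing or out-of-order frame.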

… updated on Tuesday, November 17, 2020 16:49 UTC

IP Renumbering in Disaster Avoidance Data Center Designs

It’s hard for me to admit, but there just might be a corner use case for split subnets and inter-DC bridging: even if you move a cold VM between data centers in a controlled disaster avoidance process (moving live VMs rarely makes sense), you might not be able to change its IP address due to hard-coded IP addresses, be it in application code or configuration files.

Disaster recovery is a different beast: if you’ve lost the primary DC, it doesn’t hurt if you instantiate the same subnet in the backup DC.

Can We Really Ignore Spaghetti and Horseshoes?

Brad Hedlund wrote a thought-provoking article a few weeks ago, claiming that the horseshoes (or trombones) and spaghetti created by virtual workloads and appliances deployed anywhere in the network don’t matter much with new data center designs that behave like distributed switches. In theory, he’s right. In practice, less so.

Which virtual networking technology should I use?

After I published the Decouple virtual networking from the physical world article, @paulgear1 sent me a very valid tweet: “You seemed a little short on suggestions about the path forward. What should customers do right now?” Apart from the obvious “it depends”, these are the typical use cases (as I understand them today – please feel free to correct me).

FCoE and LAG – industry-wide violation of FC-BB-5?

Anyone serious about high-availability connects servers to the network with more than one uplink, more so when using converged network adapters (CNA) with FCoE. Losing all server connectivity after a single link failure simply doesn’t make sense.

If at all possible, you should use dynamic link aggregation with LACP to bundle the parallel server-to-switch links into a single aggregated link (also called a bonded interface in Linux). In theory, it should be simple to combine FCoE with LAG – after all, FCoE runs on top of a lossless Ethernet MAC service. In practice, there’s a huge difference between theory and practice.

Nexus vPC and Consistency Checker

Michel sent me a detailed e-mail describing both his enthusiasm for vPC and the headaches the consistency checker is causing him. Here’s the good part:

Nexus vPC seems like a perfect solution for real multi-chassis etherchannel. At work we're using it extensively on a few pairs of Nexus 7000's.

... and then it turns sour:

However, there is one MAJOR drawback with vPC at this time, it's the way the consistency checker works (or rather, does not work). We've come across two specific situations where consistency checker will bring down your beautiful and redundant vPC link, and we've found no way around.

Here are his problems: