VXLAN termination on physical devices

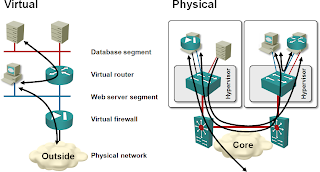

Whenever I discuss VXLAN with a fellow networking engineer, I inevitably get the question “how will I connect this to the outside world?” Let’s assume you want to build a pretty typical 3-tier application architecture (next diagram) using VXLAN-based virtual subnets, and you already have firewalls and load balancers. Can you use them?

The product information in this blog post is outdated: Arista, Brocade, Cisco, Dell, F5, HP and Juniper are all shipping hardware VXLAN gateways (this post has more up-to-date information). The concepts explained in the following text are still valid; however, I would encourage you to read other VXLAN-related posts on this web site or watch the VXLAN webinar to get a more recent picture.

In the near future, the only product supporting a VXLAN Tunnel End Point (VTEP) is the Nexus 1000V virtual switch; the only devices you can connect to a VXLAN segment are thus the virtual Ethernet interfaces of virtual machines. If you want to use a router, firewall or load balancer (sometimes lovingly called an application delivery controller) between two VXLAN segments, or between a VXLAN segment and the outside world (for example, a VLAN), you have to use a VM version of the layer-3 device. That’s not necessarily a good idea: virtual networking appliances have numerous performance drawbacks and consume way more CPU cycles than needed ... but if you’re a cloud provider billing your customers by VM instances or CPU cycles, you might not care too much.

The virtual networking appliances also introduce extra hops and unpredictable traffic flows into your network, as they can freely move around the data center at the whim of workload balancers like VMware’s DRS. A clean network design (left) thus quickly morphs into a total spaghetti mess (right):

Cisco doesn’t have any L3 VM-based product, and the only thing you can get from VMware is vShield Edge: a dumbed-down Linux with a fancy GUI. If you’re absolutely keen on deploying VXLAN, that shouldn’t stop you; there are numerous VM-based products, including the BIG-IP load balancer from F5 and Vyatta’s routers. Worst case, you can turn a standard Linux VM into a usable router, firewall or NAT device by removing less functionality from it than VMware did. Not that I would necessarily like doing that, but it’s one of the few options we have at the moment.

Next steps?

Someone will have to implement VXLAN on physical devices sooner or later; running networking functions in VMs is simply too slow and too expensive. While I don’t have any firm information (not even roadmaps), do keep in mind Ken Duda’s enthusiasm during the VXLAN Packet Pushers podcast (and remember that both Arista and Broadcom appear in the author list of VXLAN and NVGRE drafts).

Furthermore, the VXLAN encapsulation format is actually a subset of the OTV encapsulation, as Omar Sultan pointed out in his VXLAN Deep Dive blog post, which means that Cisco already has the hardware needed to terminate VXLAN segments in the Nexus 7000.
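For reference, here’s a minimal Python sketch of the 8-byte VXLAN header, assuming the format as later standardized in RFC 7348: an 8-bit flags field with the I bit (0x08) set, 24 reserved bits, the 24-bit VXLAN Network Identifier (VNI) and 8 more reserved bits. The header sits behind an outer UDP header (IANA-assigned destination port 4789). The function names and the sample VNI are illustrative, and the sketch doesn’t attempt to show the OTV relationship.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid segment ID
VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)

# Network byte order: flags (1 byte), 3 reserved bytes, then a 32-bit word
# holding the 24-bit VNI followed by one reserved byte
_HEADER = struct.Struct("!B3xI")


def build_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return _HEADER.pack(VXLAN_FLAG_VNI_VALID, vni << 8)


def parse_vxlan_header(header: bytes) -> int:
    """Return the VNI from the first 8 bytes of a VXLAN payload."""
    flags, vni_word = _HEADER.unpack(header[:8])
    if not flags & VXLAN_FLAG_VNI_VALID:
        raise ValueError("I flag not set; VNI is not valid")
    return vni_word >> 8


header = build_vxlan_header(5000)
print(header.hex())                # 0800000000138800
print(parse_vxlan_header(header))  # 5000
```

The hex output reads as the flags byte, three reserved bytes, the VNI 0x001388 and a final reserved byte.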

How could you do it?

Layer-3 termination of VXLAN segments is actually pretty easy (from the architectural and control plane perspective):

- VMs attached to a VXLAN segment are configured with the default gateway’s IP address (the logical IP address of the physical termination device within the VXLAN subnet);

- A VM sending an IP packet to an off-subnet destination has to send it to the default gateway, so it first sends an ARP request for the default gateway’s IP address;

- One or more layer-3 VXLAN termination devices respond to the ARP request (which they receive in VXLAN encapsulation), and the Nexus 1000V switch in the hypervisor running the VM learns the RouterVXLANMAC-to-RouterPhysicalIP address mapping from the encapsulated reply;

- When the VM sends an IP packet to the default gateway’s MAC address, the Nexus 1000V switch encapsulates the Ethernet frame in VXLAN and forwards it to the nearest RouterPhysicalIP address.
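The steps above can be modeled with a toy VTEP forwarding table. This is a hypothetical sketch, not the Nexus 1000V implementation: it assumes flood-and-learn VXLAN behavior (broadcasts such as the ARP request go to a per-segment IP multicast group, and the VTEP learns inner source MAC addresses from the outer source IP of decapsulated frames). The class name, VNI, multicast group and all addresses are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"


@dataclass
class ToyVtep:
    """Hypothetical hypervisor VTEP using flood-and-learn forwarding."""
    local_ip: str
    # (VNI, inner MAC address) -> IP address of the remote VTEP behind that MAC
    mac_table: Dict[Tuple[int, str], str] = field(default_factory=dict)

    def learn(self, vni: int, inner_src_mac: str, outer_src_ip: str) -> None:
        # A decapsulated frame tells us its inner source MAC sits behind its outer source IP
        self.mac_table[(vni, inner_src_mac)] = outer_src_ip

    def outer_destination(self, vni: int, inner_dst_mac: str, flood_group: str) -> str:
        # Broadcasts (like the ARP request) and unknown MACs are flooded to the
        # multicast group; known unicast MACs go straight to the remote VTEP
        if inner_dst_mac == BROADCAST_MAC:
            return flood_group
        return self.mac_table.get((vni, inner_dst_mac), flood_group)


VNI = 5000
FLOOD_GROUP = "239.1.1.1"              # hypothetical multicast group for the segment
ROUTER_VXLAN_MAC = "02:00:00:00:00:fe"  # hypothetical RouterVXLANMAC
ROUTER_PHYSICAL_IP = "192.0.2.254"      # hypothetical RouterPhysicalIP

hypervisor = ToyVtep(local_ip="192.0.2.11")
# The VM's ARP request for the default gateway is flooded
arp_dst = hypervisor.outer_destination(VNI, BROADCAST_MAC, FLOOD_GROUP)
# The router's VXLAN-encapsulated ARP reply teaches the VTEP where the router MAC lives
hypervisor.learn(VNI, ROUTER_VXLAN_MAC, ROUTER_PHYSICAL_IP)
# Subsequent frames to the gateway MAC are unicast to RouterPhysicalIP
data_dst = hypervisor.outer_destination(VNI, ROUTER_VXLAN_MAC, FLOOD_GROUP)
print(arp_dst, data_dst)  # 239.1.1.1 192.0.2.254
```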

Apart from the initial ARP request, no broadcast or flooding is involved in the layer-3 termination, so you could easily use the same physical IP address and the same VXLAN MAC address on multiple routers (anycast) and achieve instant redundancy without first-hop redundancy protocols like HSRP or VRRP.
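A rough sketch of why anycast gives you redundancy for free: the hypervisor’s VTEP table keeps pointing at the same RouterPhysicalIP, and the IP transport network decides which router actually receives the traffic. The sketch assumes each router announces the shared anycast address into the IGP and that the transport picks the lowest-cost live announcer. Router names and costs are hypothetical, and ties are broken by name only to keep the example deterministic (a real network would likely use ECMP).

```python
from typing import Dict, Set


def anycast_receiver(igp_cost: Dict[str, int], failed: Set[str]) -> str:
    """Return the router that receives traffic sent to the shared anycast RouterPhysicalIP.

    igp_cost maps each router announcing the anycast address to its IGP cost
    as seen from the sending hypervisor (hypothetical values).
    """
    live = {router: cost for router, cost in igp_cost.items() if router not in failed}
    if not live:
        raise RuntimeError("no router announces the anycast address")
    # Lowest cost wins; the router name is only a deterministic tie-breaker
    return min(live, key=lambda router: (live[router], router))


costs = {"core-1": 10, "core-2": 20}
print(anycast_receiver(costs, failed=set()))       # core-1
print(anycast_receiver(costs, failed={"core-1"}))  # core-2
```

Nothing in the VM’s ARP cache or the VTEP table changes when core-1 fails; convergence is left entirely to the IP routing protocol.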

Layer-2 extension of VXLAN segments into VLANs (which you might need to connect VXLAN-based hosts to an external firewall) is a bit tougher. Because you’re bridging between VXLAN and an 802.1Q VLAN, you have to ensure that you don’t create a forwarding loop.

You could configure the VXLAN layer-2 extension (bridging) on multiple physical switches and run STP over VXLAN ... but I hope we’ll never see that implemented. It would be way better to use IP functionality to select the VXLAN-to-VLAN forwarder. You could, for example, run VRRP between redundant VXLAN-to-VLAN bridges and use the VRRP IP address as the VXLAN physical IP address of the bridge (all off-VXLAN MAC addresses would appear to other VTEPs as reachable via that IP address). The VRRP functionality would also control the VXLAN-to-VLAN forwarding: only the active VRRP gateway would perform the L2 forwarding. You could still use a minimal subset of STP to prevent forwarding loops, but I wouldn’t use it as the main convergence mechanism.
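The VRRP-based forwarder selection could look roughly like the following sketch. It assumes RFC 5798 master election (highest priority wins, ties broken by the highest primary IP address) and deliberately ignores timers, preemption and advertisements; bridge names and addresses are hypothetical. Only the elected master bridges between VXLAN and the VLAN, while the VRRP virtual IP remains the VTEP address other VTEPs see for all off-VXLAN MAC addresses.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VxlanVlanBridge:
    name: str
    primary_ip: str
    priority: int       # VRRP priority; 255 is reserved for the address owner
    alive: bool = True


def vrrp_master(bridges: List[VxlanVlanBridge]) -> Optional[VxlanVlanBridge]:
    live = [b for b in bridges if b.alive]
    if not live:
        return None
    # Highest priority wins; equal priorities are broken by the higher primary IP address
    return max(live, key=lambda b: (b.priority, ipaddress.ip_address(b.primary_ip)))


def l2_forwarders(bridges: List[VxlanVlanBridge]) -> List[str]:
    # Only the VRRP master performs VXLAN-to-VLAN forwarding, so at most one
    # bridge forwards as long as VRRP agrees on a single master
    master = vrrp_master(bridges)
    return [master.name] if master is not None else []


sw1 = VxlanVlanBridge("sw1", "192.0.2.1", priority=200)
sw2 = VxlanVlanBridge("sw2", "192.0.2.2", priority=100)
print(l2_forwarders([sw1, sw2]))       # ['sw1']
sw1_down = VxlanVlanBridge("sw1", "192.0.2.1", priority=200, alive=False)
print(l2_forwarders([sw1_down, sw2]))  # ['sw2']
```

A split-brain situation (both bridges believing they are master) would still create a loop, which is where the minimal STP safety net mentioned above would come in.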

Summary

VXLAN is a great concept that gives you clean separation between virtual networks and the physical IP-based transport infrastructure, but we need VXLAN termination in physical devices (switches, potentially also firewalls and load balancers) before we can start considering large-scale deployments. Until then, it will remain an interesting proof-of-concept tool or a niche product used by infrastructure cloud providers.

More information

The concepts and challenges of virtualized networking are described in the Introduction to Virtualized Networking webinar. For more details, check out my Data Center 3.0 for Networking Engineers (recording) and VMware Networking Deep Dive (recording) webinars. Both of them are also available as part of the Data Center Trilogy and you get access to all three webinars (and numerous others) as part of the yearly subscription.

http://blog.ipspace.net/2015/09/vxlan-hardware-gateway-overview.html