Category: data center

Layer-2 Network Is a Single Failure Domain

This topic has been on my to-write list for over a year and its working title was phrased as a question, but all the horror stories you’ve shared with me over the last year or so (some of them published in my blog) have persuaded me that there’s no question – it’s a fact.

If you think I’m rephrasing the same topic ad nauseam, you’re right, but every month or so I get an external trigger that pushes me back to the same discussion, this time an interesting comment thread on Massimo Re Ferre’s blog.

IPv6-only Data Center (built by Tore Anderson)

When I mentioned the uselessness of stateless NAT64, I got into a nice discussion with Tore Anderson, who wanted to use stateless NAT64 in the reverse direction (stateless NAT46) to build an IPv6-only data center. Some background information first (to define the context of his thinking before we jump into the technical details):

Are Fixed Switches More Efficient Than Chassis Ones?

Brad Hedlund did an excellent analysis of fixed versus chassis-based switches in his Interop presentation and concluded that fixed switches offer higher port density and lower per-port power consumption than chassis-based ones. That’s true when comparing individual products, but let’s ask a different question: how much does it take to implement a 384-port non-blocking fabric (equivalent to Arista’s 7508 switch) with fixed switches?
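The question above is mostly arithmetic, so here is a minimal sizing sketch. It assumes a two-tier leaf-and-spine (Clos) fabric built from identical fixed switches, with half of each leaf's ports facing servers and half facing the spines, and uplinks spread evenly across all spines. The 64-port switch size and the function name `size_leaf_spine` are illustrative assumptions, not figures taken from the post.

```python
import math


def size_leaf_spine(edge_ports: int, switch_ports: int) -> dict:
    """Size a non-blocking two-tier leaf-and-spine fabric of identical switches.

    Assumptions (for illustration only): every leaf splits its ports evenly
    between server-facing downlinks and spine-facing uplinks, and each leaf
    has the same number of links to every spine.
    """
    if switch_ports < 2 or switch_ports % 2:
        raise ValueError("switch_ports must be a positive even number")

    down = switch_ports // 2          # server-facing ports per leaf
    up = switch_ports - down          # spine-facing ports per leaf (1:1 ratio)
    leaves = math.ceil(edge_ports / down)
    if leaves > switch_ports:
        raise ValueError("too many leaves for a two-tier fabric")

    # Lower bound on spines from raw port count ...
    min_spines = math.ceil(leaves * up / switch_ports)
    # ... rounded up until each leaf's uplinks divide evenly across the spines.
    spines = next(s for s in range(min_spines, up + 1) if up % s == 0)

    return {
        "leaves": leaves,
        "spines": spines,
        "links_per_leaf_spine_pair": up // spines,
        "switches": leaves + spines,
        "fabric_links": leaves * up,
    }


result = size_leaf_spine(edge_ports=384, switch_ports=64)
print(result)
```

Under these assumptions a 384-port fabric needs 12 leaves and 8 spines (20 switches) plus 384 inter-switch links, all of which have to be cabled, powered and managed in addition to the edge ports.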

Virtual Networks: the Skype Analogy

I usually use the “Nicira is Skype of virtual networking” analogy when describing the differences between Nicira’s NVP and traditional VLAN-based implementations. Cade Metz liked it so much he used it in his What Is a Virtual Network? It’s Not What You Think It Is article, so I guess a blog post is long overdue.

Before going into more details, you might want to browse through my Cloud Networking Scalability presentation (or watch its recording) – the crucial slide is this one:

Does Optimal L3 Forwarding Matter in Data Centers?

Every data center network has a mixture of bridging (layer-2 or MAC-based forwarding, aka switching) and routing (layer-3 or IP-based forwarding); the exact mix, the size of L2 domains, and the position of the L2/L3 boundary depend heavily on the workload ... and I would really like to understand what works for you in your data center, so please leave as much feedback as you can in the comments.

LineRate Proxy: Software L4-7 Appliance With a Twist

Buying a new networking appliance (be it a VPN concentrator, firewall or load balancer … aka Application Delivery Controller) is a royal pain. You never know how much performance you’ll need in two or three years (and your favorite bean counter will not allow you to scrap it in less than 4-5 years). You do know you’ll never get the performance promised in the vendor’s data sheets … but you don’t always know which combination of features will kill the box.

Now, imagine someone offers you a performance guarantee – you’ll always get what you paid for. That’s what LineRate Systems, a startup just exiting stealth mode, is promising.

Full Mesh Is the Worst Possible Fabric Architecture

One of the answers you get from some of the vendors selling you data center fabrics is “you can use any topology you wish” and then they start to rattle off an impressive list of buzzword-bingo-winning terms like full mesh, hypercube and Clos fabric. While full mesh sounds like a great idea (after all, what could possibly go wrong if every switch can talk directly to any other switch), it’s actually the worst possible architecture (apart from the fully randomized Monkey Design).
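A quick way to get a feel for the full-mesh numbers is the sketch below. It assumes exactly one link between every pair of switches and shortest-path-only forwarding; both are assumptions made for this illustration, as are the switch count, the port count and the function name `full_mesh_profile`.

```python
def full_mesh_profile(switches: int, switch_ports: int) -> dict:
    """Port and bandwidth bookkeeping for a full mesh of identical switches.

    Assumes one link per switch pair and traffic that only uses the direct
    link between source and destination switch (illustrative assumptions).
    """
    if switches < 2:
        raise ValueError("a mesh needs at least two switches")
    fabric_ports = switches - 1       # one port towards every other switch
    if fabric_ports >= switch_ports:
        raise ValueError("no ports left for servers")

    return {
        "fabric_links": switches * (switches - 1) // 2,
        "edge_ports_per_switch": switch_ports - fabric_ports,
        # Share of a switch's fabric bandwidth usable towards one given peer
        "direct_share_per_peer": 1 / fabric_ports,
    }


mesh = full_mesh_profile(switches=8, switch_ports=64)
print(mesh)
```

With eight 64-port switches, each switch spends 7 ports on the mesh, yet traffic between any two particular switches can use only one of those 7 links under the shortest-path assumption.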

Beware of fabric-wide Link Aggregation Groups

Fernando made a very valid comment to my Monkey Design Still Doesn’t Work Well post: if we added a few more links between edge and core (fabric) switches to that network, we might get optimal bandwidth utilization in the core. As it turns out, that’s not the case.

Networking Tech Field Day #3: First Impressions

Last week Stephen Foskett and Greg Ferro brought back their merry crew of geeks (and a network security princess) for the third Networking Tech Field Day. We met some exciting new vendors (Infineta and Spirent) and a few long-time friends (Arista, Cisco, NEC and Solarwinds).

Infineta gave us a fantastic deep-dive into deduplication math, and Spirent blew our socks off with their testing gear. As for the generic state of the networking industry, William R. Koss nicely summarized my feelings in a blog post published last Friday:

Cisco & VMware: Merging the Virtual and Physical NICs

Virtual (soft) switches present in almost every hypervisor significantly reduce the performance of high-bandwidth virtual machines (measurements done by Cisco a while ago indicate you could get up to 38% more throughput if you tie VMs directly to hardware NICs), but as I argued in my “Soft Switching Might Not Scale, But We Need It” post, we need hypervisor switches to isolate the virtual machines from the vagaries of the physical NICs.

Engineering gurus from Cisco and VMware have yet again proven me wrong – you can combine VMDirectPath and vMotion if you use VM-FEX.

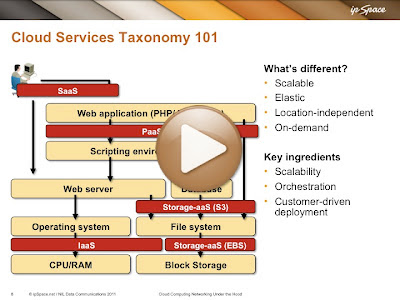

Cloud Services Taxonomy

One of the challenges of designing data center networks that support cloud service is agreeing on what exactly each one of those services should be doing. This video (part of the Cloud Computing Networking webinar) explains what various categories of cloud services actually do and where they could be used in a typical web application stack.

Stretched Layer-2 Subnets – The Server Engineer Perspective

A long while ago I got a very interesting e-mail from Dmitriy Samovskiy, the author of VPN-Cubed, in which he politely asked me why networking engineers find stretched layer-2 subnets so important. As we might get lucky and spot a few unicorns merrily dancing over stretched layer-2 rainbows while attending the Networking Tech Field Day, I decided to share the e-mail contents with you (obviously after getting an OK from Dmitriy).

Looking into Data Center Storage Protocols mysteries

Should you use FC, FCoE or iSCSI when deploying new gear in your existing data center? What about greenfield deployments? What are the decision criteria? Should you just skip iSCSI and use NFS if you’re focusing on server virtualization with VMware? Does it still make sense to build a separate iSCSI network? Are jumbo frames useful? We’ll try to answer all these questions and a few more in the first Data Center Virtual Symposium sponsored by Cisco Systems.

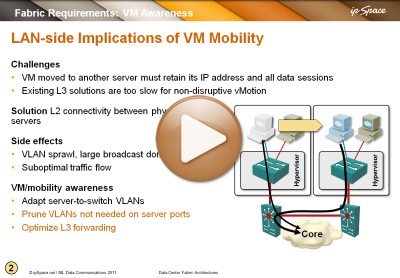

Video: Networking requirements for VM mobility

You’re probably sick and tired of me writing and talking about networking requirements for VM mobility (large VLAN segments that some people want to extend across the globe), but just in case you have to show someone a brief summary, here’s a video taken from the Data Center Fabric Architectures webinar.

You’ll also find VM mobility challenges described to various degrees in the Introduction to Virtual Networking, VMware Networking Deep Dive and Data Center Interconnects webinars.

… updated on Friday, December 25, 2020 18:17 UTC

Scalable, Virtualized, Automated Data Center

Matt Stone sent me a great set of questions about the emerging Data Center technologies (the headline is also his, not mine) together with an interesting observation “it seems as though there is a lot of reinventing the wheel going on”. Sure is – read Doug Gourlay’s OpenFlow article for a skeptical insider view. Here's a lovely tidbit:

So every few years the networking industry invents some new term whose primary purpose is to galvanize the thinking of IT purchasers, give them a new rallying cry to generate budget, hopefully drive some refresh of the installed base so us vendor folks can make our quarter bookings targets.

But I’m digressing, let’s focus on Matt’s questions. Here are the first few.