Cisco & VMware: Merging the Virtual and Physical NICs

Virtual (soft) switches present in almost every hypervisor significantly reduce the performance of high-bandwidth virtual machines (measurements done by Cisco a while ago indicate you could get up to 38% more throughput if you tie VMs directly to hardware NICs), but as I argued in my “Soft Switching Might Not Scale, But We Need It” post, we need hypervisor switches to isolate the virtual machines from the vagaries of the physical NICs.

Engineering gurus from Cisco and VMware have yet again proven me wrong – you can combine VMDirectPath and vMotion if you use VM-FEX.

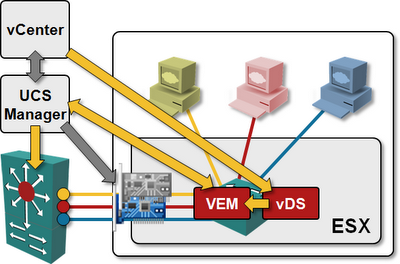

This is (approximately) how that marvel of engineering works (and you’ll find more details in this presentation):

- You have to configure VM-FEX (which means that you can only use this trick if you have a UCS system with the Palo chipset in the server blades).

- Palo chipset emulates the registers and data structures used by the VMXNET3 paravirtualized device driver (and most VMs use VMXNET3 today due to its performance benefits). You can thus link a VM with VMXNET3 device driver directly to the physical hardware presented to the server by the Palo chipset (using VMDirectPath, for example).

Cisco was using VMDirectPath in the VM-FEX performance measurements; in most VM-FEX deployments you’d use the passthrough VEM to enable vMotion of the VMs using VM-FEX.

- vSphere 5 introduced support for vMotion with VMDirectPath for VM-FEX NICs. This enhancement is crucial as it allows a VM using VM-FEX NIC without a VEM to be vMotioned to another host.

The trick VMware’s engineers used is very simple (conceptually, but I’m positive there are numerous highly convoluted implementation details): once you get a request to vMotion a VM, you freeze the VM, copy physical registers of the VM-FEX VIC to the data structures used by the hypervisor kernel implementation of VMXNET3 device, disconnect the VM from the physical hardware, and allow it to continue working through the virtual VMXNET3 device and VEM. Once the VM is moved to another ESX host, the contents of the VMXNET3 virtual device registers get copied to the physical NIC, and the VM yet again regains full access to the physical hardware.
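The handoff described above can be sketched as a toy state machine. This is only a conceptual model under stated assumptions: the `Nic` and `Vm` classes, the `migrate` function, and the register names are all hypothetical and do not correspond to any VMware or Cisco API. Real registers and rings are far more involved.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Nic:
    """Hypothetical NIC exposing VMXNET3-style registers (physical or emulated)."""
    name: str
    registers: Dict[str, int] = field(default_factory=dict)


@dataclass
class Vm:
    """Hypothetical VM bound to exactly one NIC at a time."""
    name: str
    nic: Nic
    frozen: bool = False


def migrate(vm: Vm, emulated: Nic, target_physical: Nic) -> List[str]:
    """Walk through the handoff steps and return them as a log."""
    log: List[str] = []

    # 1. Freeze the VM so the register contents cannot change mid-copy.
    vm.frozen = True
    log.append("freeze")

    # 2. Copy the physical NIC registers into the emulated VMXNET3 device.
    emulated.registers = dict(vm.nic.registers)
    log.append("copy physical -> emulated")

    # 3. Detach from hardware; the VM resumes on the emulated device (via the VEM).
    vm.nic = emulated
    vm.frozen = False
    log.append("resume on emulated")

    # 4. The VM, together with its emulated device state, moves to the target host.
    log.append("vMotion")

    # 5. On the target host, copy the emulated registers to the physical NIC
    #    and reattach the VM to the hardware.
    target_physical.registers = dict(emulated.registers)
    vm.nic = target_physical
    log.append("resume on physical")
    return log


# Illustrative register names and values only.
source = Nic("vic-host-a", {"tx_head": 17, "rx_head": 42})
target = Nic("vic-host-b")
vm = Vm("web01", source)
steps = migrate(vm, Nic("vmxnet3-emulated"), target)
```

The point of the sketch is that the VM sees the same register layout at every step, which is why the Palo chipset has to emulate the VMXNET3 device so faithfully.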

Was it all just an alphabet soup?

Check out my virtualization webinars – they will help you get a decent foothold in the brave new world of server and network virtualization.

Intra-host traffic is obviously faster than traffic going through VM-FEX (and back). The question is: how often, and how much, intra-host traffic would you have in your environment? It all depends on the applications.

- The whole virtual ADC market is another use case.

- Actually, so are all the intermediate VMs that traffic passes through before reaching the application VMs.

http://www.cisco.com/en/US/docs/switches/datacenter/nexus5000/sw/layer2/513_n1_1/b_Cisco_n5k_layer2_config_gd_rel_513_N1_1_chapter_010101.html

It won't be too much longer before VM-FEX is being implemented on non-Cisco NICs, and later on non-Cisco servers...

Even with VM-FEX you still have to install a VEM on ESXi. :)

Finally, I was told the VM-FEX VEM is not exactly the same thing as Nexus 1000v VEM (but you do need a kernel module because you have to modify VDS behavior).

A VM-FEX virtual Ethernet interface can be configured as a SPAN source and destination just like a physical interface can, but the 5Ks and FIs have limitations on SPAN, and depending on the SPAN requirements this could cause a problem. With 1000v, a VSM supports 64 SPAN/ERSPAN sessions across all installed VEMs. You can send this traffic to the physical network or keep it "virtual" by using a virtual traffic analyzer such as the NAM virtual service blade on the Nexus 1010 "appliance".

As for NetFlow, the 5Ks and FIs don't support it, so NetFlow requirements are another use case for 1000v.

QoS on the 5Ks and FIs doesn't support matching/marking L3 DSCP values because they are L2 switches. When L3 capability is added (available now for 55XX, soon for 62XX), DSCP matching/marking is possible. I learned this when prepping UCS for a Unified Communications install. The UC applications mark traffic with DSCP values, and Cisco recommends honoring those values at L2 by translating them to CoS using 1000v.
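To illustrate what translating DSCP to CoS means, here is a minimal sketch of one common convention: taking the three most significant bits of the 6-bit DSCP value as the 3-bit CoS. Treat this as an assumption; the mapping Cisco actually recommends for a given UC deployment may differ, and `dscp_to_cos` is a hypothetical helper, not a 1000v command.

```python
def dscp_to_cos(dscp: int) -> int:
    """Map a 6-bit DSCP value to a 3-bit CoS using its top three bits."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0..63")
    return dscp >> 3


# A few well-known DSCP code points and the CoS this convention yields.
ef_cos = dscp_to_cos(46)    # EF (voice bearer)
cs3_cos = dscp_to_cos(24)   # CS3
af41_cos = dscp_to_cos(34)  # AF41
```

With this convention EF lands in CoS 5, CS3 in CoS 3, and AF41 in CoS 4.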

I am not 100% certain, but I am pretty sure that when I looked at this for UCS a few months back they were the same VEM. To verify, I'll download the latest releases for 1000v and VM-FEX and see how they compare.

Complete VM-FEX setup videos at http://ucsguru.com