Category: data center

Local TCP Anycast Is Really Hard

Pete Lumbis and Network Ninja mentioned an interesting Unequal-Cost Multipathing (UCMP) data center use case in their comments to my UCMP-related blog posts: anycast servers.

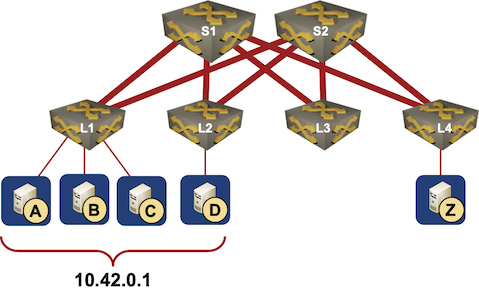

Here’s a typical scenario they mentioned: a bunch of servers, randomly connected to multiple leaf switches, is offering a service on the same IP address (that’s where anycast comes from).

Typical Data Center Anycast Deployment

Mythbusting: NFV Data Center Fabric Buffering Requirements

Every now and then I stumble upon an article or a comment explaining how Network Function Virtualization (NFV) introduces new data center fabric buffering requirements. Here’s a recent example:

For Telco/carrier Cloud environments, where NFVs (which are much slower than hardware SGW) get used a lot, latency is higher with a lot of jitter due to the nature of software and the varying link speeds, so DC-level near-zero buffer is not applicable.

It seems to me we’re dealing with another myth. Starting with the basics:

Worth Watching: Rethinking BGP in the Data Center

Ever since draft-lapukhov was first published almost a decade ago, we all knew BGP was the only routing protocol suitable for data center networking… or at least Thought Leaders and vendor marketers seem to be of that persuasion.

… updated on Monday, May 24, 2021 12:05 UTC

Packet Bursts in Data Center Fabrics

When I wrote about the (non)impact of switching latency, I was (also) thinking about packet bursts jamming core data center fabric links when I mentioned the elephants in the room… but when I started writing about them, I realized they might be yet another red herring (together with the supposed need for large buffers in data center switches).

Here’s how it looks like from my ignorant perspective when considering a simple leaf-and-spine network like the one in the following diagram. Please feel free to set me straight, I honestly can’t figure out where I went astray.

Does Small Packet Forwarding Performance Matter in Data Center Switches?

TL&DR: No.

Here’s another never-ending vi-versus-emacs-type discussion: merchant silicon like Broadcom Trident cannot forward small (64-byte) packets at line rate. Does that matter, or is it yet another stimulating academic talking point and/or red herring used by vendor marketing teams to justify their high prices?

Here’s what I wrote about that topic a few weeks ago:

Rant: Cisco ACI Complexity

A while ago Antti Leimio wrote a long twitter thread describing his frustrations with Cisco ACI object model. I asked him for permission to repost the whole thread as those things tend to get lost, and he graciously allowed me to do it, so here we go.

I took a 5 days Cisco DCACI course. This is all new to me. I’m confused. Who is ACI for? Capabilities and completeness of features is fantastic but how to manage this complex system?

Chasing CRC Errors in a Data Center Fabric

One of my readers encountered an interesting problem when upgrading a data center fabric to 100 Gbps leaf-to-spine links:

- They installed new fiber cables and SFPs;

- Everything looked great… until someone started complaining about application performance problems.

- Nothing else has changed, so the culprit must have been the network upgrade.

- A closer look at monitoring data revealed CRC errors on every leaf switch. Obviously something was badly wrong with the whole batch of SFPs.

Fortunately, my reader took a closer look at the data before they requested a wholesale replacement… and spotted an interesting pattern:

Video: NetQ and Cumulus Linux Data Models

In the last part of his Cumulus Linux 4.0 Update Pete Lumbis talked about using NetQ to capture streaming telemetry and increase network observability, and the new model-driven configuration approach (including all the usual buzzwords like NETCONF, RPC, YAML, JSON, and OpenConfig) coming in 2020.

Building Secure Layer-2 Data Center Fabric with Cisco Nexus Switches

One of my readers is designing a layer-2-only data center fabric (no SVI interfaces on switches) with stringent security requirements using Cisco Nexus switches, and he wondered whether a host connected to such a fabric could attack a switch, and whether it would be possible to reach the management network in that way.

Do you think it’s possible to reach the MANAGEMENT PLANE from the DATA PLANE? Is it valid to think that there is a potential attack vector that someone can compromise to source traffic from the front of the device (ASIC) through the PCI bus across the CPU to the across the PCI bus to the Platform Controller Hub through the I/O card to spew out the Management Port onto that out-of-band network?

My initial answer was “of course there’s always a conduit from the switching ASIC to the CPU, how would you handle STP/CDP/LLDP otherwise”. I also asked Lukas Krattiger for more details; here’s what he sent me:

Weird: Wrong Subnet Mask Causing Unicast Flooding

When I still cared about CCIE certification, I was always tripped up by the weird scenario with (A) mismatched ARP and MAC timeouts and (B) default gateway outside of the forwarding path. When done just right you could get persistent unicast flooding, and I’ve met someone who reported average unicast flooding reaching ~1 Gbps in his data center fabric.

One would hope that we wouldn’t experience similar problems in modern leaf-and-spine fabrics, but one of my readers managed to reproduce the problem within a single subnet in FabricPath with anycast gateway on spine switches when someone misconfigured a subnet mask in one of the servers.

New on ipSpace.net: Virtualizing Network Devices Q&A

A few weeks ago we published an interesting discussion on network operating system details based on an excellent set of questions by James Miles.

Unfortunately we got so far into the weeds at that time that we answered only half of James’ questions. In the second Q&A session Dinesh Dutt and myself addressed the rest of them including:

- How hard is it to virtualize network devices?

- What is the expected performance degradation?

- Does it make sense to use containers to do that?

- What are the operational implications of running virtual network devices?

- What will be the impact on hardware vendors and networking engineers?

And of course we couldn’t avoid the famous last question: “Should network engineers program network devices?”

You’ll need Standard or Expert ipSpace.net subscription to watch the videos.

Video: Simplify Device Configurations with Cumulus Linux

The designers of Cumulus Linux CLI were always focused on simplifying network device configurations. One of the first features along these lines was BGP across unnumbered interfaces, then they introduced simplified EVPN configurations, and recently auto-MLAG and auto-BGP.

You can watch a short description of these features by Dinesh Dutt and Pete Lumbis in Simplify Network Configuration with Cumulus Linux and Smart Datacenter Defaults videos (part of Cumulus Linux section of Data Center Fabrics webinar).

Fixing Firewall Ruleset Problem For Good

Before we start: if you’re new to my blog (or stumbled upon this blog post by incident) you might want to read the Considerations for Host-Based Firewalls for a brief overview of the challenge, and my explanation why flow-tracking tools cannot be used to auto-generate firewall policies.

As expected, the “you cannot do it” post on LinkedIn generated numerous comments, ranging from good ideas to borderline ridiculous attempts to fix a problem that has been proven to be unfixable (see also: perpetual motion).

Using Ansible with Arista EOS and CloudVision

In mid-September, Carl Buchmann, Fred Hsu, and Thomas Grimonet had an excellent presentation describing Arista’s Ansible roles and collections. They focused on two collections: CloudVision integration, and Arista Validated Designs. All the videos from that presentation are available with free ipSpace.net subscription.

Want to know even more about Ansible and network automation? Join our 2-day automation event featuring network automation experts from around the globe talking about their production-grade automation solutions or tools they created, and get immediate access to automation course materials and reviewed hands-on exercises.

Must Watch: Fault Tolerance through Optimal Workload Placement

While I keep telling you that Google-sized solutions aren’t necessarily the best fit for your environment, some of the hyperscaler presentations contain nuggets that apply to any environment no matter how small it is.

One of those must-watch presentations is Fault Tolerance through Optimal Workload Placement together with a wonderful TL&DR summary by the one-and-only Todd Hoff of the High Scalability fame.