… updated on Wednesday, February 16, 2022 16:15 UTC

Anycast Works Just Fine with MPLS/LDP

I stumbled upon an article praising the beauties of SR-MPLS that claimed:

Yet MPLS, until recently, was deprived of anycast routing. This is because MPLS is not a pure packet switching technology, but has a control plane based on virtual circuit switching.

My first reaction was “that’s not how MPLS works,”1 followed by “that would be fun to test” a few seconds later.

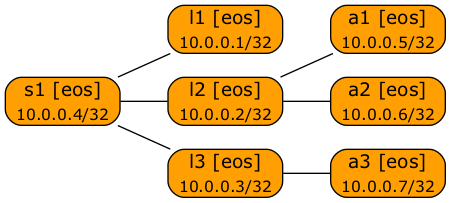

I created a tree network to test the anycast with MPLS idea:

Anycast test network

The lab is built from Arista cEOS virtual machines. The whole network is running OSPF, and MPLS/LDP will be enabled on all links. A1, A2 and A3 will advertise the same prefix (10.0.0.42/32) into OSPF. According to the “no anycast with MPLS” claim, L1 should not be able to reach all three anycast nodes.

You probably know I prefer typing CLI commands over chasing rodents2, so I used netlab to build the lab. Here’s the topology file (I don’t think it can get any simpler than that)

defaults.device: eos

module: [ ospf, mpls ]

mpls.ldp.explicit_null: True

nodes: [ l1, l2, l3, s1, a1, a2, a3 ]

links: [ s1-l1, s1-l2, s1-l3, l2-a1, l2-a2, l3-a3 ]

Next step: starting the lab with netlab up and waiting a minute or so.

I needed a custom template to create the loopback interface on the anycast nodes and advertise it into OSPF. I also had to enable LDP on the new loopback interface due to the way netlab configures LDP on Arista EOS.

interface Loopback42

ip address 10.0.0.42/32

ip ospf area 0.0.0.0

mpls ldp interface

I could use netlab config command to configure lab devices with a custom Jinja2 template, but decided to make the custom configuration part of the lab topology – anycast loopbacks will be configured every time the lab is started with netlab up. I used groups to apply the configuration templates to groups of lab devices:

defaults.device: eos

module: [ ospf,mpls ]

mpls.ldp.explicit_null: True

groups:

anycast:

members: [ a1, a2, a3 ]

config: [ ospf-anycast-loopback.j2 ]

nodes: [ l1, l2, l3, s1, a1, a2, a3 ]

links: [ s1-l1, s1-l2, s1-l3, l2-a1, l2-a2, l3-a3 ]

Smoke Test

Let’s inspect the routing tables first (hint: netlab connect is an easy way to connect to lab devices without bothering with their IP addresses or /etc/hosts file).

Here’s the routing table entry for 10.0.0.42 on L2:

l2>show ip route detail | section 10.0.0.42

O 10.0.0.42/32 [110/20] via 10.1.0.13, Ethernet2 l2 -> a1

via 10.1.0.17, Ethernet3 l2 -> a2

Likewise, S1 has two paths to the anycast prefix (through L2 and L3):

s1>show ip route detail | section 10.0.0.42

O 10.0.0.42/32 [110/30] via 10.1.0.5, Ethernet2 s1 -> l2

via 10.1.0.9, Ethernet3 s1 -> l3

What about MPLS forwarding table? Here’s the LFIB entry for 10.0.0.42 or S1. Please note that a single incoming label maps into two outgoing labels, interfaces, and next hops.

s1>show mpls lfib route detail | section 10.0.0.42

L 116386 [1], 10.0.0.42/32

via M, 10.1.0.9, swap 116385

payload autoDecide, ttlMode uniform, apply egress-acl

interface Ethernet3

via M, 10.1.0.5, swap 116384

payload autoDecide, ttlMode uniform, apply egress-acl

interface Ethernet2

And here’s the corresponding LFIB entry from L2. Please note that the anycast nodes advertise the anycast prefix with explicit-null label because I configured label local-termination explicit-null.

l2>show mpls lfib route detail | section 10.0.0.42

L 116384 [1], 10.0.0.42/32

via M, 10.1.0.13, swap 0

payload autoDecide, ttlMode uniform, apply egress-acl

interface Ethernet2

via M, 10.1.0.17, swap 0

payload autoDecide, ttlMode uniform, apply egress-acl

interface Ethernet3

The final test (traceroute from L1 to anycast IP address) failed on Arista cEOS or vEOS. The data plane implementation of Arista’s virtual devices seems to be a bit limited: even though there are several next hops in the EOS routing table and LFIB, I couldn’t find a nerd knob to force more than one entry into the Linux routing table (which is used for packet forwarding).

However, if you repeat the same tests on Cisco IOS with ip cef load-sharing algorithm include-ports source destination configured, you’ll see traceroute printouts ending on different anycast nodes:

l1#traceroute 10.0.0.42 port 80

Type escape sequence to abort.

Tracing the route to 10.0.0.42

VRF info: (vrf in name/id, vrf out name/id)

1 s1 (10.1.0.2) [MPLS: Label 25 Exp 0] 1 msec 1 msec 1 msec

2 l2 (10.1.0.5) [MPLS: Label 25 Exp 0] 1 msec 1 msec

l3 (10.1.0.9) [MPLS: Label 26 Exp 0] 1 msec

3 a3 (10.1.0.21) 1 msec *

a2 (10.1.0.17) 1 msec

After increasing the probe count (as suggested by Anonymous in the comments), the trace reaches all three anycast servers:

l1#traceroute 10.0.0.42 port 80 probe 10

Type escape sequence to abort.

Tracing the route to 10.0.0.42

VRF info: (vrf in name/id, vrf out name/id)

1 s1 (10.1.0.2) [MPLS: Label 25 Exp 0] 1 msec 1 msec...

2 l3 (10.1.0.9) [MPLS: Label 26 Exp 0] 1 msec 1 msec

l2 (10.1.0.5) [MPLS: Label 25 Exp 0] 1 msec 1 msec...

3 a2 (10.1.0.17) 1 msec *

a3 (10.1.0.21) 1 msec *

a1 (10.1.0.13) 1 msec *

a3 (10.1.0.21) 1 msec *

a1 (10.1.0.13) 1 msec *

Myth busted. Traditional MPLS offers more than P2P virtual circuits. MPLS forwarding entries follow the IP routing table entries. While I like SR-MPLS (as opposed to its ugly cousin SRv6), you don’t need it to run anycast services; LDP works just fine.

The Curse of Duplicate Addresses

While anycast works with MPLS/LDP (as demonstrated), LDP is not completely happy with the setup.

Worst case, anycast servers choose the anycast IP address as the LDP Identifier, and the adjacent devices try to connect to the anycast IP address when establishing LDP TCP session. That can’t end well – you have to set the LDP router ID to the loopback interface with unique IP address.

LDP also advertises all local IP addresses to LDP neighbors to help them map FIB next hops to LDP neighbors3. Multiple LDP neighbors advertising the same IP address make L2 decidedly unhappy4, resulting in syslog messages like this one:

Feb 16 17:57:25 l2 LdpAgent: %LDP-6-SESSION_UP: Peer LDP ID: 10.0.0.4:0, Peer IP: 10.0.0.4, Local LDP ID: 10.0.0.2:0, VRF: default

Feb 16 17:57:25 l2 LdpAgent: %LDP-6-SESSION_UP: Peer LDP ID: 10.0.0.6:0, Peer IP: 10.0.0.6, Local LDP ID: 10.0.0.2:0, VRF: default

Feb 16 17:57:25 l2 LdpAgent: %LDP-6-SESSION_UP: Peer LDP ID: 10.0.0.5:0, Peer IP: 10.0.0.5, Local LDP ID: 10.0.0.2:0, VRF: default

Feb 16 17:57:25 l2 LdpAgent: %LDP-4-DUPLICATE_PEER_INTERFACE_IP: Duplicate interface IP 10.0.0.42 detected on 10.0.0.5:0

The setup still works, but the extraneous syslog messages might upset an overly fastidious networking engineer. To make LDP happy, run BGP (not OSPF) with the anycast servers and distribute labels for anycast addresses with IPv4/IPv6 labeled unicast (BGP-LU) address family.

Next: Building a BGP Anycast Lab Continue

Build Your Own Lab

You can replicate this experiment within your browser (but you’ll be limited to Arista cEOS containers):

- Open the netlab-examples repository in GitHub Codespaces

- Install the Arista cEOS container in your codespace

- Change directory to

routing/anycast-mpls-ospf - Start the lab with clab provider: netlab up -p clab

If you want to use your own infrastructure:

- Set up a Linux server or virtual machine. If you don’t have a preferred distribution, use Ubuntu.

- Install netlab and the other prerequisite software

Next:

- Download Arista vEOS image and build a Vagrant box

- Download the lab topology file into an empty directory

- Execute netlab up

Revision History

- 2024-10-20

- Added GitHub Codespaces instructions

- 2024-08-10

- MPLS data plane works in cEOS release 4.32.1F and is supported in netlab release 1.9.0

- 2023-02-02

- Moved back to vEOS – cEOS does not support MPLS data plane and netlab refuses to configure MPLS on cEOS.

- 2021-11-17

- The curse of duplicate addresses section has been added based on feedback from Dmytro Shypovalov. Thanks a million for keeping me on the straight and narrow!

- 2021-11-18

- Added a traceroute printout with larger probe count as suggested by an anonymous commenter.

- 2022-02-16

- Rewrote the blog post to use Arista cEOS. Also added lab setup instructions.

- 2022-03-08

- Added a link to configuration tarball

Wondering if traceroute on "s1" would also reach "a1" (on third hop) as it's not shown in the output. Maybe by increasing probe count for traceroute?

Minor typo: title "Anycast MPLS entry on S1" should be "Anycast MPLS entry on L2"

MPLS is Transport, and Transport is more concerned about addressing, routing, synchronization etc, while Anycast, taken to its root, is a naming issue, so one can quickly realize there's no fundamental reason that prevents MPLS from supporting anycast. Any limitation, is strictly implementational, not technological. So it's not too surprising how even old LDP code can take on Anycast.

"MPLS is not a pure packet switching technology, but has a control plane based on virtual circuit switching." while I can see why Dmytro might have come to this conclusion, it's not really true either. It's true that MPLS is an evolution of ATM, and therefore, is not tunnel, but VC -- never understand the pointless debates about whether MPLS is tunnel or VC as the people who claim it's tunnel obviously don't understand its history -- but MPLS is more like loose VC. MPLS relies on a functional routing protocol to discover the paths, and LDP -- if it can be called MPLS control plane as MPLS control plane can consist of way more than LDP -- assigns the labels for the prefixes, so MPLS in an IP network is essentially packet-switching, not VC. If a core DCE breaks down in a VC network, the VC breaks, but in MPLS IP network, the LSP adjusts itself based on the underlying routing topology. LDP itself is a simplistic label assignment protocol, AFAIK it doesn't perform any function central to a VC control plane, so all in all MPLS deviates a fair bit from traditional VC network.

But the most important part is: MPLS is more general than both VC and packet-switch, because it's Transport, and this point is crucial. MPLS can therefore be generalized to support non-packet-switch network, in VC style or what not. So all of these points make it obvious that nothing would stop MPLS from supporting Anycast routing, or any form of routing, for that matter. And the reason this needs to be clearly identified is so vendors can't use that as an excuse to sell SR for the wrong reason.

And I never understand what the excitement is with SR, given that it's a product of SDN, itself a misconception(or a flight of fantasy) from the start. SR is more like a point solution for a few special cases, and it tries to overcome the state problems by introducing more constructs into the network, like SRGB and global segments, that can lead to tight binding and confusion, esp. at large scale.

In fact Dmytro's post already mentioned 3 deficiencies of SRGB mismatch. Introducing more moving parts, esp. global ones, into big distributed systems, always leads to complexity and unintended consequences. SR will run into problem with MPLS network where label is no longer just an abstract concept with no physical reality, but has to match the underlying resources. This 'fitting the data' requirement and SRGB can contradict each other, leading to much headache. And if one has to add a controller to SR to do TE, then one will run into all the scaling problems of centralized routing that SDN proponents have learnt the hard way. So personally I agree 100% with Greg Ferro's remark here:

https://packetpushers.net/srx6-snake-oil-or-salvation

Just a couple of comments:

Some years ago I attended a vendor's course on Segment Routing tailored for my company and the SR advocate made his debut saying that LDP didn't support ECMP ..... :(..... some of us nearly fell off our chairs but it was such a macroscopic idiocy that we didn't want to embarrass him and so we said nothing... the worrying bit though is that most of the audience didn't fall off any chair as it went through pretty unnoticed... this is just to say that there's always a lot of fertile ground around for any new technology...

Regarding the operational fragility SRGB offers us ... I'd recommend the following reading: https://datatracker.ietf.org/doc/html/draft-ietf-spring-conflict-resolution

Cheers/Ciao

Andrea