Hyper-V 3.0 Extensible Virtual Switch

It took years before the rumored Cisco vSwitch materialized (in the form of Nexus 1000V), several more years before there was the first competitor (IBM Distributed Virtual Switch), and who knows how long before the third entrant (recently announced HP vSwitch) jumps out of PowerPoint slides and whitepapers into the real world.

Compare that to the Hyper-V environment, where we have at least two virtual switches (Nexus 1000V and NEC's PF1000) mere months after Hyper-V's general availability.

The difference: Microsoft did the right thing, created an extensible vSwitch architecture, and thoroughly documented all the APIs (there's enough documentation that you can go and implement your own switch extension if you're so inclined).

A short video taken from the Virtual Firewalls webinar describes the extensible architecture of the Hyper-V virtual switch.

Short Summary

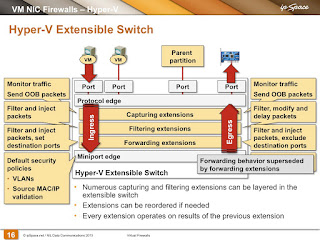

- A switch extension can be a capturing, filtering, or forwarding extension;

- Capturing extensions can capture packets and generate their own packets (example: report to an sFlow collector, implement ERSPAN functionality);

- Filtering extensions can inspect, drop (traffic policing or filtering) or delay (traffic shaping) packets, as well as generate their own packets;

- Forwarding extensions can do all of the above, plus replace the default forwarding rules (specify their own set of output ports). Each packet can be sent to one or more output ports to implement flooding, multicast, or SPAN-like behavior.

- Each extension is called twice, first on the Ingress path (input VM or port to switch), then (when the set of destination physical or virtual ports is known) on the Egress path (switch to set of output ports).
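The extension model summarized above can be illustrated with a toy Python sketch. It is not the Hyper-V API (real extensions are NDIS filter drivers); every class and function name here is hypothetical, and the order in which extensions are visited on each path is an assumption of the sketch rather than something stated in this post.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Optional, Set


@dataclass
class Packet:
    src_port: str                      # port the packet entered the switch on
    dst_mac: str                       # destination MAC address (simplified)
    out_ports: Set[str] = field(default_factory=set)


class Extension:
    """Hypothetical base class: passes every packet through unchanged."""

    def ingress(self, pkt: Packet) -> Optional[Packet]:
        return pkt

    def egress(self, pkt: Packet) -> Optional[Packet]:
        return pkt


class CountingCapture(Extension):
    """Capturing extension: observes packets, never drops or modifies them."""

    def __init__(self) -> None:
        self.seen_ingress = 0
        self.seen_egress = 0

    def ingress(self, pkt: Packet) -> Optional[Packet]:
        self.seen_ingress += 1
        return pkt

    def egress(self, pkt: Packet) -> Optional[Packet]:
        self.seen_egress += 1
        return pkt


class BlockSourceFilter(Extension):
    """Filtering extension: drops packets arriving from blocked source ports."""

    def __init__(self, blocked: Iterable[str]) -> None:
        self.blocked = set(blocked)

    def ingress(self, pkt: Packet) -> Optional[Packet]:
        return None if pkt.src_port in self.blocked else pkt


class StaticForwarder(Extension):
    """Forwarding extension: picks the output ports from its own table."""

    def __init__(self, table: Dict[str, str], all_ports: Iterable[str]) -> None:
        self.table = dict(table)
        self.all_ports = set(all_ports)

    def ingress(self, pkt: Packet) -> Optional[Packet]:
        known = self.table.get(pkt.dst_mac)
        if known is not None:
            pkt.out_ports = {known}
        else:
            # Unknown destination: flood to every port except the source.
            pkt.out_ports = self.all_ports - {pkt.src_port}
        return pkt


def switch_packet(extensions: List[Extension], pkt: Packet) -> Set[str]:
    """Run a packet through the ingress path, then the egress path."""
    current: Optional[Packet] = pkt
    for ext in extensions:
        current = ext.ingress(current)
        if current is None:            # dropped on ingress
            return set()
    # Assumption: the egress path walks the extensions in reverse order.
    for ext in reversed(extensions):
        current = ext.egress(current)
        if current is None:            # dropped on egress
            return set()
    return current.out_ports


capture = CountingCapture()
stack = [
    capture,
    BlockSourceFilter(blocked={"vm3"}),
    StaticForwarder(table={"aa": "vm2"}, all_ports={"vm1", "vm2", "vm3", "uplink"}),
]
print(switch_packet(stack, Packet("vm1", "aa")))   # known destination
print(switch_packet(stack, Packet("vm1", "ff")))   # unknown destination, flooded
print(switch_packet(stack, Packet("vm3", "aa")))   # blocked source, dropped
```

In this sketch the capturing extension sees a dropped packet on ingress but never on egress, because the filter removes it before the output ports are known.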

It seems Microsoft really learned the hard lessons of the circuitous history of virtual networking, and it looks like they did the right thing … assuming the highly extensible mechanism they implemented doesn’t bog down the switching performance. Time to do some performance tests. Any volunteers?

Thanks,

Stu Miniman

Wikibon.org

Twitter: @stu

You might want to have the same configuration interface/API and the same overlay networking model across multiple hypervisors, in which case a vSwitch running on multiple platforms (e.g. Nexus 1000V) comes in handy.

Best,

Ivan

I think we'll see more vswitches emerge in some form or another (open source extensions or commercial products).

So, is the proliferation of vswitches a good thing for users? Not sure yet, but choice is good, so that in and of itself is usually a positive thing... for the user :)

-Jason

For example, InMon developed a free traffic monitoring extension implementing the sFlow standard on the Hyper-V extensible vSwitch and our module can be combined with the default switching module, or with Cisco or NEC forwarding modules. The following article describes our experiences with the Hyper-V extensible vSwitch:

Microsoft Hyper-V

One of the reasons Apache has been so successful is the rich ecosystem of modules that has developed around it. It would be great to see vSwitches develop into similarly open platforms.

Anyway, I have a question about the Hyper-V and ESXi standard switches. I'm currently doing a performance evaluation of different vSwitches as part of my research work. My intention is to evaluate the performance of bare vSwitches by simply bridging network traffic between physical NICs (this way I can use external testing equipment). This is possible with Open vSwitch, but bridging between pNICs is disabled by default in Hyper-V and ESXi due to the lack of STP support (so traffic loops cannot occur). If I install PF1000, pNIC bridging can be enabled in Hyper-V, unlike in ESXi (even if I use Cisco Nexus 1000V).

My question, if you happen to know the answer: is there any way to disable this loop prevention in the standard ESXi vSwitch or in the standard Hyper-V switch (maybe some debug or service mode exists where this would be possible)?

The validity of such results is questionable, their usefulness is not.