Redundant DMVPN designs, Part 1 (The Basics)

Most of the DMVPN-related questions I get are variants of “how many tunnels/hubs/interfaces/areas do I need for a redundant DMVPN design?” As always, the right answer is “it depends”, but here’s what I’ve learned so far.

Single Router/Single Uplink on Spoke Site

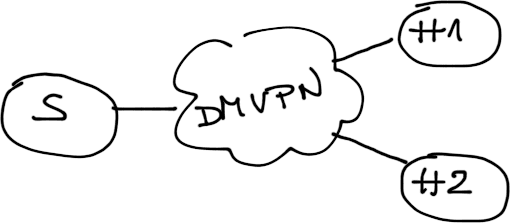

This blog post focuses on the simplest possible design – each spoke site has a single router and a single uplink. There’s no redundancy at the spokes, which might be an acceptable tradeoff (or you could use a 3G connection as a backup for DMVPN).

You’d probably want to have two hub routers (preferably with independent uplinks), which brings us to the fundamental question: “do we need one or two DMVPN clouds?”

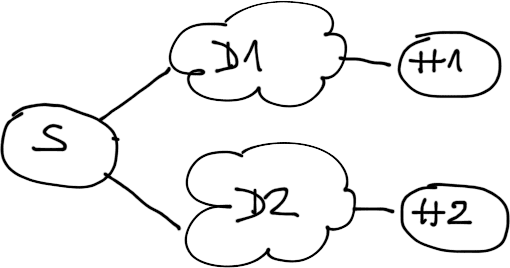

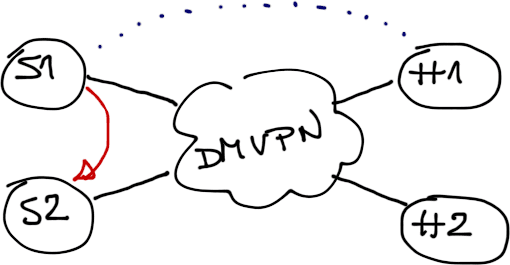

Design#1 – One Hub per DMVPN Tunnel

In this design, each hub router controls its own DMVPN subnet, and spoke routers have multiple tunnel interfaces (one per hub). Each hub router is the NHRP server for the subnet it controls, and propagates routing information between spokes.

Two DMVPN tunnels, single hub per tunnel
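Here is a minimal sketch of what the spoke side of this design might look like in Cisco IOS. All addresses, interface names, NHRP network IDs, tunnel key values, and the IPsec profile name DMVPN are illustrative assumptions rather than a tested configuration. The IPsec profile definition and the routing protocol configuration are omitted.

```text
! Spoke router: one mGRE tunnel (and one DMVPN subnet) per hub.
! All addresses, names, and key values below are illustrative assumptions.
interface Tunnel0
 description DMVPN subnet 1 (hub A)
 ip address 10.0.1.11 255.255.255.0
 ip nhrp network-id 1
 ip nhrp map 10.0.1.1 192.0.2.1
 ip nhrp map multicast 192.0.2.1
 ip nhrp nhs 10.0.1.1
 tunnel source FastEthernet0/0
 tunnel mode gre multipoint
 tunnel key 1
 tunnel protection ipsec profile DMVPN shared
!
interface Tunnel1
 description DMVPN subnet 2 (hub B)
 ip address 10.0.2.11 255.255.255.0
 ip nhrp network-id 2
 ip nhrp map 10.0.2.1 198.51.100.1
 ip nhrp map multicast 198.51.100.1
 ip nhrp nhs 10.0.2.1
 tunnel source FastEthernet0/0
 tunnel mode gre multipoint
 tunnel key 2
 tunnel protection ipsec profile DMVPN shared
```

Both tunnels share the same source interface in this sketch, which is why each one has its own tunnel key and why the shared keyword is added to the tunnel protection command.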

This design is by far the simplest, cleanest, and easiest to understand/troubleshoot – it has no intricate dependencies between routing protocols and NHRP. You can also easily deploy primary/backup hub router scenarios by increasing the interface cost of one tunnel interface, or you could load-share the traffic between both hub routers.

Load sharing across hub routers

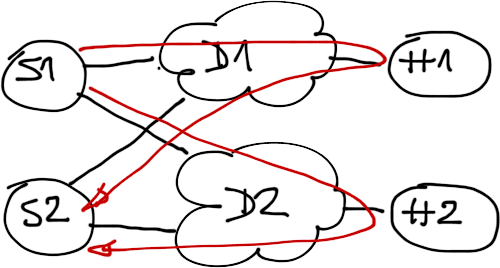
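A primary/backup setup could be sketched as follows, assuming OSPF is the routing protocol running over the tunnels. The interface name and the cost value are assumptions made for illustration.

```text
! Spoke router: prefer the tunnel toward hub A, keep hub B as backup.
! Tunnel1 and the cost value are illustrative assumptions.
interface Tunnel1
 ip ospf cost 1000
```

Leaving both tunnel interfaces at the same cost would give you equal-cost load sharing instead. You would typically make a matching change on the backup hub's tunnel interface so that return traffic prefers the same path. With EIGRP, you would adjust the interface delay rather than an OSPF cost.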

And now for the drawbacks:

- Multiple IPsec sessions could be established between a pair of spoke routers in DMVPN Phase 2 deployments (one over each tunnel interface);

You might get two IPsec sessions between a pair of spoke routers (one per tunnel)

- GRE keys are mandatory in Phase 2 deployments (otherwise the spokes cannot determine which tunnel the other spoke router was using), causing performance degradation if the hub routers don’t support GRE keys in hardware (Catalyst 6500 doesn’t);

- This design does not work with large-scale Phase 3 DMVPNs where you want each spoke to connect to a subset of hubs (you cannot establish Phase 2/3 shortcuts across DMVPN tunnels, only within a tunnel).

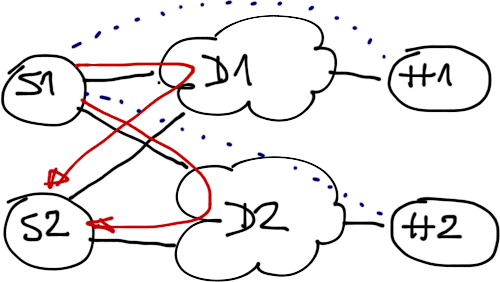

Design#2 – Multiple Hubs in a Single DMVPN Tunnel

In this design, you connect all hub routers to the same DMVPN tunnel. All hub routers act as NHRP servers, and propagate routing information between the spokes (if you use OSPF, one of the hub routers would become a DR, another one a BDR).

Single DMVPN tunnel, two hubs per tunnel
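A spoke in this design might be configured along these lines. This is a sketch only: the addresses, interface names, and the IPsec profile name DMVPN are assumptions, and the OSPF commands apply only if you run OSPF over the tunnel.

```text
! Spoke router: one mGRE tunnel, both hubs configured as NHRP servers.
! All addresses and names below are illustrative assumptions.
interface Tunnel0
 ip address 10.0.0.11 255.255.255.0
 ip nhrp network-id 1
 ip nhrp map 10.0.0.1 192.0.2.1
 ip nhrp map multicast 192.0.2.1
 ip nhrp nhs 10.0.0.1
 ip nhrp map 10.0.0.2 198.51.100.1
 ip nhrp map multicast 198.51.100.1
 ip nhrp nhs 10.0.0.2
 ip ospf network broadcast
 ip ospf priority 0
 tunnel source FastEthernet0/0
 tunnel mode gre multipoint
 tunnel protection ipsec profile DMVPN
```

Setting the OSPF priority to 0 on the spokes is one common way to make sure that only the hub routers are elected DR and BDR.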

You cannot use Phase 1 DMVPN with multiple hub routers – Phase 1 DMVPN uses P2P GRE tunnels on the spoke routers with tunnel destination set to hub router’s outside IP address.
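The restriction is easy to see in a sketch of a Phase 1 spoke tunnel. The addresses and names are again illustrative assumptions.

```text
! Phase 1 spoke: point-to-point GRE tunnel toward a single hub.
! All addresses and names below are illustrative assumptions.
interface Tunnel0
 ip address 10.0.0.11 255.255.255.0
 ip nhrp network-id 1
 ip nhrp nhs 10.0.0.1
 tunnel source FastEthernet0/0
 tunnel destination 192.0.2.1
 tunnel protection ipsec profile DMVPN
```

A point-to-point GRE tunnel has exactly one tunnel destination, so reaching a second hub requires a second tunnel interface (and thus a second DMVPN subnet).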

Phase 2/3 DMVPN designs with multiple hub routers per tunnel could experience severe convergence issues – detecting failure of a hub router could take as long as three minutes. On the benefits side, this design does not require GRE keys (which is good news if your hub router is a Catalyst 6500) or multiple IPsec sessions between spoke routers.

Spoke-to-spoke session established across a Phase2/3 DMVPN tunnel

Summary

- One hub router per DMVPN subnet is the ideal design for Phase 2 DMVPN deployments (and the only design you can use with Phase 1 DMVPN) ... unless you have a Catalyst 6500 as your hub router, in which case you must use a single DMVPN subnet due to lack of hardware GRE key support.

- One DMVPN subnet is probably the best design for Phase 3 DMVPN and it’s mandatory if you have partial spoke-to-hub NHRP connectivity.

Coming next: spoke routers with multiple uplinks and spoke sites with redundant routers.

We have multiple DMVPN hubs and spokes, with multiple ISPs on each side.

To connect it all together, we need a dedicated VRF for each ISP (outside VRFs) and a dedicated VRF for each tunnel interface (inside VRFs). Then we configure each tunnel interface as usual and redistribute routes between the inside VRFs via BGP.

With this scheme, we get all possible connectivity between any number of hubs and spokes, with any number of ISP connections each.

P.S.: Sorry for my bad English.

Also, this scheme works well with Linux (but with a static tunnel endpoint configuration on the Linux host; I have not found the time or the desire to properly configure an NHRP daemon on the Linux side).

I've been trying to find a solution for this scenario for the last three months.

I have 3 routers, each with ADSL and 3G interfaces. One of them will be the hub and the other two will be spokes. What is the best way to get redundancy in this topology? I am thinking of configuring two DMVPN clouds, one over DSL and the other over 3G. I know that by changing the metrics of the tunnel interfaces I can make the routers prefer DSL with EIGRP.

All DSL IP addresses are static and public, and the IP address of the 3G interface on the hub is also static. But the spokes' 3G IP addresses are dynamic.

So my conundrum is,

If I implement the secondary DMVPN as described above, how am I going to configure the default routes on the hub? (On the spokes I can have a specific route for the hub's 3G IP address pointing out via the spoke's 3G interface, because the hub's 3G IP address is static and already known.) But the hub already has a default route via its DSL interface. Because the spokes' 3G IP addresses are dynamic, I can't configure a route on the hub that would send its replies to ISAKMP initiation traffic received from a spoke's 3G IP address back out via the hub's 3G interface. So even though the hub receives the spokes' initiation traffic (for the secondary DMVPN) on its 3G interface, it's going to reply using its DSL interface (is it?). I think this may cause lots of issues.

Can you please point me in the right direction? Maybe there is a better way. This has been a pain in the backside for too long now.

Thank you in advance.

This design is described in my DMVPN webinars (and you get tested router configs implementing it), and also here: http://blog.ioshints.info/2012/01/redundant-dmvpn-designs-part-2-multiple.html

Thanks for your reply.

I just bought all 3 DMVPN webinars (special offer :)). Awesome stuff. I will go through those and get back to you if I still have problems.

Thanks

So my question is, can I use VRFs and still allow internal users to access the Internet without going through the hub?

What would be my best option here?

Thanks a lot for taking time for this. :)

Next, add a default route in the global routing table pointing to one of the Internet uplinks (you MUST include the interface in the static route), and configure inter-VRF NAT.

Shameless plug: inter-VRF NAT is described in detail (together with router configs) in the Enterprise MPLS/VPN webinar and my MPLS/VPN books.

I have a bunch of spokes in my dual-hub design, and some of them won't reconnect to the hub (the DMVPN configuration is the same on all of them). The "sh dmvpn" command shows "NHRP" and they get stuck. After a disable/enable cycle everything works fine. Any suggestions?