Multihop FCoE 101

The FCoE confusion spread by networking vendors has reached new heights with contradictory claims that you need TRILL to run multihop FCoE (or maybe you don’t) and that you don’t need the congestion control specified in the 802.1Qau standard (or maybe you do). Allow me to add to your confusion: they are all correct ... depending on how you implement FCoE.

Before going into details, you need to know some FC and FCoE port terminology:

| FC/FCoE term | Translated into plain English |
|---|---|
| N_Port | Fibre Channel port on a server or storage device |
| F_Port | Fibre Channel port on a switch |
| E_Port | FC port on a switch that can be used to interconnect switches |
| Domain | FC switch |
| VN_Port | Virtual N_Port. Created on an FCoE node (server or storage) to enable FCoE communication with an FCoE switch |
| VF_Port | Virtual F_Port on a switch, created as needed to establish a connection with an end node (VN_Port) |
| VE_Port | Virtual E_Port, created on an FCoE switch to link it with another FCoE switch |
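The pairings implied by the table can be written down as a small lookup. The sketch below is illustrative only: `PEER`, `can_link` and `is_virtual` are hypothetical names, not part of any FC or FCoE tooling, and special cases (such as two N_Ports connected back to back) are deliberately ignored.

```python
# Which port type sits at the other end of a link, per the table above.
# Node ports face fabric ports; inter-switch ports face each other.
PEER = {
    "N_Port": "F_Port",
    "F_Port": "N_Port",
    "E_Port": "E_Port",
    "VN_Port": "VF_Port",
    "VF_Port": "VN_Port",
    "VE_Port": "VE_Port",
}


def can_link(a: str, b: str) -> bool:
    """Return True if port type `a` is expected to connect to port type `b`."""
    return PEER.get(a) == b


def is_virtual(port: str) -> bool:
    """Virtual (FCoE) port types carry a leading 'V' in their name."""
    return port.startswith("V")


if __name__ == "__main__":
    for a, b in [("VN_Port", "VF_Port"), ("VE_Port", "VE_Port"), ("VN_Port", "VE_Port")]:
        print(a, "<->", b, ":", can_link(a, b))
```

The last pair is rejected: an end node never attaches to an inter-switch port.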

In the simplest FCoE topology, a server with a CNA (converged network adapter; a card that can send both Ethernet and FCoE traffic over the same Ethernet uplink, typically 10 Gigabit Ethernet) is connected to an FCoE-enabled switch, which has a direct connection into the legacy FC network.

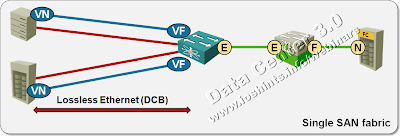

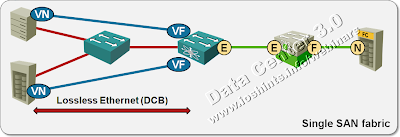

Things get murkier when you have to insert intermediate switches between the servers (VN_Ports) and the legacy FC fabric. HP and NetApp assume that the intermediate switches don’t run the FC protocol stack and support only the Data Center Bridging (DCB) standards. In that design, congestion control becomes mandatory in large networks and the stability of the bridged network is of paramount importance (thus NetApp’s recommendation to use TRILL).

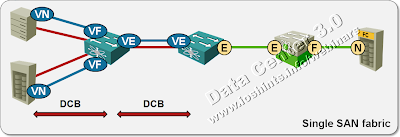

Cisco, on the other hand, is pushing another design: every intermediate switch is a full-blown FC switch, running the full FC protocol stack and participating in FSPF (FC routing). In this case, you don’t need congestion control (congestion is handled by the FC protocol stack) and you totally bypass bridging, so you don’t care whether bridging uses spanning tree, TRILL or something else.
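To keep the two designs apart, here is a minimal sketch that encodes the trade-offs described above as data. The class and function names are made up for this illustration, and the flags are only a summary of the two preceding paragraphs, not anything defined in FC-BB-5 or in a vendor's software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    congestion_control_needed: bool  # 802.1Qau; matters mostly in large networks
    bridging_matters: bool           # do you care about spanning tree vs. TRILL?
    runs_fspf: bool                  # do intermediate switches take part in FC routing?


def multihop_requirements(intermediate_is_fc_switch: bool) -> Requirements:
    """Summarize what a multihop FCoE design depends on.

    intermediate_is_fc_switch=True  -> every hop is a full FC switch
    intermediate_is_fc_switch=False -> intermediate hops are DCB-only bridges
    """
    if intermediate_is_fc_switch:
        # FC stack handles congestion and forwarding; bridging is bypassed.
        return Requirements(
            congestion_control_needed=False,
            bridging_matters=False,
            runs_fspf=True,
        )
    # DCB-only hops: FCoE frames are bridged like any other Ethernet traffic.
    return Requirements(
        congestion_control_needed=True,
        bridging_matters=True,
        runs_fspf=False,
    )


if __name__ == "__main__":
    print("DCB-only hops: ", multihop_requirements(False))
    print("Full FC switches:", multihop_requirements(True))
```

Both vendors' claims fall out of the same function; they just pass a different argument.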

The truly confusing part of the whole story: both designs (and any combination of them) are valid according to the FC-BB-5 standard; I’ll try to point out some of their benefits and drawbacks in future posts. FIP snooping and NPIV/VNP_Ports in an FCoE environment are covered in the next post.

If you liked this explanation and would like to get a more thorough introduction to new LAN, storage and server virtualization technologies, watch my Data Center 3.0 for Networking Engineers webinar (buy a recording or yearly subscription).

Cheers!

It's also possible to deploy a DCB switch that has FC visibility and forwarding intelligence, but is not a "full FCF running FSPF". Think of it like NPV for FCoE.

Cheers,

Brad

From a purely theoretical perspective: every FCoE switch with a VE_Port is an FC domain. In theory, you could have up to 239 domains per FC SAN (not just 24), but I guess you're hitting limitations of your existing gear, in which case you might consider an NPV interface between FC and FCoE.