Category: workshop

Scaling IaaS network infrastructure

I got totally fed up with the currently popular “flat-earth with long-distance bridging” architecture paradigm while developing the Data Center Interconnects webinar. It all started with the layer-2 hypervisor switches and lack of decent L3 network-side solutions; promoting non-scalable cloudy solutions doesn’t help either.

The network infrastructure would scale better if the hypervisors would work as MPLS/VPN PE-routers, but even MPLS would hit scalability limits when the number of servers grows into tens of thousands. The only truly scalable solution is IP-over-IP or MAC-over-IP implemented in the hypervisor switches.

I tried to organize all these thoughts in the “How to build a scalable IaaS cloud network infrastructure” article that was recently published by SearchTelecom ... and just a few days after the article was published, Brad Hedlund pointed me to Infrastructure as a Service Builder’s Guide document, which is saying almost the same thing (and coming to flawed conclusions because they had to promote OpenFlow and NEC).

Ignoring STP? Be careful, be very careful

A while ago I described what it takes to integrate TRILL backbone with the legacy equipment running Spanning Tree Protocol (STP). Unfortunately, Brocade decided to use a non-standard approach to BPDU handling when implementing their TRILL-like VCS fabric. VDX switches running in fabric mode can either drop incoming BPDU frames or transport them transparently across the fabric to other edge ports. Although VDX switches support STP, RSTP and MSTP (as well as RootGuard and BPDUGuard) when configured as standalone switches, the STP processing is disabled when you configure fabric mode; VCS fabric looks like a huge shared LAN segment to the end hosts and core switches.

2013-03-31: Network OS 4.0 and above supports Distributed Spanning Tree (DiST), for more details read this blog post.

NAT64: it’s all about the legacy content

Few days ago I enjoyed listening to the Teredo-bashing Packet Pushers Podcast during which Greg & the crew simply couldn’t avoid NAT64. Tom even wrote a follow-up post explaining why NAT is bad (we all agree with that) and why we shouldn’t use it in IPv6. Unfortunately he missed the elephant in the room: it’s all about the legacy content. IPv6-only residential users have to access IPv4-only content.

NHRP Convergence Issues in Multi-Hub DMVPN Networks

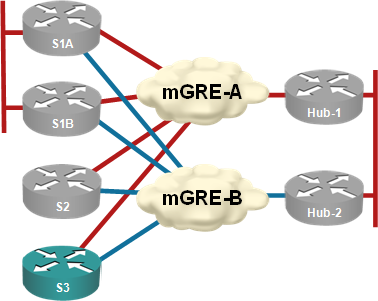

Summary for differently attentive: A hub router failure in multi-hub DMVPN networks can cause spoke-to-spoke traffic disruptions that last up to three minutes.

Almost every DMVPN design I’ve seen has multiple hubs for redundancy purposes. I’ve always preached the “one hub per DMVPN tunnel” mantra (see the diagram below) to those who were willing to listen citing “NHRP issues after hub failure” as one of the main reasons you should not have two or more hubs per DMVPN tunnel.

Each hub router controls an independent DMVPN tunnel

VPLS versus OTV for L2 Data Center Interconnect (DCI)

DJ Spry asked an interesting question in a comment to my MPLS/VPN in DCI designs post: “Why would one choose OTV over MPLS/VPN?” The answer is simple: it depends on what you need. MPLS/VPN provides path isolation between layer-3 domains (routed networks) across MPLS or IP infrastructure whereas OTV providers layer-2 transport (and VLAN-based path isolation) across IP infrastructure. However, it does make sense to compare OTV with VPLS (which was DJ Spry’s next question). Apart from the obvious platform dependence (OTV runs on Nexus 7000, VPLS runs on Catalyst 6500/Cisco 7600 and a few other routers) which might disappear once ASR1K gets the rumored OTV support, there’s a huge gap in functionality and complexity between the two layer-2 transport technologies.

(v)Cloud Architects, ever heard of MPLS?

Duncan Epping, the author of fantastic Yellow Bricks virtualization blog tweeted a very valid question following my vCDNI scalability (or lack thereof) post: “What you feel would be suitable alternative to vCD-NI as clearly VLANs will not scale well?”

Let’s start with the very basics: modern data center switches support anywhere between 1K and 4K (the theoretical limit based on 802.1q framing) VLANs. If you need more than 1K VLANs, you’ve either totally messed up your network design or you’re a service provider offering multi-tenant services (recently relabeled by your marketing department as IaaS cloud). Service Providers had to cope with multi-tenant networks for decades ... only they haven’t realized those were multi-tenant networks and called them VPNs. Maybe, just maybe, there’s a technology out there that’s been field-proven, known to scale, and works over switched Ethernet.

vCloud Director Network Isolation (vCDNI) scalability

When VMware launched its vCloud Director Networking Infrastructure, Greg Ferro (of the Packet Pushers Podcast fame) and myself were very skeptical about its scaling capabilities, more so as it uses MAC-in-MAC encapsulation and bridging was never known for its scaling properties. However, Greg’s VMware contacts claimed that vCDNI scales to thousands of physical servers and Greg wanted to do a podcast about it.

As always, we prepared a few questions in advance, including “How are broadcasts, multicasts and unknown unicasts handled in vCDNI-based private networks?” and “what happens when a single VM goes crazy?” For one reason or another, the podcast never happened. After analyzing Wireshark traces of vCDNI traffic, I probably know why that’s the case.

Brocade VCS fabric has almost-perfect load balancing

Short summary for differently-attentive: proprietary load balancing Brocade uses over ISL trunks in VCS fabric is almost perfect (and way better for high-throughput sessions than what you get with other link aggregation methods).

During the Data Center Fabrics Packet Pushers Podcast we’ve been discussing load balancing across aggregated inter-switch links and Brocade’s claims that its “chip-based balancing” performs better than standard link aggregation group (LAG) load balancing. Ever skeptical, I said all LAG load balancing is chip-based (every vendor does high-speed switching in hardware). I also added that I would be mightily impressed if they’d actually solved intra-flow packet scheduling.

NAT-PT is dead! Long live NAT-64!

I’m getting questions like this one all the time: “Where are we with NAT-PT? It was implemented in IOS quite a few years ago but it has never made it into ASA code.”

Bad news first: NAT-PT is dead. Repeat after me: NAT-PT is dead. Got it? OK.

More bad news: NAT-PT in Cisco IOS was seriously broken after they pulled fast switching code out of IOS. Whatever is left in Cisco IOS might be good enough for a proof-of-concept or early deployment trials, but not for a production-grade solution.

VRF-aware services in Cisco IOS

"Which Cisco IOS services work in a VRF?" is the question I get in almost any VRF-related discussion, so I made sure it’s covered very early in my Enterprise MPLS/VPN deployment webinar. This is the explanation I usually give in the webinar:

You can't ignore IPv6 any longer (in seven steps)

We know the world will eventually run out of IPv4 addresses, but while at least some service providers got the message and already deployed IPv6, it seems like most enterprise IT departments still practice the denial strategy. It’s worrisome to read articles from Jeff Doyle describing the ignorance of his enterprise clients, so I’ll try (yet again) to explain why you should start IPv6 planning NOW.

Does Bridge Assurance Make UDLD Obsolete?

I got an interesting question from Andrew:

Would you say that bridge assurance makes UDLD unnecessary? It doesn't seem clear from any resource I've found so far (either on Cisco's docs or on Google)."

It’s important to remember that UDLD works on physical links whereas bridge assurance works on top of STP (which also implies it works above link aggregation/port channel mechanisms). UDLD can detect individual link failure (even when the link is part a LAG); bridge assurance can detect unaggregated link failures, total LAG failure, misconfigured remote port or a malfunctioning switch.

Framed-IPv6-Prefix used as delegated DHCPv6 prefix

Chris Pollock from io Networks was kind enough to share yet another method of implementing DHCPv6 prefix delegation on PPP interfaces in his comment to my DHCPv6-RADIUS integration: the Cisco way blog post: if you tell the router not to use the Framed-IPv6-Prefix passed from RADIUS in the list of prefixes advertised in RA messages with the no ipv6 nd prefix framed-ipv6-prefix interface configuration command, the router uses the prefix sent from the RADIUS server as delegated prefix.

This setup works reliably in IOS release 15.0M. 12.2SRE3 (running on a 7206) includes the framed-IPv6-prefix in RA advertisements and DHCPv6 IA_PD reply, totally confusing the CPE.

Delegated IPv6 prefixes – RADIUS configuration

In the Building Large IPv6 Service Provider Networks webinar I described how Cisco IOS uses two RADIUS requests to authenticate an IPv6 user (request#1) and get the delegated prefix (request#2). The second request is sent with a modified username (-dhcpv6 is appended to the original username) and an empty password (the fact that is conveniently glossed over in all Cisco documentation I found).

FreeRADIUS server is smart enough to bark at an empty password, to force the RADIUS server to accept a username with no password you have to use Auth-Type := Accept:

Site-A-dhcpv6 Auth-Type := Accept

cisco-avpair = "ipv6:prefix#1=fec0:1:2400:1100::/56"

DHCPv6-RADIUS integration: the Cisco way

Yesterday I described how the IPv6 architects split the functionality of IPCP into three different protocols (IPCPv6, RA and DHCPv6). While the split undoubtedly makes sense from the academic perspective, the service providers offering PPP-based services (including DSL and retrograde uses of PPP-over-FTTH) went berserk. They were already using RADIUS to authenticate PPP users ... and were not thrilled by the idea that they should deploy DHCPv6 servers just to make the protocol stack look nicer.