Category: switching

Speculation: This is how I would build QFabric

Three months after the QFabric launch, the details remain shrouded in mystical clouds, so let’s try to speculate what they could be hiding. We have two well-known facts:

- QFabric has three components: QF/Node (edge device), QF/Interconnect (high-speed core device) and QF/Director (the brains).

- Juniper is strong in the Service Provider technologies, including MPLS, MPLS/VPN, VPLS and BGP. It’s also touting its BGP MPLS-based MAC VPN technology (too long to write more than once, let’s call it BMMV).

I am positive Juniper would never try to build a monster single-brain fabric with Borg or Big Brother architecture as they simply don’t scale (as the OpenFlow crowd will learn in a few years).

Ignoring STP? Be careful, be very careful

A while ago I described what it takes to integrate TRILL backbone with the legacy equipment running Spanning Tree Protocol (STP). Unfortunately, Brocade decided to use a non-standard approach to BPDU handling when implementing their TRILL-like VCS fabric. VDX switches running in fabric mode can either drop incoming BPDU frames or transport them transparently across the fabric to other edge ports. Although VDX switches support STP, RSTP and MSTP (as well as RootGuard and BPDUGuard) when configured as standalone switches, the STP processing is disabled when you configure fabric mode; VCS fabric looks like a huge shared LAN segment to the end hosts and core switches.

2013-03-31: Network OS 4.0 and above supports Distributed Spanning Tree (DiST), for more details read this blog post.

Edge Virtual Bridging (EVB; 802.1Qbg) eases VLAN configuration pains

Challenge: If you want to deploy virtual machines belonging to different security zones within the same physical host, you have to isolate them. VLANs are the most common approach. If you want to migrate a running VM from one host to another while preserving its user sessions, you usually have to rely on bridging. The set of VLANs needed on a trunk link between the hypervisor host and access switch is thus unpredictable (more information in my VMware Networking Deep Dive webinar)

Solution#1 (painful): Configure all possible VLANs on the trunk link. Stretched VLANs spanning the whole data center are an ideal ingredient of a major meltdown.

OpenFlow 1.1 in hardware: I was wrong (again)

Earlier this month I wrote “we’ll probably have to wait at least a few years before we’ll see a full-blown hardware product implementing OpenFlow 1.1.” (and probably repeated something along the same lines in during the OpenFlow Packet Pushers podcast). I was wrong (and I won’t split hairs and claim that an academic proof-of-concept doesn’t count). Here it is: @nbk1 pointed me to a 100 Gbps switch implementing the latest-and-greatest OpenFlow 1.1.

New Data Center switches from Force10

Force10 has just launched a new series of data center switches. The ZettaScale switches are, as one would expect from Force10, down-to-earth high-performance low-footprint products – a good option for those network engineers that like building high-density high-performance data centers with minimal feature overload.

All the information in this post is based on the briefing I’ve received from Force10 last week, the draft materials they sent me and the subsequent answers to my questions. I haven’t been able to touch the boxes or read the product documentation yet.

What is OpenFlow?

A typical networking device (bridge, router, switch, LSR …) has control and data plane. The control plane runs all the control protocols (including port aggregation, STP, TRILL, MAC address learning and routing protocols) and downloads the forwarding instructions into the data plane structures, which can be simple lookup tables or specialized hardware (hash tables or TCAMs).

Don’t lie about proprietary protocols

A few months ago Brocade launched its own version of Data Center Fabric (VCS) and the VDX series of switches claiming that:

The Ethernet Fabric is an advanced multi-path network utilizing an emerging standard called Transparent Interconnection of Lots of Links (TRILL).

Those familiar with TRILL were immediately suspicious as some of the Brocade’s materials mentioned TRILL in the same sentence as FSPF, but we could not go beyond speculations. The Brocade’s Network OS Administrator’s Guide (Supporting Network OS v2.0, December 2010) shows a clear picture.

The Data Center Fabric architectures

Have you noticed how quickly fabric got as meaningless as switching and cloud? Everyone is selling you data center fabric and no two vendors have something remotely similar in mind. You know it’s always more fun to look beyond white papers and marketectures and figure out what’s really going on behind the scenes (warning: you might be as disappointed as Dorothy was). I was able to identify three major architectures (at least two of them claiming to be omnipotent fabrics).

Business as usual

Each networking device (let’s confuse everyone and call them switches) works independently and remains a separate management and configuration entity. This approach has been used for decades in building the global Internet and thus has proven scalability. It also has well-known drawbacks (large number of managed devices) and usually requires thorough design to scale well.

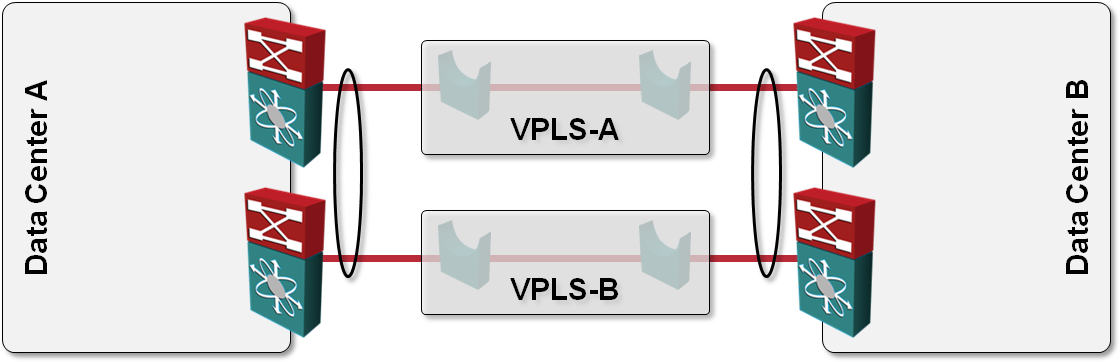

L2 DCI with MLAG over VPLS transport?

One of the answers I got to my “How would you use VPLS transport in L2 DCI” question was also “Can’t you just order two VPLS services, use them as P2P links and bundle the two links into a multi-chassis link aggregation group (MLAG)?” like this:

How Did We Ever Get Into This Switching Mess?

If you’re confused about the numerous meanings of a switch, you’re not the only one. If you wonder how the whole mess started, here’s the full story (from the biased perspective of a grumpy GONER):

In the early 1980s, there were no bridges or routers. Hosts communicated directly with each other or used intermediate nodes (usually hosts, sometimes dedicated devices called gateways) to pass traffic. Networking engineers’ lives would have remained simple were it not for a few overly bright engineers at DEC who decided their application (LAT) would run directly on layer 2 to make it faster.

vSwitch in Multi-chassis Link Aggregation (MLAG) environment

Yesterday I described how the lack of LACP support in VMware’s vSwitch and vDS can limit the load balancing options offered by the upstream switches. The situation gets totally out-of-hand when you connect an ESX server with two uplinks to two (or more) switches that are part of a Multi-chassis Link Aggregation (MLAG) cluster.

Let’s expand the small network described in the previous post a bit, adding a second ESX server and another switch. Both ESX servers are connected to both switches (resulting in a fully redundant design) and the switches have been configured as a MLAG cluster. Link aggregation is not used between the physical switches and ESX servers due to lack of LACP support in ESX.

Intelligent Redundant Framework (IRF) – Stacking as Usual

When I was listening to the Intelligent Redundant Framework (IRF) presentation from HP during the Tech Field Day 2010 and read the HP/H3C IRF 2.0 whitepaper afterwards, IRF looked like a technology sent straight from Data Center heavens: you could build a single unified fabric with optimal L2 and L3 forwarding that spans the whole data center (I was somewhat skeptical about their multi-DC vision) and behaves like a single managed entity.

No wonder I started drawing the following highly optimistic diagram when creating materials for the Data Center 3.0 webinar, which includes information on Multi-Chassis Link Aggregation (MLAG) technologies from numerous vendors.

Multi-Chassis Link Aggregation (MLAG) and Hot Potato Switching

There are two reasons one would bundle parallel Ethernet links into a port channel (official term is Link Aggregation Group):

- Transforming parallel links into a single logical link bypasses Spanning Tree Protocol loop avoidance logic; all links belonging to the port channel can be active at the same time (see also: Multi-Chassis Link Aggregation basics).

- Load sharing across parallel links in a port channel increases the total bandwidth available between adjacent L2 switches or between routers/hosts and switches.

Ethan Banks wrote an excellent explanation of traditional port channel caveats (proving that 1+1 sometimes does not equal 2); things get way worse when you start using Multi-Chassis Link Aggregation due to hot potato switching (the switch tries to forward packets toward destination MAC address as soon as possible) used by all MLAG implementations I’m familiar with.

VMware Virtual Switch: no need for STP

During the Data Center 3.0 webinar I always mention that you can connect a VMware ESX server (with embedded virtual switch) to the network through multiple active uplinks without link aggregation. The response is very predictable: I get a few “how does that work” questions in the next seconds.

VMware did a great job with the virtual switch embedded in the VMware hypervisor (vNetwork Standard Switch – vSS – or vNetwork Distributed Switch – vDS): it uses special forwarding rules (I call them split horizon switching, Cisco UCS documentation uses the term End Host Mode) that prevent forwarding loops without resorting to STP or port blocking.

Data Center Bridging (DCB) Congestion Notification (802.1Qau)

The last (and the least popular) Data Center Bridging (DCB) standard tries to solve the problem of congestion in large bridged domains (PFC enables lossless transport and ETS standardizes DWRR queuing). To illustrate the need for congestion control, consider a simple example shown in the following diagram:

It came to my attention that a vendor might be using this blog post to justify the need for QCN in FCoE environments. Should that be the case, please make sure you also read about the difference between dense and sparse FCoE, the (lack of) need for QCN in FCoE and whether it makes sense to run FCoE over TRILL. Finally, consider how you’ll troubleshoot FCoE environments.