Category: link aggregation

Is MLAG an Alternative to Stackable Switches?

Alex was trying to figure out how to use Catalyst 3850 switches and sent me this question:

Is MLAG an alternative to use rather than physically creating a switch stack?

Let’s start with some terminology.

Link Aggregation Group (LAG) is the ability to bond multiple Ethernet links into a single virtual link. LAG (as defined in 802.1ax standard) can be used between a pair of adjacent nodes. While that’s good enough if you need more bandwidth it doesn’t help if you want to increase redundancy of your solution by connecting your edge device to two switches while using all uplinks and avoiding the shortcomings of STP. Sounds a bit like trying to keep the cake while eating it.

Link Aggregation in OpenFlow Environment

One of my readers couldn’t figure out how to combine Link Aggregation Groups (LAG, aka Port Channel) with OpenFlow:

I believe that in LAG, every traditional switch would know how to forward the packet from its FIB. Now with OpenFlow, does the controller communicate with every single switch and populate their tables with one group ID for each switch? Or how does the controller figure out the information for multiple switches in the LAG?

As always, the answer is “it depends”, and this time we’re dealing with a pretty complex issue.

vLAG Caveats in Brocade VCS Fabric

Brocade VCS fabric has one of the most flexible multichassis link aggregation group (LAG) implementation – you can terminate member links of an individual LAG on any four switches in the VCS fabric. Using that flexibility is not always a good idea.

2015-01-23: Added a few caveats on load distribution

Micro-BFD: BFD over LAG (Port Channel)

The discussion in the comments to my LAG versus ECMP post took a totally unexpected turn when someone mentioned BFD failure detection over port channels (link aggregation groups – LAGs).

What’s the big deal?

LAG versus ECMP

Bryan sent me an interesting question:

When you have the opportunity to use LAG or ECMP, what are some things you should consider?

He already gathered some ideas (thank you!), and I expanded his list and added a few comments.

Purpose: resiliency or more bandwidth? For resiliency you want fast failure detection and the ability to connect to multiple uplink devices, for more bandwidth, you want better hashing.

vSphere Does Not Need LAG Bandaids – the Network Might

Chris Wahl claimed in one of his recent blog posts that vSphere doesn't need LAG band-aids. He's absolutely right – vSphere’s loop prevention logic alleviates the need for STP-blocked links, allowing you to use full server uplink bandwidth without the complexity of link aggregation. Now let’s consider the networking perspective.

Link Aggregation with Stackable Data Center Top-of-Rack Switches

Tomas Kubica made an interesting comment to my Stackable Data Center Switches blog post: “Suppose all your servers have 4x 10G port and you bundle them to LACP NIC team [...] With this stacking link is not going to be used for your inter-server traffic if all servers have active connections to all nodes of your ToR stack.” While he’s technically correct, the idea of having four 10GE ports on each server just to cater to the whims of stackable switches is somewhat hard to sell.

Do we need LACP and UDLD?

The Nexus-focused Packet Pushers were discussing a great question during Cisco Nexus Deep Dive part 2 podcast: do we need LACP on top of UDLD?

Short answer: absolutely.

Beware of fabric-wide Link Aggregation Groups

Fernando made a very valid comment to my Monkey Design Still Doesn’t Work Well post: if we would add a few more links between edge and core (fabric) switches to that network, we might get optimal bandwidth utilization in the core. As it turns out, that’s not the case.

FCoE and LAG – industry-wide violation of FC-BB-5?

Anyone serious about high-availability connects servers to the network with more than one uplink, more so when using converged network adapters (CNA) with FCoE. Losing all server connectivity after a single link failure simply doesn’t make sense.

If at all possible, you should use dynamic link aggregation with LACP to bundle the parallel server-to-switch links into a single aggregated link (also called bonded interface in Linux). In theory, it should be simple to combine FCoE with LAG – after all, FCoE runs on top of lossless Ethernet MAC service. In practice, there’s a huge difference between theory and practice.

Nexus 1000V LACP offload and the dangers of in-band control

A while ago someone sent me the following comment as part of a lengthy discussion focusing on Nexus 1000V: “My SE tells me that the latest 1000V release has rewritten the LACP code so that it operates entirely within the VEM. VSM will be out of the picture for LACP negotiations. I guess there have been problems.”

If you’re not convinced you should be running LACP between the ESX hosts and the physical switches, read this one (and this one). Ready? Let’s go.

Don’t Try to Fake Multi-chassis Link Aggregation (MLAG)

Martin sent me an interesting challenge: he needs to connect an HP switch in a blade enclosure to a pair of Catalyst 3500G switches. His Catalysts are not stackable and he needs the full bandwidth between the switches, so he decided to fake the multi-chassis link aggregation functionality by configuring static LAG on the HP switch and disabling STP on it (the Catalysts have no idea they’re talking to the same switch):

The Data Center Fabric architectures

Have you noticed how quickly fabric got as meaningless as switching and cloud? Everyone is selling you data center fabric and no two vendors have something remotely similar in mind. You know it’s always more fun to look beyond white papers and marketectures and figure out what’s really going on behind the scenes (warning: you might be as disappointed as Dorothy was). I was able to identify three major architectures (at least two of them claiming to be omnipotent fabrics).

Business as usual

Each networking device (let’s confuse everyone and call them switches) works independently and remains a separate management and configuration entity. This approach has been used for decades in building the global Internet and thus has proven scalability. It also has well-known drawbacks (large number of managed devices) and usually requires thorough design to scale well.

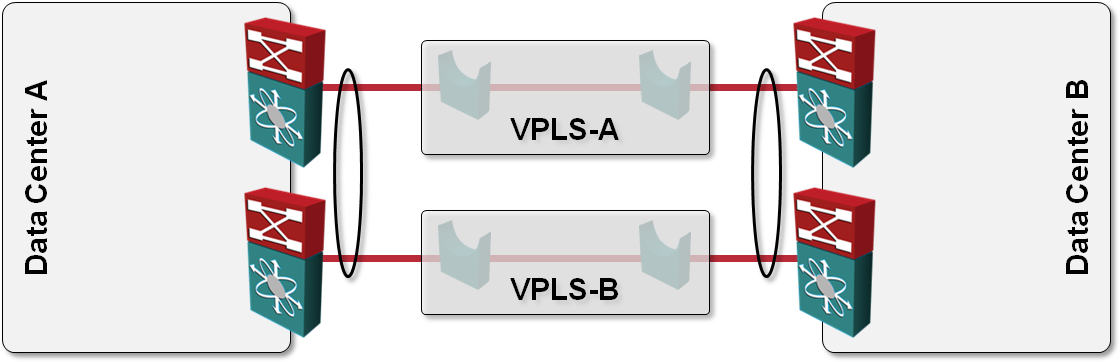

L2 DCI with MLAG over VPLS transport?

One of the answers I got to my “How would you use VPLS transport in L2 DCI” question was also “Can’t you just order two VPLS services, use them as P2P links and bundle the two links into a multi-chassis link aggregation group (MLAG)?” like this:

vSwitch in Multi-chassis Link Aggregation (MLAG) environment

Yesterday I described how the lack of LACP support in VMware’s vSwitch and vDS can limit the load balancing options offered by the upstream switches. The situation gets totally out-of-hand when you connect an ESX server with two uplinks to two (or more) switches that are part of a Multi-chassis Link Aggregation (MLAG) cluster.

Let’s expand the small network described in the previous post a bit, adding a second ESX server and another switch. Both ESX servers are connected to both switches (resulting in a fully redundant design) and the switches have been configured as a MLAG cluster. Link aggregation is not used between the physical switches and ESX servers due to lack of LACP support in ESX.