Category: data center

Stackable Data Center Switches? Do the Math!

Imagine you have a typical 2-tier data center network (because 3-tier is so last millennium): layer-2 top-of-rack switches redundantly connected to a pair of core switches running MLAG (to get around spanning tree limitations) and IP forwarding between VLANs.

Next thing you know, a rep from your favorite vendor comes along and says: “did you know you could connect all ToR switches into a virtual fabric and manage them as a single entity?” Is that a good idea?

VXLAN Is Not a Data Center Interconnect Technology

In a comment to the Firewalls in a Small Private Cloud blog post I wrote “VXLAN is NOT a viable inter-DC solution” and Jason wasn’t exactly happy with my blanket response. I hope Jason got a detailed answer in the VXLAN Technical Deep Dive webinar; here’s a somewhat shorter explanation.

Building Leaf-and-Spine Fabrics with Dell Force10 Switches

In the Clos Fabrics Explained webinar I focused on the principles of operation and design options of Clos fabrics, and Brad Hedlund, who graciously agreed to be my guest, explained how you can use Dell Force10 switches to build them. In this video he describes a simple leaf-and-spine topology with 40GE uplinks.

What Exactly Are Virtual Firewalls?

Kaage added a great comment to my Virtual Firewall Taxonomy post:

And many of physical firewalls can be virtualized. One physical firewall can have multiple virtual firewalls inside. They all have their own routing table, rule base and management interface.

He’s absolutely right, but there’s a huge difference between security contexts (to use the ASA terminology) and firewalls running in VMs.

VM-level IP Multicast over VXLAN

Dumlu Timuralp (@dumlutimuralp) sent me an excellent question:

I always get confused when thinking about IP multicast traffic over VXLAN tunnels. Since VXLAN already uses a Multicast Group for layer-2 flooding, I guess all VTEPs would have to receive the multicast traffic from a VM, as it appears as L2 multicast. Am I missing something?

Short answer: no, you’re absolutely right. IP multicast over VXLAN is clearly suboptimal.

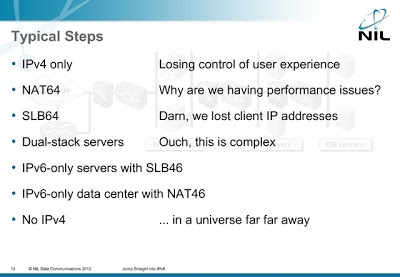

Skip the Transitions, Build IPv6-Only Data Centers

During last week’s IPv6 Summit I presented an interesting idea first proposed by Tore Anderson: let’s skip all the transition steps and implement IPv6-only data centers.

You can view the presentation or watch the video; for more details (including the description of routing tricks to get this idea working with vanilla NAT64), watch Tore’s RIPE64 presentation.

Is Layer-3 DCI Safe?

One of my readers sent me a great question:

I agree with you that L2 DCI is like driving without a seat belt. But is L3 DCI safer in case of DCI link failure? Let's say you have your own AS and PI addresses in use. Your AS spans multiple sites and there are external BGP peers on each site. What happens if the L3 DCI breaks? How will that impact your services?

Simple answer: while L3 DCI is orders of magnitude safer than L2 DCI, it will eventually fail, and you have to plan for that.

If Something Can Fail, It Will

During a recent consulting engagement I had an interesting conversation with an application developer who couldn’t understand why a fully redundant data center interconnect cannot guarantee 100% availability.

Commented on July 10, 2022

SDN, Career Choices and Magic Graphs

The current explosion of SDN hype (further fueled by the recent VMworld announcement of Software-Defined Data Centers) made some networking engineers understandably nervous. This is the question I got from one of them:

I have 8 plus years in Cisco, have recently passed my CCIE RS theory, and was looking forward to complete the lab test when this SDN thing hit me hard. Do you suggest completing the CCIE lab looking at this new future of Networking?

Short answer: the sky is not falling, CCIE still makes sense, and IT will still need networking people.

Cisco Nexus 3548: A Victory for Custom ASICs?

Autumn must be a perfect time for data center product launches: last week Brocade launched its core VDX switch and yesterday Arista and Cisco launched their new low-latency switches (yeah, the simultaneous launch must have been pure coincidence).

I had the opportunity to listen to Cisco’s and Arista’s product briefings, continuously experiencing a weird feeling of déjà vu. The two switches look like twin brothers … but there are some significant differences between the two:

Arista launches the first hardware VXLAN termination device

Arista is launching a new product line today shrouded in mists of SDN and cloud buzzwords: the 7150 series top-of-rack switches. As expected, the switches offer up to 64 10GE ports with wire speed L2 and L3 forwarding and 400 nanosecond(!) latency.

Also expected from Arista: unexpected creativity. Instead of providing a 40GE port on the switch that can be split into four 10GE ports with a breakout cable (like everyone else is doing), these switches group four physical 10GE SFP+ ports into a native 40GE (not 4x10GE LAG) interface.

But wait, there’s more...

Building Large L3 Fabrics with Brocade VDX Switches

A few days ago the title of this post would have been one of those “find the odd word out” puzzles. How can you build large L3 fabrics when you have to work with ToR switches with no L3 support, and you can’t connect more than 24 of them in a fabric? All that has changed with the announcement of VDX 8770 – a monster chassis switch – and a new version of Brocade’s Network OS with layer-3 (IP) forwarding.

Do we need LACP and UDLD?

The Nexus-focused Packet Pushers were discussing a great question during the Cisco Nexus Deep Dive part 2 podcast: do we need LACP on top of UDLD?

Short answer: absolutely.

QFabric Behind the Curtain: I was spot-on

A few days ago Kurt Bales and Cooper Lees gave me access to a test QFabric environment. I always wanted to know what was really going on behind the QFabric curtain and the moment Kurt mentioned he was able to see some of those details, I was totally hooked.

Short summary: QFabric works exactly as I’d predicted three months before the user-facing documentation became publicly available (the behind-the-scenes view described in this blog post is probably still hard to find).

Midokura’s MidoNet: a Layer 2-4 virtual network solution

Almost everyone agrees the current way of implementing virtual networks with dumb hypervisor switches and top-of-rack kludges (including Edge Virtual Bridging – EVB or 802.1Qbg – and 802.1BR) doesn’t scale. Most people working in the field (with the notable exception of some hardware vendors busy protecting their turf in the NVO3 IETF working group) also agree virtual networks running as applications on top of an IP fabric are the only reasonable way to go ... but that’s all they currently agree upon.