Building Large L3 Fabrics with Brocade VDX Switches

A few days ago the title of this post would have been one of those “find the odd word out” puzzles. How could you build large L3 fabrics when you had to work with ToR switches with no L3 support, and you couldn’t connect more than 24 of them in a fabric? All that has changed with the announcement of the VDX 8770 (a monster chassis switch) and a new version of Brocade’s Network OS with layer-3 (IP) forwarding.

Let’s start with the VDX 8770: it’s a chassis-based switch with either four or eight slots, and the chassis provides 4Tbps of bandwidth to each slot. Initial linecards include 48-port 10GE and 12-port 40GE modules (there's also a 48-port 1GE module, but that's so last year), for a total of 384 10GE ports per switch (equivalent to Arista’s high-end boxes and half of what you can squeeze into a Nexus 7018 with F2 linecards). The real difference between the VDX 8770 and other high-end switches lies in the forwarding tables: the VDX 8770 supports up to 384K MAC addresses per linecard (Arista’s 7500 and Nexus F2 linecards support up to 16K MAC addresses) and more than 100K ARP entries (competitors: 16K).
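The per-switch port count is simply slot count times linecard density. A minimal Python sketch, assuming a fully populated 8-slot chassis with the same linecard type in every slot (the constant and function names are illustrative only):

```python
# Front-panel port counts for a fully populated 8-slot VDX 8770,
# using the linecard densities quoted above (48 x 10GE, 12 x 40GE).
SLOTS = 8                  # 8-slot chassis
PORTS_10GE_PER_CARD = 48   # 48-port 10GE linecard
PORTS_40GE_PER_CARD = 12   # 12-port 40GE linecard


def chassis_ports(slots: int, ports_per_card: int) -> int:
    """Ports per chassis when every slot holds the same linecard type."""
    return slots * ports_per_card


ports_10ge = chassis_ports(SLOTS, PORTS_10GE_PER_CARD)
ports_40ge = chassis_ports(SLOTS, PORTS_40GE_PER_CARD)

# Aggregate front-panel bandwidth (Gbps) is the same with either linecard type.
bw_10ge_gbps = ports_10ge * 10
bw_40ge_gbps = ports_40ge * 40

print(ports_10ge, ports_40ge)      # 384 96
print(bw_10ge_gbps, bw_40ge_gbps)  # 3840 3840
```

The 96 40GE ports per chassis are the building block for the four-switch core discussed below.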

If you’re going to build large fabrics running heavily virtualized workloads, you just might need that many forwarding entries ... unless you plan to deploy one of the MAC-over-IP solutions (VXLAN or NVGRE) over an IP-only fabric, in which case the core switches need to know nothing else but the MAC and ARP entries of the ToR switches.

I can’t tell you much about the layer-3 support in the new Network OS. Sanjib HomChaudhuri, Brocade’s director of product management, told me that the hardware of all VDX switches (including the ones launched two years ago) supports layer-3 forwarding, and that all of them will become L3 switches with the new Network OS. I’ll wait for the software documentation to be released before saying anything more.

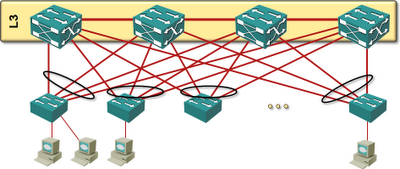

However, there’s one very interesting feature Sanjib mentioned: up to four VDX switches can act as a single first-hop gateway (IP router), sharing the same IP and MAC address. Arista can do a similar trick with Virtual ARP (but not in a layer-2 fabric, because they don’t have a layer-2 fabric technology), and Cisco offers four-router GLBP in FabricPath (using four different MAC addresses, so it’s not a true load balancing solution). Once the new Network OS becomes available, Brocade’s VCS fabric will be the only solution where four core switches can truly share the IP forwarding load.

Obviously every switch in the fabric can do L3 forwarding, but four of them can share the same MAC and IP address with the upcoming release of Network OS.

Four seems to be a magic number for Brocade. With the current Network OS, up to four switches in a fabric can terminate an aggregated link (LAG, Port Channel) from an external device. Let’s see how we can use that to our advantage.

Imagine a network core built from four fully populated 8-slot VDX 8770 switches. Leaving aside a few ports for inter-switch links, you get around 380 edge-facing 40GE ports. Combine them with any Trident+-based ToR switch (for example, Dell’s S4810, Cisco’s Nexus 3000 or Juniper’s QFX3500) at a 3:1 oversubscription ratio (48 x 10GE edge ports and 4 x 40GE uplinks on each ToR switch), and you can build an L2/L3 fabric with ~4500 10GE ports (roughly 95 ToR switches with 48 edge ports each), with L3 forwarding optimally distributed across the four core switches.
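The fabric size is back-of-the-envelope arithmetic. The Python sketch below works it through under a few stated assumptions: every slot in the four core switches holds a 12-port 40GE linecard, four ports are set aside for inter-switch links (a hypothetical number standing in for "a few"), and each ToR switch runs one 40GE uplink to each core switch, so its four uplinks can form a single LAG terminated on all four cores.

```python
# Back-of-the-envelope sizing of the four-core fabric (assumptions noted inline).
CORE_SWITCHES = 4
SLOTS_PER_CORE = 8
PORTS_40GE_PER_CARD = 12
RESERVED_FOR_ISL = 4   # hypothetical: "a few ports" kept for inter-switch links

TOR_EDGE_10GE = 48     # server-facing 10GE ports per Trident+ ToR switch
TOR_UPLINK_40GE = 4    # assumed: one 40GE uplink to each core switch

core_40ge_ports = CORE_SWITCHES * SLOTS_PER_CORE * PORTS_40GE_PER_CARD  # 384
edge_facing_40ge = core_40ge_ports - RESERVED_FOR_ISL                   # 380

# Each ToR switch consumes TOR_UPLINK_40GE core ports.
tor_switches = edge_facing_40ge // TOR_UPLINK_40GE                      # 95
edge_10ge_ports = tor_switches * TOR_EDGE_10GE                          # 4560

# Oversubscription = edge bandwidth / uplink bandwidth per ToR switch.
oversubscription = (TOR_EDGE_10GE * 10) / (TOR_UPLINK_40GE * 40)        # 480/160

print(core_40ge_ports, edge_facing_40ge, tor_switches, edge_10ge_ports)
print(f"{oversubscription:.0f}:1")  # 3:1
```

The 4560 ports computed here are what the text rounds down to ~4500.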

Obviously you could also use Brocade's VDX switches as ToR switches, but none of them has 40GE uplinks today.

You can connect 2250 dual-homed servers to those 4500 ports, but let’s make it 2000, because unless you believe in the infinite flexibility of VMware’s new vMotion without shared storage, you’ll want some storage arrays connected to the fabric as well. With a 50:1 virtualization ratio, which you can easily get with the RAM-heavy servers most vendors sell these days, that’s around 100,000 VMs ... probably more than most data centers or VDI deployments will need in the next few weeks.
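Continuing the same back-of-the-envelope calculation in Python (a sketch only; the 500 leftover ports are just what remains after reserving ports for 2000 dual-homed servers, not a storage sizing recommendation):

```python
# Servers and VMs supported by the ~4500-port fabric.
EDGE_10GE_PORTS = 4500   # rounded fabric size from the previous paragraph
PORTS_PER_SERVER = 2     # every server is dual-homed
SERVERS = 2000           # rounded down to leave room for storage arrays
VMS_PER_SERVER = 50      # 50:1 virtualization ratio

max_servers = EDGE_10GE_PORTS // PORTS_PER_SERVER                       # 2250
ports_left_for_storage = EDGE_10GE_PORTS - SERVERS * PORTS_PER_SERVER   # 500
total_vms = SERVERS * VMS_PER_SERVER                                    # 100000

print(max_servers, ports_left_for_storage, total_vms)  # 2250 500 100000
```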

More information

You’ll find fabric design guidelines (including numerous L2 and L3 designs) in the upcoming Leaf-and-Spine Fabrics webinar.

Also, your comment system does not support OpenID 2. I could not use my i-name ("=chris.hills").

Ale

Just a minor correction, if I may. You said, "...chassis providing 4Tbps of bandwidth to each slot", but I believe the 4Tbps applies to the backplane switching bandwidth and not each slot.

Best.

Brook.

https://www.google.com/search?q=brocade+vdx+8770+%22per+slot%22

But their documentation also states "4Tbps backplane" for both the 4-slot and 8-slot chassis options. I don't know if 4Tbps is the system limit or the slot limit, and whether or not that is simplex or duplex.

And a correction to my correction :-)... In fact this is designed for 4 Tbps PER SLOT, full duplex for the 8-slot chassis.

Best.

Brook.

And the fact that Brocade can do MLAG with four switches doesn't seem very extraordinary... Juniper can do that with more than 10 switches, implicitly, with Virtual Chassis...

The L3 support for all VDX switches, the ToR VDX 67xx and modular VDX 8770, comes with the latest NOS 3.0 release. You don't have to "buy something", just upgrade the firmware :-)

My problem here is that the other 2 major VDX flavors have very different limits for MAC tables and putting them into the same fabric nerfs the 8770 to the lowest limit. Still and all, they should work great with the Dell F10 40gb switches in their blade centers.

Each SFM has three 48 x 14 Gbps connections to form the backplane, for a total of 2.02 Tbps.

VDX 8770-4 uses 3 SFM = 6Tbps

VDX 8770-8 uses 6 SFM = 12 Tbps

Regards,

Ernesto