Cloudy thoughts

We have a public holiday today, so I’ll spend the morning with my kids instead of writing yet another whatever-does-not-scale post. However, I did stumble across two fantastic cartoons that I simply have to share with you.

Nicira uncloaked

Nicira, the OpenFlow startup behind the Open vSwitch, has finally dropped the stealthy cloak. Congratulations!!! Their web site is still pretty sparse on details, but you can get an initial impression of what they’re doing from a number of white papers describing Network Virtualization Platform and DVNI architecture. Short summary: I was almost right, but being a routing-and-switching bloke missed a few interesting bits – OpenFlow (and Open vSwitch) can easily combine security and forwarding functionality.

Virtual Circuits in OpenFlow 1.0 World

Two days ago I described how you can use tunneling or labeling to reduce the forwarding state in the network core (which you have to do if you want to have reasonably fast convergence with currently-available OpenFlow-enabled switches). Now let’s see what you can do in the very limited world of OpenFlow 1.0.

Easy Virtual Network (EVN) – nothing new under the sun

For whatever reason, Easy Virtual Network (EVN), a configuration sugar-glaze on top of VRF-lite (oops, multi-VRF) that has been lurking in the shadows for the last 18 months erupted into the twittersphere after Cisco’s latest switching launch. I can’t possibly understand why the implementation of a decade-old technology on mature platform (Catalyst 4500 and Catalyst 6500) makes news at the time when 40GE and 100GE interfaces were launched, but the intricacies of marketing always somehow escaped me.

Forwarding State Abstraction with Tunneling and Labeling

Yesterday I described how the limited flow setup rates offered by most commercially-available switches force the developers of production-grade OpenFlow controllers to drop the microflow ideas and focus on state abstraction (people living in a dreamland usually go in a totally opposite direction). Before going into OpenFlow-specific details, let’s review the existing forwarding state abstraction technologies.

FIB Update Challenges in OpenFlow Networks

Last week I described the problems high-end service provider routers (or layer-3 switches if you prefer that terminology) face when they have to update large number of entries in the forwarding tables (FIBs). Will these problems go away when we introduce OpenFlow into our networks? Absolutely not, OpenFlow is just another mechanism to download forwarding entries (this time from an external controller) not a laws-of-physics-changing miracle.

Prefix-Independent Convergence (PIC): Fixing the FIB Bottleneck

Did you rush to try OSPF Loop-Free Alternate on a Cisco 7200 after reading my LFA blog post… and disappointedly discovered that it only works on Cisco 7600? The reason is simple: while LFA does add feasible-successor-like behavior to OSPF, its primary mission is to improve RIB-to-FIB convergence time.

Loop-Free Alternate: OSPF Meets EIGRP

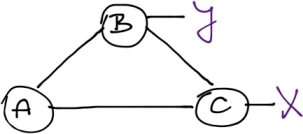

Assume we have a simple triangular network:

Now imagine the A-to-C link fails. How will OSPF react to the link failure as compared to EIGRP? Which one will converge faster? Try to answer the questions before pressing the Read more link ;)

VXLAN runs over UDP – does it matter?

Scott Lowe asked a very good question in his Technology Short Take #20:

VXLAN uses UDP for its encapsulation. What about dropped packets, lack of sequencing, etc., that is possible with UDP? What impact is that going to have on the “inner protocol” that’s wrapped inside the VXLAN UDP packets? Or is this not an issue in modern networks any longer?

Short answer: No problem.

Redundant DMVPN Designs, Part 2 (Multiple Uplinks)

In the Redundant DMVPN Design, Part 1 I described the options you have when you want to connect non-redundant spokes to more than one hub. In this article, we’ll go a step further and design hub and spoke sites with multiple uplinks.

Public IP Addressing

Fact: DMVPN tunnel endpoints have to use public IP addresses or the hub/spoke routers wouldn’t be able to send GRE/IPsec packets across the public backbone.

DHCPv6 Prefix Delegation with Radius Works in IOS Release 15.1

A while ago I described the pre-standard way Cisco IOS used to get delegated IPv6 prefixes from a RADIUS server. Cisco’s documentation always claimed that Cisco IOS implements RFC 4818, but you simply couldn’t get it to work in IOS releases 12.4T or 15.0M. In December I wrote about the progress Cisco is making on the DHCPv6 front and [email protected] commented that IOS 15.1S does support RFC 4818. You know I absolutely had to test that claim ... and it’s true!

… updated on Tuesday, November 17, 2020 16:49 UTC

IP Renumbering in Disaster Avoidance Data Center Designs

It’s hard for me to admit, but there just might be a corner use case for split subnets and inter-DC bridging: even if you move a cold VM between data centers in a controlled disaster avoidance process (moving live VMs rarely makes sense), you might not be able to change its IP address due to hard-coded IP addresses, be it in application code or configuration files.

Disaster recovery is a different beast: if you’ve lost the primary DC, it doesn’t hurt if you instantiate the same subnet in the backup DC.

IPv6 ND Managed-Config-Flag Is Just a Hint

IPv6 hosts can use stateless or stateful autoconfiguration. Stateless address autoconfiguration (SLAAC) uses IPv6 prefixes from Router Advertisement (RA) messages; stateful autoconfiguration uses DHCPv6. The routers can use two flags in RA messages to tell the attached end hosts which method to use:

- Managed-Config-Flag tells the end-host to use DHCPv6 exclusively;

- Other-Config-Flag tells the end-host to use SLAAC to get IPv6 address and DHCPv6 to get other parameters (DNS server address, for example).

- Absence of both flags tells the end-host to use only SLAAC.

One might assume that setting managed-config-flag in RA messages forces IPv6 hosts to use DHCPv6. Wrong, the two flags are just a polite suggestion.

Redundant DMVPN designs, Part 1 (The Basics)

Most of the DMVPN-related questions I get are a variant of the “how many tunnels/hubs/interfaces/areas do I need for a redundant DMVPN design?” As always, the right answer is “it depends”, but here’s what I’ve learned so far.

Single Router/Single Uplink on Spoke Site

This blog post focuses on the simplest possible design – each spoke site has a single router and a single uplink. There’s no redundancy at the spokes, which might be an acceptable tradeoff (or you could use 3G connection as a backup for DMVPN).

You’d probably want to have two hub routers (preferably with independent uplinks), which brings us to the fundamental question: “do we need one or two DMVPN clouds?”

… updated on Wednesday, November 18, 2020 06:44 UTC

Filter Inbound BGP Prefixes: Summary

I got plenty of responses to the How could we filter extraneous BGP prefixes post, some of them referring to emerging technologies and clean-slate ideas, others describing down-to-earth approaches. Thank you all, you’re fantastic!

Almost everyone in the “down-to-earth” category suggested a more or less aggressive inbound filter combined with default routing toward upstream ISPs. Ideally the upstream ISPs would send you responsibly generated default route, or you could use static default routes toward well-known critical infrastructure destinations (like root name servers).