EVPN Designs: IBGP Full Mesh Between Leaf Switches

In the previous blog post in the EVPN Designs series, we explored the simplest possible VXLAN-based fabric design: static ingress replication without any L2VPN control plane. This time, we’ll add the simplest possible EVPN control plane: a full mesh of IBGP sessions between the leaf switches.

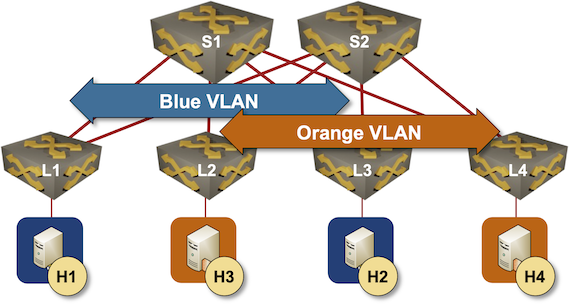

This is the fabric we’re working with:

Leaf-and-spine fabric with two VLANs

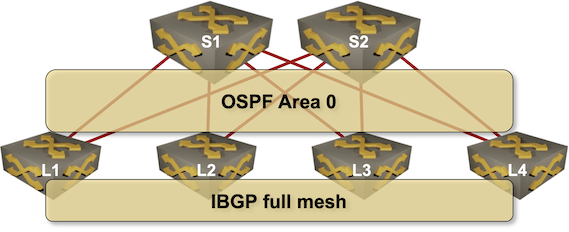

And this is how we’ll use routing protocols in our fabric:

- Leaf- and spine switches are running OSPF

- There’s a full mesh of IBGP sessions between the leaf switches.

- IBGP is used solely to transport EVPN address family.

Full mesh of IBGP sessions between the leaf switches

Let’s set up a lab and try it out. We’ll use a lab setup similar to the VXLAN-only fabric; read that blog post (in particular the Creating the Lab Environment section) to get started.

Leaf-and-Spine Lab Topology

This is the netlab lab topology description we’ll use to set up IBGP full mesh carrying EVPN updates in our leaf-and-spine fabric.

```yaml
defaults.device: eos
provider: clab

plugin: [ fabric ]
fabric.spines: 2
fabric.leafs: 4

bgp.as: 65000
bgp.activate.ipv4: []

groups:
  _auto_create: True
  leafs:
    module: [ ospf, bgp, vlan, vxlan, evpn ]
  spines:
    module: [ ospf ]
  hosts:
    members: [ H1, H2, H3, H4 ]
    device: linux

vlan.mode: bridge

vlans:
  orange:
    links: [ H1-L1, H2-L3 ]
  blue:
    links: [ H3-L2, H4-L4 ]

tools:
  graphite:
```

Most of the topology file is explained in the previous blog post; all we had to do to get from a VXLAN fabric to a full-blown EVPN fabric was make three changes[1]:

- Line 8: The BGP AS number used in the lab.[2]

- Line 9: Our fabric will have BGP sessions between IPv4 loopback addresses, but we won’t run the IPv4 address family over these sessions.

- Line 14: We also run BGP and EVPN on the leaf switches.

Assuming you already did the previous homework, it’s time to start the lab with the netlab up command. You can also start the lab in a GitHub Codespace (the directory is EVPN/ibgp-full-mesh); you’ll still have to import the Arista cEOS container, though.

Behind the Scenes

This is the relevant part of the configuration of L1 running Arista EOS (you can view complete configurations for all switches on GitHub).

```
router bgp 65000
   router-id 10.0.0.1
   no bgp default ipv4-unicast
   bgp advertise-inactive
   neighbor 10.0.0.2 remote-as 65000
   neighbor 10.0.0.2 update-source Loopback0
   neighbor 10.0.0.2 description L2
   neighbor 10.0.0.2 send-community standard extended large
   neighbor 10.0.0.3 remote-as 65000
   neighbor 10.0.0.3 update-source Loopback0
   neighbor 10.0.0.3 description L3
   neighbor 10.0.0.3 send-community standard extended large
   neighbor 10.0.0.4 remote-as 65000
   neighbor 10.0.0.4 update-source Loopback0
   neighbor 10.0.0.4 description L4
   neighbor 10.0.0.4 send-community standard extended large
   !
   vlan 1000
      rd 10.0.0.1:1000
      route-target import 65000:1000
      route-target export 65000:1000
      redistribute learned
   !
   address-family evpn
      neighbor 10.0.0.2 activate
      neighbor 10.0.0.3 activate
      neighbor 10.0.0.4 activate
   !
   address-family ipv4
      network 10.0.0.1/32
```

Let’s walk through the changes:

- Line 1: We’re starting a BGP routing process.

- Line 3: Configuring an IPv4 BGP neighbor does not automatically activate the IPv4 address family for that neighbor.

- Lines 2 and 4: netlab sets a few parameters to ensure a working BGP configuration under all circumstances. These parameters are not relevant to our discussion.

- Lines 5-16: We have to define the IBGP neighbors (all other leaf switches).

- Line 18: Configuring a VLAN under the BGP routing process creates an EVPN instance (MAC VRF) on Arista EOS.

- Lines 19-21: netlab manages EVPN route targets and route distinguishers. You could use rd auto; Arista EOS documentation claims you could use automatic route targets, but I couldn’t find the corresponding configuration command.

- Lines 24-27: We must activate the EVPN address family for every IBGP neighbor to exchange EVPN routes with the other leaf switches.
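The configuration is highly regular. Every other leaf gets the same four neighbor lines plus one `activate` line under `address-family evpn`. The RD and route targets follow the *loopback:VLAN* and *AS:VLAN* patterns visible in L1’s configuration. The minimal Python sketch below renders an equivalent `router bgp` block from a list of peers and VLANs. The `leaf_bgp_config` function and its parameters are hypothetical and are not netlab’s code. The RD/RT patterns are inferred from the single VLAN configured on L1.

```python
from typing import Dict, List


def leaf_bgp_config(asn: int, loopback: str, peers: Dict[str, str], vlans: List[int]) -> str:
    """Render an EOS-style 'router bgp' block for one leaf (hypothetical helper).

    peers maps each remote leaf's loopback IP to its name (used as the description).
    """
    lines = [
        f"router bgp {asn}",
        f"   router-id {loopback}",
        "   no bgp default ipv4-unicast",
        "   bgp advertise-inactive",
    ]
    # Four lines per IBGP neighbor (all other leaves), sessions sourced from Loopback0
    for ip, name in peers.items():
        lines += [
            f"   neighbor {ip} remote-as {asn}",
            f"   neighbor {ip} update-source Loopback0",
            f"   neighbor {ip} description {name}",
            f"   neighbor {ip} send-community standard extended large",
        ]
    # One MAC VRF per VLAN; RD = loopback:VLAN, RT = AS:VLAN (pattern assumed from L1)
    for vlan in vlans:
        lines += [
            "   !",
            f"   vlan {vlan}",
            f"      rd {loopback}:{vlan}",
            f"      route-target import {asn}:{vlan}",
            f"      route-target export {asn}:{vlan}",
            "      redistribute learned",
        ]
    # Activate the EVPN address family for every IBGP neighbor
    lines += ["   !", "   address-family evpn"]
    lines += [f"      neighbor {ip} activate" for ip in peers]
    # Same ipv4 stanza as in L1's configuration
    lines += ["   !", "   address-family ipv4", f"      network {loopback}/32"]
    return "\n".join(lines)


cfg = leaf_bgp_config(
    65000, "10.0.0.1", {"10.0.0.2": "L2", "10.0.0.3": "L3", "10.0.0.4": "L4"}, [1000]
)
print(cfg)
```

Rendering L1 produces the same lines as L1’s configuration above. Adding a leaf adds five lines to the configuration of every existing leaf, which is why you want a tool to generate them for you.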

Does It Work?

Let’s look around a bit. BGP neighbors are active, and they’re exchanging BGP prefixes:

```
L1>show bgp evpn summary
BGP summary information for VRF default
Router identifier 10.0.0.1, local AS number 65000
Neighbor Status Codes: m - Under maintenance
  Description   Neighbor  V AS     MsgRcvd MsgSent InQ OutQ  Up/Down State  PfxRcd PfxAcc
  L2            10.0.0.2  4 65000       39      39   0    0 00:26:08 Estab  2      2
  L3            10.0.0.3  4 65000       39      39   0    0 00:26:10 Estab  2      2
  L4            10.0.0.4  4 65000       39      38   0    0 00:26:10 Estab  2      2
```

Each leaf switch advertises the MAC address of its attached host (H1 through H4) in a mac-ip route, and its VTEP IP address in an imet route that the other leaf switches use for BUM flooding.

```
L1>show bgp evpn
BGP routing table information for VRF default
Router identifier 10.0.0.1, local AS number 65000
Route status codes: * - valid, > - active, S - Stale, E - ECMP head, e - ECMP
                    c - Contributing to ECMP, % - Pending best path selection
Origin codes: i - IGP, e - EGP, ? - incomplete
AS Path Attributes: Or-ID - Originator ID, C-LST - Cluster List, LL Nexthop - Link Local Nexthop

          Network                Next Hop              Metric  LocPref Weight  Path
 * >      RD: 10.0.0.4:1001 mac-ip aac1.ab45.fdc3
                                 10.0.0.4              -       100     0       i
 * >      RD: 10.0.0.1:1000 mac-ip aac1.ab4a.93bb
                                 -                     -       -       0       i
 * >      RD: 10.0.0.2:1001 mac-ip aac1.aba9.4d50
                                 10.0.0.2              -       100     0       i
 * >      RD: 10.0.0.3:1000 mac-ip aac1.abbc.7c69
                                 10.0.0.3              -       100     0       i
 * >      RD: 10.0.0.1:1000 imet 10.0.0.1
                                 -                     -       -       0       i
 * >      RD: 10.0.0.2:1001 imet 10.0.0.2
                                 10.0.0.2              -       100     0       i
 * >      RD: 10.0.0.3:1000 imet 10.0.0.3
                                 10.0.0.3              -       100     0       i
 * >      RD: 10.0.0.4:1001 imet 10.0.0.4
                                 10.0.0.4              -       100     0       i
```
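Why does every neighbor report two prefixes, and why does L1 hold routes for VLAN 1001, which it doesn’t even have? In an IBGP full mesh, a leaf doesn’t re-advertise IBGP-learned routes to other IBGP peers. Each neighbor therefore sends only the routes it originated: one imet route for its VLAN and one mac-ip route for its host. As the printout shows, L1 also receives (and keeps in its BGP table) the VLAN 1001 routes originated by L2 and L4.

Here’s a small Python model of the lab that reproduces those counts. It’s a sketch, not a BGP implementation, and it doesn’t filter by route target either. The VLAN IDs (1000 for orange, 1001 for blue) are inferred from the RDs in the printout, and all names are made up for illustration.

```python
# Host attachments from the netlab topology (orange: H1-L1, H2-L3; blue: H3-L2, H4-L4).
# VLAN IDs 1000/1001 are inferred from the RDs in the printout (assumption).
ATTACHMENTS = {
    "L1": [(1000, "H1")],
    "L2": [(1001, "H3")],
    "L3": [(1000, "H2")],
    "L4": [(1001, "H4")],
}
LOOPBACK = {f"L{n}": f"10.0.0.{n}" for n in range(1, 5)}


def originated_routes(leaf):
    """Routes a leaf originates: one imet route per local VLAN, one mac-ip route per host."""
    lo = LOOPBACK[leaf]
    attached = ATTACHMENTS[leaf]
    imet = [("imet", f"{lo}:{vlan}", lo) for vlan in sorted({v for v, _ in attached})]
    mac_ip = [("mac-ip", f"{lo}:{vlan}", host) for vlan, host in attached]
    return imet + mac_ip


def routes_received(receiver):
    """Prefixes each IBGP peer sends to `receiver` in a full mesh.

    IBGP speakers don't re-advertise IBGP-learned routes to other IBGP peers,
    so each peer sends only what it originated (no route-target filtering here).
    """
    return {peer: len(originated_routes(peer)) for peer in LOOPBACK if peer != receiver}


print(routes_received("L1"))  # {'L2': 2, 'L3': 2, 'L4': 2}
print(sum(len(originated_routes(leaf)) for leaf in LOOPBACK))  # 8 entries in L1's table
```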

The imet routes are used to build the ingress replication lists:

```
L1>show vxlan vtep detail
Remote VTEPS for Vxlan1:

VTEP           Learned Via         MAC Address Learning       Tunnel Type(s)
-------------- ------------------- -------------------------- --------------
10.0.0.3       control plane       control plane              unicast, flood

Total number of remote VTEPS:  1
```
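Building an ingress replication list boils down to matching the route target attached to each imet route against the route targets imported into local EVPN instances. The following Python sketch models that. It’s a simplification: one route target per route and no RD handling. The function and variable names are illustrative, and the 65000:1001 route target for the blue VLAN is an assumption based on the 65000:1000 pattern used on L1.

```python
from collections import defaultdict

# imet routes from the printout: (originating VTEP, exported route target).
# The 65000:1001 route target for VLAN 1001 is assumed, not shown in the printouts.
IMET_ROUTES = [
    ("10.0.0.1", "65000:1000"),
    ("10.0.0.2", "65000:1001"),
    ("10.0.0.3", "65000:1000"),
    ("10.0.0.4", "65000:1001"),
]


def flood_lists(local_vtep, import_rts, imet_routes):
    """Map each local VLAN to the remote VTEPs that receive a copy of its BUM traffic."""
    lists = defaultdict(list)
    for vtep, rt in imet_routes:
        if vtep == local_vtep:
            continue  # never replicate to ourselves
        for vlan, import_rt in import_rts.items():
            if rt == import_rt:
                lists[vlan].append(vtep)
    return dict(lists)


print(flood_lists("10.0.0.1", {1000: "65000:1000"}, IMET_ROUTES))  # {1000: ['10.0.0.3']}
```

The result matches the `show vxlan vtep` printout: L1 floods VLAN 1000 BUM traffic only to 10.0.0.3, and the VTEPs serving VLAN 1001 never make it into its list.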

The mac-ip routes are used to build VLAN MAC address tables:

```
L1>show mac address-table vlan 1000
          Mac Address Table
------------------------------------------------------------------

Vlan    Mac Address       Type        Ports      Moves   Last Move
----    -----------       ----        -----      -----   ---------
1000    aac1.ab4a.93bb    DYNAMIC     Et3        1       0:03:11 ago
1000    aac1.abbc.7c69    DYNAMIC     Vx1        1       0:03:11 ago
Total Mac Addresses for this criterion: 2
```

The MAC address reachable over the VXLAN interface sits behind VTEP 10.0.0.3. L3 advertised it to L1 in an EVPN update.

```
L1>show vxlan address-table
          Vxlan Mac Address Table
----------------------------------------------------------------------

VLAN  Mac Address     Type      Prt  VTEP             Moves   Last Move
----  -----------     ----      ---  ----             -----   ---------
1000  aac1.abbc.7c69  EVPN      Vx1  10.0.0.3         1       0:03:27 ago
Total Remote Mac Addresses for this criterion: 1
```
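The remote part of the MAC address table is populated in a similar way. A mac-ip route whose route target matches a local EVPN instance becomes a MAC address entry pointing to the originating VTEP over the VXLAN interface. Below is a minimal Python sketch using the MAC addresses from the printouts. Like the previous sketch, it assumes the 65000:1001 route target for VLAN 1001 and ignores everything except the MAC address, the originating VTEP, and the route target. The names are illustrative.

```python
# mac-ip routes from the BGP table: (MAC address, originating VTEP, route target).
# Route targets for VLAN 1001 are assumed (65000:1001); they aren't shown in the printouts.
MAC_IP_ROUTES = [
    ("aac1.ab45.fdc3", "10.0.0.4", "65000:1001"),
    ("aac1.ab4a.93bb", "10.0.0.1", "65000:1000"),
    ("aac1.aba9.4d50", "10.0.0.2", "65000:1001"),
    ("aac1.abbc.7c69", "10.0.0.3", "65000:1000"),
]


def remote_mac_table(local_vtep, import_rts, routes):
    """Return {(vlan, mac): remote VTEP} for MAC addresses reachable over Vxlan1."""
    table = {}
    for mac, vtep, rt in routes:
        if vtep == local_vtep:
            continue  # locally learned MACs stay on the physical port (Et3 on L1)
        for vlan, import_rt in import_rts.items():
            if rt == import_rt:
                table[(vlan, mac)] = vtep
    return table


print(remote_mac_table("10.0.0.1", {1000: "65000:1000"}, MAC_IP_ROUTES))
# {(1000, 'aac1.abbc.7c69'): '10.0.0.3'}
```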

Was It Worth the Effort?

TL;DR: Barely.

Let’s start with the benefits:

- The ingress replication lists are built automatically.

- Each VLAN (EVPN instance) has an independent ingress replication list, which means that the client traffic is flooded only to the VTEPs with a compatible (according to route targets) EVPN instance. That’s a massive win in Carrier Ethernet networks where a customer port often has a single VLAN. It is somewhat irrelevant in data center environments where we usually configure every VLAN on every server port so we don’t have to talk with the server team.

What about the drawbacks?

- We introduced a new routing protocol and a new technology. While it looks simple, it might be fun trying to troubleshoot it at the proverbial 2 AM on a Sunday morning.

- The configurations can become quite lengthy unless you generate them automatically. Whether you are ready to do that is a different question[3].

- Managing the full mesh of IBGP sessions is obviously a nightmare. However, things will improve once we add route reflectors.
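A quick back-of-the-envelope calculation shows how badly the full mesh scales. With n leaves you need n(n-1)/2 IBGP sessions, and every leaf needs n-1 neighbor definitions. The following Python sketch assumes five configuration lines per neighbor, as in L1’s configuration (four `neighbor` lines plus one `activate` line). The function names are illustrative.

```python
def full_mesh_sessions(leaves: int) -> int:
    """Number of IBGP sessions in a full mesh of `leaves` switches."""
    return leaves * (leaves - 1) // 2


def neighbor_config_lines(leaves: int, lines_per_neighbor: int = 5) -> int:
    """Neighbor-related lines on each leaf (assumes 4 neighbor lines + 1 activate line)."""
    return (leaves - 1) * lines_per_neighbor


for n in (4, 16, 64):
    print(f"{n} leaves: {full_mesh_sessions(n)} sessions, "
          f"{neighbor_config_lines(n)} neighbor-related lines per leaf")
# 4 leaves: 6 sessions, 15 neighbor-related lines per leaf
# 16 leaves: 120 sessions, 75 neighbor-related lines per leaf
# 64 leaves: 2016 sessions, 315 neighbor-related lines per leaf
```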

Should you choose EVPN when building a small data center that provides VLAN connectivity? Probably not[4]. EVPN starts to make sense when you use advanced features like proxy ARP, multi-subnet VRFs, or the much-praised active-active multihoming[5].


[1] Sometimes it pays off to have a flexible high-level tool ;)

[2] The BGP AS number has to be specified as a global parameter, or the EVPN module won’t be able to use it to set route targets or route distinguishers.

[3] Regardless of what the automation evangelists (myself included) tell you.

[4] Unless you want to play with a new toy or boost your resume.

[5] The server team might not want the link aggregation anyway. Most virtualization solutions are happier with multiple independent uplinks.
