Optimizing the Time-to-First-Byte

I don’t think I’ve ever met anyone who said “I wish my web application would run slower.” Everyone wants their stuff to run faster, but most environments are not willing to pay the cost (rearchitecting the application). Welcome to the wonderful world of PowerPoint “solutions”.

The obvious answer: The Cloud. Let’s move our web servers closer to the clients – deploy them in various cloud regions around the world. Mission accomplished.

Not really; the laws of physics (latency in particular) will kill your wonderful idea. I wrote about the underlying problems years ago and wrote another blog post focused on the misconceptions of cloudbursting, but I’m still getting questions along the same lines. Time for another blog post, this time with even more diagrams.

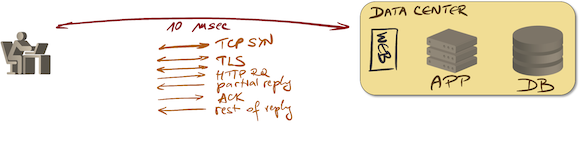

Let’s assume your customer sits 10 msec away from your data center.

Optimizing Time to First Byte: The Challenge

When the client tries to open a web page for the first time, it takes a long while before the first usable byte arrives at the web browser[1]:

- One round-trip time (RTT) is spent on the TCP handshake (SYN, SYN/ACK).

- Two more RTTs are spent negotiating TLS (a full TLS 1.2 handshake; TLS 1.3 needs only one).

- Another RTT is spent sending the HTTP request and receiving the initial few packets of the response.

- At least one more RTT is spent receiving the rest of the HTTP response.
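The list above boils down to simple arithmetic. Here is a back-of-the-envelope sketch of it; the function names and the sample RTT values are made up for illustration, and server processing time is ignored:

```python
# Round trips needed before the first usable byte arrives,
# taken from the list above (TLS counted as two RTTs, as in this post).
RTTS_BEFORE_FIRST_BYTE = {
    "tcp_handshake": 1,
    "tls_negotiation": 2,
    "http_request_and_first_packets": 1,
}
# At least one more RTT is needed to receive the rest of the response.
MIN_EXTRA_RTTS_FOR_FULL_RESPONSE = 1


def time_to_first_byte_ms(rtt_ms: float) -> float:
    """Network-only time to first byte; server processing time is ignored."""
    return sum(RTTS_BEFORE_FIRST_BYTE.values()) * rtt_ms


def min_full_response_ms(rtt_ms: float) -> float:
    """Lower bound on the time needed to receive the whole response."""
    return time_to_first_byte_ms(rtt_ms) + MIN_EXTRA_RTTS_FOR_FULL_RESPONSE * rtt_ms


if __name__ == "__main__":
    # Hypothetical RTT values, for illustration only.
    for rtt in (20, 100, 250):
        print(
            f"RTT {rtt} ms: first byte after {time_to_first_byte_ms(rtt)} ms, "
            f"full response after at least {min_full_response_ms(rtt)} ms"
        )
```

The absolute numbers matter less than the multiplier: every millisecond of added RTT costs at least four milliseconds before the browser sees anything useful.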

Too many round-trip times kill performance

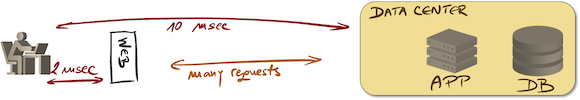

You could solve this conundrum with a new protocol that reduces the number of RTTs needed to establish a session (see: HTTP/3) or you could deploy the web server closer to the client:

Deploying a web server closer to the client

Guess what… as I explained several times, pulling the web server away from the underlying infrastructure only makes the situation worse: a web application usually makes many back-end requests to collect the data needed by a single client request.
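To see why, here is a rough sketch comparing the two placements. It assumes the back-end requests are made one after another, counts four RTTs for session setup and the HTTP request (TCP, TLS, HTTP), and uses invented RTT values and request counts; real applications will differ:

```python
# RTTs spent on TCP + TLS + HTTP request before the first byte,
# as counted earlier in this post.
SESSION_RTTS = 4


def first_byte_ms(client_rtt_ms: float, backend_rtt_ms: float, backend_requests: int) -> float:
    """Time to first byte when the web server makes sequential back-end requests."""
    client_side = SESSION_RTTS * client_rtt_ms
    backend_side = backend_requests * backend_rtt_ms
    return client_side + backend_side


if __name__ == "__main__":
    requests_per_page = 20  # hypothetical number of back-end requests

    # Web server next to its back-end: long client RTT, short back-end RTT.
    in_data_center = first_byte_ms(100, 1, requests_per_page)
    # Web server moved next to the client: short client RTT, long back-end RTT.
    near_client = first_byte_ms(10, 90, requests_per_page)

    print(f"web server in the data center: {in_data_center} ms")
    print(f"web server close to the client: {near_client} ms")
```

With these made-up numbers the "closer" web server is several times slower, because the long RTT is now paid once per back-end request instead of four times per session.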

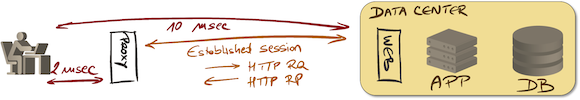

The only working solution is thus a web proxy – a local web server that terminates the client session and uses an existing HTTP session with the back-end web server, spending a single RTT for the HTTP request and response.
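The same style of estimate shows what the proxy buys you. This is a simplified sketch under stated assumptions: the proxy already has an open HTTP session to the origin, the origin's own processing and back-end requests are ignored, and the RTT values are hypothetical:

```python
# RTTs spent on TCP + TLS + HTTP request before the first byte,
# as counted earlier in this post.
SESSION_RTTS = 4


def direct_first_byte_ms(client_origin_rtt_ms: float) -> float:
    """Client talks straight to the origin web server."""
    return SESSION_RTTS * client_origin_rtt_ms


def proxied_first_byte_ms(client_proxy_rtt_ms: float, proxy_origin_rtt_ms: float) -> float:
    """Client session terminates on a nearby proxy.

    The proxy reuses an existing session to the origin, so the long path
    is crossed only once (request out, response back).
    """
    return SESSION_RTTS * client_proxy_rtt_ms + proxy_origin_rtt_ms


if __name__ == "__main__":
    # Hypothetical values: the origin is 100 ms away, the proxy 10 ms away.
    print(f"direct:  {direct_first_byte_ms(100)} ms")
    print(f"proxied: {proxied_first_byte_ms(10, 90)} ms")
```

The handshakes still happen, but over the short client-to-proxy path; only a single round trip crosses the long path to the origin.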

Deploying a web proxy closer to the client

Using web proxies has another advantage: if you deploy web servers all around the world you have to manage them, but if you settle for a web proxy you can buy it as a service from any CDN provider. I’m using Cloudflare; you could easily get the same service from AWS, Azure, or a dozen other companies.

For more information, watch the TCP, HTTP and SPDY webinar. For even more details, read the awesome High Performance Browser Networking book by Ilya Grigorik.

[1] A lot of discussions are focused on Time to First Byte, but what some companies are interested in is really Time to First Ad ;)

Your blog post doesn’t mention caching in regard to CDNs (Content Delivery Networks), which is also an optimization mechanism.

The latency on typical client access is more like 50 ms, as most ISPs carry their traffic to large IXs, which are mostly geographically far away from clients.

Also, a proxy is just a server in a colocation or IX rack. Any remote management over an OOB interface can work here.

Akamai spent years and a lot of money deploying servers deeply everywhere, and built an overlay from them to send data from any point in the world to any other point.