Single-Metric Unequal-Cost Multipathing Is Hard

A while ago, we discussed whether unequal-cost multipathing (UCMP) makes sense (TL&DR: rarely), and whether we could implement it in link-state routing protocols (TL&DR: yes). Even though we could modify OSPF or IS-IS to support UCMP, and Cisco IOS XR even implemented those changes (they are not exactly widely used), the results are… suboptimal.

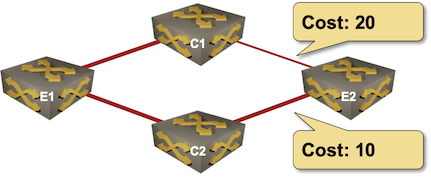

Imagine a simple network with four nodes, three equal-bandwidth links, and a link that has half the bandwidth of the other three:

It obviously makes sense to spread the load between E1 and E2 in a 2:1 ratio (⅔ of the traffic going over C2 and ⅓ of the traffic going over C1). That’s not what you’d get if you add the link costs together. The cost of E1-C1-E2 is 30, the cost of E1-C2-E2 is 20, and thus a split inversely proportional to the path cost would be 2:3 (or something similar): roughly 40% of the traffic over C1 and 60% over C2.
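The arithmetic can be checked with a minimal sketch. It assumes the traffic share of each path is inversely proportional to its total cost (one plausible reading of "or something similar"; real implementations may differ), and it expresses the bottleneck bandwidth of each path in made-up relative units. The function names are hypothetical.

```python
from fractions import Fraction

def inverse_cost_shares(path_costs):
    """Split traffic in inverse proportion to total path cost (assumed UCMP rule)."""
    weights = {path: Fraction(1, cost) for path, cost in path_costs.items()}
    total = sum(weights.values())
    return {path: weight / total for path, weight in weights.items()}

def bandwidth_shares(path_bandwidths):
    """Split traffic in proportion to the bottleneck bandwidth of each path."""
    total = sum(path_bandwidths.values())
    return {path: Fraction(bw, total) for path, bw in path_bandwidths.items()}

# Path costs taken from the text above
path_costs = {"E1-C1-E2": 30, "E1-C2-E2": 20}
# Relative bottleneck bandwidth: the path through C1 has half the capacity
path_bottlenecks = {"E1-C1-E2": 1, "E1-C2-E2": 2}

cost_based = inverse_cost_shares(path_costs)
desired = bandwidth_shares(path_bottlenecks)

for path in path_costs:
    print(f"{path}: cost-based {cost_based[path]}, desired {desired[path]}")
# E1-C1-E2: cost-based 2/5, desired 1/3
# E1-C2-E2: cost-based 3/5, desired 2/3
```

The additive metric overloads the slower path: it would carry 40% of the traffic while having only a third of the usable capacity.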

There’s absolutely no way that you would get the load balancing ratios right in a single-metric routing protocol without going insane trying to tweak the link metrics. You could get the metrics just right with some interesting math, using a tool like Cariden MATE, or using MPLS-TE tunnels (where the load balancing ratio is determined by relative tunnel bandwidth)… but it’s not trivial.

How about the data center anycast use case I mentioned the last time?

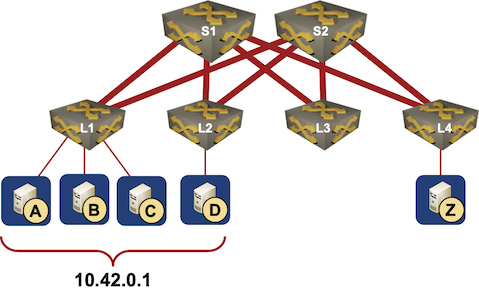

What we’d like to have is 75% of the traffic going from L4 toward L1 (where it’s spread between A, B, and C) and 25% of the traffic going toward L2.

A simplistic approach could assign humongous costs to the server links (so that the intra-fabric cost would be negligible compared to the total cost), but you’d still be stuck with a 50:50 ratio between L1 and L2 – no implementation of a link-state protocol I’ve seen so far reduces the link cost based on the availability of parallel links. S1 would see the 10.42.0.1/32 prefix being available through L1 with exactly the same cost as through L2.
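Here is a rough sketch of why the number of servers does not matter. It models only the general shortest-path idea (the best cost through a node is the minimum over the available links, never a combination of them), not the behavior of any specific OSPF or IS-IS implementation. The cost values and the server name `D` behind L2 are assumptions made for illustration.

```python
# Hypothetical costs; the article does not specify them
FABRIC_LINK_COST = 10
SERVER_LINK_COST = 1000  # the "humongous" server-link cost

# Servers advertising 10.42.0.1/32 behind each leaf ("D" is a made-up name)
servers_behind_leaf = {"L1": ["A", "B", "C"], "L2": ["D"]}

def best_cost_via(leaf):
    """Cost from S1 to the anycast prefix through the given leaf.

    Shortest-path computation keeps the minimum cost over parallel
    server links; three links at cost 1000 are still cost 1000.
    """
    server_costs = [SERVER_LINK_COST for _ in servers_behind_leaf[leaf]]
    return FABRIC_LINK_COST + min(server_costs)

costs = {leaf: best_cost_via(leaf) for leaf in servers_behind_leaf}
best = min(costs.values())
ecmp_next_hops = [leaf for leaf, cost in costs.items() if cost == best]
share_per_next_hop = 1 / len(ecmp_next_hops)

print(costs)               # {'L1': 1010, 'L2': 1010}
print(share_per_next_hop)  # 0.5
```

Both leaves end up with the same cost, so S1 has no information that would let it prefer L1 over L2.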

Yet again, you could tweak the metrics and change them automatically every time you add or remove a server (congratulations, you implemented a self-driving software-defined network), but I wish you luck trying to troubleshoot that Rube Goldberg invention.

The only way to make anycast UCMP work well is to realize the problem has two components:

- Network topology component – find the end-to-end paths from ingress to egress devices

- Endpoint reachability component – find how many endpoints are connected to every egress device and change the load balancing ratios accordingly.
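The two components can be sketched as two separate inputs to a weight calculation. This is only an illustration of the idea, not how any routing protocol encodes it; the function name, the data structures, and the server count behind L2 (one, implied by the 75%/25% split) are assumptions.

```python
from fractions import Fraction

def ucmp_weights(reachable_egress, endpoints_per_egress):
    """Weight each reachable egress device by its number of endpoints."""
    counts = {
        egress: endpoints_per_egress.get(egress, 0)
        for egress in reachable_egress
    }
    total = sum(counts.values())
    if total == 0:
        return {}  # no endpoint is reachable, nothing to balance
    return {egress: Fraction(n, total) for egress, n in counts.items() if n > 0}

# Topology component: egress leaves reachable from L4 over best paths
reachable_egress = ["L1", "L2"]
# Endpoint reachability component: anycast endpoints behind each leaf
endpoints_per_egress = {"L1": 3, "L2": 1}

weights = ucmp_weights(reachable_egress, endpoints_per_egress)
for egress, weight in weights.items():
    print(f"{egress}: {float(weight):.0%}")
# L1: 75%
# L2: 25%
```

Keeping the inputs separate means that adding or removing a server changes only the endpoint counts, while the path computation and link metrics stay untouched.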

Do we have a set of routing protocols that could do all that? Of course, one of them is called BGP 😉

Next: Unequal-Cost Multipath with BGP DMZ Link Bandwidth

More to Explore

Interested in challenging networking fundamentals like what we discussed in this blog post? You might find the How Networks Really Work webinar interesting (and parts of it are available with Free ipSpace.net Subscription).

Ivan, I still don't understand this part here: "S1 would see 10.42.0.1/32 prefix being available through L1 with exactly the same cost as through L2."

Say, L1 and L2 both advertise 10.42.0.1/32 to S1. Wouldn't S1 get 4 OSPF entries of 10.42.0.1/32 with different metrics then? And so it would load-balance among the 4 entries accordingly. Why would S1 see 10.42.0.1/32 thru L1 with the same cost as thru L2, unless each of them only generates one 10.42.0.1/32 toward S1?

@Minh Ha: Getting a definitive answer would involve digging through RFC 2328, and I don't have the nerves to do that anymore ;) Anyone reading this comment thread is most welcome to do it.

I would be surprised to see three routing table entries on S1 pointing to L1 (and it might depend on whether L1 advertises type-5 LSA or connected subnets in type-1 LSA)... but it should be pretty easy to set this up in a lab and prove me wrong ;))

Jeff Doyle's Routing TCP/IP and OSPF and IS-IS: Choosing an IGP for Large-Scale Networks didn't mention anything more specific than RFC 2328 either. So I think this is one of those gray areas that are subject to implementation, as anycast wasn't widely used back in the day when those books and RFCs were written. One of our staff has a Cisco rack to deliver a CCNA course, so I'll ask him to replicate this scenario and see what the behaviour is like on Cisco equipment :)).

Did a few tests. Blog post coming when I find time to polish it and draw the diagrams; the setup and configs are here: https://github.com/ipspace/netsim-examples/tree/master/OSPF/anycast

As expected, OSPF does not install multiple routes with the same next hop or alternate routes with different traffic shares, and I have a vague feeling that behavior can be traced back to the RFC.