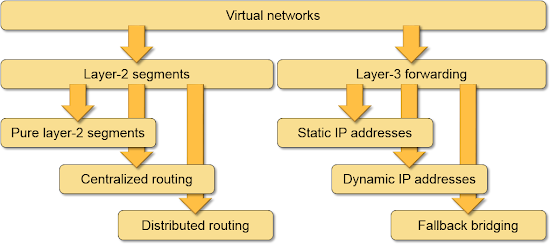

Virtual Networking Implementation Taxonomy

I’m not sure whether I’ve already written about the taxonomy of the numerous virtual networking implementations. Just in case, here it is ;)

Layer-2 or layer-3 networks?

Some virtual networking solutions emulate a thick coax cable (more precisely, a layer-2 switch), giving their users the impression of having regular VLAN-like layer-2 segments.

Examples: traditional VLANs, VXLAN on Nexus 1000v, VXLAN on VMware vCNS, VMware NSX, Nuage Networks Virtual Services Platform, OpenStack Open vSwitch Neutron plugin.

Other solutions perform layer-3 forwarding at the first hop (vNIC-to-vSwitch boundary), implementing a pure layer-3 network.

Examples: Hyper-V Network Virtualization, Juniper Contrail, Amazon VPC.
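To make the distinction concrete, here is a minimal Python sketch of the lookup a first-hop switch performs in each model. The `Frame` type, the table names, and the hypervisor names are hypothetical. Real implementations use much richer data structures. The sketch only shows what the forwarding decision is keyed on.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Frame:
    dst_mac: str
    dst_ip: Optional[str]  # None for non-IP traffic


def l2_forward(mac_table: Dict[str, str], frame: Frame) -> str:
    """Layer-2 first hop: the decision is keyed on the destination MAC address."""
    # Unknown destinations are flooded, as a learning bridge would do
    return mac_table.get(frame.dst_mac, "flood")


def l3_forward(host_routes: Dict[str, str], frame: Frame) -> str:
    """Layer-3 first hop: the decision is keyed on the destination IP address."""
    if frame.dst_ip is None:
        return "drop"  # in this sketch, a pure layer-3 hop has nothing to route for non-IP frames
    # Unknown destinations are dropped instead of flooded
    return host_routes.get(frame.dst_ip, "drop")


# Hypothetical example state: one remote VM reachable via hypervisor-2
mac_table = {"02:00:00:00:00:0b": "hypervisor-2"}
host_routes = {"10.0.0.11": "hypervisor-2"}
vm_frame = Frame(dst_mac="02:00:00:00:00:0b", dst_ip="10.0.0.11")
```

Both functions deliver `vm_frame` to `hypervisor-2`. The difference shows up with unknown destinations: the layer-2 version has to flood them, while the layer-3 version can simply drop them.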

Layer-2 networks with layer-3 forwarding

Every layer-2 virtual networking solution allows you to implement layer-3 forwarding on top of pure layer-2 segments with a multi-NIC VM acting as a router.

Some virtual networking solutions provide centralized built-in layer-3 gateways (routers) that you can use to connect layer-2 segments.

Examples: inter-VLAN routing, VMware NSX, OpenStack

Other layer-2 solutions provide distributed routing – the same default gateway IP and MAC address are present in every first-hop switch, resulting in optimal end-to-end traffic flow.

Examples: Cisco DFA, Arista VARP, Juniper QFabric, VMware NSX, Nuage VSP, distributed layer-3 forwarding in the OpenStack Icehouse release.
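A minimal Python sketch of the distributed routing idea follows. Every first-hop switch is configured with the same default gateway IP and MAC address, answers ARP for that gateway locally, and routes the packet itself. The addresses, switch names, and the `FirstHopSwitch` class are hypothetical and do not model any of the products listed above.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical gateway address and MAC, configured identically on every first-hop switch
GATEWAY_IP = "10.0.0.1"
GATEWAY_MAC = "02:00:00:00:aa:01"


@dataclass
class FirstHopSwitch:
    name: str
    routes: Dict[str, str] = field(default_factory=dict)  # destination IP -> egress switch

    def answer_arp(self, target_ip: str) -> Optional[str]:
        # Every switch answers ARP for the default gateway locally, with the same MAC
        return GATEWAY_MAC if target_ip == GATEWAY_IP else None

    def route(self, dst_ip: str) -> str:
        # The packet is routed right here, with no detour through a central router
        return self.routes.get(dst_ip, "drop")


# Hypothetical fabric: the same routing state is installed on both switches
routes = {"10.0.1.20": "sw2", "10.0.0.11": "sw1"}
sw1 = FirstHopSwitch("sw1", dict(routes))
sw2 = FirstHopSwitch("sw2", dict(routes))
```

Both switches return the same gateway MAC. A VM that migrates from `sw1` to `sw2` therefore keeps a valid ARP entry for its default gateway, and its traffic continues to be routed at its new first hop.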

Layer-3 networks and dynamic IP addresses

Some layer-3 virtual networking solutions assign static IP addresses to end hosts. The end-to-end layer-3 forwarding is determined by the orchestration system.

Example: Amazon VPC
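The orchestration system assigns every address, so it already knows where each one lives. It can therefore compute the complete forwarding state up front. The following is a minimal Python sketch under that assumption. The inventory dictionary and the `tunnel:` next-hop notation are hypothetical, and the sketch is not a description of how Amazon VPC works internally.

```python
from typing import Dict


def build_forwarding_tables(inventory: Dict[str, str]) -> Dict[str, Dict[str, str]]:
    """Compute per-hypervisor forwarding tables.

    `inventory` maps each VM's static IP address to the hypervisor hosting it.
    """
    tables: Dict[str, Dict[str, str]] = {}
    for hypervisor in set(inventory.values()):
        table: Dict[str, str] = {}
        for ip, host in inventory.items():
            # Local VMs are delivered directly; remote VMs are tunneled to their hypervisor
            table[ip] = "local" if host == hypervisor else f"tunnel:{host}"
        tables[hypervisor] = table
    return tables


# Hypothetical inventory kept by the orchestration system
inventory = {"10.0.0.11": "hv1", "10.0.0.12": "hv2", "10.0.0.13": "hv2"}
tables = build_forwarding_tables(inventory)
```

No learning or flooding is involved. In this model, the tables change only when the orchestration system itself changes the inventory.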

Other layer-3 virtual networking solutions allow dynamic IP addresses (example: customer DHCP server) or IP address migration between cluster members.

Examples: Hyper-V network virtualization in Windows Server 2012 R2, Juniper Contrail
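With dynamic or migrating addresses, the forwarding state can no longer be computed entirely in advance from orchestration data. The system has to learn, or be told, at runtime where an address currently lives. For example, a customer DHCP server might hand out a new address, or a cluster failover might move one. The following is a minimal Python sketch of such a location table. The class is hypothetical, and so is the way `learn` gets triggered (for example, by observing DHCP or gratuitous ARP). It is not how Hyper-V network virtualization or Contrail implement it.

```python
from typing import Dict, Optional


class AddressLocationTable:
    """Hypothetical runtime map of customer IP address -> hypervisor."""

    def __init__(self) -> None:
        self._location: Dict[str, str] = {}

    def learn(self, ip: str, host: str) -> Optional[str]:
        """Record that `ip` now lives on `host`; return the previous host if the address moved."""
        previous = self._location.get(ip)
        self._location[ip] = host
        if previous is not None and previous != host:
            return previous  # the move would have to be propagated to other hypervisors (not shown)
        return None

    def lookup(self, ip: str) -> Optional[str]:
        return self._location.get(ip)


table = AddressLocationTable()
table.learn("10.0.0.50", "hv1")  # e.g. address handed out by the customer's DHCP server
moved_from = table.learn("10.0.0.50", "hv2")  # e.g. cluster failover to another member
```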

Finally, there are layer-3 solutions that fall back to layer-2 forwarding when they cannot route the packet (example: non-IP protocols).

Example: Juniper Contrail
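A minimal Python sketch of a layer-3 forwarding decision with a layer-2 fallback follows. The function name, tables, and next-hop strings are hypothetical. To keep the sketch short, only IPv4 is treated as routable, and IPX (EtherType 0x8137) stands in for a non-IP protocol.

```python
from typing import Dict, Optional

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPX = 0x8137  # example of a non-IP protocol


def forward(ethertype: int, dst_ip: Optional[str], dst_mac: str,
            host_routes: Dict[str, str], mac_table: Dict[str, str]) -> str:
    # Route IPv4 packets whenever a host route for the destination exists
    if ethertype == ETHERTYPE_IPV4 and dst_ip is not None and dst_ip in host_routes:
        return "routed:" + host_routes[dst_ip]
    # Anything that cannot be routed (for example, non-IP protocols) is bridged instead
    return "bridged:" + mac_table.get(dst_mac, "flood")


# Hypothetical forwarding state on one hypervisor
host_routes = {"10.0.0.12": "hv2"}
mac_table = {"02:00:00:00:00:0c": "hv3"}
```

An IPv4 packet for `10.0.0.12` is routed to `hv2`. An IPX frame for `02:00:00:00:00:0c` is bridged to `hv3`, and an IPX frame for an unknown MAC address is flooded.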

A picture is worth a thousand words

Why does it matter?

In a nutshell: the further away from bridging a solution is, the more scalable it is from the architectural perspective (there’s always an odd chance of a clumsy implementation of a great architecture). No wonder Amazon VPC and Hyper-V network virtualization (also used within the Azure cloud) lean so far toward pure layer-3 forwarding.

Need more?

- Watch the Overlay Virtual Networking webinar (and the Following Packets across Overlay Virtual Networks addendum).

- Check out the cloud computing and networking webinars and the webinar subscription.

- Use the ExpertExpress service if you need a short online consulting session, technology discussion or design review.

I mean, apart from the initial deployment, orchestration systems will do their work... what scope do network engineers have in this cloud system?

http://blog.ipspace.net/2014/02/going-all-virtual-with-virtual-wan-edge.html

With 50 server-facing ports on a switch, a typical large cloud data center would have thousands of ToR switches and "a few" core switches. Don't you think you need a dedicated team to take care of them? Does it matter what job titles that team has?

Then what were we doing all these years for?

There are no dedicated plumbers... there are cloud architects doing all of this...

Well, you guys are not giving us any choice but to enter the VM/storage space, and that will create job issues for the VM/SAN guys as well...

I design and build these solutions for a living. The network architects I have worked with are outstanding. However, once the design is complete, the physical network remains fairly static (except for expansion). Its primary job is to provide an IP bus for the virtualized solutions above.

I see a future wherein all of the supporting functions are subsumed by the virtual console. Network, compute, storage, and security will be registered to the 'brain', and all configuration and operations will be orchestrated from the top down.

Compute and storage have already been solved. Today, I can click a link and spin up a physical server, deploy the platform, and finish configuration. Same for storage, but to a limited degree.

Some large automated networks have cracked the code as well. Their switches PXE boot, register to the controller, and are programmed accordingly. This could come to the consumer market within a few years.

The tide is shifting from those who can administer to those who can orchestrate.

Meaning NSX/ACI/SDN will create job issues for VM/SAN engineers and not for network engineers, contrary to what this industry is harping about...

I can do it today with VMware/OpenStack/CloudStack. Once the plumbing is up, I can create, route, firewall, etc. multiple networks with no interaction from the network team.

ACI will fail as it is going in the wrong direction. Applications are the focus. The app/dev personnel will be able to deploy an application, attach compute and storage, then deploy networking. When they click go, all of the resources will be configured to support the application.

The network engineer will have to adapt as have the compute and storage admins. The Borg is the VM side of the house. All else will be assimilated. Even then, the app/dev will drive the VM side of the house.

Also, what about the network outside of the DC? Do you think enterprises will let a separate team handle the physical network and the VM team handle the virtualised network? Hell no... The virtualised network will also be handled by seasoned network engineers... and once we get our hands on the VMware tools, we will slowly move forward, understanding everything...

For ACI, apps are the main focus... looks like you have no clue about ACI.

Also, when we get our access to NSX, we may as well steer it towards ACI... who knows? And we won't stop at that... we will go after storage and VM, and what you guys have learnt in 8 yrs, we will get there in under 1 yr flat... in short, we will ensure we will not go the admin way... you will be out this time... we will set up networks, firewalls, VMs, and storage in one click... and when the VM experts leave, we will take in only people with a network background and train them on VM/storage.

First you handle a production NSX network and let me know... when there is a connectivity blackout and top management is breathing down your neck, you will understand...

I would ask: if network personnel are going to take over everything, then why are they the last to the virtualization and next-generation table?

Thank you for a spirited conversation. However, I cannot compete with you at such a degenerate level.

The industry did no good by insisting at the very beginning of SDN that network personnel were has-beens... I am extremely passionate about networking...

I am an L3/L4 network engineer working purely on Cisco products and network technologies as of now, with 10 years of experience. I am CCNP certified.

I have worked on almost all the LAN/WAN/Nexus technologies.

How long will it take for a typical person like me to get a foothold into VM/SAN?

VM/SAN work purely on L1/physical... there is no routing/switching logic like in traditional networks. So I reckon we just need to know the facts and build a factual working knowledge of VM/SAN.

I just attended an NSX walkthrough of building a simple network via NSX. Well, there is nothing in it to build expertise on.

Currently I am involved with network automation, but that's just a starting point.

I just need a ball park figure. I know there is no definite answer.