Load Balancing Across IP Subnets

One of my readers sent me this question:

I have a data center with huge L2 domains. I would like to move routing down to the top of the rack, however I’m stuck with a load-balancing question: how do load balancers work if you have a routed network and pool members that are multiple hops away? How can that be used with Direct Return?

There are multiple ways to make load balancers work across multiple subnets:

- Make sure the load balancer is in the forwarding path from the server to the client, so the return traffic hits the load balancer, which translates the source (server) IP address.

You usually need multiple forwarding domains (VLANs or VRFs) to make this work.

- Use source NAT, where the load balancer changes the client’s IP address to the load balancer’s IP address. As the return IP address belongs to the load balancer, the return (server-to-client) traffic goes through the load balancer even when it’s not in the forwarding path.

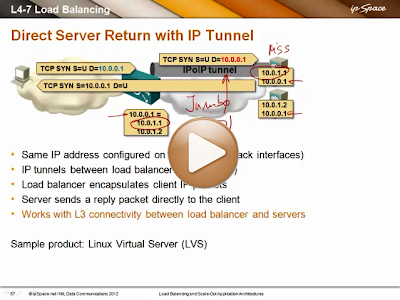

- With Direct Server Return (DSR), use IP-over-IP tunneling (or whatever tunneling mechanism is supported by both the load balancer and the server) to get the client packets from the load balancer to the desired server. The return traffic is sent from the server straight to the client anyway.

Haven’t heard about Direct Server Return? Don’t worry, you’ll find all you need to know in this short video:

More information

- The Data Center 3.0 webinar has a whole (2-hour) section on scale-out architectures and load balancing;

- Greg Ferro wrote extensively about SNAT;

- You’ll find in-depth details of DSR in Linux Virtual Server documentation.

Using source NAT will obviously hide the client IP address from the server. Unless the load balancer adds something like an X-Forwarded-For header (for HTTP), the client IP is lost. The application behind the load balancer also has to know how to parse such headers properly: X-Forwarded-For is a chained list of IP addresses, and the "seen" client IP (the load balancer) needs to be evaluated as well. Only trust X-Forwarded-For if the "seen" client IP is a trusted load balancer. This becomes an issue when a client is behind a proxy: the proxy adds an X-Forwarded-For header to the outgoing request, and the load balancer appends the proxy's IP address to that header. The application needs to know such details, otherwise there's potential for error.
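The trust rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `TRUSTED_PROXIES`, `is_trusted` and `client_ip` are hypothetical names, the addresses come from private and documentation ranges, and a real application would normally rely on its web framework's proxy handling instead.

```python
import ipaddress
from typing import Optional

# Assumption: the subnet our own load balancers connect from (hypothetical).
TRUSTED_PROXIES = [ipaddress.ip_network("10.0.0.0/24")]


def is_trusted(addr: str) -> bool:
    """Return True if addr is a valid IP address inside a trusted network."""
    try:
        ip = ipaddress.ip_address(addr)
    except ValueError:
        return False
    return any(ip in net for net in TRUSTED_PROXIES)


def client_ip(peer_addr: str, xff_header: Optional[str]) -> str:
    """Pick the most trustworthy client address for one request.

    peer_addr is the "seen" source IP of the TCP connection,
    xff_header is the raw X-Forwarded-For value (or None).
    """
    # Ignore the header unless the connection comes from a trusted load balancer.
    if not xff_header or not is_trusted(peer_addr):
        return peer_addr
    hops = [h.strip() for h in xff_header.split(",") if h.strip()]
    # Walk right to left: the rightmost entries were appended by our own
    # devices; the first untrusted entry is the last address we can vouch for.
    for hop in reversed(hops):
        if not is_trusted(hop):
            return hop
    return peer_addr


# A client behind a proxy: the proxy added the client's address, then the
# load balancer appended the proxy's address.
print(client_ip("10.0.0.5", "203.0.113.7, 198.51.100.9"))
```

Note that in the proxy case the function returns the proxy's address, not the leftmost entry: everything to the left of it was supplied by a device the application has no reason to trust.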

Using IP-over-IP tunneling is a different story: if your network's MTU is 1500, an IPv4-over-IPv4 header reduces the available MTU by 20 octets, down to 1480 octets. If a client packet is larger than this (e.g. a typical 1500-octet packet during a file upload), the TCP packet has the DF bit set, and the client is sitting behind some (broken) firewall silently dropping ICMP packets, the ICMP "fragmentation needed" message never reaches the client, and the client will experience issues of the "something doesn't work" kind.
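The arithmetic behind those numbers, as a small sketch. The helper names are made up for illustration, and the header sizes assume IPv4 and TCP headers without options.

```python
IPV4_HEADER = 20  # octets, assuming no IP options
TCP_HEADER = 20   # octets, assuming no TCP options


def tunnel_mtu(link_mtu: int, encap_overhead: int = IPV4_HEADER) -> int:
    """MTU left for the inner packet after adding the outer IPv4 header."""
    return link_mtu - encap_overhead


def tcp_payload(mtu: int) -> int:
    """Largest TCP payload that fits into one packet of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def fits_in_tunnel(packet_size: int, link_mtu: int) -> bool:
    """True if an inner IP packet can be tunneled without fragmentation."""
    return packet_size <= tunnel_mtu(link_mtu)


print(tunnel_mtu(1500))                 # 1480
print(tcp_payload(1500))                # 1460 without the tunnel
print(tcp_payload(tunnel_mtu(1500)))    # 1440 inside the tunnel
print(fits_in_tunnel(1500, 1500))       # False: a full-sized client packet is too big
```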

There are also potential security issues with IP-over-IP. As a good network engineer, you enforce egress filtering, reverse path filtering and the like to protect the Internet from spoofed outgoing traffic. If you combine IP-over-IP tunneling to traverse different L2/L3 domains with direct server return for the replies, you essentially need to remove some of those security measures. You also need to be aware that you'll be introducing asymmetric traffic into your network, which may complicate debugging and may break more easily. As we're talking about load balancers, we're also talking about high-volume or highly available services, whose operators don't want to face the risk of "complicated debugging".
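To see why reverse path filtering and direct server return clash, here is a toy model of a strict reverse-path check on a top-of-rack router. Everything in it is an assumption made for illustration: the routing table, the interface names and the addresses are hypothetical, and real routers implement the check in the forwarding plane, not like this.

```python
import ipaddress

# Hypothetical routing table: prefix -> outgoing interface.
ROUTES = {
    ipaddress.ip_network("10.1.1.0/24"): "servers",  # directly attached servers
    ipaddress.ip_network("192.0.2.0/24"): "uplink",  # virtual IPs, behind the load balancer
    ipaddress.ip_network("0.0.0.0/0"): "uplink",     # default route
}


def lookup(addr: str) -> str:
    """Longest-prefix match: return the interface used to reach addr."""
    ip = ipaddress.ip_address(addr)
    best = max((net for net in ROUTES if ip in net), key=lambda net: net.prefixlen)
    return ROUTES[best]


def strict_rpf_accepts(src: str, in_iface: str) -> bool:
    """Accept a packet only if the route back to its source address
    points out of the interface the packet arrived on."""
    return lookup(src) == in_iface


# Ordinary reply sourced from the server's own address: accepted.
print(strict_rpf_accepts("10.1.1.10", "servers"))
# DSR reply sourced from the virtual IP, arriving from the server port: dropped.
print(strict_rpf_accepts("192.0.2.80", "servers"))
```

In this model the DSR reply looks exactly like spoofed traffic, which is why the check has to be relaxed on the server-facing interface.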

So if you need to do IP-over-IP tunneling, you may also want to route the replies back via the load balancer's network, e.g. via another tunneled connection. This makes things easier for the network, but may introduce another layer of complexity for the server.

Surprisingly, a lot of "offloading/re-encrypting" solutions don't check whether the server's certificate is valid at all, and by using them you're accepting any MITM attack downstream between the load balancer and the server. Ultimately, it's tricking your clients into assuming some security (see, the lock is closed and certified...) which is actually missing.
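For comparison, this is roughly what a verified re-encrypted connection to a pool member looks like when written with Python's standard `ssl` module. It is a sketch, not a description of how any particular load balancer works; `backend_tls_context`, `connect_backend` and the `ca_file` parameter are hypothetical names.

```python
import socket
import ssl
from typing import Optional


def backend_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server certificate.

    ca_file is an optional PEM bundle (e.g. an internal CA); with None the
    system's default trust store is used.
    """
    ctx = ssl.create_default_context(cafile=ca_file)
    # These are already the defaults of create_default_context(); they are
    # spelled out because disabling them is what opens the door to MITM attacks.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def connect_backend(host: str, port: int, ctx: ssl.SSLContext) -> ssl.SSLSocket:
    """Open a TLS connection to a pool member; raises
    ssl.SSLCertVerificationError if the certificate does not validate."""
    sock = socket.create_connection((host, port), timeout=5)
    return ctx.wrap_socket(sock, server_hostname=host)


ctx = backend_tls_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```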

Regards,

Jeroen van Bemmel ( Customer support engineer @ Nuage Networks )

That would work well if the load balancer and servers are virtualized, but not so much if they happen to be appliances or bare-metal servers (where you'll have to use on-ramp/off-ramp L2 gateways, increasing the complexity of the solution).