QFabric Lite

QFabric from Juniper is probably the best data center fabric architecture (not implementation) I’ve seen so far – single management plane, implemented in redundant controllers, and distributed control plane. The “only” problem it had was that it was way too big for the data centers most of us are building (how many times do you need 6000 10GE ports?). Juniper just solved that problem with a scaled-down version of QFabric, officially named QFX3000-M.

Short QFabric review

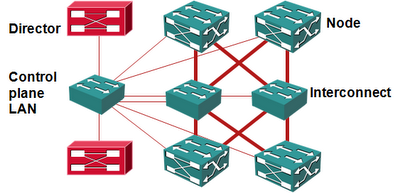

The original QFabric (now QFX3000-G) has the following components:

- QFabric Nodes (QFX3500) – top-of-rack switches with 48 10GE ports and 4 40GE uplinks (yeah, they do look like any other switch on the market).

- QFabric Interconnects (QFX3008-I) – massive 3-stage Clos fabrics, each one providing over 10Tbps of throughput.

- QFabric Directors (QFX3100) – x86-based servers providing management plane for the whole QFabric and control plane for a network node (up to 8 QFabric nodes).

- Control-plane LAN implemented with two stacks of EX4200 switches working as two virtual chassis.

QFabric lite: same architecture, new interconnects

The scaled-down QFabric (QFX3000-M) retains most of the components; the exception is the QFabric Interconnect. The QFX3600-I is a single-stage 16-port 40Gbps switch that provides the QFabric Interconnect functionality. The single-stage interconnect also reduces end-to-end latency, bringing it down to 3 microseconds (the original QFabric had 5-microsecond end-to-end latency).

With up to four QFX3600-I interconnects in a QFabric, the QFX3000-M scales to 768 L2/L3 10Gbps ports. Obviously you still need two QFabric directors (for redundancy), but the control-plane LAN no longer needs two virtual chassis – it’s implemented with two EX4200 switches.
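The 768-port figure falls out of the per-box port counts quoted above. Here is a minimal back-of-the-envelope sketch; the function names are mine, and it assumes every QFabric Node uses all four of its 40GE uplinks, spread evenly across the interconnects.

```python
# Rough QFX3000-M sizing, using only the port counts quoted in this post.
NODE_ACCESS_PORTS = 48    # 10GE ports per QFabric Node (QFX3500)
NODE_UPLINKS = 4          # 40GE uplinks per QFabric Node
INTERCONNECT_PORTS = 16   # 40GE ports per QFX3600-I


def max_nodes(interconnects: int) -> int:
    """QFabric Nodes that fit when each node consumes all its uplinks (assumption)."""
    return interconnects * INTERCONNECT_PORTS // NODE_UPLINKS


def max_access_ports(interconnects: int) -> int:
    """Total 10GE access ports across all nodes."""
    return max_nodes(interconnects) * NODE_ACCESS_PORTS


print(max_nodes(4), max_access_ports(4))  # 16 768
```

Four interconnects offer 64 40GE ports, which is enough for 16 nodes with four uplinks each, or 768 10GE ports.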

The minimum version of QFX3000-M would have only two QFX3600-I for a total of eight QFabric Nodes (at 3:1 oversubscription) or 384 ports (which seems to be another magical number in data center environments). With only eight QFabric nodes, you can group all of them in a single network node (moving the control plane to the QFabric Director), giving you a full set of routing protocols and spanning tree support on all ports. Does this sound like an OpenFlow-based fabric? Actually it’s the same functional architecture using a different set of protocols.
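If you want to double-check the 3:1 oversubscription, it's simply the ratio of access bandwidth to uplink bandwidth on a single QFabric Node. A quick sketch (the helper name is hypothetical, and it assumes all four 40GE uplinks are in use):

```python
from fractions import Fraction


def oversubscription(access_ports: int, access_gbps: int,
                     uplinks: int, uplink_gbps: int) -> Fraction:
    """Ratio of server-facing bandwidth to fabric-facing bandwidth on one node."""
    return Fraction(access_ports * access_gbps, uplinks * uplink_gbps)


# QFX3500 figures from this post: 48 x 10GE down, 4 x 40GE up.
ratio = oversubscription(48, 10, 4, 40)
print(f"{ratio.numerator}:{ratio.denominator}")  # 3:1
```

That's 480 Gbps of access bandwidth against 160 Gbps of uplinks per node, regardless of how many interconnects the uplinks land on.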

Short summary

I’ve always said QFabric is a great architecture (with implementation shortcomings like the lack of IPv6), but it was simply too big for most real-world uses; with the QFX3000-M, QFabric has become a realistic option for non-gargantuan environments.

But wait, there’s more

For those of you who believe in 40GE-only ToR switches, Juniper launched the QFX3600 – a 40GE version of the QFabric Node switch. Today you can use the QFX3600 switch within the QFabric environment (QFX3000-M and QFX3000-G), and Juniper is promising a standalone version later this year.

Finally, Juniper officially announced an 8-switch version of the EX8200 virtual chassis... and they couldn’t possibly avoid the obvious use case: you can manage the core switches in up to four data centers as a single switch. How many times do I have to repeat that this is a disaster waiting to happen?

I'm positive every single one of your (or my) designs has the same problem, although the maximum limits could be higher or lower.

On the other hand, when upgrading from QFabric Lite to QFabric, hard downtime seems to be the only option.