Monkey Design Still Doesn’t Work Well

We’ve seen several interesting data center fabric solutions during the Networking Tech Field Day presentations, and every time we heard how the new fabric technologies (actually, the shortest path bridging part of those technologies) allow us to shed the yoke of the Spanning Tree monster (see Understanding Switch Fabrics by Brandon Carroll for more details). Not surprisingly, we wanted to know more and asked the obvious question: “and how would you connect the switches within the fabric?”

The vendors were quick to assure us that “we can use any topology we want.” We also heard buzzwords like hypercube, Clos, daisy chain and ring, and promises like “you just plug it in ... it just works!” What they usually forgot to mention was that removing the rigid requirements of the Spanning Tree Protocol doesn’t magically remove the need for proper network design.

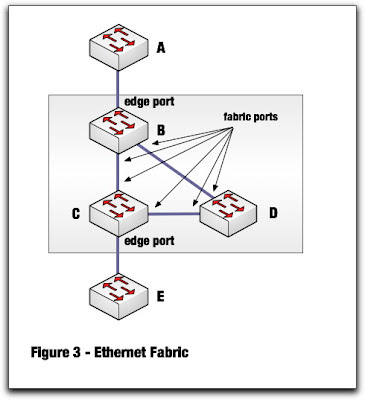

Brandon has graciously allowed me to use a picture from his blog post to illustrate the problem. Imagine you build the network shown in the diagram. Because you’re using a fabric technology (be it TRILL, SPB, FabricPath or something else), no ports are blocked and you should be able to use all the bandwidth in the network ... but that simply won’t happen.

You see, the shortest path bridging technologies behave almost exactly like routing, and (as their name indicates) they forward traffic along the shortest path only. All the traffic between A and E will still go over the B-C link because that’s the shortest path. The path A-B-D-C-E is longer and won’t be used.

The B-D and C-D links would be used if there were other devices attached to D, but I hope you get my point: shortest path bridging technologies are no better than routing.
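To make the argument concrete, here is a minimal Python sketch that counts hops with a breadth-first search and reports which links sit on a shortest path. The link list is my reading of the text (A attached to B, E attached to C, D connected to both B and C), not a copy of the diagram, and the host F attached to D is purely hypothetical. Real TRILL or SPB implementations run IS-IS with link metrics; plain hop count is used here only to keep the sketch short.

```python
from collections import deque

# Assumed topology, inferred from the text (not taken from the diagram):
# A attaches to B, E attaches to C, and D connects to both B and C.
LINKS = [("A", "B"), ("B", "C"), ("B", "D"), ("C", "D"), ("C", "E")]


def build_graph(links):
    """Build an undirected adjacency map from a list of links."""
    graph = {}
    for u, v in links:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def hop_counts(graph, source):
    """Breadth-first search: hop count from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def shortest_path_links(graph, src, dst):
    """Links that lie on at least one shortest path between src and dst."""
    from_src = hop_counts(graph, src)
    from_dst = hop_counts(graph, dst)
    total = from_src[dst]
    used = set()
    for u in graph:
        for v in graph[u]:
            # Link u-v is on a shortest path if going through it adds no hops.
            if from_src[u] + 1 + from_dst[v] == total:
                used.add(frozenset((u, v)))
    return used


def as_sorted(links):
    """Render a set of links as sorted 'X-Y' strings for printing."""
    return sorted("-".join(sorted(link)) for link in links)


graph = build_graph(LINKS)
used = shortest_path_links(graph, "A", "E")
idle = {frozenset(link) for link in LINKS} - used
print("A->E uses:", as_sorted(used))
print("A->E idle:", as_sorted(idle))

# Hypothetical host F attached to D: only now does B-D carry traffic.
graph_with_f = build_graph(LINKS + [("D", "F")])
used_to_f = shortest_path_links(graph_with_f, "A", "F")
print("A->F uses:", as_sorted(used_to_f))
```

With this assumed wiring, traffic between A and E never touches B-D or C-D, and B-D only starts carrying traffic once something behind D needs to be reached.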

Summary

Just because the shortest path bridging technologies provide routing-like behavior at the MAC layer doesn’t mean that you can wire your network haphazardly. Fortunately, you can fall back to the age-old rules of properly designed routed networks ... and guess what: they usually prescribe a hierarchical structure with edge, aggregation and core. Maybe the shiny new world isn’t so different from the old one after all.

Or, obviously, you can stick to routing to the access layer, but then you won't have big layer-2 domains...

A network design should:

* make sense

* be as simple as possible

* match your needs/goals (example: equal-cost load balancing or optimum bandwidth utilization or equidistant endpoints or something else).

Unfortunately, this simple fact is oft ignored.

Are we at the RIP stages of L2MP?

That said though, what is the topology of choice? Obviously, a Clos network will have the minimal number of hops but the highest cost in terms of inter-switch links. A 2-, 3- or 4-dimensional torus or a hypercube may also work well (you accept more hops in exchange for fewer long links between switches, but still get massive bisection bandwidth at lower cost). Both of these topologies are widely used in the HPC space.
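A rough back-of-the-envelope sketch of that trade-off, in Python. The formulas are the standard ones for the idealized topologies; the sizes (16 edge switches, 4 spines) are arbitrary assumptions picked for illustration. Link speeds, oversubscription and cable lengths are ignored, so treat the numbers as a comparison of shape, not of cost.

```python
def leaf_spine(leaves, spines):
    """Two-stage Clos: every leaf switch connects to every spine switch."""
    return {
        "switches": leaves + spines,
        "links": leaves * spines,
        "max_hops": 2,  # leaf -> spine -> leaf
    }


def hypercube(dimensions):
    """Binary hypercube: 2^d switches, each with d neighbors."""
    switches = 2 ** dimensions
    return {
        "switches": switches,
        "links": dimensions * switches // 2,
        "max_hops": dimensions,
    }


def torus(radix, dimensions):
    """k-ary n-dimensional torus; radix >= 3 keeps wraparound links distinct."""
    if radix < 3:
        raise ValueError("radix must be at least 3")
    switches = radix ** dimensions
    return {
        "switches": switches,
        "links": dimensions * switches,
        "max_hops": dimensions * (radix // 2),
    }


# Assumed sizes: 16 switches with attached endpoints in every case.
designs = {
    "leaf-spine (16 leaves, 4 spines)": leaf_spine(16, 4),
    "4-dimensional hypercube": hypercube(4),
    "4x4 torus": torus(4, 2),
}
for name, stats in designs.items():
    print(f"{name}: {stats}")
```

With these assumed sizes the leaf-and-spine design needs 64 inter-switch links (plus four extra switches) for a two-hop worst case, while the hypercube and the torus get by with 32 links each at the price of a four-hop worst case.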

What won't work with TRILL right now are fancy-pants topologies like Dragonfly, Flattened Butterfly, etc. that require adaptive or non-minimal routing. But those don't work with any other mainstream routing protocol either. Maybe someday.

It seems as though fabric vendors are just assuming a 2/3 stage Clos network is going to be the design of choice, cost be damned.

One question though: What the hell is the difference between a two-stage Clos network and a "leaf and spine" network? Is it just marketing language?

Also, I'm not sure you can just go with shortest-path routing on a hypercube/torus either: you still need some sort of adaptive routing variant, or just plain old Valiant load balancing (VLB), which sacrifices half the throughput.

As for the cost factor, it does matter in smaller deployments. Large-scale data centers are not really sensitive to network cost, as it's roughly an order of magnitude lower than server costs, hence the preference for Clos.

The "irregular" topologies are still interesting for small-to-medium networks, though the requirement for adaptive routing makes them tough to implement in commodity hardware/software, and calls for "naturally load-balanced" Clos once again...