Category: VXLAN

Worth Reading: VXLAN Drops Large Packets

Ian Nightingale published an interesting story of connectivity problems he had in a VXLAN-based campus network. TL&DR: it’s always the MTU (unless it’s DNS or BGP).

The really fun part: even though large L2 segments might have magical properties (according to vendor fluff), there’s no host-to-network communication in transparent bridging, so there’s absolutely no way that the ingress VTEP could tell the host that the packet is too big. In a layer-3 network you have at least a fighting chance…

For more details, watch the Switching, Routing and Bridging part of the How Networks Really Work webinar (most of it is available with Free Subscription).
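The MTU problem boils down to encapsulation overhead, which is easy to sketch as arithmetic. The snippet below is a minimal illustration, assuming VXLAN over an IPv4 underlay with no outer 802.1Q tag and no inner VLAN tag; the function names are made up for this example, and the header sizes are the usual ones for Ethernet, IPv4, UDP, and VXLAN.

```python
# Header sizes in bytes. Assumptions: IPv4 underlay, no IP options,
# no 802.1Q tag on the inner frame.
INNER_ETHERNET = 14   # host's Ethernet header, carried inside the VXLAN payload
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20

# Everything the ingress VTEP adds on top of the host's IP packet,
# measured against the underlay IP MTU.
VXLAN_OVERHEAD = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def underlay_ip_mtu(host_ip_mtu: int) -> int:
    """Smallest underlay IP MTU that carries a host IP packet of the given size."""
    return host_ip_mtu + VXLAN_OVERHEAD


def max_host_ip_mtu(underlay_mtu: int) -> int:
    """Largest host IP packet that fits into the given underlay IP MTU."""
    return underlay_mtu - VXLAN_OVERHEAD


def fits(host_packet_size: int, underlay_mtu: int) -> bool:
    """True if the encapsulated packet fits; otherwise it is silently dropped."""
    return underlay_ip_mtu(host_packet_size) <= underlay_mtu


if __name__ == "__main__":
    print(underlay_ip_mtu(1500))   # underlay MTU needed for 1500-byte host packets
    print(max_host_ip_mtu(1500))   # what hosts can send if the underlay stays at 1500
    print(fits(1500, 1500))        # full-size host packet over a default-MTU underlay
```

With these assumptions, a 1500-byte host packet needs an underlay IP MTU of at least 1550 bytes, and the host never learns about the shortfall because the bridged segment gives the VTEP no way to tell it.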

netlab EVPN/VXLAN Bridging Example

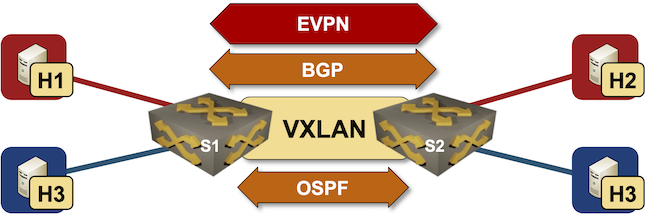

netlab release 1.3 introduced support for VXLAN transport with static ingress replication and EVPN control plane. Last week we replaced a VLAN trunk with VXLAN transport, now we’ll replace static ingress replication with EVPN control plane.

Lab topology

Worth Reading: EVPN/VXLAN with FRR on Linux Hosts

Jeroen Van Bemmel created another interesting netlab topology: EVPN/VXLAN between SR Linux fabric and FRR on Linux hosts based on his work implementing VRFs, VXLAN, and EVPN on FRR in netlab release 1.3.1.

Bonus point: he also described how to do multi-vendor interoperability testing with netlab.

If only he weren’t publishing his articles on a platform that’s almost as user-data-craving as Google.

… updated on Wednesday, September 28, 2022 17:22 UTC

Combining MLAG Clusters with VXLAN Fabric

In the previous MLAG Deep Dive blog posts, we discussed the innards of a standalone MLAG cluster. Now let’s see what happens when we connect such a cluster to a VXLAN fabric – we’ll use our standard MLAG topology and add a VXLAN transport underlay to it with another switch connected to the other end of the underlay network.

MLAG cluster connected to a VXLAN fabric

Repost: On the Viability of EVPN

Jordi left an interesting comment on my EVPN/VXLAN or Bridged Data Center Fabrics blog post discussing the viability of using VXLAN and EVPN in times when the equipment lead times can exceed 12 months. Here it is:

Interesting article Ivan. Another major problem I see for EVPN is the incompatibility between vendors, even though it is an open standard. With today’s crazy switch delivery times, we want a multi-vendor solution like BGP or LACP, but EVPN (due to vendors) isn’t ready for a multi-vendor production network fabric.

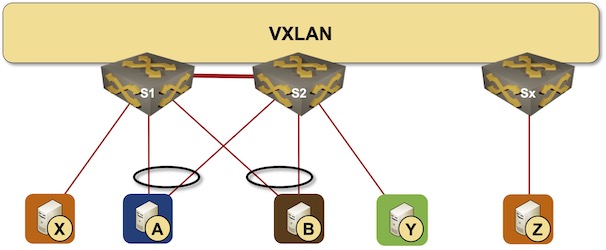

netlab VXLAN Bridging Example

netlab release 1.3 introduced support for VXLAN transport with static ingress replication. Time to check how easy it is to replace a VLAN trunk with VXLAN transport. We’ll use the lab topology from the VLAN trunking example, replace the VLAN trunk between S1 and S2 with an IP underlay network, and transport Ethernet frames across that network with VXLAN.

Lab topology

VXLAN-to-VXLAN Bridging in DCI Environments

Almost exactly a decade ago I wrote that VXLAN isn’t a data center interconnect technology. That’s still true, but you can make it a bit better with EVPN – at the very minimum you’ll get an ARP proxy and anycast gateway. Even this combo does not address the other requirements I listed a decade ago, but maybe I’m too demanding and good enough works well enough.

However, there is one other bit that was missing from most VXLAN implementations: LAN-to-WAN VXLAN-to-VXLAN bridging. Sounds weird? Supposedly a picture is worth a thousand words, so here we go.

VXLAN-Focused Design Clinic in June 2022

ipSpace.net subscribers are probably already familiar with the Design Clinic: a monthly Zoom call in which we discuss real-life design and technology challenges. I started it in September 2021 and it quickly became reasonably successful; we’ve covered almost two dozen topics so far.

Most of the challenges contributed for the June 2022 session were focused on VXLAN use cases (quite fitting considering I just updated the VXLAN Technical Deep Dive webinar), including:

- Can we implement Data Center Interconnect (DCI) with VXLAN? (Yes, but…)

- Can we run VXLAN over SD-WAN (and does it make sense)? (Yes/No)

- What happened to traditional MPLS/VPN Enterprise core and can we use VXLAN/EVPN instead? (Still there/Maybe)

- Should we use routers or switches as data center WAN edge devices, and how do we integrate them with VXLAN/EVPN data center fabric? (Yes 😊)

For more details, join us on June 6th. There’s just a minor gotcha: you have to be an active ipSpace.net subscriber to do it.

Overlay Virtual Networking Examples

One of the ipSpace.net subscribers wanted to see real-life examples in the Overlay Virtual Networking webinar:

It would be nice to have real-world examples. The webinar lacks content about how to obtain a fully working L3 fabric overlay network, including gateways, VRFs, security zones, etc… I know there is not only one “design for all” but a few complete architectures from L2 to L7 will be appreciated over deep-dives about specific protocols or technologies.

Most ipSpace.net webinars are bits of a larger puzzle. In this particular case:

EVPN/VXLAN Complexity

We have school holidays this week, so I’m reposting wonderful comments that would otherwise be lost somewhere in the page margins. Today: Minh Ha on the complexity of emulating layer-2 networks with VXLAN and EVPN.

Dmytro Shypovalov is a master networker who has a sophisticated grasp of some of the most advanced topics in networking. He doesn’t write often, but when he does, he writes exceptional content, both deep and broad. Have to say I agree with him 300% on “If an L2 network doesn’t scale, design a proper L3 network. But if people want to step on rakes, why discourage them.”

Worth Reading: Switching to IP fabrics

Namex, an Italian IXP, decided to replace their existing peering fabric with a fully automated leaf-and-spine fabric using VXLAN and EVPN running on Cumulus Linux.

They documented the design, deployment process, and automation scripts they developed in an extensive blog post that’s well worth reading. Enjoy ;)

Why Would You Need VXLAN Transport?

It’s amazing how sometimes people fond of sharing their opinions and buzzwords on various social media can’t answer simple questions. Today’s blog post is based on a true story… a “senior network architect” fully engaged in a recent hype cycle couldn’t answer a simple question:

Why exactly would you need VXLAN and EVPN?

We could spend a day (or a week) discussing the nuances of that simple question, but all I have at the moment is a single web page, so here we go…

Should I Go with VXLAN or MLAG with STP?

TL&DR: It’s 2020, and VXLAN with EVPN is all the rage. Thank you, you can stop reading.

On a more serious note, I got this question from Johannes Spanier after he read my Do We Need Complex Data Center Switches for NSX Underlay blog post:

Would you agree that for smaller NSX designs (~100 hypervisors) a much simpler Layer2 based access-distribution design with MLAGs is feasible? One would have two distribution switches and redundant access switches MLAGed together.

I would still prefer VXLAN for a number of reasons:

The Never-Ending "My Overlay Is Better Than Yours" Saga

I published a blog post describing how complex the underlay supporting VMware NSX still has to be (because someone keeps pretending a network is just a thick yellow cable), and the tweet announcing it admittedly looked like clickbait.

[Blog] Do We Need Complex Data Center Switches for VMware NSX Underlay

Martin Casado quickly replied NO (probably before reading the whole article), starting a whole barrage of overlay-focused neteng-versus-devs fun.

Getting More Bang for Your VXLAN Bucks

A little while ago I explained why you can’t use more than 4K VXLAN segments on a ToR switch (at least with most ASICs out there). Does that mean that you’re limited to a total of 4K virtual ethernet segments?

Of course not.

You could implement overlay virtual networks in software (on hypervisors or container hosts), although even there the enterprise products rarely give you more than a few thousand logical switches (to use NSX terminology)… but that’s a product limitation, not a technology limitation. Large public cloud providers use the same (or similar) technology to run gazillions of tenant segments.
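To put the numbers in perspective, here is a quick back-of-the-envelope sketch. It assumes the 4K figure corresponds to the 12-bit 802.1Q VLAN ID space (an assumption made for this illustration, not something stated above), and compares it with the 24-bit VXLAN Network Identifier carried in the VXLAN header. The helper name is hypothetical.

```python
VLAN_ID_BITS = 12   # 802.1Q VLAN ID field
VNI_BITS = 24       # VXLAN Network Identifier field


def id_space(bits: int) -> int:
    """Number of distinct values an identifier field of the given width can hold."""
    return 2 ** bits


vlan_ids = id_space(VLAN_ID_BITS)   # the "4K" number
vnis = id_space(VNI_BITS)           # what the encapsulation itself can address

if __name__ == "__main__":
    print(f"VLAN IDs: {vlan_ids}")
    print(f"VNIs:     {vnis}")
    print(f"Ratio:    {vnis // vlan_ids}")
```

The encapsulation leaves plenty of room; the ceiling you hit in practice comes from the device or product doing the mapping, not from the VXLAN header.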