Category: VXLAN

EVPN Designs: IBGP Full Mesh Between Leaf Switches

In the previous blog post in the EVPN Designs series, we explored the simplest possible VXLAN-based fabric design: static ingress replication without any L2VPN control plane. This time, we’ll add the simplest possible EVPN control plane: a full mesh of IBGP sessions between the leaf switches.

EVPN Designs: VXLAN Leaf-and-Spine Fabric

In this series of blog posts, we’ll explore numerous routing protocol designs that can be used to implement EVPN-with-VXLAN L2VPNs in a leaf-and-spine data center fabric. Every design will come with a companion netlab topology you can use to create a lab and explore the behavior of leaf- and spine switches.

Our leaf-and-spine fabric will have four leaves and two spines (but feel free to adjust the lab topology fabric parameters to build larger fabrics). The fabric will provide layer-2 connectivity to orange and blue VLANs. Two hosts will be connected to each VLAN to check end-to-end connectivity.

… updated on Sunday, June 30, 2024 10:43 UTC

VXLAN Virtual Labs Have Never Been Easier

I stumbled upon an “I want to dive deep into VXLAN and plan to build a virtual lab” discussion on LinkedIn1. Of course, I suggested using netlab. After all, you have to build an IP core and VLAN access networks and connect a few clients to those access networks before you can start playing with VXLAN, and those things tend to be excruciatingly dull.

Now imagine you decide to use netlab. Out of the box, you get topology management, lab orchestration, IPAM, routing protocol design (OSPF, BGP, and IS-IS), and device configurations, including IP routing and VLANs.

VXLAN/EVPN Layer-3 Handoff (L3Out) on Arista EOS

A while ago, I published a blog post describing how to establish a LAN/WAN L3 boundary in VXLAN/EVPN networks using Cisco NX-OS. At that time, I promised similar information for Arista EOS. Here it is, coming straight from Massimo Magnani. The useful part of what follows is his; all errors were introduced during my editing process.

In the cases I have dealt with so far, implementing the LAN-WAN boundary has the main benefit of limiting the churn blast radius to the local domain, trying to impact the remote ones as little as possible. To achieve that, we decided to go for a hierarchical solution where you create two domains, local (default) and remote, and maintain them as separate as possible.

Worth Reading: Introduction of EVPN at DE-CIX

Numerous Internet Exchange Points (IXP) started using VXLAN years ago to replace tradition layer-2 fabrics with routed networks. Many of them tried to avoid the complexities of EVPN and used VXLAN with statically-configured (and hopefully automated) ingress replication.

A few went a step further and decided to deploy EVPN, primarily to deploy Proxy ARP functionality on EVPN switches and reduce the ARP/ND traffic. Thomas King from DE-CIX described their experience on APNIC blog – well worth reading if you’re interested in layer-2 fabrics.

Does EVPN/VXLAN over SD-WAN Make Sense?

It looks like we might be seeing VXLAN-over-SDWAN deployments in the wild. Here’s the “why that makes sense” argument I received from a participant of the ipSpace.net Design Clinic in which I wasn’t exactly enthusiastic about the idea.

Also, the EVPN-over-WAN idea is not hypothetical since EVPN+VXLAN is now the easiest way to build L3VPN with data center switches that don’t support MPLS LDP. Folks with no interest in EVPN’s L2 features are still using it for L3VPN.

Let’s unravel this scenario a bit:

Layer-3 WAN Handoff (L3Out) in VXLAN/EVPN Fabrics

I got a question from a few of my students regarding the best way to implement end-to-end EVPN across multiple locations. Obviously there’s the multi-pod and multi-site architecture for people believing in the magic powers of stretching VLANs across the globe, but I was looking for something that I could recommend to people who understand that you have to have a L3 boundary if you want to have multiple independent failure domains (or availability zones).

MLAG Clusters without a Physical Peer Link

With the widespread deployment of Ethernet-over-something technologies, it became possible to build MLAG clusters without a physical peer link, replacing it with a virtual link across the core fabric. Avaya was one of the first vendors to implement virtual peer links with Provider Backbone Bridging (PBB) transport, and some data center switching vendors (example: Cisco) offer similar functionality with VXLAN transport.

DHCP Relaying in VXLAN Segments

After I got the testing infrastructure in place (simple DHCP relay, VRF-aware DHCP relay), I was ready for the real fun: DHCP relaying in VXLAN (and later EVPN) segments.

TL&DR: It works exactly as expected. Even though I had anycast gateway configured on the VLAN, the Arista vEOS switches used their unicast IP addresses in the DHCP relaying process. The DHCP server had absolutely no problem dealing with multiple copies of the same DHCP broadcast relayed by different switches attached to the same VLAN. One could only wish things were always as easy in the networking land.

Why Would You Need an Overlay Network?

I got this question from one of ipSpace.net subscribers:

My VP is not a fan of overlays and is determined to move away from our legacy implementation of OTV, VXLAN, and EVPN1. We own and manage our optical network across all sites; however, it’s hard for me to picture a network design without overlays. He keeps asking why we need overlays when we own the optical network.

There are several reasons (apart from RFC 1925 Rule 6a) why you might want to add another layer of abstraction (that’s what overlay networks are in a nutshell) to your network.

netlab: VRF Lite over VXLAN Transport

One of the comments I received after publishing the Use VRFs for VXLAN-Enabled VLANs claimed that:

I’m firmly of the belief that VXLAN should be solely an access layer/edge technology and if you are running your routing protocols within the tunnel, you’ve already lost the plot.

That’s a pretty good guideline for typical data center fabric deployments, but VXLAN is just a tool that allows you to build multi-access Ethernet networks on top of IP infrastructure. You can use it to emulate E-LAN service or to build networks similar to what you can get with DMVPN (without any built-in security). Today we’ll use it to build a VRF Lite topology with two tenants (red and blue).

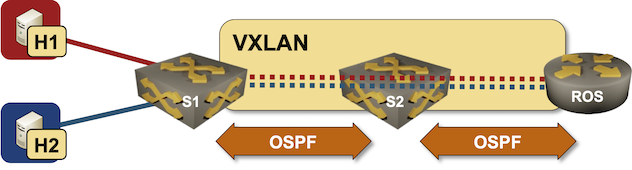

netlab VXLAN Router-on-a-Stick Example

In October 2022 I described how you could build a VLAN router-on-a-stick topology with netlab. With the new features added in netlab release 1.41 we can do the same for VXLAN-enabled VLANs – we’ll build a lab where a router-on-a-stick will do VXLAN-to-VXLAN routing.

Lab topology

… updated on Thursday, November 10, 2022 07:58 UTC

Using EVPN/VXLAN with MLAG Clusters

There’s no better way to start this blog post than with a widespread myth: we don’t need MLAG now that most vendors have implemented EVPN multihoming.

TL&DR: This myth is close to the not even wrong category.

As we discussed in the MLAG System Overview blog post, every MLAG implementation needs at least three functional components:

Use VRFs for VXLAN-Enabled VLANs

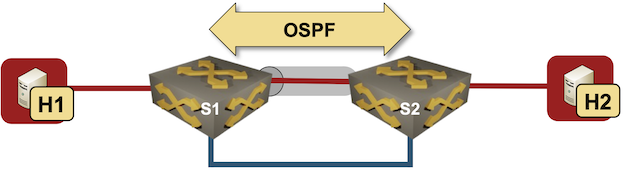

I started one of my VXLAN tests with a simple setup – two switches connecting two hosts over a VXLAN-enabled (gray tunnel) red VLAN. The switches are connected with a single blue link.

Test lab

I configured VLANs and VXLANs, and started OSPF on S1 and S2 to get connectivity between their loopback interfaces. Here’s the configuration of one of the Arista cEOS switches:

Worth Reading: VXLAN Drops Large Packets

Ian Nightingale published an interesting story of connectivity problems he had in a VXLAN-based campus network. TL&DR: it’s always the MTU (unless it’s DNS or BGP).

The really fun part: even though large L2 segments might have magical properties (according to vendor fluff), there’s no host-to-network communication in transparent bridging, so there’s absolutely no way that the ingress VTEP could tell the host that the packet is too big. In a layer-3 network you have at least a fighting chance…

For more details, watch the Switching, Routing and Bridging part of How Networks Really Work webinar (most of it available with Free Subscription).