Category: CEF

Interactions between IP routing and QoS

One of my readers sent me an interesting question a while ago:

I reviewed one of your blog posts "Per-Destination or Per Packet CEF Load Sharing?" and wondered if you had investigated previously on how MQC QoS worked together with the CEF load-sharing algorithm (or does it interact at all)? For example, let's say I have two equal cost paths between two routers and the routing table (as well as CEF) sees both links as equal paths to the networks behind each router. On each link I have the same outbound service policy applied with a simple LLQ, BW, and a class-default queues. Does CEF check each IP flow and make sure both link's LLQ and BW queues are evenly used?

Unfortunately, packet forwarding and QoS are completely uncoupled in Cisco IOS. CEF performs its load balancing algorithm purely on source/destination information and does not take into account the actual utilization of outbound interfaces. If you have bad luck, most of the traffic ends up on one of the links and packets that would easily fit on the other link are dropped by the QoS mechanisms.

You could use multilink PPP to solve the problem in low-speed environments. With MLPPP, CEF sends the traffic to a single output interface (the Multilink interface) and the queuing mechanisms evenly distribute packet fragments across the links in the bundle.

In high-speed environments, you can only hope that the number of traffic flows traversing the links will be so high that you’ll get a good statistical distribution (which is usually the case).
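To see why a large number of flows helps, here is a minimal Python sketch. It assumes a stand-in hash (SHA-256 over the address pair, not the real CEF algorithm), made-up addresses and equally sized flows; it only illustrates how the per-link share settles as the flow count grows.

```python
import hashlib

def pick_link(src, dst, n_links):
    # Stand-in for a per-destination (source/destination) hash; NOT the CEF algorithm.
    digest = hashlib.sha256(f"{src}>{dst}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_links

def link_shares(n_flows, n_links=2):
    # Fraction of flows landing on each link, assuming every flow carries equal load.
    counts = [0] * n_links
    for i in range(n_flows):
        src = f"10.0.{i // 250}.{i % 250 + 1}"   # hypothetical client addresses
        counts[pick_link(src, "192.0.2.10", n_links)] += 1
    return [c / n_flows for c in counts]

for n in (4, 40, 4000):
    print(n, link_shares(n))
```

With only a handful of flows the split can be badly skewed; with thousands it should hover close to even, which is the statistical effect the paragraph above relies on.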

EBGP Multipath Load Sharing and CEF

When I was discussing the details of the BGP troubleshooting video with one of my readers, he pointed out that I should mention the need for CEF switching in EBGP multipath scenarios. My initial response was “Why would you need CEF? EBGP multipath is older than CEF” and his answer told me I should turn on my gray cells before responding to emails: “Your video as well as Cisco’s web site recommends CEF for EBGP multipath design… but interestingly, it does work without CEF”.

The real reason we need CEF in EBGP load sharing designs is the efficacy of load distribution. Without CEF, the router will send all traffic toward a single BGP prefix over one of the links (fast switching performs per-destination-prefix load sharing). With CEF, the load is distributed based on the source-destination IP address pair combinations. Even if multiple clients send the traffic toward the same server, the load is spread across available links.
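The difference can be sketched in a few lines of Python. The hash function, the addresses and the prefix below are assumptions made for illustration; the sketch only models "one link per destination prefix" versus "one link per source-destination pair", not the actual IOS code paths.

```python
import hashlib

def stable_hash(text):
    # Deterministic stand-in hash (an assumption, not what IOS uses).
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:4], "big")

def per_prefix_link(prefix, n_links):
    # Fast-switching model: every packet toward the prefix uses the same link.
    return stable_hash(prefix) % n_links

def per_pair_link(src, dst, n_links):
    # CEF model: the link depends on the source-destination address pair.
    return stable_hash(f"{src}>{dst}") % n_links

clients = [f"10.0.0.{i}" for i in range(1, 201)]   # hypothetical clients
server, prefix = "192.0.2.10", "192.0.2.0/24"      # hypothetical BGP prefix

prefix_counts = [0, 0]
pair_counts = [0, 0]
for client in clients:
    prefix_counts[per_prefix_link(prefix, 2)] += 1
    pair_counts[per_pair_link(client, server, 2)] += 1

print("per-prefix:", prefix_counts)
print("per-pair:  ", pair_counts)
```

In the per-prefix model one of the two counters stays at zero regardless of how many clients there are, while the per-pair model puts clients on both links.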

Load balancing quirks

This article is part of You've asked for it series.

IOS scheduling parameters

Peter Weymann sent me a really intriguing question:

A few days ago I started reading the Ciscopress book End-to-End Network Security: Defense-in-Depth and stumbled over the scheduler command. This one could be used to allocate time that the cpu spends on fast switching packets or process switching packets, if I understand it correctly. They also mention interrupting CPU processes but honestly I don't really understand how it works.

Cisco routers support (at least) three forms of layer-3 switching (formerly known as routing). CEF switching and fast switching are performed entirely within the interrupt context (the I/O adapter interrupts the process the CPU is currently executing and all the work is done before the process resumes). Process switching is performed in two steps: the packet is briefly analysed within the interrupt context and requeued into the IP Input process where it's eventually switched. Almost all I/O adapters used these days use a concept of RX/TX rings to communicate with the CPU, meaning that the CPU potentially has to handle more than one packet for each interrupt.

Fast switching is gone starting with IOS release 12.4(20)T.

Under very high load, the packet arrival rate could be so high that the router would constantly service packets within the interrupt context without ever returning back to the IOS processes.

You can check the CPU load incurred by the interrupt context and IOS processes with the show process cpu command. The second number in the five seconds part of the first line tells you the amount of interrupt context activity in the last five seconds.
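If you collect that output with a script, the split is easy to extract. The following Python sketch parses the first line of the output; the sample values are made up, and the function and key names are my own.

```python
import re

# First line of the CPU utilization output: "<total>%/<interrupt>%" for five seconds.
CPU_LINE = re.compile(
    r"CPU utilization for five seconds: (\d+)%/(\d+)%; "
    r"one minute: (\d+)%; five minutes: (\d+)%"
)

def parse_cpu_line(line):
    match = CPU_LINE.search(line)
    if match is None:
        raise ValueError("not a CPU utilization line")
    total, interrupt, one_min, five_min = map(int, match.groups())
    return {
        "total_5s": total,                 # overall CPU load
        "interrupt_5s": interrupt,         # time spent in the interrupt context
        "process_5s": total - interrupt,   # what is left for IOS processes
        "one_minute": one_min,
        "five_minutes": five_min,
    }

# Sample line with invented numbers
sample = "CPU utilization for five seconds: 45%/30%; one minute: 20%; five minutes: 15%"
print(parse_cpu_line(sample))
```

A high second number combined with a small difference between the two is the pattern to watch for: the router is busy switching packets and the IOS processes get little CPU time.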

To prevent the starvation of IOS processes (which could result in keepalive and routing protocol problems, eventually leading to loss of routing protocol neighbors), the scheduler allocate command limits the amount of time that can be spent in the interrupt context and allocates some guaranteed time to the IOS processes. Very probably the routers have a mechanism to mask the requests from the I/O adapters during that period so that the CPU is not interrupted (BTW, this slightly increases the jitter).

A similar command is the scheduler interval command. IOS has high- and low-priority processes. Whenever the CPU has to decide which process to run (usually following an interrupt or when a process decides it's done with its work), it will run a high-priority process if one is ready. This could lead to starvation of low-priority processes, and the scheduler interval command specifies the maximum amount of time the higher-priority processes can consume before a low-priority process is given a chance to run.

Unless you have serious (and I mean __serious__) problems in your network, don't play with these commands. They are last-resort measures you can use if you're under very heavy load and still need access to the exec to reconfigure the router. In most cases, you should not have to worry ... and if the CPU load is close to 100%, you have other problems anyway.

Apart from the Inside Cisco IOS Software Architecture book that you absolutely must have if you're interested in a (slightly outdated) view of the internals of Cisco IOS, you can get more information in these documents:

- Router performance tuning by Rob Thomas

- Troubleshooting router hangs from Cisco Systems

Debugging cached CEF adjacencies

Alternatively, you can use the debug arp adjacency command, but you cannot limit its output with an access-list.

CEF accounting

The "How could we figure out if any traffic uses the default route" challenge was obviously too easy; a number of readers quickly realized that the CEF accounting can do what we need (and I have to admit I've completely missed it).

However, when I started to explore the various CEF accounting features, it turned out the whole thing is not as simple as it looks. To start with, the ip cef accounting global configuration command configures three completely unrelated accounting features: per-prefix accounting (that we need), traffic matrix accounting (configured with the non-recursive keyword) and prefix-length accounting.

The per-prefix accounting is the easiest one to understand: every time a packet is forwarded through a CEF lookup, the counters attached to the CEF prefix entry are increased. To clear the CEF counters, you can use the clear ip cef address prefix-statistics command. The per-prefix counters are also lost when the IP prefix is removed from the CEF table (for example, because it temporarily disappears from the IP routing table during network convergence process). The CEF per-prefix accounting is thus less reliable than other accounting mechanisms (for example, IP accounting).

Note: The CEF per-prefix counters are always present; if the CEF per-prefix accounting is not configured, they simply remain zero.

Last but not least, you don't need the detail keyword if you want to display the CEF accounting data for a particular prefix. The show ip cef address mask command is enough. And, finally, if you're running IOS release 12.2SB or 12.2XN, you can inspect the CEF counters with SNMP.

ARP entries are periodically refreshed if you use CEF switching

In a previous post I wrote about the inability to clear the ARP cache due to cached CEF adjacencies. As it turns out, this behavior has another side effect: the router will automatically refresh all ARP entries (and CEF adjacencies) as they expire from the ARP cache. This might become a problem on high-end devices with a lot of directly connected hosts if you set the arp timeout to a low value.

What is a cached CEF adjacency?

Whenever a router running CEF switching has LAN interfaces (or any other multi-access interfaces), you'll find cached adjacencies for active directly attached IP neighbors in its CEF table. These adjacencies ensure the smooth traffic flow toward the LAN-attached next-hops (preventing the initial packet drop symptom once the next-hop becomes active).

Unequal Cost Load-Sharing with MPLS TE

One of the most commonly asked load-sharing-related questions is “can I load-share traffic across unequal-cost links?”. In general, the answer is no. In order to load-share the traffic, you need more than one path to the destination and the only way to get multiple routes toward a destination in the IP routing table is to make them equal-cost (the only notable exception being EIGRP that supports unequal-cost load-sharing with the variance parameter).

There are, however, two cases where you can force unequal traffic split across equal-cost paths toward a destination: when using inter-AS BGP with the link bandwidth parameter, and when using unequal-bandwidth traffic-engineering tunnels.
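One way to picture an unequal split is a fixed set of hash buckets handed out in proportion to the configured bandwidths. The Python sketch below assumes 16 buckets and hypothetical tunnel names and bandwidth values; it illustrates the idea and is not a description of the IOS implementation.

```python
def allocate_buckets(bandwidths, n_buckets=16):
    # Largest-remainder allocation of hash buckets in proportion to bandwidth.
    # n_buckets=16 is an assumption of this sketch.
    total = sum(bandwidths.values())
    exact = {name: bw * n_buckets / total for name, bw in bandwidths.items()}
    alloc = {name: int(share) for name, share in exact.items()}
    leftover = n_buckets - sum(alloc.values())
    # Give the remaining buckets to the paths with the largest fractional part.
    by_remainder = sorted(exact, key=lambda name: exact[name] - alloc[name], reverse=True)
    for name in by_remainder[:leftover]:
        alloc[name] += 1
    return alloc

# Hypothetical 3:1 bandwidth ratio between two TE tunnels
print(allocate_buckets({"Tunnel1": 3000, "Tunnel2": 1000}))
# → {'Tunnel1': 12, 'Tunnel2': 4}
```

Flows are still hashed per source-destination pair; the path that owns more buckets simply receives a proportionally larger share of them.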

CEF punted packets

- If the destination is reachable over an interface that cannot use CEF-switching due to a feature not supported by CEF (for example, X.25 link), the packet has to be fast- or process-switched (these destinations are easily discovered by inspecting the punt adjacencies).

- All packets destined for the router itself are process switched (thus punted).

- If the router needs to reply back to the source with an ICMP packet (redirect, unreachable ...), the reply can be generated only in the process-switching path.

- All packets with the IP options are punted to process switching.

- Fragments that have to be processed by the router are also process-switched.
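The conditions in the list above can be summarized as a simple decision function. This is a toy Python model with hypothetical field names; it only restates the list and does not reflect how IOS makes the decision internally.

```python
from dataclasses import dataclass

@dataclass
class Packet:
    # Hypothetical flags describing what the router knows about a packet.
    egress_supports_cef: bool = True
    destined_to_router: bool = False
    needs_icmp_reply: bool = False
    has_ip_options: bool = False
    fragment_for_router: bool = False

def punt_reasons(pkt):
    # Return every reason the packet would leave the CEF path (empty list = CEF-switched).
    checks = [
        (not pkt.egress_supports_cef, "egress interface feature not supported by CEF"),
        (pkt.destined_to_router, "packet destined for the router itself"),
        (pkt.needs_icmp_reply, "ICMP reply must be generated"),
        (pkt.has_ip_options, "IP options present"),
        (pkt.fragment_for_router, "fragment has to be processed by the router"),
    ]
    return [reason for hit, reason in checks if hit]

print(punt_reasons(Packet()))                      # → []
print(punt_reasons(Packet(has_ip_options=True)))   # → ['IP options present']
```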

This article is part of You've asked for it series.

CEF punt adjacency

In "border cases" you might find interesting CEF adjacencies in your CEF adjacency table (displayed with show ip cef adjacency). The most common one is the glean adjacency used for directly connected routes (this adjacency type is a placeholder that tells the router it should perform the ARP table lookup and send the packet to the directly connected neighbor). Discard, Drop, Noroute and Null adjacencies are obvious; the "weird" one is the Punt adjacency, which indicates that the router cannot CEF-switch the packet toward the destination (due to a feature being used that is not yet supported by CEF), so the packet is punted to the next switching method (fast switching and ultimately process switching).

Per-Port CEF Load Balancing

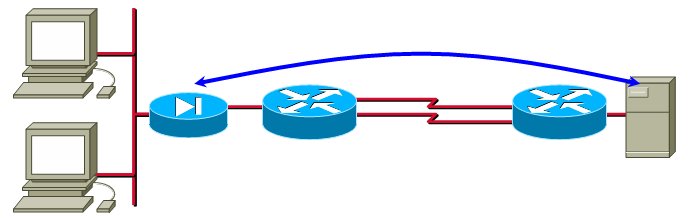

In designs with a very low number of IP hosts, no per-destination load-sharing algorithm will work adequately. Consider, for example, an extranet design where a large number of IP hosts are NAT-ed to a single IP address which then accesses a single remote server.

In this design, all the traffic flows between a single pair of IP addresses, making per-destination load-sharing unusable.
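A short Python sketch shows why adding layer-4 ports to the hash input helps here. The hash is a stand-in (SHA-256, not the CEF algorithm) and the addresses and port numbers are invented.

```python
import hashlib

def bucket(key, n_links):
    # Deterministic stand-in hash; an assumption of this sketch.
    digest = hashlib.sha256(key.encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_links

def spread(flows, n_links, include_ports):
    # Count sessions per link, hashing on addresses only or on addresses plus ports.
    counts = [0] * n_links
    for src, sport, dst, dport in flows:
        key = f"{src}>{dst}"
        if include_ports:
            key += f":{sport}>{dport}"
        counts[bucket(key, n_links)] += 1
    return counts

# 200 sessions between one NAT-ed address and one server, differing only in source port
flows = [("198.51.100.1", 20000 + i, "203.0.113.10", 443) for i in range(200)]
print("addresses only:", spread(flows, 2, include_ports=False))
print("with ports:    ", spread(flows, 2, include_ports=True))
```

With addresses only, every session produces the same hash input and lands on one link; once the ports are part of the input, the sessions are spread across both links.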

Per-destination or per-packet CEF load sharing?

To configure per-packet load-sharing, use the ip load-sharing per-packet interface configuration command (default is per-destination). This command has to be configured on all outgoing interfaces over which the traffic is load-shared.

The switch between the load-sharing modes is not immediate; sometimes you have to wait a few seconds for the ip load-sharing command to take effect, and in the worst case you have to clear the CEF table manually (clear ip cef address).

Which switching path does an IOS feature use

I've got an excellent question recently: Which switching path is used in Zone-based firewalls when a packet is dropped? As usual, IOS documentation was not very helpful (which is understandable as the answer might depend on hardware platform, interface encapsulation, other features configured on the router etc.). However, there is a great tool to use - the show interface stats command.

Fine-Tuning CEF Load Balancing

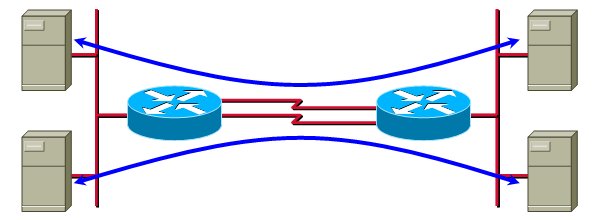

In environments with a low number of IP hosts you have to fine-tune the CEF load-sharing algorithm to ensure that the traffic is spread between all parallel paths. A typical scenario is a primary-backup data center setup with pairs of replicating servers, as shown in the figure.

In these cases, you have to try different values of the seed parameter of the CEF universal algorithm.
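The trial-and-error process can be sketched offline. The Python below assumes a seeded stand-in hash (SHA-256 over the seed and the address pair), four hypothetical replicating server pairs and two parallel links; the real CEF hash differs, so a seed found this way does not carry over to a router.

```python
import hashlib

def link_for(src, dst, seed, n_links):
    # Seeded stand-in hash; NOT the CEF universal algorithm.
    digest = hashlib.sha256(f"{seed}|{src}>{dst}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_links

def spread(pairs, seed, n_links):
    counts = [0] * n_links
    for src, dst in pairs:
        counts[link_for(src, dst, seed, n_links)] += 1
    return counts

def find_balanced_seed(pairs, n_links, candidates):
    # Return the first seed whose per-link counts differ by at most one, else None.
    for seed in candidates:
        counts = spread(pairs, seed, n_links)
        if max(counts) - min(counts) <= 1:
            return seed
    return None

# Four hypothetical server pairs replicating between two data centers
pairs = [(f"10.1.1.{i}", f"10.2.1.{i}") for i in range(1, 5)]
seed = find_balanced_seed(pairs, 2, range(1, 1001))
print(seed, spread(pairs, seed, 2) if seed is not None else None)
```

On a real router the equivalent loop is manual: change the seed, check how the server pairs are distributed across the links, and repeat until the spread is acceptable.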