Video: Cloud Infrastructure-as-Code

With AWS re:Invent 2022 being just a few days away, it’s time for another cloudy Friday video: using infrastructure-as-code principles to provision public cloud resources by Matthias Luft (part of Introduction to Cloud Computing webinar).

Azure Networking Update (Phase 1)

Last week I completed the first part of the annual Azure Networking update. The Azure Firewall section is already online; hope you’ll find it useful. I already have the materials for the Private Link and Gateway Load Balancer services, but haven’t decided whether to schedule another live session to cover them, or just create a short video.

Then there are a half-dozen smaller things I found while processing a year worth of Azure networking News. You’ll find them (and links to documentation) in New Azure Services and Features document.

Integrated Routing and Bridging (IRB) Design Models

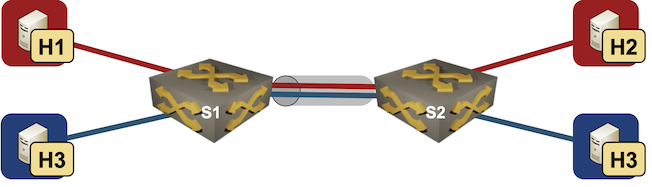

Imagine you built a layer-2 fabric with tons of VLANs stretched all over the place. Now the users want to exchange traffic between those VLANs, and the obvious question is: which devices should do layer-2 forwarding (bridging) and which ones should do layer-3 forwarding (routing)?

There are four typical designs you can use to solve that challenge:

- Exchange traffic between VLANs outside of the fabric (edge routing)

- Route on core switches (centralized routing)

- Route on ingress (asymmetric IRB)

- Route on ingress and egress (symmetric IRB)

This blog post is an overview of the design models; we’ll cover each design in a separate blog post.

Network Automation: a Service Provider Perspective

Antti Ristimäki left an interesting comment on Network Automation Considered Harmful blog post detailing why it’s suboptimal to run manually-configured modern service provider network.

I really don’t see how a network any larger and more complex than a small and simple enterprise or campus network can be developed and engineered in a consistent manner without full automation. At least routing intensive networks might have very complex configurations related to e.g. routing policies and it would be next to impossible to configure them manually, at least without errors and in a consistent way.

netlab: IRB with Anycast Gateways

netlab release 1.4 added support for static anycast gateways and VRRP. Today we’ll use that functionality to add anycast gateways to the VLAN trunk lab:

Lab topology

We’ll start with the VLAN trunk lab topology and make the following changes:

Worth Reading: Resolverless DNS

Geoff Huston published a lengthy article (as always) describing talks from recent OARC meeting, including resolver-less DNS and DNSSEC deployment risks.

Definitely worth reading if you’re at least vaguely interested in the technology that supposedly causes all network-related outages (unless it’s BGP, of course)

Worth Reading: Another Hugo-Based Blog

Bruno Wollmann migrated his blog post to Hugo/GitHub/CloudFlare (the exact toolchain I’m using for one of my personal web sites) and described his choices and improved user- and author experience.

As I keep telling you, always make sure you own your content. There’s absolutely no reason to publish stuff you spent hours researching and creating on legacy platforms like WordPress, third-party walled gardens like LinkedIn, or “free services” obsessed with gathering visitors’ personal data like Medium.

Video: Exposing Kubernetes Services to External Clients

After a brief introduction of Kubernetes service and an overview of services types, Stuart Charlton added the last missing bit: how do you expose Kubernetes services to external clients.

Multihoming Cannot Be Solved within a Network

Henk made an interesting comment that finally triggered me to organize my thoughts about network-level host multihoming1:

The problems I see with routing are: [hard stuff], host multihoming, [even more hard stuff]. To solve some of those, we should have true identifier/locator separation. Not an after-thought like LISP, but something built into the layer-3 addressing architecture.

Proponents of various clean-slate (RINA) and pimp-my-Internet (LISP) approaches are quick to point out how their solution solves multihoming. I might be missing something, but it seems like that problem cannot be solved within the network.

BGP in ipSpace.net Design Clinic

The ipSpace.net Design Clinic has been running for a bit over than a year. We covered tons of interesting technologies and design challenges, resulting in over 13 hours of content (so far), including several BGP-related discussions:

- BGP route servers

- Redundant BGP-Based Internet Access

- Secure BGP Configuration on Customer Routers

- Enterprise WAN Routing Design

All the Design Clinic discussions are available with Standard or Expert ipSpace.net Subscription, and anyone can submit new design/discussion challenges.

BGP Unnumbered Duct Tape

Every time I mention unnumbered BGP sessions in a webinar, someone inevitably asks “and how exactly does that work?” I always replied “gee, that’s a blog post I should write one of these days,” and although some readers might find it long overdue, here it is ;)

We’ll work with a simple two-router lab with two parallel unnumbered links between them. Both devices will be running Cumulus VX 4.4.0 (FRR 8.4.0 container generates almost identical printouts).

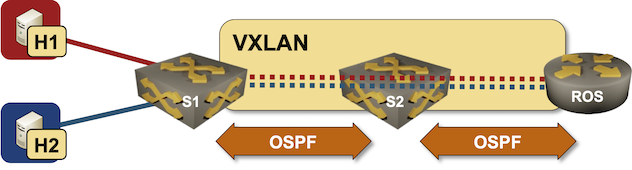

netlab VXLAN Router-on-a-Stick Example

In October 2022 I described how you could build a VLAN router-on-a-stick topology with netlab. With the new features added in netlab release 1.41 we can do the same for VXLAN-enabled VLANs – we’ll build a lab where a router-on-a-stick will do VXLAN-to-VXLAN routing.

Lab topology

Worth Reading: History of Fiber Optics Cables

Geoff Huston published a fantastic history of fiber optics cables, from the first (copper) transatlantic cable to 2.2Tbps coherent optics. Have fun!

Video: Routing Protocols Overview

After discussing network addressing and switching, routing, and bridging in the How Networks Really Work webinar, it was high time for a deep dive into routing protocols, starting (as always) with an overview.

BGP Route Reflectors in the Forwarding Path

Bela Varkonyi left two intriguing comments on my Leave BGP Next Hops Unchanged on Reflected Routes blog post. Let’s start with:

The original RR design has a lot of limitations. For usual enterprise networks I always suggested to follow the topology with RRs (every interim node is an RR), since this would become the most robust configuration where a link failure would have the less impact.

He’s talking about the extreme case of hierarchical route reflectors, a concept I first encountered when designing a large service provider network. Here’s a simplified conceptual diagram (lines between boxes are physical links as well as IBGP sessions between loopback interfaces):