IPv6 Address Assignment and Tracking

One of the significant challenges of IPv6 is host address assignment and tracking (for logging and auditing reasons), more so if you use SLAAC or (even worse) SLAAC privacy extensions. Not surprisingly, Eric Vyncke and I spent significant time addressing this topic in the IPv6 Security webinar.
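To illustrate why SLAAC makes tracking harder, here is a minimal Python sketch. It is not taken from the webinar. It assumes the classic modified EUI-64 interface identifier, where the MAC address is embedded in the lower 64 bits of the address. The function names, the documentation prefix and the sample MAC address are all made up for the example.

```python
import ipaddress
from typing import Optional


def eui64_interface_id(mac: str) -> int:
    """Build a modified EUI-64 interface identifier from a 48-bit MAC address."""
    octets = bytes(int(part, 16) for part in mac.replace("-", ":").split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    # Flip the universal/local bit and insert ff:fe between the two MAC halves.
    eui = bytes([octets[0] ^ 0x02]) + octets[1:3] + b"\xff\xfe" + octets[3:]
    return int.from_bytes(eui, "big")


def slaac_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the EUI-64 interface identifier."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("SLAAC expects a /64 prefix")
    return net[eui64_interface_id(mac)]


def mac_from_address(address: str) -> Optional[str]:
    """Recover the MAC address if the address looks EUI-64 based, else None."""
    low64 = int(ipaddress.IPv6Address(address)) & ((1 << 64) - 1)
    iid = low64.to_bytes(8, "big")
    if iid[3:5] != b"\xff\xfe":
        return None  # privacy or otherwise opaque identifier: no MAC to recover
    mac = bytes([iid[0] ^ 0x02]) + iid[1:3] + iid[5:]
    return ":".join(f"{octet:02x}" for octet in mac)


if __name__ == "__main__":
    addr = slaac_address("2001:db8:1:2::/64", "00:1b:21:3c:4d:5e")
    print(addr)
    print(mac_from_address(str(addr)))
    print(mac_from_address("2001:db8:1:2:a1b2:c3d4:e5f6:1234"))
```

With EUI-64 addresses you can map a logged address back to a MAC address. With privacy extensions the interface identifier is randomized, so the last call returns nothing useful and you have to collect the address-to-MAC mappings some other way, for example from the neighbor caches of first-hop routers.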

Commented on July 9, 2022

Fallacies of GUI

I love Greg Ferro’s characterization of CLI:

We need to realise that the CLI is a “power tools” for specialist tradespeople and not a “knife and fork” for everyday use.

However, you do know that most devices’ GUI offers nothing more than what CLI does, don’t you? Where’s the catch?

Summer seems to have arrived

The current weather around Central Europe doesn’t exactly support this conclusion, but I do get many more “I’m on vacation” responses than usual, so it’s time to reduce the blogging frequency to keep your RSS reader from overloading (you did switch from Google Reader to something like Feedly, didn’t you?).

However, if you’re looking for some really heavy reading, do pick up The Hidden Reality and explore various multiverse proposals. There’s also a beach-friendly version of multiverse discussion: The Long Earth by the one-and-only Terry Pratchett.

Data Center Fabrics Built with Plexxi Switches

During the recent Data Center Fabrics Update webinar, Dan Backman from Plexxi explained how their innovative use of CWDM technology and controller-assisted forwarding simplifies deployment and growth of reasonably sized data center fabrics.

I would highly recommend that you watch the video – the start is a bit short on details, but he does cover all the juicy aspects later on.

Real-Life SDN/OpenFlow Applications

NEC and a slew of its partners demonstrated an interesting next step in the SDN saga at Interop Las Vegas 2013: multi-vendor SDN applications. Load balancing, orchestration and security solutions from A10, Silver Peak, Red Hat and Radware were happily cooperating with the ProgrammableFlow controller.

A curious mind obviously wants to know what’s behind the scenes. Masterpieces of engineering? Large integration projects ... or is it just a smart application of API glue? In most cases, it’s the latter. Let’s look at the ProgrammableFlow – Radware integration.

The Tools That I Use (Webinars)

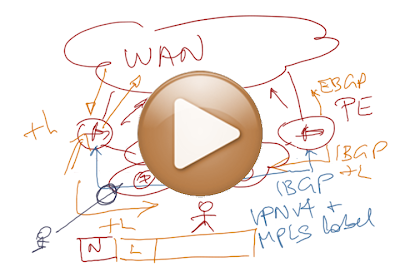

Andreas was watching my recent Enterasys DCI webinar and got intrigued by the quick hand drawings I made, so he asked me “What kind of tool do you use to make the hand drawings during your presentations? It must be something different than a mouse.”

In case you weren’t watching one of my recent webinars, here’s a sample to get you started:

CLI and API Myths

Greg Ferro published a great blog post explaining why he decided to use node.js to build his cloud automation platform. While I agree with most things he wrote, this one rubbed me the wrong way:

In my view, an Application Programmable Interface(API) is the fundamental change that makes Software Defined Networking (SDN) a “thing”. We need to realise that the CLI is a “power tools” for specialist tradespeople and not a “knife and fork” for everyday use.

While I agree with his view on CLI, keep in mind that an API is no different.

EIBGP Load Balancing

The next small step in my MPLS/VPN update project: EIBGP load balancing. Why is it useful, how does it work, why can’t you use it without full-blown MPLS/VPN, and what are the alternatives?

MPLS/VPN Carrier’s Carrier – Myth or Reality?

Andrew is struggling with MPLS/VPN providers and sent me the following question:

Is "carriers carrier" a real service? I'm having a bit of an issue at the moment with too many MPLS providers […] Carrier’s carrier would be an answer to many of them, but none of the carriers admit to being able to do this, so I was wondering if it's simply that I'm speaking to the wrong people, or whether they really don't...

Short answer: I have yet to see this particular unicorn roaming the meadows of reality.

Arista EOS Virtual ARP (VARP) Behind the Scenes

In the Optimal L3 Forwarding with VARP and Active/Active VRRP blog post I made a remark along the lines of “Things might get nasty [in Arista EOS Virtual ARP world] if you have configuration mismatches”, resulting in a lengthy and amazingly insightful email exchange with Lincoln Dale during which we ventured deeper and deeper down the Virtual ARP (VARP) rabbit hole. Here’s what I learned during our trip:

Implementing Control-Plane Protocols with OpenFlow

The true OpenFlow zealots would love you to believe that you can drop whatever you’ve been doing before and replace it with a clean-slate solution using the dumbest (and cheapest) possible switches and OpenFlow controllers.

In the real world, your shiny new network has to communicate with the outside world … or you could take the approach most controller vendors did, decide to pretend STP is irrelevant, and ask people to configure static LAGs because you’re also not supporting LACP.

Network Virtualization and Spaghetti Wall

I was reading What Network Virtualization Isn’t from Jon Onisick the other day and started experiencing all sorts of unpleasant flashbacks caused by my overly long exposure to networking industry missteps and dead ends touted as the best possible solutions or architectures in the days of their glory:

Will SPDY Solve Web Application Performance Issues?

In the TCP, HTTP and SPDY webinar I described the web application performance roadblocks caused by TCP and HTTP, and the HTTP improvements that remove most of them. Google went a step further and created SPDY, a totally redesigned HTTP. What is SPDY? Is it really the final solution? How much does it help? Hopefully you’ll find answers to some of these questions in the last part of the webinar.

The Difference between Access Lists and Prefix Lists

A while ago someone asked on the Network Engineering Stack Exchange web site what the difference between access lists and prefix lists is. The site is a fantastic resource brought to life primarily by the sheer persistence of Jeremy Stretch, who had to fight droves of naysayers with somewhat limited insight claiming that everything one would want to discuss about networking belongs on the server administration web site.

The question triggered a lengthy wander down memory lane … and here's the history of how the two came into being (and why they are the way they are).

Commented on July 10, 2022

Response: SDN’s Casualties

An individual focused more on sensationalism than content deemed it appropriate to publish an article declaring networking engineers an endangered species on an industry press web site that I considered somewhat reliable in the past.

The resulting flurry of expected blog posts included an interesting one from Steven Iveson in which he made a good point: it’s easy for the cream-of-the-crop not to be concerned, but what about others lower down the pile? As always, it makes sense to do a bit of a reality check.