Can We Skip the Network Layer?

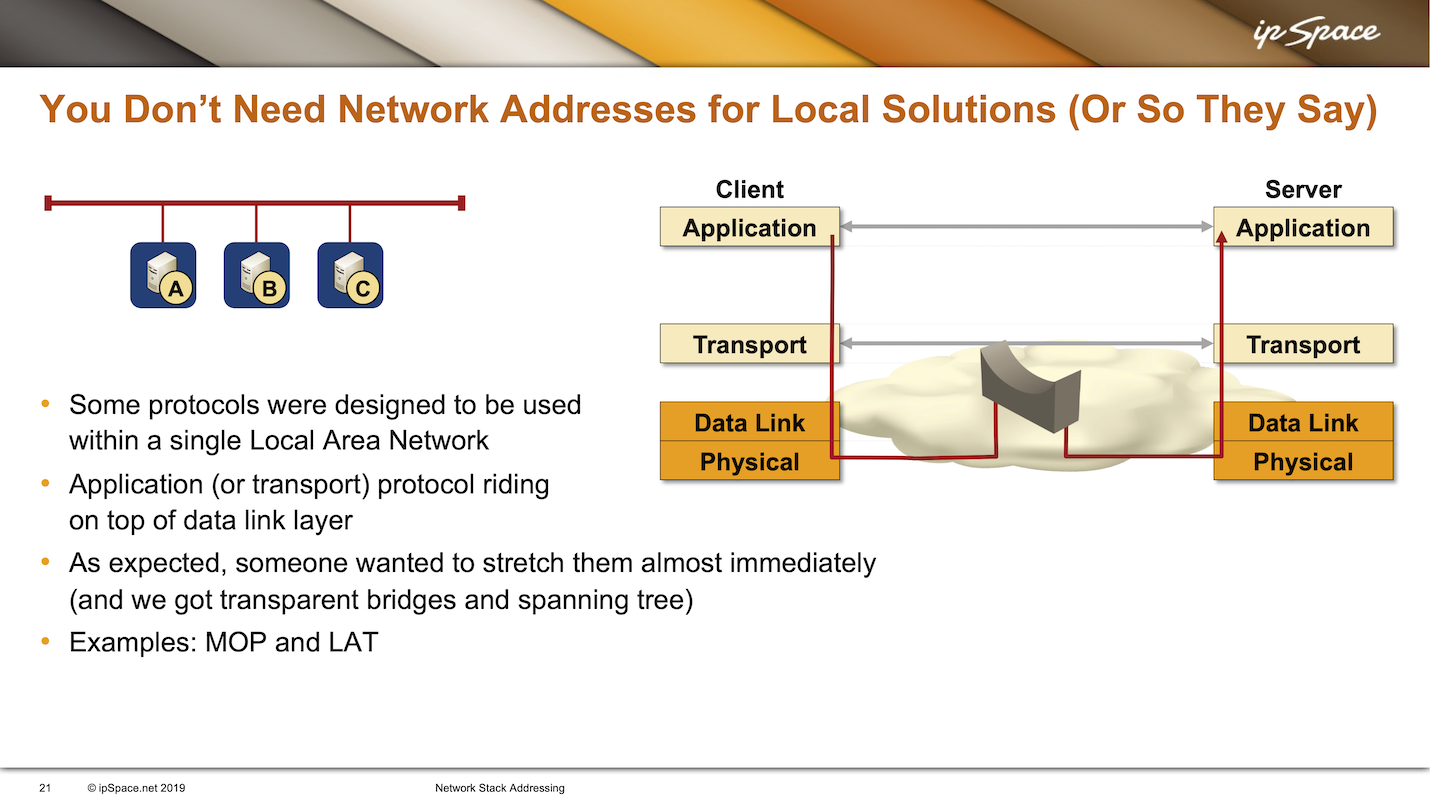

I mentioned that you don’t need node addresses when dealing with only two entities. Now and then, someone tries to extend this concept and suggests that the network layer addressing isn’t needed if the solution is local. For instance, if we have a solution that is supposed to run only on a single Ethernet segment, we don’t need network layer addressing because we already have data link layer addresses required for Ethernet to work (see also: ATAoE).

Too often in the past, an overly ingenious engineer or programmer got the idea to simplify everyone’s life and use the data link layer addresses as the ultimate addresses of individual nodes. They would then put the transport layer on top of that to get reliable packet transport. Finally, they would put whatever application they needed on top of the transport layer. Problem solved.
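To make the "transport straight on top of Ethernet" idea concrete, here is a minimal sketch of what such a frame could look like. It is not LAT or any other real protocol: the four-byte transport header (sequence number plus payload length) and the function names are made up for illustration, and the EtherType is one of the values IEEE reserves for local experiments. Notice that the MAC addresses are the only addresses in the frame, so nothing in it tells a router where to send it beyond the local segment.

```python
import struct

# 0x88B5 is an EtherType IEEE reserves for local experimental use.
# The transport header layout below is hypothetical, invented for this sketch.
ETHERTYPE_LOCAL_EXPERIMENTAL = 0x88B5


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:00:00:01' into its 6-byte wire representation."""
    return bytes.fromhex(mac.replace(":", ""))


def build_frame(dst_mac: str, src_mac: str, seq: int, payload: bytes) -> bytes:
    """Ethernet II header followed directly by a toy transport header."""
    ethernet_header = (
        mac_to_bytes(dst_mac)
        + mac_to_bytes(src_mac)
        + struct.pack("!H", ETHERTYPE_LOCAL_EXPERIMENTAL)
    )
    # Toy transport header: 16-bit sequence number, 16-bit payload length.
    transport_header = struct.pack("!HH", seq, len(payload))
    return ethernet_header + transport_header + payload


def parse_frame(frame: bytes) -> dict:
    """Split a frame built by build_frame() back into its fields."""
    (ethertype,) = struct.unpack("!H", frame[12:14])
    seq, length = struct.unpack("!HH", frame[14:18])
    return {
        "dst": frame[0:6].hex(":"),
        "src": frame[6:12].hex(":"),
        "ethertype": ethertype,
        "seq": seq,
        "payload": frame[18:18 + length],
    }


frame = build_frame("aa:bb:cc:00:00:01", "aa:bb:cc:00:00:02", 7, b"hello")
fields = parse_frame(frame)
```

The sketch only builds and parses bytes; actually sending such a frame would need a raw socket and elevated privileges, which is left out here.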

Digital Equipment Corporation (DEC) was very fond of this idea. One reason they wanted to eliminate the network layer was the complexity of DECnet, their network-layer protocol. A proper DECnet implementation would consume too much memory and too many CPU cycles in low-end 16-bit nodes like PCs or (in their case) terminal servers.

Someone came up with this “great” idea that they could design a dedicated protocol (LAT, Local Area Transport) for local-area terminal services. It would sit straight on top of Ethernet, making everyone’s life easier. They even had another protocol called MOP (Maintenance Operations Protocol) that you could use to connect to a terminal server or download software to it. MOP was an early equivalent of PXE boot.

As expected, things didn’t end well. They hit the wall when the first customer wanted to have two segments with terminal servers accessing the same computer, and the computer was not sitting between the two segments. Someone quickly came up with the idea that they could connect the two segments, but the laws of physics were as harsh in those days as they are now. In those days, a single Ethernet segment could be up to 500 meters long, but you couldn’t connect two of them.

And so someone dropped by Radia Perlman, saying: “Well, Radia, we have this problem. We have these two Ethernet segments, and we need to make them into one segment because, you know, we’ve sold the solution to the customer, and the customer wants to stretch the whole thing. And we can’t do it because Ethernet was not designed that way.”

Supposedly (so the myth goes), over a weekend, she designed transparent bridging and the spanning tree protocol (STP) that detects loops when you’re connecting multiple transparent bridges in a redundant topology. The rest is history, and we’re still dealing with the consequences of someone having a great idea that it makes perfect sense to stretch a single data link layer across longer distances. Virtualization vendors (VMware in particular) went one step further – their marketing departments and consultants keep suggesting that it makes sense to stretch a single segment across the continent and move virtual machines across that stretched VLAN. We’ll leave that sad story for another day, but if you insist, I ranted against it too often.
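For readers who have never looked inside a transparent bridge, the learning-and-forwarding half of the idea mentioned above can be sketched in a few lines. This is a simplified illustration, not Perlman's design or any vendor's implementation: the class and method names are made up, there is no address aging, and there is no spanning tree, so connecting several of these bridges in a loop would flood frames forever (which is precisely the problem STP was invented to solve).

```python
class LearningBridge:
    """Toy transparent bridge: learns source MACs, forwards or floods."""

    BROADCAST = "ff:ff:ff:ff:ff:ff"

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame should be sent out of."""
        # Learn (or refresh) where the sender lives.
        self.mac_table[src_mac] = in_port

        out_port = self.mac_table.get(dst_mac)
        if dst_mac == self.BROADCAST or out_port is None:
            # Broadcast or unknown destination: flood to all other ports.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination is on the segment the frame came from: filter it.
            return []
        return [out_port]


bridge = LearningBridge(ports=[1, 2])
first = bridge.receive(1, "aa:aa:aa:aa:aa:aa", "bb:bb:bb:bb:bb:bb")   # unknown, flooded
reply = bridge.receive(2, "bb:bb:bb:bb:bb:bb", "aa:aa:aa:aa:aa:aa")   # learned, forwarded
local = bridge.receive(1, "cc:cc:cc:cc:cc:cc", "aa:aa:aa:aa:aa:aa")   # same segment, filtered
```

The end stations never see the bridge and keep using MAC addresses as if they were on one wire, which is what makes the approach "transparent" and also what makes it so tempting to stretch.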

Lesson (not) learned: there are sound engineering reasons for separate data-link and network layers in a protocol stack.

Ivan, I cut my teeth as a junior PC tech in the early 90s. My special talent was being able to jockey the config.sys and autoexec.bat on MS-DOS workstations and get all the device drivers to load, plus tweaking the IRQ and memory locations (with DIP switches) on ISA bus Ethernet cards.

At the ripe age of 19, I figured I knew everything in the world there was to know about computer networks and continued to deploy LAT with reckless abandon.

One year later, this new guy shows up who has some experience and explains why I might want to consider deploying TCP/IP rather than LAT. Bah! Why would these networks ever want to talk to anything else?

Oh the hubris of youth...

Oh, the memories you are bringing back with autoexec.bat and the paginated memory article in my magazine...

Ivan, can we just make John Day's Patterns in Network Architecture required reading for anyone that enters the field?

You can agree or disagree with Day's opinion, but at least he makes you think about the underlying issues.

Thinking about the underlying issues is good, and I'm all for having some required reading that would force newcomers to do that.

Unfortunately, the vibe I got from his materials (admittedly presentations, not the book) is more like, "They got it all wrong, but I know how to do it right," and then he goes into applications of RFC 1925 rule 6a (the "R" in RINA). See https://blog.ipspace.net/2022/09/ipv6-worst-decision-ever.html and https://blog.ipspace.net/2022/11/multihoming-within-network.html

Or I'm still missing something important 🤷♂️

I can whole-heartedly recommend Day's book. There is a lot of history, which you are likely 100% familiar with.

But he does hit the nail on the head on why IPv4 doesn't do multi-homing correctly, and he uses only CLNP as an example where they didn't screw that (entirely) up. IPv4 addresses the NIC and CLNP addresses the node. That's a huge difference.

His discourse on layers is great. Everything is a repeating layer with certain attributes that are required for each layer. Everything is inter-process communication (IPC) and just the mechanisms change, depending on the scope.

I especially like his view on what he calls "enrolment". We slapped DHCP onto IPv4 and thought that was a solution. Day explains how enrolment is integral to the layer you are enrolling in because it's integral to how the topology of your layer works!