L2 or L3 switching in campus networks?

Michael sent me an interesting question:

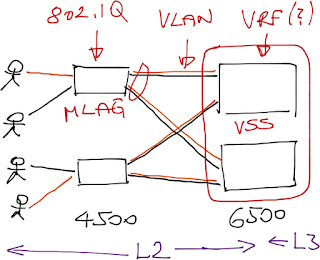

I work in a rather large enterprise facing a campus network redesign. I am in favor of using a routed access for floor LANs, and make Ethernet segments rather small (L3 switching on access devices). My colleagues seem to like L2 switching to VSS (distribution layer for the floor LANs). OSPF is in use currently in the backbone as the sole routing protocol. So basically I need some additional pros and cons for VSS vs Routed Access. :-)

The follow-up questions confirmed he has L3-capable switches in the access layer connected with redundant links to a pair of Cat6500s:

What are the options?

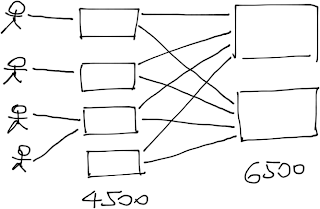

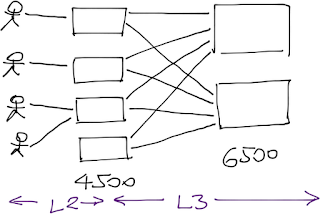

There are two fundamental designs Michael could use:

Layer-3 switching (also known as routing) in the access layer. VLANs would be terminated at the access-layer switch (no user-to-switch redundancy, thus no HSRP), the links between access and distribution layer would be P2P L3 links (routed interfaces) and every single switch would participate in the OSPF routing.
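To get a feel for the addressing work a routed access layer implies, here is a minimal sketch that carves one /30 per access-to-distribution uplink out of a dedicated block. The block `10.255.0.0/24`, the switch names, and the helper `allocate_p2p_links` are all hypothetical; nothing here comes from Michael's network.

```python
import ipaddress

def allocate_p2p_links(block, access_switches, distribution_switches):
    """Assign one /30 from `block` to every access-to-distribution uplink."""
    subnets = ipaddress.ip_network(block).subnets(new_prefix=30)
    plan = []
    for access in access_switches:
        for dist in distribution_switches:
            subnet = next(subnets)  # raises StopIteration if the block runs out
            dist_ip, access_ip = subnet.hosts()  # a /30 has exactly two usable addresses
            plan.append((access, dist, subnet, dist_ip, access_ip))
    return plan

# Hypothetical example: three access switches dual-homed to two distribution switches
plan = allocate_p2p_links("10.255.0.0/24", ["acc-1", "acc-2", "acc-3"], ["dist-a", "dist-b"])
for access, dist, subnet, dist_ip, access_ip in plan:
    print(f"{access} <-> {dist}: {subnet} ({dist}={dist_ip}, {access}={access_ip})")
```

Every one of these subnets becomes a routed interface pair and an OSPF adjacency, which is where the "more complex" label for the L3 design comes from.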

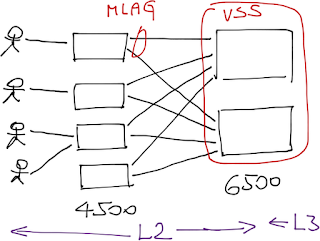

Layer-2 switching (also known as bridging) in the access layer. VLANs would be terminated at the distribution layer; the access layer switches would run as pure bridges. Half of the uplinks would be blocked due to the spanning tree limitations, unless you aggregate them with multi-chassis link aggregation (MLAG), which requires VSS on the Cat6500. You would still run STP with MLAG to prevent forwarding loops due to configuration or wiring errors.

When you configure VSS on Cat6500s, they appear as a single IP device, so yet again you don’t need HSRP.

Which one is better?

Both designs have minor benefits and drawbacks. For example, the L3 design is more complex and results in larger OSPF areas, while the L2 design requires VSS on the Cat6500. The major showstopper is usually the requirement for multiple security zones (for example, users in different departments or guest VLANs).

You might be lucky enough to satisfy the security requirements by installing packet filters in every access VLAN, but more often than not you have to implement path separation throughout the network: for example, the guest VLAN traffic should stay separated from internal traffic.

The proper L3 solution to path separation is full-blown MPLS/VPN with label-based forwarding in the L3 part of the network ... but HP seems to be the only vendor with MPLS/VPN support on low-end A-series switches.

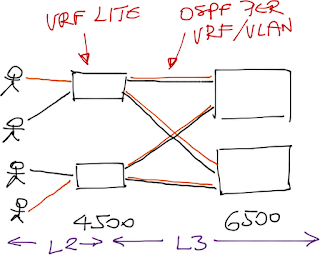

Without MPLS/VPN you’re left with the Multi-VRF kludge (assuming your access layer switches support VRFs; not all do), where you have to create numerous P2P L3 interfaces (using VLANs) between access and core switches. Do I have to mention you have to run a separate copy of OSPF in each VRF instance?
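A rough way to see how quickly this grows is to count the per-VRF objects. The sketch below assumes one VLAN-based P2P subnet and one OSPF adjacency per VRF per uplink; the function name and the sample numbers (40 access switches, two uplinks each) are hypothetical.

```python
def multi_vrf_footprint(access_switches, uplinks_per_switch, vrfs):
    """Count per-VRF P2P subnets, interfaces and OSPF adjacencies in a Multi-VRF design."""
    links = access_switches * uplinks_per_switch
    return {
        "p2p_subnets": links * vrfs,        # one VLAN-based P2P subnet per VRF per uplink
        "l3_interfaces": links * vrfs * 2,  # each subnet needs an interface on both ends
        "ospf_adjacencies": links * vrfs,   # one adjacency per VRF per uplink
        "ospf_processes_per_access_switch": vrfs,
    }

# Hypothetical campus: 40 access switches with two uplinks each
for vrfs in (1, 3, 5):
    print(vrfs, multi_vrf_footprint(40, 2, vrfs))
```

With these assumed numbers, going from one VRF to five takes you from 80 to 400 P2P subnets, and every new security zone touches every uplink in the network.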

Obviously the MultiVRF-based path separation doesn’t scale, so it might be easier to go with the L2 design: terminate VLANs on the Cat6500, where you can use centralized packet filters, VRFs and even MPLS/VPN if you need to retain the path separation across the network core.

Have I missed something?

What are your thoughts? Would you prefer L2 or L3 switching in the access network? Do you believe in “route where you must, bridge where you can” or in “route as much as possible”? Write a comment!

Any relevant webinars?

Sure. Enterprise MPLS/VPN Deployment webinar (recording) describes the path separation challenges and the potential solutions – MultiVRF and MPLS/VPN with label-based forwarding. You’ll also learn about VRF-aware NAT and DHCP (just in case you need them in your network). And if you’re interested in a wider range of topics, you might find the yearly subscription cost effective.

And what are those crazy diagrams?

Greg Ferro has persuaded me that iPad-based drawing has a future. I bought a proper pen (doing it with your fingers will get you kindergarten-grade results), Penultimate software (nothing to do with Penultimate Hop Popping) and started experimenting. Who knows, I just might learn how to do good napkin drawings.

Why? No bullshitting around with the STP, and security concerns with root/bpdu -guard etc and storm-control.

Just OSPF and use passive interfaces by default.

Although the other option "Layer-2 switching (also known as bridging) in the access layer" might be interesting if he has a large number of clients (laptops mostly) who do roaming across the building.

My 2 cents.
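The "passive interfaces by default" idea from this comment could be rendered per access switch along these lines. This is only a sketch: the helper name, the OSPF process ID, and the uplink interface names are hypothetical, and the output covers just the OSPF lines mentioned in the comment, not a complete switch configuration.

```python
def ospf_access_config(process_id, uplinks):
    """Render IOS-style OSPF lines: every interface passive except the routed uplinks."""
    lines = [f"router ospf {process_id}", " passive-interface default"]
    lines += [f" no passive-interface {uplink}" for uplink in uplinks]
    return "\n".join(lines)

# Hypothetical uplink names for a dual-homed access switch
config = ospf_access_config(1, ["TenGigabitEthernet1/0/1", "TenGigabitEthernet1/0/2"])
print(config)
```

User-facing VLAN interfaces then never form adjacencies, and only the two uplinks speak OSPF.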

I can't say that this is the best issue and we are looking forward to transitioning to VSS later, but we hadn't considered using MPLS/VPN. Not sure what the advantage would be.

We rejected layer3 access for several reasons. The main one is that "edge" layer3 switches have very poor feature sets in comparison with bigger boxes - at the time, our concerns included "no multicast in VRF lite on 3750", "no netflow", "no ipv6 in hardware (later introduced on 3750)" as well as "a hell of a lot more routers to configure"

We've actually made edge subnets a lot larger as time has gone by; this helps avoid IP wastage. For example, a not uncommon requirement at our place is 10 floors with maybe 160 regular hosts each, but with a requirement for "bursting" to 50 extra dynamic IPs (e.g. during infrequent meetings) in each location. This needs a /24 each, i.e. the best part of a /19, or I can provision a single big /21. This also means fewer lines in the router config. Fault domain size was a concern so we grew slowly, but we're just not seeing problems, only benefits.
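The arithmetic behind that trade-off can be checked with a few lines. This is a sketch using the numbers from the comment; the `10.0.0.0/21` prefix is a placeholder, gateway addresses are ignored, and it assumes the bursts rarely coincide across floors.

```python
import ipaddress

FLOORS = 10
REGULAR_HOSTS = 160  # per floor
BURST_HOSTS = 50     # extra dynamic IPs per floor during meetings

def smallest_prefix(hosts):
    """Longest IPv4 prefix whose usable host count (size minus 2) covers `hosts`."""
    return 32 - (hosts + 1).bit_length()

# Option 1: one subnet per floor, sized for the worst case on that floor
per_floor_prefix = smallest_prefix(REGULAR_HOSTS + BURST_HOSTS)
per_floor_total = FLOORS * 2 ** (32 - per_floor_prefix)

# Option 2: a single shared /21 (placeholder prefix)
shared = ipaddress.ip_network("10.0.0.0/21")
shared_usable = shared.num_addresses - 2
headroom = shared_usable - FLOORS * REGULAR_HOSTS
simultaneous_bursts = headroom // BURST_HOSTS

print(f"per floor: /{per_floor_prefix} each, {per_floor_total} addresses in total")
print(f"shared /21: {shared_usable} usable, room for {simultaneous_bursts} simultaneous bursts")
```

Under these assumptions the per-floor design consumes 2560 addresses, while the shared /21 covers all regular hosts and still absorbs several meetings at once.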

The original iteration was VRF lite. It was a pain in the backside, and I'm glad we went with MPLS. Much less typing to bring up a new VRF, many fewer routing adjacencies.

There are apparently some Cisco IOS features coming which make VRF lite "a bit like MPLS" in terms of typing and config - IIRC it is basically auto-creation of the per-VRF p2p VLANs and maintenance of the routing adjacencies - but we're on MPLS and using it for other things (TE to load-balance unequal cost paths) now.

Submitted designs for the latter option mentioned here. L3 at the distribution and p2p L3 links up the chain. Currently no need for pci compliance/security zones in the access layer. Planning to do identity based security into the DC(s).

Remark: as a campus network admin, I really like this kind of blog post, with (for me) real-life architecture issues... Keep up the good work!

Wireless reminds me of a campus version of vMotion: a technology that may be easy to deploy in a small network, but as things get larger, it makes designing for scale more difficult. Back in the day (1994), Carnegie Mellon had a dedicated wired network for all the wireless APs. It peered with the rest of the campus network. It let us optimize the wireless network backbone separately from the wired network backbone. This was in the stone age of wireless networks.

http://www.cmu.edu/computing/about/history/wireless/index.html has some interesting history.

When going for scale, I think it best that the network engineers, software developers, and system engineers all have the same goals. We can't dumb down one of the legs of this three-legged stool to make it easier for one of the other legs to function. Too much dependence on "fancy" / proprietary protocols makes one a slave to a vendor's software development teams and update cycles.

IMHO

Probably not appropriate for the typical corporate environment, but if your company culture has lots of people working remotely then you have to have the VPN set up for all your workers anyway, and why duplicate the security decisions from the VPN setup to the access network?

If you go with L2+L3, distribute your VLANs among your access-distribution uplinks to reduce the wasted bandwidth and rely on QoS to deal with congestion when all VLANs bounce to only one side. Btw, you should already have a QoS policy in place to deal with other things besides congestion due to failure (e.g. congestion due to problematic hosts that are doing something unusual).

Also make sure 4096 VLANs are not a problem to you. You might be able to use Q-in-Q to overcome this limitation, but last time I played with it some vendors had issues supporting Q-in-Q and additional features due to the way the packets would flow from asic-to-asic in the dataplane.

But I believe we can make something a little clever with L3 only. So here's a couple of ideas:

1) Provisioning an L3-only solution requires more typing but shouldn't really be a problem. If it is, then it is time for you to put some scripting/automation in your provisioning process. Provisioning customers for a worldwide provider also requires a lot of typing... guess what they usually do.

2) If your L3 access switch supports BGP, please don't use OSPF, avoid it like the plague unless we are talking about your backbone.

3) BGP works pretty well with CE-PE in a MPLS-VPN context so why shouldn't it work great between your L3 access and your L3 distribution (speaking of which... do you really need a distribution layer there?). Maybe BGP is not as reactive as OSPF but you can tweak a couple of things (timers and others) to make it react faster to changing events. Most probably you don't need sub-second convergence anyway (VOIP and Interactive Video can be a challenge).

4) Unless you have roaming users with /32's floating around between different switches you probably are only going to need a dynamic routing protocol to "test" your access-distribution links and make sure you don't blackhole packets. See if there are other ways to validate these links, I believe that there are some vendors that implement alternative ways to signal routing protocols and/or interface status based on connectivity checks.

5) If you have roaming users, and you need per-user VLANs, maybe you have to use something like 802.1X with dynamic VLAN assignment. Maybe you don't need your users to maintain a static IP to track their privileges, in which case your address pools would be static, so one less reason to depend on a dynamic routing protocol.

(1) no L3 licenses

(2) simple configuration

When we looked at the VSS design, my Cisco SE gave us a rare solid piece of advice. He said with VSS if you want to upgrade one, you have to take the whole VSS down. This was 10 months ago and I'm not sure if it's true anymore but that was a headache I didn't want to deal with.

For what it's worth, we're doing an OSPF totally stub area into area 0 at the dist/core layer and letting EIGRP summary routes take care of the campus reachability to/from DCs. 2x10GbE links per access closet; I have more BW in my campus than in my DCs!

Dot1x is an additional helper in wired environments... but once you can assign the IP without end-user interaction, you can use "dumber" access switches and route and secure at your own gusto.

We are moving into the deployment phase of a large school board MAN rework, where we are using HP Comware-based equipment (7500s) to virtualize their entire campus. This gear supports MPLS VPN, the various L2 MPLS technologies and even includes VPLS. The one thing you find out when working with Comware is that most of the gear seems to have a great deal of service provider catering functionality put in (likely has to do with China Telecom being a major customer). We've now built this really cool setup and the customer hadn't even asked for MPLS originally - it just came with the gear so we designed using it. The subinterface/multi-slash-30 alternative really isn't fun to deploy and add onto when you have a new VPN required.

HP does also have the 5800 which supports all of the above. A 5k$ switch with VPLS support is not something you can usually find.

As another poster highlighted, the issue with VSS (or HP IRF) is that ISSU really only is for minor, compatible upgrades. The manufacturers are usually quick to point out the advantages of stacking but hesitant to point out the caveats. Of course if you have maintenance windows allowing you to bring down your whole core, this really isn't that big a deal. Your availability vs performance requirements will dictate whether the pros and cons are really worth it.

http://www.cisco.com/en/US/prod/collateral/iosswrel/ps6537/ps6557/ps6604/whitepaper_c11-638769.html

Dietmar

Honestly, I don't see the configuration being simpler. You either have the complexity at layer 2 or layer 3. No matter what, you need to create loop-free topologies. I'd much rather look in the routing table than have to hop through multiple bridges to find out where a MAC address lives on the network.

I've yet to support a network where some VLAN didn't have to be randomly spanned across closets. You can design it without spanning a VLAN, but that doesn't mean it will never be a "business need". And without a hack or redesign, you're essentially screwed with a routed access layer. It's pretty, it's clean, I love it... but it's less realistic than L2.

VSS just scares me. Shared control plane in core/distro... ack.

Or you can simply consider a vendor that does not promote vendor lock-in and not settle for a gimped version of an important protocol like this. Cisco still manages to not have an LLDP implementation on anything other than switches. And let's be truthful, it's on the switches so they can win switching business from competing phone vendors.

More details on eFSU here: http://www.cisco.com/en/US/docs/switches/lan/catalyst6500/ios/12.2SX/configuration/guide/issu_efsu.html

I like the Layer 3 campus setup because it avoids Layer 2 loops and broadcasts. I implemented VSS with a Layer 3 campus setup on a converged network. It has been stable for more than a year.

We once ran into issues caused by IOS bugs. Now the setup has been stable for more than a year, with 9,500 users in the campus network…

Nope. ISSU is supported, and if all downlinks are EtherChannels, there would be no traffic disruption upon upgrade (well, Cisco claims 200 ms, but I could live with that). Though each chassis would be reloaded, the control plane stays solid.

But... Once I actually tried that with SXI4a, the whole VSS went down. TAC said it's a bug. So in theory VSS is brilliant, but in practice it's too risky.

For the same reasons, complex VLAN setups should be avoided as much as possible, with security and segregation implemented on the hosts via managed firewall and IPsec policies. Software solutions are always more flexible.

For a smaller site, I would use the layer2 with VSS solution.

For a larger site I would run MPLS - but I would collapse the CE into the PE as running VRF lite CEs doesn't scale in a campus environment.

When you have 20+ VRFs, setting up a separate BGP session for each VRF between the PE and the CE becomes very messy.

Therefore my recommendation for larger sites:

Pair of 6500(VSS) as [PE/CE]

Access switches connected via port channels to the collapsed PE/CE.

I have had a look at the smaller HP MPLS switches, but they only support relatively small routing tables so they would probably be too small for most MPLS enabled sites.

I have worked in environments where it's deployed: at a major international airport, at a top law firm and in multiple data centers. It's really versatile. It's also dead easy to manage, barely different from managing one chassis. There are just some very minor differences to keep in mind with VSS deployments.