VM-FEX – not as convoluted as it looks

If you read Cisco’s marketing materials, VM-FEX (the feature probably known as VN-Link before someone went on a FEX-branding spree) seems like a fantastic idea: VMs running in an ESX host are connected directly to virtual physical NICs offered by the Palo adapter, and then through point-to-point virtual links to the upstream switch, where you can deploy all sorts of features that the virtual switch embedded in the ESX host still cannot do. As you might imagine, the reality behind the scenes is more complex.

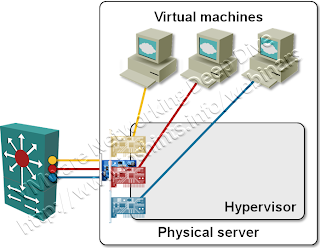

The first picture shows the mental model of VM-FEX architecture I would get after reading high-level whitepapers. According to this mental model, some handwave magic would automatically provision the virtual NIC and the upstream switch every time a new VM is started or vMotioned into an ESX host.

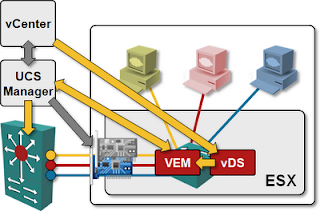

The second picture shows the reality: the control- and management-plane flows that have to take place for VM-FEX to work.

Before you can start deploying VM-FEX, the virtual Ethernet (vEthernet) adapters (on the Palo NIC) used by VM-FEX have to be pre-provisioned by UCS Manager; SR-IOV could be used to make changes in real time, but it’s not supported by vSphere.

You might have to reload your physical server before the changes take effect. However, due to the way UCS Manager allocates PCI resources, the previously created vEthernet/HBA adapters won’t change (your server will continue to work after the reload).

When a new VM NIC has to be activated (due to VM startup or vMotion event), the following events take place:

- Whenever a VM is moved to or started in an ESX host, vCenter changes the state of a port in a vDS port group.

- ESX signals the port change to the vDS kernel module (Virtual Ethernet Module – VEM). VEM thus learns the port group name and the port number of the newly enabled virtual port.

- VEM selects a free vEthernet adapter and establishes a link between the VM’s virtual NIC and the vEthernet adapter.

- VEM propagates the change to UCS Manager.

- UCS Manager configures a virtual port corresponding to the newly activated vEthernet adapter in the upstream switch (Nexus 6100).
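The sequence above can be sketched as a toy model. This is only an illustration of the control flow, not Cisco's implementation: the class names, method names, and the `veth0`-style adapter identifiers are all hypothetical, and the real components talk over interfaces that are not described in this post.

```python
from dataclasses import dataclass, field


@dataclass
class UpstreamSwitch:
    """Hypothetical stand-in for the upstream switch."""
    virtual_ports: dict = field(default_factory=dict)  # vEthernet id -> port group

    def configure_port(self, veth_id, port_group):
        self.virtual_ports[veth_id] = port_group


@dataclass
class UcsManager:
    """Hypothetical stand-in for UCS Manager."""
    switch: UpstreamSwitch

    def preprovision(self, count):
        # vEthernet adapters have to exist before any VM can use them.
        return [f"veth{i}" for i in range(count)]

    def port_activated(self, veth_id, port_group):
        # Last step of the list: configure the matching virtual port upstream.
        self.switch.configure_port(veth_id, port_group)


class Vem:
    """Hypothetical stand-in for the VEM kernel module on one ESX host."""

    def __init__(self, ucsm, veth_ids):
        self.ucsm = ucsm
        self.free = list(veth_ids)
        self.links = {}  # (port group, port number) -> vEthernet id

    def port_enabled(self, port_group, port_number):
        # Called when ESX signals that vCenter enabled a vDS port.
        if not self.free:
            raise RuntimeError("no free pre-provisioned vEthernet adapter")
        veth = self.free.pop(0)                      # select a free adapter
        self.links[(port_group, port_number)] = veth  # link vNIC to adapter
        self.ucsm.port_activated(veth, port_group)    # propagate to UCS Manager
        return veth


switch = UpstreamSwitch()
ucsm = UcsManager(switch)
vem = Vem(ucsm, ucsm.preprovision(2))
veth = vem.port_enabled("web-servers", 17)
print(veth, switch.virtual_ports)
```

Note that the pool of adapters is fixed in advance: once `free` is empty, the model cannot activate another VM NIC, which mirrors the pre-provisioning requirement described earlier.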

Even though both Nexus 1000V and VM-FEX use the VEM kernel module, you don’t need Nexus 1000V to implement VM-FEX. Actually, you have to select one or the other today; you cannot run both in the same host (it would make no sense anyway).

There are a few architectural reasons for the complex architecture used by VM-FEX:

You cannot tie a VM to a physical NIC. The vEthernet NICs created on the Palo NIC appear as regular physical NICs to the operating system (ESX). You cannot tie a VM directly to a physical NIC: VMDirectPath does allow a VM to use physical hardware, but it also disables vMotion for that VM. Therefore, even though VMs use physical NICs, we still need a kernel module (VEM) that acts like a patch panel and shuffles the data between the VM virtual NIC drivers and the physical NICs.

vSphere 5 can support vMotion with VMDirectPath for vEthernet adapters.
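The patch-panel role of VEM described above can be pictured with a small model. It is a sketch under assumptions, not a description of the actual kernel module: `PatchPanel`, `vmotion`, and the adapter and vNIC names are hypothetical. The point is only that the VM keeps its virtual NIC while the physical vEthernet adapter behind it changes from host to host.

```python
class PatchPanel:
    """Hypothetical model of VEM's data-plane role on one ESX host."""

    def __init__(self, host, veth_ids):
        self.host = host
        self.free = list(veth_ids)
        self.patches = {}  # VM vNIC name -> vEthernet adapter on this host

    def patch(self, vnic):
        # Connect a VM's virtual NIC to any free vEthernet adapter.
        if not self.free:
            raise RuntimeError(f"{self.host}: no free vEthernet adapter")
        veth = self.free.pop(0)
        self.patches[vnic] = veth
        return veth

    def unpatch(self, vnic):
        # Return the adapter to the free pool when the VM leaves the host.
        self.free.append(self.patches.pop(vnic))


def vmotion(vnic, source, destination):
    # The vNIC identity moves with the VM; the adapter behind it does not.
    new_veth = destination.patch(vnic)
    source.unpatch(vnic)
    return new_veth


esx1 = PatchPanel("esx1", ["veth0", "veth1"])
esx2 = PatchPanel("esx2", ["veth0", "veth1"])
esx1.patch("vm1-nic0")
moved_to = vmotion("vm1-nic0", esx1, esx2)
print(moved_to, esx1.patches, esx2.patches)
```

Because the VM is never bound to a specific adapter, moving it only requires a new patch on the destination host, which is what a direct VM-to-NIC binding cannot offer.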

ESX cannot create new vEthernet NICs. Although you could create hardware on demand with the SR-IOV technology, neither ESX nor the Palo adapter supports SR-IOV at the moment. The only way to create a new vEthernet adapter on the Palo adapter is thus from the outside (through UCS Manager).

vSphere/ESX host cannot signal to the upstream switch what it needs. What we would need to implement vEthernet integration properly is EVB’s VSI Discovery Protocol (VDP). VDP is not implemented by vSphere, so VM-FEX needs a vDS replacement (VEM) that provides both data-plane pass-through functionality and control-plane communication with the upstream devices.

In the initial implementation of VM-FEX, VEM communicates with UCS Manager. According to Shrijeet Mukherjee from Cisco, the communication will take place directly between VEM and the upstream Nexus 6100 switch in the 2.x UCS software release.

Actually, Cisco had to implement functionality equivalent to both the 802.1Qbh/802.1BR standard (VN-Tag – support for virtual link tagging) and parts of 802.1Qbg (VDP) to get VM-FEX up and running.

Update 2011-09-23: Shrijeet Mukherjee (Director of Engineering, Virtual Interface Card @ Cisco) kindly helped me understand the technical details of the VM-FEX architecture. I updated the post based on that information.

As I understand VM-FEX, VEM bypasses the vSwitch forwarding mechanisms, but not the control/management plane.

The vSwitch (actually vDS) still exists (VEM is hidden inside it from the vCenter perspective), but the packets follow a different path (using logical NICs) than they would otherwise.

1) You can easily build VM-FEX on UCS C-series servers.

2) You can do it without UCSM, which requires Fabric Interconnects (FIs).

3) You can easily vMotion a VM that uses DirectPath I/O with VM-FEX.