Intelligent Resilient Framework (IRF) – Stacking as Usual

When I listened to HP's Intelligent Resilient Framework (IRF) presentation at Tech Field Day 2010 and afterwards read the HP/H3C IRF 2.0 whitepaper, IRF looked like a technology sent straight from Data Center heaven. You could build a single unified fabric with optimal L2 and L3 forwarding that spans the whole data center (I was somewhat skeptical about their multi-DC vision) and behaves like a single managed entity.

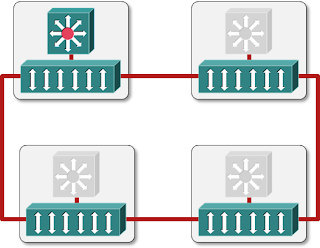

No wonder I started drawing the following highly optimistic diagram when creating materials for the Data Center 3.0 webinar, which includes information on Multi-Chassis Link Aggregation (MLAG) technologies from numerous vendors.

Oversimplified HP IRF design

However, the worm of doubt kept nagging somewhere deep in my subconscious, so I decided to check the configuration guides of various HP switches (kudos to HP for free and unrestricted access to their very good documentation). I selected a core switch (S12508) and an access-layer switch (S5820X-28S) from the HP IRF technology page¹, downloaded their configuration guides, and studied the IRF chapters. What a disappointment:

- Only devices of the same series can form an IRF. How is that different from any other stackable switch vendor?

- Only two core switches can form an IRF. How is that different from Cisco’s VSS or Juniper’s XRE200?

- One device in the IRF is the master; the others are slaves. Same as Cisco’s VSS.

- Numerous stackable switches can form an IRF. Everyone else calls that a stack.

- An IRF partition is detected through proprietary LACP extensions or BFD. Same as Cisco’s VSS.

- After an IRF partition, the losing devices block their ports. The whitepaper is curiously mum about the consequences of an IRF partition, and no wonder: IRF does the same thing as every other vendor, with the losing part of the cluster blocking its ports after the partition.
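The partition behavior described in the last two bullets is the generic split-brain logic that any stack or MLAG cluster needs. The following Python sketch illustrates it under stated assumptions: the tie-break rule (larger partition survives, ties go to the lower master member ID) and every name in it (`Member`, `Partition`, `pick_winner`, `resolve_split`, the port names) are hypothetical, chosen for illustration, and are not HP's documented algorithm.

```python
from dataclasses import dataclass


@dataclass
class Member:
    """One switch in a hypothetical IRF-like fabric."""
    member_id: int
    ports: list[str]
    blocked: bool = False  # True once this member has shut down its data ports


@dataclass
class Partition:
    """One half of a split fabric, as seen after the split is detected."""
    members: list[Member]
    master_id: int  # member ID of the master elected inside this partition


def pick_winner(a: Partition, b: Partition) -> Partition:
    # ASSUMED tie-break, for illustration only: the partition with more
    # members survives; on a tie, the lower master member ID wins.
    return min((a, b), key=lambda p: (-len(p.members), p.master_id))


def resolve_split(a: Partition, b: Partition) -> tuple[Partition, Partition]:
    """Keep the winning partition active and block every port of the loser."""
    winner = pick_winner(a, b)
    loser = b if winner is a else a
    for member in loser.members:
        member.blocked = True  # stacking as usual: the losing side goes dark
    return winner, loser


if __name__ == "__main__":
    left = Partition([Member(1, ["ge1/0/1"]), Member(2, ["ge2/0/1"])], master_id=1)
    right = Partition([Member(3, ["ge3/0/1"])], master_id=3)
    winner, loser = resolve_split(left, right)
    print("surviving master:", winner.master_id)
    print("blocked members:", [m.member_id for m in loser.members if m.blocked])
```

Running it prints `surviving master: 1` and `blocked members: [3]`: the two-member partition keeps forwarding while the single-member partition blocks its ports. The real election and blocking rules are platform-specific, so check the IRF chapter of the relevant configuration guide before relying on any particular tie-break.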

There might be something novel in the IRF technology that truly sets it apart from other vendors’ solutions, but I haven’t found it. For the moment, IRF looks like stacking-as-usual to me; if I got it wrong, please chime in with your comments.

More information

Numerous MLAG technologies, including Cisco’s VSS and vPC, Juniper’s XRE200, and HP’s IRF, are described in the Data Center 3.0 for Networking Engineers webinar.

¹ I had to remove the links to HP web sites when cleaning up this article in May 2022: HP can’t be bothered to have valid TLS certificates on their web sites. If you really want to find those links, search through the commit history of the ipSpace.net blog repository.

Comments

There are a few small things that do differentiate IRF.

1) IRF in a two-chassis "stack" shares state across all four management modules, unlike the RPR-WARM mode in the VSS solution. Cisco's approach is to reboot the chassis if the in-chassis master fails; HP just drops to half speed (which is still usually faster than Cisco's full speed!).

2) IRF does require specific hardware within the same family, but that's about where it ends. There is no restriction to a particular series of line cards (such as 67xx only), and no situation where line cards are not given power. We can even have PoE AND IRF in the same chassis.

3) Consistency across the portfolio. Operationally, this means consistent behaviour at each tier of the network, compared to the various options (StackWise, VSS, vPC, FabricPath, etc.) that each cover only some of IRF's functionality.

4) IRF is hardened in the field: it is based on 3Com's XRN from the late '90s. Without letting the cat out of the bag, this means we have already solved a lot of the problems that come with this type of technology.

Hope this helps,

Chris

Need to check #1 though - my understanding is that the RSP in the second chassis takes over immediately ... but you're right, the secondary RSP (if present) needs to be reloaded.

You're right on that. Sorry if I wasn't clear: from my reading, the inter-chassis failover takes about 400 ms (compared to approximately 50 ms with IRF). My point was that the in-chassis failover is abysmal. Reboot the whole chassis? In this scenario there's also no guidance on how long the VSS pair will take to reconverge, because the time a Cat6k needs to reboot varies depending on which modules are in the box.

P.S. I am currently testing an enterprise-level network; this would be a good test to try.

You are right that IRF is functionally similar to VSS. For me, the key difference is that VSS is restricted to a single platform (65xx), while the same distributed forwarding technology (each IRF member performs full local forwarding without consulting the master) is available as IRF on basically 'all' switches in the H3C portfolio, from low-end to high-end.

This means, for instance, that a stack of 5800 top-of-rack switches has distributed L2, L3 IPv4, L3 IPv6, and MPLS/VPLS forwarding; traffic never needs to pass through or consult the master for the actual forwarding.

I don't know if this is useful, but I heard that IRF support for four chassis switches will be released in the near future.

I read that IRF runs in active-active mode and performs much better during failover than VSS. Its latency is also better than that of VSS or vPC.

I'm afraid you didn't understand the guides you read very well. First, obviously, no vendor can build a stack with other vendors' switches, and neither can HP. Second, there is no master/slave dependency: if the "master" switch fails, the IRF array stays up. Third, even if IRF and Cisco VSS were the same thing, HP IRF is available on switches starting at $2,000, while Cisco requires Nexus models or the Catalyst 6500. How much do those switches cost?