Category: workshop

IPv6CP+DHCPv6+SLAAC+RA = IPCP

Last week I got an interesting tweet: “Hey @ioshints can you tell me what is the radius parameter to send ipv6 dns servers at pppoe negotiation?” It turned out that the writer wanted to propagate IPv6 DNS server address with IPv6CP, which doesn’t work. Contrary to IPCP, IPv6CP provides just the bare acknowledgement that the two nodes are willing to use IPv6. All other parameters have to be negotiated with DHCPv6 or ICMPv6 (RA/SLAAC).

The following table compares the capabilities of IPCP with those offered by a combination of DHCPv6, SLAAC and RA (IPv6CP is totally useless as a host parameter negotiation tool):

Traffic Trombone (what it is and how you get them)

Every so often I get a question “what exactly is a traffic trombone/tromboning”. Here’s my attempt at a semi-formal definition.

Traffic trombone is a term (probably invented by Greg Ferro) that colorfully describes inter-VLAN traffic flows in a network with stretched (usually overlapping) L2 domains.

In a traditional L2/L3 data center architecture with small L2 domains in the access layer and L3 forwarding across the core network, the inter-subnet traffic flows were close to optimal: a host would send a packet toward the first-hop (ingress) router (across a bridged L2 subnet), the ingress router would forward the packet across an optimal path toward the egress router, and the egress router would deliver the packet (yet again, across a bridged L2 subnet) to the destination host.

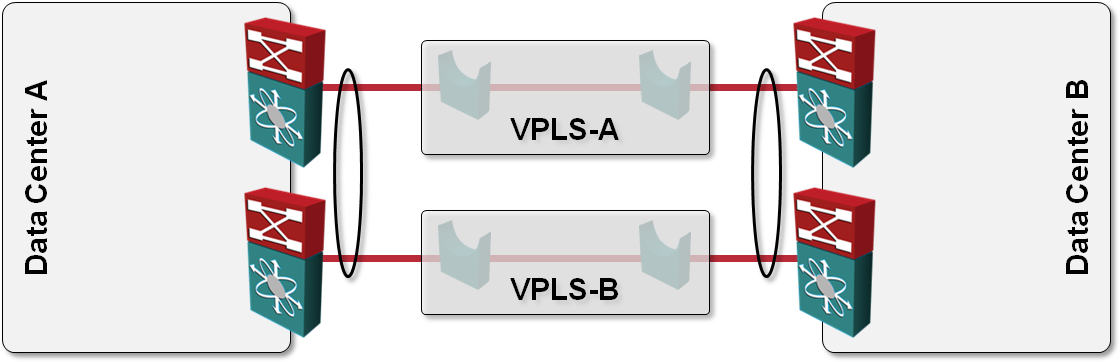

L2 DCI with MLAG over VPLS transport?

One of the answers I got to my “How would you use VPLS transport in L2 DCI” question was also “Can’t you just order two VPLS services, use them as P2P links and bundle the two links into a multi-chassis link aggregation group (MLAG)?” like this:

How would you use VPLS transport in L2 DCI?

One of the questions answered in my Data Center Interconnect webinar is: “what options do I have to build a layer-2 interconnect with transport technology X”, with X ∈ {dark-fiber, DWDM, SONET, pseudowire, VPLS, MPLS/VPN, IP}. VPLS is one of the tougher nuts to crack; it provides a switched LAN emulation, usually with no end-to-end spanning tree (which you wouldn’t want to have anyway).

Imagine the following simple scenario where we want to establish redundant connectivity between two data centers and the only transport technology we can get is VPLS (or some other Carrier Ethernet LAN service):

VEPA or vCloud Network Isolation?

If I could design my dream data center with total disregard to today’s limitations (and technologies from an alternate universe), it would have optimal connectivity between any two endpoints (real or virtual), no limits on VM mobility and on-demand L4-7 services insertion (be it firewalling, load balancing or something else) ... all of that implemented on truly scalable trombone-free networking infrastructure (in a dream world I don’t care whether it’s called routing or bridging).

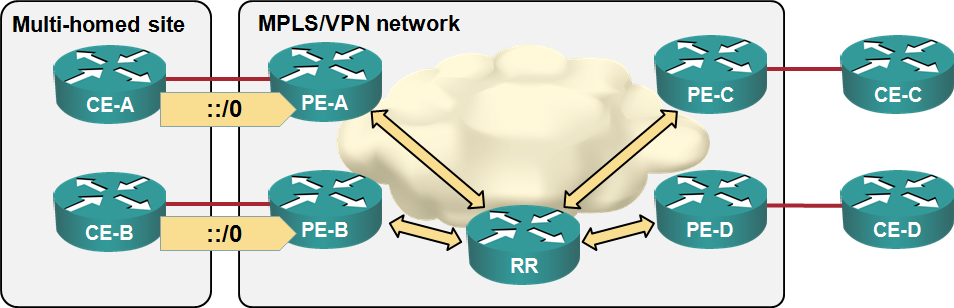

Load sharing in MPLS/VPN networks with route reflectors

Some of the e-mails and comments I received after writing the “Changing VPNv4 route attributes” post illustrated common MPLS/VPN misconceptions, so it’s worth addressing them in a series of posts. Let’s start with the simplest scenario: load sharing toward a multi-homed customer site. We’ll use a very simple MPLS/VPN network with three customer sites, four CE-routers, four PE-routers and a route reflector:

End-to-End QoS marking in MPLS/VPN-over-DMVPN networks

I got a great question in one of my Enterprise MPLS/VPN Deployment webinars when I was describing how you could run MPLS/VPN across a DMVPN cloud:

That sounds great, but how does end-to-end QoS work when you run IP-over-MPLS-over-GRE-over-IPSec-over-IP?

My initial off-the-cuff answer was:

Well, when the IP packet arriving through a VRF interface gets its MPLS label, the IP precedence bits from the IP packet are copied into the MPLS EXP (now TC) bits. As for what happens when the MPLS packet gets encapsulated in a GRE packet and when the GRE packet is encrypted… I have no clue. I need to test it.
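The first half of that answer (IP precedence copied into the MPLS EXP/TC bits at label imposition) can be illustrated with a minimal Python sketch. It assumes the standard 32-bit label stack entry layout (20-bit label, 3-bit TC, 1-bit bottom-of-stack flag, 8-bit TTL); the function names and the label value 100 are hypothetical and purely illustrative. It models a single label only and says nothing about what happens during GRE encapsulation or encryption.

```python
def ip_precedence(tos: int) -> int:
    """Return the 3-bit IP precedence: the top three bits of the ToS byte."""
    return (tos >> 5) & 0x7


def mpls_label_entry(label: int, tc: int, bottom: bool, ttl: int) -> int:
    """Pack one 32-bit label stack entry: label(20) | TC(3) | S(1) | TTL(8)."""
    if not 0 <= label < 2 ** 20:
        raise ValueError("MPLS label must fit in 20 bits")
    return (label << 12) | ((tc & 0x7) << 9) | (int(bottom) << 8) | (ttl & 0xFF)


def impose_label(tos: int, label: int, ttl: int = 255) -> int:
    """Build a label entry whose TC bits are copied from the IP precedence."""
    return mpls_label_entry(label, ip_precedence(tos), True, ttl)


def tc_bits(entry: int) -> int:
    """Extract the 3-bit TC (formerly EXP) field from a label stack entry."""
    return (entry >> 9) & 0x7


if __name__ == "__main__":
    tos = 0xB8                        # DSCP EF (46), i.e. IP precedence 5
    entry = impose_label(tos, label=100)  # label 100 is an arbitrary example
    print(f"precedence={ip_precedence(tos)} tc={tc_bits(entry)}")
```

Only the three precedence bits survive the copy, so DSCP values that share the same top three bits end up with the same TC value.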

Open FCoE – Software implementation of the camel jetpack

Intel announced its Open FCoE (software implementation of FCoE stack on top of Intel’s 10 Gigabit Ethernet adapters) using the cloudy bullshit bingo including simplifying the Data Center, Free New Technology, Cloud Vision and Green Computing (ok, they used Environmental impact) and lots of positive supporting quotes. The only thing missing was an enthusiastic Gartner quote (or maybe they were too expensive?).

VMware vSwitch does not support LACP

This is very old news to any seasoned system or network administrator dealing with VMware/vSphere: the vSwitch and vNetwork Distributed Switch (vDS) do not support Link Aggregation Control Protocol (LACP). Multiple uplinks from the same physical server cannot be bundled into a Link Aggregation Group (LAG, also known as port channel) unless you configure a static port channel on the adjacent switch’s ports.

When you use the default (per-VM) load balancing mechanism offered by vSwitch, the drawbacks caused by lack of LACP support are usually negligible, so most engineers are not even aware of what’s (not) going on behind the scenes.

MPLS/VPN over mGRE strikes again

More than five years after the MPLS/VPN-in-mGRE encapsulation was standardized (add a few more years for the work-in-progress and IETF draft stages), it finally debuted in a mainstream-wannabe IOS release running on ISR routers (15.1(2)T), making it usable for the enterprise WAN designers, who are probably its best target audience.

I was writing about the two conflicting MPLS/VPN over mGRE implementations a while ago and got the impression the Service Providers aren’t too excited about this option. No wonder – most of them use full-blown MPLS backbones, so they have no need for GRE tunnels.

Internet-related links (2010-12-19)

GigaOm published two interesting articles by Joe Weinman: in the first one, he describes why pay-per-use residential broadband Internet is probably inevitable; in the second one, he predicts changes in user behavior if the service providers decide to implement it. I would also suggest you take time and read his in-depth Market for Melons article.

Obviously, collecting money costs money and the pay-per-use model is no exception (not to mention that most people would pay less), so the service providers prefer usage caps. There are numerous ways to implement usage caps, but implementing a usage cap as an acceptable use policy and calling exceeding the cap a policy violation is not the way to do it. Some people are truly trying to alienate the users.

HP Virtual Connect: every vendor has its own dinosaurs

I was listening to the HP Virtual Connect (VC) PPP podcast recently and got the impression that HP VC is a weirdly convoluted product. I started wondering what exactly they were thinking when they were designing it ... and had the epiphany when Ken Henault took a step back and explained the history leading to the current complexity (listen to the Packet Pushers podcast to get the whole story).

DHCPv6 IA_PD relaying works with 12.2SRE2

Last week I ran numerous lab tests while preparing router configurations for the Building IPv6 Service Provider Core webinar. One of the fantastic test results: DHCPv6 relaying works correctly on a 7200 running 12.2(33)SRE2, even when the client requests IA_PD option.

Chinese BGP incident: was it a traffic hijack?

You’re probably familiar with the April fat fingers incident in which Chinanet (AS 23724) originated ~37,000 prefixes for about 15 minutes. The incident made it into the annual report of US Congress’ U.S.-China Economic and Security Review Commission (page 243 of this PDF) and the media was more than happy to pick it up (Andree Toonk has a whole list of links in his blog post). We might never know whether the misleading statements in the report were intentional or just a result of clueless technical advisors, but the facts are far away from what they claim:

Internet peering disputes: follow the money

You’ve probably heard about the recent peering dispute between Level-3 and Comcast ... and might have enjoyed the frenzy with which the blogging pundits have followed the false net neutrality scent left by Level-3 spin doctors.

Facts first: Level-3 is trying to dump a huge amount of data into Comcast’s network for free.