Detecting Byzantine Link Failures with SNMP

One of my readers has to deal with a crappy Network Termination Equipment (NTE) that does not drop local link carrier when the remote link fails. Here’s the original ASCII art describing the topology:

PE---------------NTE--FW---NMS

<--------IP-------->

He’d like to use interface SNMP counters on the firewall to detect the PE-NTE link failure. He’s using a static default route toward PE on FW, and tried to detect the link failure with the ifOutDiscards counter.
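Here's a minimal sketch of what watching that counter could look like once you have the raw values. It only covers the arithmetic: ifOutDiscards is a Counter32 in IF-MIB, so the difference between two polls has to allow for a wrap. Fetching the values over SNMP is left out on purpose, and the `Sample` class, the function names, and the threshold are hypothetical. Whether the counter increases at all when the PE-NTE link fails depends on the firewall platform, so treat this as an illustration, not a proven detection method.

```python
from dataclasses import dataclass

# ifOutDiscards is a Counter32 in IF-MIB, so it wraps at 2**32
COUNTER32_MODULUS = 2**32


@dataclass
class Sample:
    """One poll of the interface counter (hypothetical helper type)."""
    timestamp: float   # seconds since an arbitrary epoch
    out_discards: int  # raw ifOutDiscards value returned by the poll


def counter_delta(previous: int, current: int,
                  modulus: int = COUNTER32_MODULUS) -> int:
    """Increase between two readings, allowing for a single counter wrap."""
    return (current - previous) % modulus


def discard_rate(older: Sample, newer: Sample) -> float:
    """Discards per second between two polls."""
    elapsed = newer.timestamp - older.timestamp
    if elapsed <= 0:
        raise ValueError("samples must be in chronological order")
    return counter_delta(older.out_discards, newer.out_discards) / elapsed


def link_suspect(older: Sample, newer: Sample,
                 threshold: float = 1.0) -> bool:
    """Flag the link when the discard rate reaches an assumed threshold."""
    return discard_rate(older, newer) >= threshold
```

You would feed it two consecutive polls, for example `link_suspect(Sample(0, 100), Sample(10, 150))`, and tune the threshold to whatever your firewall does under normal load.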

netlab Multi-Platform Custom Configuration Templates

In the Building a BGP Anycast Lab blog post I described how you could use custom configuration templates to extend the netlab functionality.

That example used Cisco IOS… but what if you want to test the same functionality on multiple platforms? netlab provides a nice trick: the custom configuration template could point to a directory with platform-specific templates. Let me show you how that works…
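The idea can be sketched in a few lines of Python. This is not netlab's code, just an illustration of the lookup: if the template name points to a directory, pick the file named after the device platform, otherwise fall back to a single template file. The function name, the `.j2` naming, and the single search directory are assumptions made for the sketch.

```python
from pathlib import Path


def resolve_template(search_dir: Path, template: str, device: str) -> Path:
    """Pick the template file for a custom configuration template.

    Simplified illustration (not netlab's actual search logic):
    a directory means "one template per platform", anything else
    means "one template for all platforms".
    """
    candidate = search_dir / template
    if candidate.is_dir():
        # Assumed layout: <template>/<device>.j2, e.g. anycast/eos.j2
        return candidate / f"{device}.j2"
    # Assumed layout: <template>.j2 shared by all platforms
    return search_dir / f"{template}.j2"
```

With a hypothetical `anycast` directory containing `ios.j2` and `eos.j2`, `resolve_template(Path("."), "anycast", "eos")` would return `anycast/eos.j2`.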

OMG: Hop-by-Hop Path MTU Discovery

Straight from the “Bad Ideas Never Die” (see also RFC 1925 Rule 11) department: Geoff Huston described a proposal to use hop-by-hop IPv6 extension headers to implement Path MTU Discovery. In his words:

It is a rare situation when you can create an outcome from two somewhat broken technologies where the outcome is not also broken.

IETF should put rules in place similar to the ones used by the patent office (Thou Shalt Not Patent Perpetual Motion Machine), but unfortunately we’re way past that point. Back to Geoff:

It appears that the IETF has decided that volume is far easier to achieve than quality. These days, what the IETF is generating as RFCs is pretty much what the IETF accused the OSI folk of producing back then: Nothing more than voluminous paperware about vapourware!

Video: Understanding Kubernetes Pods

Pods are a basic building block of any Kubernetes-based deployment… but what exactly are they and how are they related to Kubernetes networking? Stuart Charlton unraveled that mystery in the Understanding Pods video (part of the Kubernetes Networking Deep Dive webinar).

New in netlab: More MPLS and VRFs, Dell OS10, Cumulus 5.0 on Containerlab

I already mentioned the netsim-tools Easter Egg; here are the other cool features shipping in release 1.2.1:

- Dell OS10 on libvirt (including BGP, OSPFv2, OSPFv3 and VRF Lite) by Stefano Sasso

- VRFs, MPLS, and MPLS/VPN support on Mikrotik RouterOS and VyOS by Stefano Sasso

- Containerlab support for Cumulus 5.0 with NVUE including Simple VRF-Lite by Julien Dhaille

Network Digital Twins Work Best in PowerPoint

A friend of mine sent me the following question a few months ago:

I thought you might know the best way (currently) to create a digital clone of parts of a production network? The objective is to test changes against a test network as part of a CI/CD process. Ideally, there would be an automation that could replicate selected parts of a production network in a test network.

TL&DR: Sounds great, but you might be solving the wrong problem.

… updated on Thursday, April 27, 2023 09:00 UTC

Everything Is Better with a GUI (even netlab)

Some people think that everything is better with Bluetooth (or maybe it’s AI these days). They’re clearly wrong; according to the ancient wisdom of product managers working for networking vendors, everything is better with a GUI.

Now imagine adding a network topology visualizer and GUI-based device access with in-browser SSH to an intent-based infrastructure-as-code virtual network function labbing tool. How’s that for a Bullshit Bingo winner?

Worth Reading: New Linux Command Line Tools

Julia Evans published a long list of new(ish) Linux command line tools. For example, did you ever want to have a directory listing in nicely formatted JSON? How about ls -l | jc --ls | jq .?

Quite a few of these tools also work on Mac and can be installed with HomeBrew. Some are written in a scripting language, so you could (in theory) also use them on Windows (without WSL).

Video: Challenges of Managed SD-WAN Services

When I published a link to the Is MPLS/VPN Too Complex? blog post to LinkedIn, someone asked whether I’m skeptical about service provider SD-WAN services due to lack of skills, and Kristijan Taskovski quickly identified the root cause in his reply:

The argument of a lack of skill is only one that is perpetuated by businesses. It’s not perpetuated by engineers. People that are trained, honed, and knowledgeable are expensive. Expense is the number one enemy for a business.

That’s exactly why I think most managed SD-WAN services will be a dismal failure.

Telephone System Is a Bad Example of Hierarchical Addresses

Networking engineers proposing a strict hierarchical addressing scheme as a solution to global BGP table explosion often cite the international telephone system numbering plan (E.164) as a perfect example of an addressing plan that uses hierarchy to minimize routing table sizes. Even more, widespread mobile roaming and local number portability indicate that we could solve IP mobility and multihoming if only insert-your-favorite-opinion-here.

AWS Automatic EC2 Instance Recovery

On March 30th 2022, AWS announced automatic recovery of EC2 instances. Does that mean that AWS got feature-parity with VMware High Availability, or that VMware got it right from the very start? No and No.

Automatic Instance Recovery Is Not High Availability

Reading the AWS documentation (as opposed to the feature announcement) quickly reveals a caveat or two. The automatic recovery is performed if an instance becomes impaired because of an underlying hardware failure or a problem that requires AWS involvement to repair.

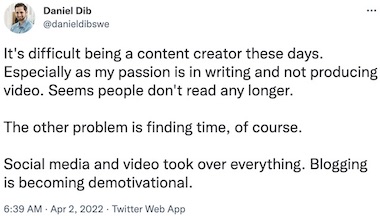

Keep Blogging, Some of Us Still Read

I stumbled upon a sad tweet a few days ago…

… and not surprisingly, a lot of people chimed in saying “don’t give up, we still prefer reading”. Unfortunately, it does seem like the amount of worthy content is constantly decreasing, and way too many quality blogs disappeared over the years, so I’ll try to lift the veil of depression a bit ;)

Creating VRF Lite Labs With netlab

I always found VRF lab setups a chore. On top of the usual IPAM tasks you have to create VRFs, assign route targets and route distinguishers, do that on every PE-router in your lab… before you can start working on interesting things.

I tried to remove as much friction as I could with the netlab VRF configuration module – let me walk you through a few simple examples which will also serve to illustrate the VRF configuration differences between Cisco IOS and Arista EOS.

Worth Reading: Full-Stack Network Automation

Lívio Zanol Puppim published a series of blog posts describing a full-stack network automation solution, including GitOps with GitLab, handling secrets with HashiCorp Vault, using Ansible and AWX to run automation scripts, continuous integration with GitLab CI Runner, and topped it off with a REST API and React-based user interface.

You might not want to use the exact same components, but it’s probably worthwhile going through his solution and exploring the source code. He’s also looking for any comments or feedback you might have on how to improve what he did.

Worth Reading: The AI Illusion

Russ White’s Weekend Reads are full of gems, including a recent pointer to the AI Illusion – State-of-the-Art Chatbots Aren’t What They Seem article. It starts with “Artificial intelligence is an oxymoron. Despite all the incredible things computers can do, they are still not intelligent in any meaningful sense of the word.” and it only gets better.

While the article focuses on natural language processing (GPT-3 model), I see no reason why we should expect better performance from AI in networking (see also: AI/ML in Networking – The Good, the Bad, and the Ugly).