Unintended Consequences of IPv6 SLAAC

One of my friends is running a large IPv6 network and has already experienced a shortage of IPv6 neighbor cache entries on some of his switches. Digging deeper into the root causes, he discovered:

In my larger environments, I see significant neighbor table cache entries, especially on network segments with hosts that make many long-term connections. These hosts have 10 to 20 addresses that maintain state over days or weeks to accomplish their processes.
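To get a feeling for the numbers, here is a back-of-the-envelope sketch of how many neighbor cache entries a first-hop switch might need on a single segment. The host count is made up for illustration; only the 10-to-20-addresses-per-host range comes from the quote above, and the function name is hypothetical.

```python
def neighbor_entries(hosts: int, addresses_per_host: int) -> int:
    """Rough estimate: one neighbor cache entry per active address on the segment."""
    return hosts * addresses_per_host


# Hypothetical segment with 200 hosts (assumption, not from the original text)
HOSTS = 200

for addresses in (1, 10, 20):
    # 1 address per host is the familiar one-entry-per-host baseline
    print(f"{addresses:>2} addresses/host -> {neighbor_entries(HOSTS, addresses)} entries")
```

The estimate ignores entry aging and any additional per-host entries, so treat it as a lower bound on the multiplication effect, not as a sizing tool.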

What’s going on? A perfect storm of numerous unrelated annoyances:

Explore: Why No IPv6? (IPv6 SaaS)

Lasse Haugen had enough of the never-ending “we can’t possibly deploy IPv6” excuses and decided to start the IPv6 Shame-as-a-Service website, documenting top websites that still don’t offer IPv6 connectivity.

His list includes well-known entries like twitter.com, azure.com, and github.com plus a few unexpected ones. I find cloudflare.net not having an AAAA DNS record truly hilarious. Someone within the company that flawlessly provided my website with IPv6 connectivity for years obviously still has some reservations about their own dogfood ;)
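If you want to run a similar check yourself, a minimal sketch could look like the one below. It relies on the system resolver through Python's `socket.getaddrinfo`, so the answer depends on your local DNS setup; the function name and the injectable `resolver` parameter are my own additions, and this is not how the Shame-as-a-Service site does its checks.

```python
import socket


def has_ipv6_address(hostname, resolver=socket.getaddrinfo):
    """Return True if the name resolves to at least one IPv6 address.

    `resolver` defaults to socket.getaddrinfo; it is a parameter only so the
    function can be exercised without network access.
    """
    try:
        results = resolver(
            hostname, 443, family=socket.AF_INET6, type=socket.SOCK_STREAM
        )
    except socket.gaierror:
        # The resolver found no usable IPv6 answer (or the lookup failed)
        return False
    return len(results) > 0
```

Calling `has_ipv6_address("github.com")` performs a live lookup; a `False` result can also mean the lookup itself failed, so double-check surprising answers with a dedicated DNS tool.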

LISP vs EVPN: Mobility in Campus Networks

I decided not to get involved in the EVPN-versus-LISP debates anymore; I’d written everything I had to say about LISP. However, I still get annoyed when experienced networking engineers fall for marketing gimmicks disguised as technical arguments. Here’s a recent one:

Stateful Firewall Cluster High Availability Theater

Dmitry Perets wrote an excellent description of how typical firewall cluster solutions implement control-plane high availability, in particular, the routing protocol Graceful Restart feature (slightly edited):

Most of the HA clustering solutions for stateful firewalls that I know implement a single-brain model, where the entire cluster is seen by the outside network as a single node. The node that is currently primary runs the control plane (hence, I call it single-brain). Sessions and the forwarding plane are synchronized between the nodes.

SR/MPLS Security Framework

A long-time friend sent me this question:

I would like your advice or a reference to a security framework I must consider when building a green field backbone in SR/MPLS.

Before going into the details, keep in mind that the core SR/MPLS functionality is not much different from traditional MPLS:

netlab 1.8.1: VRF OSPFv3, Integration Tests

netlab release 1.8.1 added a few interesting features, including:

- OSPFv3 in VRFs, implemented on Arista EOS, Cisco IOS, Cisco IOS-XE, FRR, and Junos (vMX, vPTX, vSRX).

- EBGP sessions over IPv4 unnumbered and IPv6 LLA interfaces on Arista EOS.

- Cisco IOS XRd container support.

- The “retry tests until the timeout” functionality in netlab validate.

This time, most of the work was done behind the scenes.

Worth Reading: Cybersecurity Is Broken

Another cybersecurity rant worth reading: cybersecurity is broken due to lack of consequences.

Bonus point: pointer to RFC 602 written in December 1973.

Why Are We Using EVPN Instead of SPB or TRILL?

Dan left an interesting comment on one of my previous blog posts:

It strikes me that the entire industry lost out when we didn’t do SPB or TRILL. Specifically, I like how Avaya did SPB.

Oh, we did TRILL. Three vendors did it in different proprietary ways, but I’m digressing.

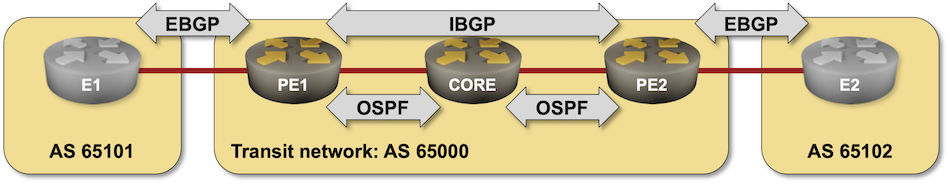

BGP Challenge: Build BGP-Free MPLS Core Network

Here’s another challenge for BGP aficionados: build an MPLS-based transit network without BGP running on core routers.

That should be an easy task if you configured MPLS in the past, so try to spice it up a bit:

- Use SR/MPLS instead of LDP.

- Do it on a platform you’re not familiar with (hint: Arista vEOS is a bit different from Cisco IOS).

- Try to get it running on FRR containers.

EVPN Designs: VXLAN Leaf-and-Spine Fabric

In this series of blog posts, we’ll explore numerous routing protocol designs that can be used to implement EVPN-with-VXLAN L2VPNs in a leaf-and-spine data center fabric. Every design will come with a companion netlab topology you can use to create a lab and explore the behavior of leaf- and spine switches.

Our leaf-and-spine fabric will have four leaves and two spines (but feel free to adjust the lab topology fabric parameters to build larger fabrics). The fabric will provide layer-2 connectivity to orange and blue VLANs. Two hosts will be connected to each VLAN to check end-to-end connectivity.
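As a quick sanity check of the fabric size, the sketch below enumerates the leaf-to-spine links of such a fabric, assuming every leaf connects to every spine. The node names (`leaf1`, `spine1`, ...) and the function are hypothetical and have nothing to do with the actual netlab topology files.

```python
from itertools import product


def fabric_links(leaves: int = 4, spines: int = 2) -> list[tuple[str, str]]:
    """List leaf-to-spine links, assuming each leaf connects to each spine."""
    leaf_names = [f"leaf{i}" for i in range(1, leaves + 1)]
    spine_names = [f"spine{i}" for i in range(1, spines + 1)]
    return list(product(leaf_names, spine_names))


links = fabric_links()
print(len(links))  # 4 leaves x 2 spines = 8 links
for leaf, spine in links:
    print(f"{leaf} <-> {spine}")
```

Changing the two parameters shows how quickly the link count grows when you build a larger fabric.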

Using wemulate with netlab

An RSS hiccup brought an old blog post from Urs Baumann into my RSS reader. I’m always telling networking engineers that it’s essential to set up realistic WAN environments when testing distributed software, and wemulate (a nice tc front-end) seemed like a perfect match. Even better, it runs in a container – an ideal component for a netlab-generated virtual WAN network.

wemulate acts as a bump in the wire; it uses Linux bridges to connect two container interfaces. We’ll use it to introduce jitter into an IP subnet:

    ┌──┐   ┌────────┐   ┌──┐
    │h1├───┤wemulate├───┤h2│
    └──┘   └────────┘   └──┘
    ◄──────────────────────►
        192.168.33.0/24

Repost: EBGP-Mostly Service Provider Network

Daryll Swer left a long comment describing how he designed a Service Provider network running in numerous private autonomous systems. While I might not agree with everything he wrote, it’s an interesting idea and conceptually pretty similar to what we did 25 years ago (IBGP without IGP, running across physical interfaces, with every router being a route-reflector client of every other router), or how some very large networks were using BGP confederations.

Just remember (as someone from Cisco TAC told me in those days) that “you might be the only one in the world doing it and might hit bugs no one has seen before.”

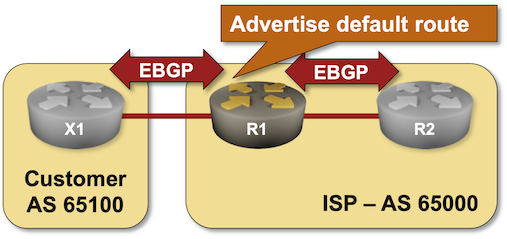

BGP Labs: Advertise the Default Route

If you’re an Internet Service Provider running BGP with your customers, you might not want to send them the whole Internet routing table. Sending the regional prefixes and the default route is usually good enough.

FRRouting Claims IBGP Loopbacks Are Inaccessible

Last week, I explained the differences between FRRouting and more traditional networking operating systems in scenarios where OSPF and IBGP advertise the same prefix:

- Traditional networking operating systems enter only the OSPF route into the IP routing table.

- FRRouting enters OSPF and IBGP routes into the IP routing table.

- On all platforms I’ve tested, only the OSPF route gets into the forwarding table.
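The three observations above can be illustrated with a toy model of the routing and forwarding tables. It assumes the commonly used default administrative distances (110 for OSPF, 200 for IBGP) and a single flag describing whether the routing table keeps every candidate route; the data structures and names are invented for this sketch and do not reflect how any of the platforms is implemented.

```python
from dataclasses import dataclass

# Commonly used default administrative distances (assumption for this sketch)
ADMIN_DISTANCE = {"ospf": 110, "ibgp": 200}


@dataclass(frozen=True)
class Route:
    prefix: str
    protocol: str
    next_hop: str


def build_tables(routes, keep_all_in_rib):
    """Return (routing table, forwarding table) for the given candidate routes.

    keep_all_in_rib=False keeps only the lowest-distance route per prefix in
    the routing table; keep_all_in_rib=True keeps every candidate. The
    forwarding table always gets the lowest-distance route.
    """
    by_prefix = {}
    for route in routes:
        by_prefix.setdefault(route.prefix, []).append(route)

    rib, fib = {}, {}
    for prefix, candidates in by_prefix.items():
        winner = min(candidates, key=lambda r: ADMIN_DISTANCE[r.protocol])
        rib[prefix] = list(candidates) if keep_all_in_rib else [winner]
        fib[prefix] = winner
    return rib, fib


# Hypothetical loopback prefix advertised in both OSPF and IBGP
routes = [
    Route("10.0.0.1/32", "ospf", "192.0.2.1"),
    Route("10.0.0.1/32", "ibgp", "10.0.0.1"),
]

for keep_all in (False, True):
    rib, fib = build_tables(routes, keep_all)
    print(keep_all,
          [r.protocol for r in rib["10.0.0.1/32"]],
          fib["10.0.0.1/32"].protocol)
# False ['ospf'] ospf
# True ['ospf', 'ibgp'] ospf
```

In both cases the forwarding decision is identical; the only difference is what the routing table reports.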

One could conclude that it’s perfectly safe to advertise the same prefixes in OSPF and IBGP. The OSPF routes will be used within the autonomous system, and the IBGP routes will be propagated over EBGP to adjacent networks. Well, one would be surprised 🤦‍♂️

OSI Layers in Routing Protocols

Now and then, someone rediscovers that IS-IS does not run on top of CLNP or IP and claims that, therefore, it must be a layer-2 protocol. Even vendors’ documentation is not immune.

Interestingly, most routing protocols span the whole seven layers of the OSI stack, with some layers implemented internally and others offloaded to other standardized protocols.