OSPF and Connected Networks: To Redistribute or Not?

A few days ago, I was discussing a data center design with a seasoned network architect. During the MPLS discussions, he made an offhand remark: “There are still some switches running OSPF and using network 0.0.0.0 and redistribute connected.” My first thought was, “This can’t be good,” but I had no idea how bad it was until I ran a lab test.

The generic dilemma along the lines of “should I make connected interfaces part of my OSPF process (and make them passive) or should I redistribute them into OSPF” has no clear-cut answer (apart from the obvious “it depends”) ... and Google will quickly find you tons of lengthy discussions.
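To see why a catch-all network statement deserves a second look, here is a minimal Python sketch of how a wildcard-mask match selects interfaces. It assumes the usual semantics (bits set in the wildcard are ignored) and assumes that “network 0.0.0.0” was configured with an all-ones wildcard, which the remark above does not actually say. The function name and sample addresses are hypothetical.

```python
import ipaddress


def network_statement_matches(interface_ip: str, address: str, wildcard: str) -> bool:
    """Return True if interface_ip matches address, ignoring wildcard bits."""
    ip = int(ipaddress.IPv4Address(interface_ip))
    addr = int(ipaddress.IPv4Address(address))
    # Bits set to 1 in the wildcard are "don't care"; compare only the rest.
    care = ~int(ipaddress.IPv4Address(wildcard)) & 0xFFFFFFFF
    return (ip & care) == (addr & care)


if __name__ == "__main__":
    # Assumed catch-all form: every interface address matches.
    print(network_statement_matches("192.168.1.1", "0.0.0.0", "255.255.255.255"))
    # A narrower statement matches only addresses in 10.0.0.0/24.
    print(network_statement_matches("10.0.0.5", "10.0.0.0", "0.0.0.255"))
    print(network_statement_matches("10.0.1.5", "10.0.0.0", "0.0.0.255"))
```

With an all-ones wildcard no bits are compared at all, so every addressed interface on the box ends up in the OSPF process.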

NHRP Convergence Issues in Multi-Hub DMVPN Networks

Summary for differently attentive: A hub router failure in multi-hub DMVPN networks can cause spoke-to-spoke traffic disruptions that last up to three minutes.

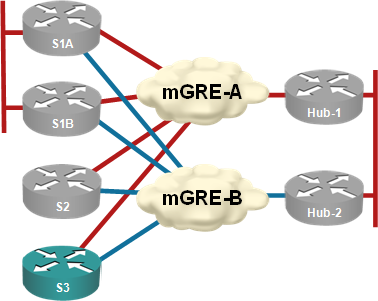

Almost every DMVPN design I’ve seen has multiple hubs for redundancy purposes. I’ve always preached the “one hub per DMVPN tunnel” mantra (see the diagram below) to those who were willing to listen, citing “NHRP issues after hub failure” as one of the main reasons you should not have two or more hubs per DMVPN tunnel.

Each hub router controls an independent DMVPN tunnel

Interesting links (2011-05-01)

Working on May Day feels like an oxymoron, but Sundays are about the only time I can clean up my overflowing Inbox.

The best post I’ve stumbled across recently is undoubtedly 38 life lessons I’ve learned in 38 years (thank you, @greg_meehan). I will try to remember the slow down one. Another great one: Managing IT people from Storagebod. Been there, seen that (and failed a few times).

And here’s the usual long list of links:

OpenFlow 1.1 in hardware: I was wrong (again)

Earlier this month I wrote “we’ll probably have to wait at least a few years before we’ll see a full-blown hardware product implementing OpenFlow 1.1.” (and probably repeated something along the same lines during the OpenFlow Packet Pushers podcast). I was wrong (and I won’t split hairs and claim that an academic proof-of-concept doesn’t count). Here it is: @nbk1 pointed me to a 100 Gbps switch implementing the latest-and-greatest OpenFlow 1.1.

DMVPN Spoke NHRP Behavior Changed in IOS Release 15.0M

In the good old days, we (thought we) knew how Phase 2 DMVPN works and what happens when the spoke-to-spoke session cannot be established. As I discovered when developing the lab configurations for the DMVPN: New Features in IOS Release 15 webinar, that behavior has forever changed (and not for the better) sometime in the 12.4T (or 15.0M) release. I blame the introduction of NAT awareness in IOS release 12.4(15)T, but it could be another totally unrelated change.

New Data Center switches from Force10

Force10 has just launched a new series of data center switches. The ZettaScale switches are, as one would expect from Force10, down-to-earth high-performance low-footprint products – a good option for those network engineers that like building high-density high-performance data centers with minimal feature overload.

All the information in this post is based on the briefing I received from Force10 last week, the draft materials they sent me and the subsequent answers to my questions. I haven’t been able to touch the boxes or read the product documentation yet.

Virtual network appliances: benefits and drawbacks

A while ago I decided to figure out how well various vendors support virtualized networking (one of the answers: some of the solutions don’t scale) and what can be done with virtual network appliances (I was pleasantly surprised by F5’s BIG-IP LTM VE and Vyatta). You’ll find some of my other thoughts on this subject in the Virtual network appliances: Benefits and drawbacks article published by SearchNetworking.

Spoke-to-Spoke IP Multicast over DMVPN?

A long-time reader has sent me an intriguing question: “would IP multicast work between DMVPN spokes?” In theory, the answer is “we could make it work”, but we all know theory and practice are not the same thing.

To make IP multicast work between DMVPN spokes, you’d need to configure multicast propagation between them with the ip nhrp map multicast remote-spoke-NBMA command. In a small DMVPN network where you need IP multicast only between a handful of spokes, that might even work; obviously this trick does not scale for a number of reasons:

Published on , commented on July 6, 2022

OpenFlow FAQ: Will the Hype Ever Stop?

Network World published another masterpiece last week: FAQ: What is OpenFlow and why is it needed? Following the physics-changing promises made during the Open Networking Foundation launch, one would hope to get some straight facts; obviously things don’t work that way. Let’s walk through some of the points. While most of them might not be too incorrect from an oversimplified perspective, they do over-hype a potentially useful technology way out of proportion.

NW: “OpenFlow is a programmable network protocol designed to manage and direct traffic among routers and switches from various vendors.” This one is just a tad misleading. OpenFlow is actually a protocol that allows a controller to download forwarding tables into one or more switches. Whether that manages or directs traffic depends on what the controller is programmed to do.
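To make the distinction concrete, here is a toy Python model of the idea. It is not the OpenFlow wire protocol and not any real controller's API; every class and field name is hypothetical. The controller downloads match/action entries into a switch's table, and the switch does nothing but look packets up in it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class FlowEntry:
    """One forwarding-table row: match a destination MAC, output to a port."""
    dst_mac: str
    out_port: int


@dataclass
class Switch:
    """A switch with no local intelligence: it only holds downloaded entries."""
    name: str
    flow_table: Dict[str, FlowEntry] = field(default_factory=dict)

    def install(self, entry: FlowEntry) -> None:
        self.flow_table[entry.dst_mac] = entry

    def forward(self, dst_mac: str) -> Optional[int]:
        """Return the output port, or None on a table miss."""
        entry = self.flow_table.get(dst_mac)
        return entry.out_port if entry is not None else None


class Controller:
    """Whatever this program decides is what the switches end up doing."""

    def __init__(self, switches: List[Switch]) -> None:
        self.switches = switches

    def push(self, entries: Dict[str, List[FlowEntry]]) -> None:
        """Download per-switch forwarding entries, keyed by switch name."""
        for switch in self.switches:
            for entry in entries.get(switch.name, []):
                switch.install(entry)


if __name__ == "__main__":
    s1 = Switch("s1")
    Controller([s1]).push({"s1": [FlowEntry("00:00:00:00:00:01", 1)]})
    print(s1.forward("00:00:00:00:00:01"))  # entry installed by the controller
    print(s1.forward("00:00:00:00:00:02"))  # table miss
```

In this model the protocol is only the `push` step; any traffic management lives in whatever logic builds the entries.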

Distributed Firewalls: a Ticking Bomb

Are you ever asked to use a layer-2 Data Center Interconnect to implement distributed active-active firewalls, supposedly solving all the L3 issues and asymmetrical-traffic-flow-over-stateful-firewalls problems? Don’t be surprised; I was stupid enough (or maybe just blinded by the L2 glitter) in 2010 to draw the following diagram illustrating a sample use of VPLS services:

Interesting links (2011-04-17)

Data Center

RFC 6165 documents the layer-2 related IS-IS extensions. No more excuses along the “TRILL standards are not ready” lines. Are you listening, Brocade and HP?

Data Center Feng Shui: Architecting for Predictable Performance. A nice introductory explanation of advantages of hardware-based forwarding.

When is a Fabric not a Fabric? Juniper continues the “who’s the smartest kid on the block” game. I thought we’re all adults; stop the “bright future” promises and get the products out.

OSPF Route Selection Rules

OSPF implementation in Cisco IOS deviates slightly from OSPF/NSSA standards (RFC 2328 and RFC 3101). These are the OSPF route selection rules as implemented by Cisco IOS release 12.2(33)SRE1 (all recent releases probably behave identically):

VPLS versus OTV for L2 Data Center Interconnect (DCI)

DJ Spry asked an interesting question in a comment to my MPLS/VPN in DCI designs post: “Why would one choose OTV over MPLS/VPN?” The answer is simple: it depends on what you need. MPLS/VPN provides path isolation between layer-3 domains (routed networks) across MPLS or IP infrastructure whereas OTV provides layer-2 transport (and VLAN-based path isolation) across IP infrastructure. However, it does make sense to compare OTV with VPLS (which was DJ Spry’s next question). Apart from the obvious platform dependence (OTV runs on Nexus 7000, VPLS runs on Catalyst 6500/Cisco 7600 and a few other routers) which might disappear once ASR1K gets the rumored OTV support, there’s a huge gap in functionality and complexity between the two layer-2 transport technologies.

MPLS/VPN in Data Center Interconnect (DCI) Designs

Yesterday I was describing a dreamland in which hypervisor switches would use MPLS/VPN to implement seamless scalable VM mobility across IP+MPLS infrastructure. Today I’ll try to get down to earth; there are exciting real-life designs using MPLS/VPN between data centers. You can implement them with Catalyst 6500/Cisco 7600 or ASR1K and will soon be able to do the same with Nexus 7000.

Most data centers have numerous security zones, from external network, DMZ, web servers and application servers to database servers, IP-based storage and network management. When you design active/active data centers, you want to keep the security zones strictly separate and the “usual” solution proposed by the L2-crazed crowd is to bridge multiple VLANs across the DCI infrastructure (in the next microsecond they start describing the beauties of their favorite L2 DCI technology).

(v)Cloud Architects, ever heard of MPLS?

Duncan Epping, the author of the fantastic Yellow Bricks virtualization blog, tweeted a very valid question following my vCDNI scalability (or lack thereof) post: “What you feel would be suitable alternative to vCD-NI as clearly VLANs will not scale well?”

Let’s start with the very basics: modern data center switches support anywhere between 1K and 4K (the theoretical limit based on 802.1q framing) VLANs. If you need more than 1K VLANs, you’ve either totally messed up your network design or you’re a service provider offering multi-tenant services (recently relabeled by your marketing department as IaaS cloud). Service Providers had to cope with multi-tenant networks for decades ... only they haven’t realized those were multi-tenant networks and called them VPNs. Maybe, just maybe, there’s a technology out there that’s been field-proven, known to scale, and works over switched Ethernet.
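The 4K ceiling follows directly from the 12-bit VLAN ID field in the 802.1Q tag. A quick sketch of the arithmetic (the constant and function names are mine, for illustration only):

```python
# 802.1Q carries the VLAN ID in a 12-bit field of the tag.
VID_BITS = 12

# VID 0 (priority-tagged frames) and VID 4095 (0xFFF) are reserved.
RESERVED_VIDS = {0, 0xFFF}


def usable_vlan_count() -> int:
    """Return the number of VLAN IDs left after removing the reserved values."""
    total = 2 ** VID_BITS
    return total - len(RESERVED_VIDS)


if __name__ == "__main__":
    print(2 ** VID_BITS)        # size of the raw ID space
    print(usable_vlan_count())  # IDs usable for real VLANs
```

The raw ID space is 4096 values, of which 4094 can be assigned to real VLANs.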