VMware Virtual Switch: no need for STP

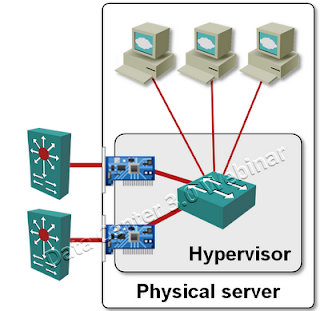

During the Data Center 3.0 webinar I always mention that you can connect a VMware ESX server (with its embedded virtual switch) to the network through multiple active uplinks without link aggregation. The response is very predictable: I get a few “how does that work” questions within seconds.

VMware did a great job with the virtual switch embedded in the VMware hypervisor (vNetwork Standard Switch – vSS – or vNetwork Distributed Switch – vDS): it uses special forwarding rules (I call them split horizon switching, Cisco UCS documentation uses the term End Host Mode) that prevent forwarding loops without resorting to STP or port blocking.

Physical and virtual NICs

The ports of a virtual switch (Nexus 1000V calls its virtual switch the Virtual Ethernet Module – VEM) are connected to either physical Network Interface Cards (uplinks) or virtual NICs of the virtual machines. The physical ports can be connected to one or more physical switches and don’t have to be aggregated into a port channel.

Ports are not equal

In a traditional Ethernet switch, the same forwarding rules are used for all ports. The virtual switch uses different forwarding rules for vNICs and uplinks.

No MAC address learning

The hypervisor knows the MAC addresses of all virtual machines running in the ESX server; there’s no need to perform MAC address learning.
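A minimal Python sketch of the idea: the forwarding table is built from the hypervisor's VM inventory instead of being learned from traffic. The `VNic` class, the `build_mac_table` helper and the sample addresses are hypothetical names made up for illustration; this is not VMware code or a VMware API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VNic:
    """Hypothetical record for one virtual NIC known to the hypervisor."""
    vm_name: str
    mac: str
    port_group: str


def build_mac_table(vnics):
    """Map each (port group, MAC) pair to the vNIC that owns it.

    Nothing is learned from received frames: the table is derived
    entirely from the configured inventory.
    """
    return {(v.port_group, v.mac.lower()): v for v in vnics}


# Illustrative inventory (made-up VMs and MAC addresses).
inventory = [
    VNic("web-1", "00:50:56:00:00:01", "VLAN10"),
    VNic("db-1", "00:50:56:00:00:02", "VLAN20"),
]
mac_table = build_mac_table(inventory)
```

Any destination MAC address missing from this table is, by construction, somewhere outside the ESX server.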

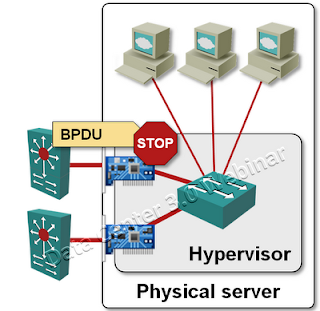

Spanning Tree Protocol is ignored

The virtual switch does not run Spanning Tree Protocol (STP) and does not send STP Bridge Protocol Data Units (BPDUs). STP BPDUs received by the virtual switch are ignored. Uplinks are never blocked based on STP information.

The switch ports to which you connect the ESX servers should be configured with bpduguard to prevent forwarding loops due to wiring errors. As ESX doesn’t run STP, you should also configure spanning-tree portfast on these ports.

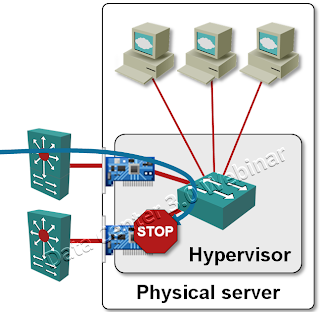

Split-horizon forwarding

Packets received through one of the uplinks are never forwarded to other uplinks. This rule prevents forwarding loops through the virtual switch.

Limited flooding of broadcasts/multicasts

Broadcast or multicast packets originated by a virtual machine are sent to all other virtual machines in the same port group (VMware terminology for a VLAN). They are also sent through one of the uplinks like a regular unicast packet (they are not flooded through all uplinks). This ensures that the outside network receives a single copy of the broadcast.

The uplink through which the broadcast packet is sent is chosen based on the load balancing mode configured for the virtual switch or the port group.

Broadcasts/multicasts received through an uplink port are sent to all virtual machines in the port group (identified by VLAN tag), but not to other uplinks (see split-horizon forwarding).

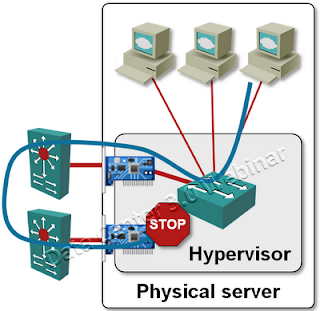
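The broadcast rules above can be sketched in a few lines of Python. This is a simplified model, not the actual vSwitch implementation: ports are plain strings, a single port group is assumed, and `pick_uplink` is a hypothetical stand-in for whatever load balancing mode is configured.

```python
def flood_targets(ingress, port_group_vnics, uplinks, pick_uplink):
    """Return the ports that receive a copy of a broadcast/multicast frame.

    ingress          -- port the frame arrived on (a vNIC or an uplink)
    port_group_vnics -- vNIC ports in the frame's port group
    uplinks          -- physical uplink ports of the virtual switch
    pick_uplink      -- callable returning one uplink for a given vNIC
    """
    # Every other VM in the port group gets a copy.
    targets = [p for p in port_group_vnics if p != ingress]
    if ingress in uplinks:
        # Split horizon: a frame from an uplink never goes to another uplink.
        return targets
    # Frame came from a VM: exactly one copy leaves the host.
    return targets + [pick_uplink(ingress)]


uplinks = ["vmnic0", "vmnic1"]
vnics = ["vm-a", "vm-b", "vm-c"]


def pin(vnic):
    """Toy load balancing mode: pin every vNIC to the first uplink."""
    return uplinks[0]


print(flood_targets("vm-a", vnics, uplinks, pin))    # ['vm-b', 'vm-c', 'vmnic0']
print(flood_targets("vmnic1", vnics, uplinks, pin))  # ['vm-a', 'vm-b', 'vm-c']
```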

No flooding of unknown unicasts

Unicast packets sent from virtual machines to unknown MAC addresses are sent through one of the uplinks (selected based on the load balancing mode). They are not flooded.

Unicast packets received through the uplink ports and addressed to unknown MAC addresses are dropped.
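As a rough Python sketch of the two unicast rules (again a toy model under stated assumptions: one port group, ports as strings, and a hypothetical `pick_uplink` callable standing in for the configured load balancing mode):

```python
def forward_unicast(dst_mac, ingress, local_macs, uplinks, pick_uplink):
    """Return the single egress port for a unicast frame, or None to drop it.

    local_macs -- dict of MAC address -> vNIC port, known to the hypervisor
                  (configured, never learned from traffic)
    """
    if dst_mac in local_macs:
        # Destination is a local VM: deliver directly.
        return local_macs[dst_mac]
    if ingress in uplinks:
        # Unknown destination arriving from the outside: drop, never flood.
        return None
    # Unknown destination sent by a VM: it must be outside, use one uplink.
    return pick_uplink(ingress)


local_macs = {"00:50:56:00:00:01": "vm-a", "00:50:56:00:00:02": "vm-b"}
uplinks = ["vmnic0", "vmnic1"]


def pin(vnic):
    """Toy load balancing mode: pin every vNIC to the second uplink."""
    return uplinks[1]


print(forward_unicast("00:50:56:00:00:02", "vm-a", local_macs, uplinks, pin))    # vm-b
print(forward_unicast("00:11:22:33:44:55", "vm-a", local_macs, uplinks, pin))    # vmnic1
print(forward_unicast("00:11:22:33:44:55", "vmnic0", local_macs, uplinks, pin))  # None
```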

Reverse Path check based on source MAC address

The virtual switch sends a single copy of a broadcast/multicast/unknown unicast packet to the outside network (see the no-flooding rules above), but the physical switch always performs full flooding and sends copies of the packet back to the virtual switch through all other uplinks. VMware thus has to check the source MAC addresses of packets received through the uplinks. A packet received through one of the uplinks with a source MAC address belonging to one of the local virtual machines is silently dropped.
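The check itself is simple. The following Python sketch models it as described above; `accept_from_uplink` and the sample addresses are hypothetical, and the real implementation may well use additional criteria.

```python
def accept_from_uplink(src_mac, local_macs):
    """Reverse path check for a frame received on an uplink.

    Returns False (drop) when the source MAC belongs to a local VM: such a
    frame can only be our own broadcast or unknown unicast, flooded back
    by the physical switch through another uplink.
    """
    return src_mac not in local_macs


# MAC addresses of the VMs running on this host (made-up values).
local_macs = {"00:50:56:00:00:01", "00:50:56:00:00:02"}

# A VM's own broadcast coming back through the second uplink is dropped.
print(accept_from_uplink("00:50:56:00:00:01", local_macs))  # False

# A frame from an external host is accepted.
print(accept_from_uplink("00:11:22:33:44:55", local_macs))  # True
```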

Hyper-V is different

If your server administrators are used to the ESX virtual switch and start deploying Microsoft’s Hyper-V, expect a few fun moments (Microsoft is making sure you’ll have them: the Hyper-V Virtual Networking Best Practices document does not mention redundant uplinks at all).

A potential solution is to bridge multiple physical NICs in Hyper-V ... creating a nice forwarding loop that will melt down large parts of your network (more so if you do that on a powerful server with multiple 10GE uplinks like the Cisco UCS blade servers). Configuring bpduguard on all portfast switch ports is thus a must.

This sounds like the same technology they use in UCS integral nexus 'interconnect'. They use mac-pinning on the uplinks and you can selectively choose which VLANs actively traverse each link if you so wish. Any particular reason you didn't use the term mac-pinning?

"MAC pinning" is just one of the possible load balancing methods the vSwitch can use. VMware uses the descriptive term "Route based on source MAC hash".

Of course, if ESX doesn't forward the BPDUs from a VM, then none of that happens and enabling bpduguard is safe. Definitely not worth the risk until I'm sure, though! :)