BGP Route Reflector Myths

New networking myths are continuously popping up. Here’s a BGP one I encountered a few days ago:

You don’t need IBGP sessions between BGP route reflectors

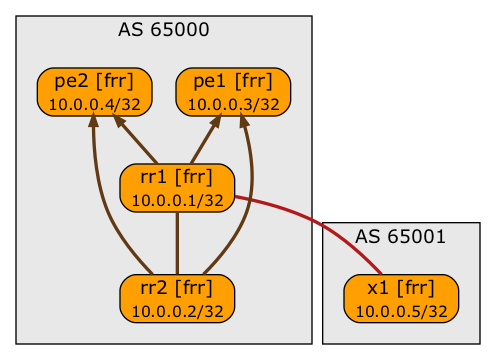

In general, that’s clearly wrong, as illustrated by this setup:

Figure: BGP sessions (arrows indicate RR clients)

- RR1 and RR2 are route reflectors

- PE1 and PE2 are route reflector clients

- RR1 has an external BGP session with X1

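Without the IBGP session between RR1 and RR2, RR2 would never learn the routes advertised by X1 (PE1 and PE2 are not route reflectors, so they don’t pass IBGP-learned routes to other IBGP peers), and X1 and RR2 could not communicate. Maybe you don’t consider that a big deal, in which case add X2 connected to RR2.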
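The propagation argument can be checked with a toy model. The sketch below is a simplification, not how any real BGP implementation works: it applies the usual IBGP advertisement rules plus the RFC 4456 reflection rules (EBGP-learned routes go to all IBGP peers, routes from a client are reflected to all other peers, routes from a non-client are reflected to clients only, and a router that isn’t a route reflector never passes IBGP-learned routes to other IBGP peers). It ignores best-path selection, and the function name `learners` is hypothetical.

```python
from collections import deque


def learners(sessions, clients, origin):
    """Return the routers that receive at least one copy of an EBGP-learned route.

    Toy model: ignores best-path selection and assumes every copy a router
    receives is passed on whenever the IBGP/route-reflector rules allow it.

    sessions -- list of (router, router) tuples, one per IBGP session
    clients  -- dict mapping each route reflector to the set of its clients
    origin   -- router that learned the route over EBGP (RR1 in the diagram)
    """
    peers = {}
    for a, b in sessions:
        peers.setdefault(a, set()).add(b)
        peers.setdefault(b, set()).add(a)

    def advertise_to(router, learned_from):
        my_peers = peers.get(router, set())
        if learned_from is None:          # EBGP-learned: send to every IBGP peer
            return my_peers
        my_clients = clients.get(router, set())
        if not my_clients:                # not a route reflector: no IBGP re-advertisement
            return set()
        if learned_from in my_clients:    # from a client: reflect to all other peers
            return my_peers - {learned_from}
        return my_peers & my_clients      # from a non-client: reflect to clients only

    # Each state is (router holding a copy, IBGP peer it came from, or None for EBGP)
    seen = {(origin, None)}
    queue = deque(seen)
    while queue:
        router, learned_from = queue.popleft()
        for peer in advertise_to(router, learned_from):
            state = (peer, router)
            if state not in seen:
                seen.add(state)
                queue.append(state)
    return {router for router, _ in seen}


clients = {"RR1": {"PE1", "PE2"}, "RR2": {"PE1", "PE2"}}
client_sessions = [(rr, pe) for rr in ("RR1", "RR2") for pe in ("PE1", "PE2")]

print(sorted(learners(client_sessions + [("RR1", "RR2")], clients, "RR1")))
# ['PE1', 'PE2', 'RR1', 'RR2']
print(sorted(learners(client_sessions, clients, "RR1")))
# ['PE1', 'PE2', 'RR1']
```

The second call shows RR2 missing from the result: PE1 and PE2 get X1’s routes from RR1 but, not being route reflectors, never pass them on to RR2.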

Next question: where did that myth come from? Imagine a data center fabric running IBGP (for EVPN) on top of an IGP. You could have dedicated out-of-band route reflectors, or you could use spine switches as route reflectors. Spine switches shouldn’t have external connections (more about that in the Leaf-and-Spine Fabric Architectures webinar), and a typical leaf-and-spine fabric wouldn’t have spine-to-spine links anyway. IBGP sessions between spine switches do seem like overkill.

The idea of not having a full mesh of IBGP sessions between spine switches still sounds a bit off. Without that full mesh, you’re losing redundancy. For example, in our sample network PE1 receives routes from X1 via RR1 and RR2; if the BGP session between RR1 and PE1 is lost for any reason, PE1 still has a route toward X1 (the one received from RR2). Remove the IBGP session between RR1 and RR2 and you’ve lost that backup update propagation path.
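In the sample network, the redundancy argument boils down to a simple condition: PE1 keeps X1’s routes if the RR1-PE1 session is up, or if both the RR1-RR2 and RR2-PE1 sessions are up (PE2 is not a route reflector, so its sessions never carry X1’s routes toward PE1). The following sketch, with hypothetical helper names and session labels, lists the sessions whose loss alone cuts PE1 off, with and without the IBGP session between the route reflectors:

```python
def pe1_has_x1_routes(up):
    """True if PE1 still receives X1's routes, given the set of sessions that are up.

    In the sample network the update reaches PE1 directly from RR1, or via RR2
    (which needs the RR1-RR2 session); PE2 never re-advertises it.
    """
    return "RR1-PE1" in up or {"RR1-RR2", "RR2-PE1"} <= up


def single_points_of_failure(configured):
    """Sessions whose loss alone leaves PE1 without X1's routes."""
    return [s for s in configured if not pe1_has_x1_routes(set(configured) - {s})]


with_rr_mesh = ["RR1-PE1", "RR2-PE1", "RR1-RR2"]
without_rr_mesh = ["RR1-PE1", "RR2-PE1"]

print(single_points_of_failure(with_rr_mesh))     # []
print(single_points_of_failure(without_rr_mesh))  # ['RR1-PE1']
```

Only single-session failures are checked here; extending the loop to pairs of failed sessions (for example with `itertools.combinations`) would show which double failures are still survivable.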

The BGP table on PE1 contains both paths:

```
pe1# sh ip bgp 10.0.0.5
BGP routing table entry for 10.0.0.5/32
Paths: (2 available, best #1, table default)
  Not advertised to any peer
  65001
    10.0.0.1(rr1) (metric 20) from rr1(10.0.0.1) (10.0.0.1)
      Origin IGP, metric 0, localpref 100, valid, internal, best (Cluster length)
  65001
    10.0.0.1(rr2) (metric 20) from rr2(10.0.0.2) (10.0.0.1)
      Origin IGP, metric 0, localpref 100, valid, internal
      Originator: 10.0.0.1, Cluster list: 10.0.0.1
```
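The `best (Cluster length)` reason in the printout comes from one of the late tie-breakers of the BGP decision process: when the earlier steps don’t pick a winner and the router ID (replaced by the ORIGINATOR_ID for reflected routes, per RFC 4456) is the same, the path with the shorter cluster list wins. The sketch below models only those last steps (IGP metric to the next hop, router or originator ID, cluster list length, neighbor address), assumes everything earlier is tied, and uses hypothetical class and field names. RR2’s own router ID (10.0.0.2) is an assumption; the printout shows only the originator ID for that path.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address
from typing import List, Optional


@dataclass
class Path:
    """One BGP path as seen on PE1 (hypothetical, heavily simplified)."""
    neighbor: str
    neighbor_address: IPv4Address
    neighbor_router_id: IPv4Address
    igp_metric: int
    originator_id: Optional[IPv4Address] = None
    cluster_list: List[IPv4Address] = field(default_factory=list)


def late_tiebreak_key(path: Path):
    """Sort key for the last decision steps; lower is better.

    Assumes weight, local preference, AS path, origin, MED and EBGP/IBGP already tied.
    """
    # For a reflected route, the ORIGINATOR_ID is compared instead of the neighbor's router ID
    if path.originator_id is not None:
        router_id = path.originator_id
    else:
        router_id = path.neighbor_router_id
    return (
        path.igp_metric,             # lowest IGP metric to the next hop
        int(router_id),              # lowest router ID (or originator ID)
        len(path.cluster_list),      # shortest cluster list
        int(path.neighbor_address),  # lowest neighbor address
    )


# Values taken from the PE1 printout; RR2's router ID is an assumption
paths = [
    Path("rr1", IPv4Address("10.0.0.1"), IPv4Address("10.0.0.1"), igp_metric=20),
    Path("rr2", IPv4Address("10.0.0.2"), IPv4Address("10.0.0.2"), igp_metric=20,
         originator_id=IPv4Address("10.0.0.1"),
         cluster_list=[IPv4Address("10.0.0.1")]),
]

best = min(paths, key=late_tiebreak_key)
print(best.neighbor)  # rr1 (same metric and originator, shorter cluster list)
```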

In theory, the need for the IBGP sessions between spine switches diminishes as the number of spine switches grows (unless someone “wisely” decided to use only two of the spines as route reflectors). In practice, I prefer not to over-optimize my designs; I’ve been bitten too many times by what seemed like an awesome idea at the time I implemented it.
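To put rough numbers on “diminishes”, here’s a back-of-the-envelope sketch using a simplified independent-failure model (an assumption, not the method from any particular reliability course): two leaves are clients of all spine route reflectors, every leaf-to-spine IBGP session is down independently with probability `p`, and the spine-to-spine sessions, when present, are assumed never to fail. The function name and the value of `p` are hypothetical.

```python
def loss_probability(n_rr: int, p: float, rr_mesh: bool) -> float:
    """Probability that one leaf (RR client) loses another leaf's routes.

    Hypothetical model: both leaves are clients of all n_rr route reflectors,
    every leaf-to-RR session is down independently with probability p, and
    RR-to-RR sessions (when rr_mesh is True) are assumed never to fail.
    """
    if not rr_mesh:
        # Each RR offers one path (leaf A -> RR -> leaf B) that breaks if either
        # of its two sessions is down; the routes are lost only if all paths break.
        path_down = 1 - (1 - p) ** 2
        return path_down ** n_rr
    # With a full RR mesh, any RR hearing from leaf A passes the routes to every
    # other RR, so they are lost only if all of leaf A's or all of leaf B's
    # sessions are down.
    all_sessions_down = p ** n_rr
    return 1 - (1 - all_sessions_down) ** 2


p = 0.01  # assumed per-session failure probability, for illustration only
for n in (2, 3, 4):
    print(f"{n} RRs: no mesh {loss_probability(n, p, False):.2e}, "
          f"full mesh {loss_probability(n, p, True):.2e}")
```

In this model both probabilities drop quickly as more spines act as route reflectors, and the absolute gap between the meshed and non-meshed designs shrinks with them; with only two route reflectors, the missing mesh roughly doubles the loss probability.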

Lesson Learned

You can’t beat the laws of physics (or networking). A BGP router in an autonomous system should either participate in the IBGP full mesh or be a client of at least two route reflectors. For more details, please read BGP Route Reflector Details.
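The rule above is easy to turn into a sanity check. A minimal sketch, assuming a hypothetical input format (a list of IBGP sessions plus a map of route reflectors to their clients) rather than any real configuration parser; routers that aren’t anybody’s client are treated as the ones that have to form the full mesh:

```python
def rule_violations(routers, sessions, clients):
    """Routers that neither join the IBGP full mesh nor are clients of 2+ reflectors.

    routers  -- all IBGP speakers in the autonomous system
    sessions -- iterable of (router, router) tuples, one per IBGP session
    clients  -- dict mapping each route reflector to its configured clients
    (hypothetical input format; real configurations would have to be parsed first)
    """
    peered = {frozenset(s) for s in sessions}
    # Count only the reflectors a client actually has a session with
    reflectors_of = {
        r: {rr for rr, cl in clients.items() if r in cl and frozenset((rr, r)) in peered}
        for r in routers
    }
    # Routers that are nobody's client have to form the full mesh among themselves
    mesh_members = [r for r in routers if not reflectors_of[r]]

    violations = []
    for r in routers:
        if len(reflectors_of[r]) >= 2:
            continue
        in_full_mesh = all(frozenset((r, m)) in peered for m in mesh_members if m != r)
        if not in_full_mesh:
            violations.append(r)
    return sorted(violations)


routers = ["RR1", "RR2", "PE1", "PE2"]
clients = {"RR1": {"PE1", "PE2"}, "RR2": {"PE1", "PE2"}}
client_sessions = [(rr, pe) for rr in ("RR1", "RR2") for pe in ("PE1", "PE2")]

print(rule_violations(routers, client_sessions, clients))                     # ['RR1', 'RR2']
print(rule_violations(routers, client_sessions + [("RR1", "RR2")], clients))  # []
```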

Does the above rule cover all possible scenarios? The answer is left as an exercise for the careful reader.

Appreciate you sharing your insights and lessons learnt from the past.

I think I may know where that silly notion came from, and you are right, of course: one can construct a network topology in which iBGP between route reflectors is useful or required.

However, in a topology where the route reflectors are intended as equivalent redundant options to form a full iBGP mesh, they would (normally) each have exactly the same connectivity to other devices. If not, you would have snowflakes (https://blog.ipspace.net/2021/12/worth-reading-snowflake-network-devices.html). This is especially true for a cluster of 'pure' route reflectors that handle only the control plane and do no packet forwarding.

So: Do we need iBGP sessions between truly equivalent, fully connected route reflectors?

How about "Without that full mesh you’re losing redundancy" and "the need for the IBGP sessions between spine switches diminishes as the number of spine switches grows (unless someone 'wisely' decided to use only two of the spines as route reflectors)"?

As always, pick your poison (aka "it depends"), and whatever you do, make sure you know what you're doing instead of following PowerPoint-based "best practices".

Ivan, honestly I fail to understand how:

In a "normal" design, there should be no route that is known to only one RR. As Jeroen stated above, having iBGP between equivalent, fully connected route reflectors does not make sense. The RR's purpose in this scenario is to propagate routes, not to use them.

Whether we have 2 or 200 spine switches, what's the difference? How does that affect redundancy, reliability, or the very need for iBGP sessions between them? Imagine your picture above with rr[3-100] added. Does it change anything?

There's a non-zero probability that a BGP session is down. There's a lower, but still non-zero, probability that nobody notices (battle-hardened HSRP old-timers are probably realizing where this is going by now). Bringing another BGP session down could thus result in unexpected partial connectivity, which could be avoided by having the IBGP session between route reflectors.

Having more than two route reflectors significantly reduces the probability of an overall failure, even without the IBGP full mesh between them. You'll find a detailed explanation of how to compute that probability in https://ipspace.net/Reliability.

Obviously, you can consider the probability of a configuration or software error to be low enough to make this a scholastic argument ;) and you might be right ;))