One-Tier Data Centers? Now Bigger Than Ever with Arista 7300 Switches

Early November seems to be the right time for the data center product harvest: after last week's Juniper launch, Arista announced its new switches on Monday. The launch was everything we've come to expect from Arista: better, faster, more efficient switches … and a dash of PureMarketing™ – the Splines.

The Switches

Arista announced X-series switches with two product lines:

- 7250QX-64 Top-of-Rack switch with 64 40GE ports in a 2RU form factor – a third less than what Nexus 6004 delivers, but in half the space (Nexus 6004 is 4RU high);

- 7300-series modular switches with 4, 8 or 16 linecards, offering up to 512 40GE ports or 2048 10GE ports – almost twice as many as the 7500E models launched only half a year ago.
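The port counts in the list above hang together with a bit of arithmetic. The sketch below is a back-of-the-envelope check, not vendor data. It assumes (this is not stated above) that the 512 x 40GE maximum applies to the 16-slot chassis and that each 40GE port breaks out into four 10GE ports. It also infers the Nexus 6004 port count (96) from "a third less".

```python
# Back-of-the-envelope port arithmetic based on the figures quoted above.
# Assumptions: the 512 x 40GE maximum is for the 16-linecard chassis, and
# every 40GE port can be split into 4 x 10GE ports with breakout cables.

def ports_per_linecard(total_ports: int, linecards: int) -> int:
    """Ports each linecard must provide to reach the chassis maximum."""
    return total_ports // linecards

def breakout_10ge(ports_40ge: int, lanes: int = 4) -> int:
    """10GE ports obtained by splitting every 40GE port into `lanes` lanes."""
    return ports_40ge * lanes

def ports_per_ru(ports: int, rack_units: int) -> float:
    """Port density per rack unit."""
    return ports / rack_units

per_card = ports_per_linecard(512, 16)       # 40GE ports per linecard
total_10ge = breakout_10ge(512)              # 10GE ports with breakout
nexus_ports = 64 * 3 // 2                    # 64 is "a third less" than this
arista_density = ports_per_ru(64, 2)         # 7250QX-64 in 2RU
nexus_density = ports_per_ru(nexus_ports, 4) # Nexus 6004 in 4RU

print(per_card, total_10ge, nexus_ports, arista_density, nexus_density)
```

Under these assumptions the 7250QX-64 packs 32 40GE ports per rack unit versus 24 for the Nexus 6004, which is what "a third fewer ports in half the space" works out to.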

You can read more about the new hardware in John Herbert’s blog post.

One-Tier Data Center

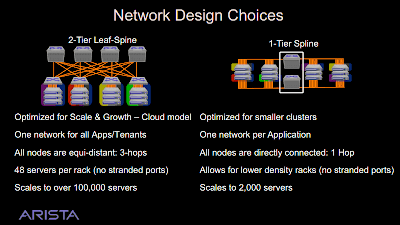

With a very-high-density modular switch in its portfolio, Arista's marketing got creative: there's no need for leaf-and-spine architectures if you can connect all the servers to a single pair of giant switches.

The idea is not new (and it has a few limitations – more about them in an upcoming blog post). We've been using similar designs for years, because most enterprise data centers these days don't need more than a rack of high-density servers connected to two 10GE ToR switches anyway.

The only difference is the scale. Until now you could connect around 50 servers to two ToR switches (you have to dedicate some ports to inter-switch links, storage devices and external connectivity); now you can build a 2-switch network with forty times as many servers.
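The "forty times" figure follows from each server being dual-homed: every server uses one port on each of the two switches, so the server count is roughly the per-switch port count minus the ports set aside for other purposes. The sketch below uses hypothetical numbers: a 64-port 10GE ToR switch and the reserved-port counts are illustrative assumptions chosen to match "around 50 servers", not figures from the launch.

```python
# Rough server-count comparison for a two-switch design in which every
# server is dual-homed (one uplink to each of the two switches).
# The reserved-port values are hypothetical placeholders for inter-switch
# links, storage devices and external connectivity.

def dual_homed_servers(ports_per_switch: int, reserved_ports: int) -> int:
    """Each server consumes exactly one port on each of the two switches."""
    return ports_per_switch - reserved_ports

# Hypothetical 64-port 10GE ToR switch with 14 ports reserved.
tor_servers = dual_homed_servers(ports_per_switch=64, reserved_ports=14)

# 7300 chassis with 2048 x 10GE ports; 48 reserved ports is an assumption.
chassis_servers = dual_homed_servers(ports_per_switch=2048, reserved_ports=48)

print(tor_servers, chassis_servers, chassis_servers // tor_servers)
```

With these illustrative inputs the pair of ToR switches serves 50 servers, the pair of chassis serves 2000, and the ratio is 40.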

But Wasn’t QFabric a One-Tier Data Center?

Definitely – at least from the marketing perspective. QFabric is a single management entity, but it's still a traditional leaf-and-spine architecture with 1:3 oversubscription and 3 hops across the network (five if you count the internal Clos fabric in the interconnect nodes). Arista is the first vendor that allows you to build a one-tier (maybe I should write one-hop) line-rate network connecting 1000+ servers.
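The hop counts and the oversubscription ratio mentioned above can be reproduced with a small model. This is a minimal sketch under stated assumptions. The leaf port layout (48 x 10GE toward servers, 4 x 40GE toward the interconnect) is a hypothetical example that produces the 1:3 ratio. The model also treats a three-stage internal Clos in the interconnect as three hops and counts the one-tier chassis as a single hop, ignoring its internal fabric.

```python
from fractions import Fraction

def oversubscription(server_ports: int, server_gbps: int,
                     uplinks: int, uplink_gbps: int) -> Fraction:
    """Server-facing bandwidth divided by uplink bandwidth on one leaf."""
    return Fraction(server_ports * server_gbps, uplinks * uplink_gbps)

def leaf_spine_hops(spine_stages: int = 1) -> int:
    """Ingress leaf + spine (possibly a multi-stage Clos inside) + egress leaf."""
    return 1 + spine_stages + 1

# In the one-tier design, both servers attach to the same switch.
ONE_TIER_HOPS = 1

# Hypothetical leaf: 480 Gbps toward servers, 160 Gbps toward the spine.
leaf_ratio = oversubscription(48, 10, 4, 40)

print(leaf_ratio)                          # server-to-uplink bandwidth ratio
print(leaf_spine_hops())                   # leaf, spine, leaf
print(leaf_spine_hops(spine_stages=3))     # counting a 3-stage interconnect
print(ONE_TIER_HOPS)
```

Using `Fraction` keeps non-integer ratios exact (for example 5:2), which plain integer division would silently truncate.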

Splines? Seriously?

We have the concept – one-hop line-rate network. We need a spiffy name. It’s no longer leaf-and-spine, it’s a Spline. Seriously? Which one? Maybe this?

More information

I’ll tell you more about Arista’s new switches in the update session of the Data Center Fabrics webinar, and if you need help designing your brand-new data center network, contact me.

In addition, it is not all eggs in one basket. It is just more eggs than before. And I think of it as a big, high-quality basket. Therefore, I do not see a design failure.

Spline - fictional alien beings

And then Cisco one-upped them by putting 36x40GE ports on their Trident 2 box instead of the 32x40GE Arista did.

The 36x40GE card doesn't have the Insieme chip on it and won't support ACI. Those cards are meant to be used at the edge of the network, not in the core, so if you wanted 100G you could certainly use a 77xx switch in the same role.