Category: networking fundamentals

Video: Combining Data-Link- and Network Layer Addresses

The previous videos in the How Networks Really Work webinar described some interesting details of data-link-layer addresses and network-layer addresses. Now for the final bit: how do we map an adjacent network address into a per-interface data-link-layer address?

If you answered ARP (or ND if you happen to be of the IPv6 persuasion) you’re absolutely right… but is that the only way? Watch the Combining Data-Link- and Network Layer Addresses video to find out.

Flow-Based Packet Forwarding

In the Cache-Based Packet Forwarding blog post I described what happens when someone tries to bypass the complexities of IP routing table lookup with a forwarding cache.

Now imagine you want to implement full-featured fast packet forwarding including ingress and egress ACLs, NAT, QoS… but find the required hardware (TCAM) too expensive. Wouldn’t it be nice if we could send the first packet of every flow to a CPU to figure out what to do with it, and download the results into a high-speed flow cache where they could be used to switch the subsequent packets of the same flow? Welcome to flow-based packet forwarding.
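The idea can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the 5-tuple `FlowKey`, the `FlowAction` fields, the `example_policy` slow path, and the oldest-entry eviction are hypothetical and do not describe any particular product.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    """Hypothetical flow identifier: the classic 5-tuple."""
    src_ip: str
    dst_ip: str
    protocol: int
    src_port: int
    dst_port: int


class FlowAction(NamedTuple):
    """Hypothetical result of the slow-path decision."""
    permit: bool
    out_interface: str


class FlowForwarder:
    def __init__(self, slow_path, max_flows=1024):
        self.slow_path = slow_path    # CPU-based ACL/NAT/QoS/routing decision
        self.max_flows = max_flows    # the flow cache has a finite size
        self.cache = {}               # FlowKey -> FlowAction
        self.punts = 0                # packets sent to the CPU

    def forward(self, key):
        action = self.cache.get(key)
        if action is None:
            # First packet of the flow: punt it to the slow path
            self.punts += 1
            action = self.slow_path(key)
            if len(self.cache) >= self.max_flows:
                # Evict the oldest entry (dicts keep insertion order)
                self.cache.pop(next(iter(self.cache)))
            self.cache[key] = action
        return action


def example_policy(key):
    """Hypothetical slow path: drop Telnet, send everything else to eth1."""
    if key.protocol == 6 and key.dst_port == 23:
        return FlowAction(permit=False, out_interface="")
    return FlowAction(permit=True, out_interface="eth1")
```

Only the first packet of each flow reaches `example_policy`; subsequent packets are switched from the cache. Every new flow still costs one trip to the CPU, and a full cache forces evictions.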

Video: Comparing TCP/IP and CLNP

If you were building networks in the early 1990s you probably remember at least a half-dozen different network protocols. Only one of them survived (IPv6 came later), with another one (CLNP) providing an interesting view into a totally different parallel universe that evolved using a different set of fundamental principles.

After introducing network-layer addressing, I compared the two and pointed out where one or the other was clearly better.

You might think that it makes no sense to talk about protocols that were rarely used in the old days and are almost non-existent today, but as always, those who cannot remember the past are doomed to repeat it, this time reinventing CLNP principles in IPv6-based layer-3-only data center fabrics.

… updated on Monday, February 28, 2022 16:07 UTC

Cache-Based Packet Forwarding

In the previous blog post in this series I described how convoluted routing table lookups could become when you have to deal with numerous layers of indirection (BGP prefix ⇨ BGP next hop ⇨ IGP next hop ⇨ link bundle ⇨ outgoing interface). Modern high-end hardware can deal with the resulting complexity; decades ago we had to use the router CPU to do multiple (potentially recursive) lookups in the IP routing table (there was no FIB at that time).
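To make the chain of indirections concrete, here is a minimal Python sketch of a recursive lookup. The `ROUTES` and `BUNDLES` tables, the prefixes, and the hash-based bundle member selection are all hypothetical; a real routing table has many more entry types.

```python
import ipaddress

# Hypothetical routing table: prefix -> (route source, next hop or interface)
ROUTES = {
    "203.0.113.0/24": ("bgp", "192.0.2.1"),    # BGP prefix -> BGP next hop
    "192.0.2.1/32": ("igp", "10.0.0.2"),       # BGP next hop -> IGP next hop
    "10.0.0.0/30": ("connected", "bundle1"),   # IGP next hop -> link bundle
}

# Hypothetical link bundle membership: bundle -> physical interfaces
BUNDLES = {"bundle1": ["eth1", "eth2"]}


def longest_match(address):
    """Return the entry for the most specific matching prefix, or None."""
    addr = ipaddress.ip_address(address)
    best = None
    for prefix, entry in ROUTES.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, entry)
    return best[1] if best else None


def resolve(destination, flow_hash=0, max_depth=8):
    """Follow next hops until reaching a directly connected network.

    Returns (adjacent next hop, outgoing interface), or None if no route.
    """
    target = destination
    for _ in range(max_depth):
        entry = longest_match(target)
        if entry is None:
            return None
        kind, value = entry
        if kind == "connected":
            # Pick one member of the bundle based on a per-flow hash
            members = BUNDLES.get(value, [value])
            return target, members[flow_hash % len(members)]
        target = value  # recurse: look up the next hop itself
    raise RuntimeError("next-hop recursion too deep")
```

With these tables, `resolve("203.0.113.10")` needs three longest-prefix lookups before it arrives at a next hop and a physical interface. That per-packet work is what a CPU-based router had to repeat.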

Network devices were always pushed to the bleeding edge of performance, and smart programmers always tried to optimize the CPU-intensive processes. One of the obvious packet forwarding optimizations relied on the fact that within a short timeframe most packets have to be forwarded to a small set of destinations. Welcome to the wonderful world of cache-based forwarding.
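A minimal sketch of that optimization, assuming a per-destination cache with least-recently-used eviction placed in front of an expensive lookup function. The `DestinationCache` class and its counters are hypothetical and only meant to show the mechanism.

```python
from collections import OrderedDict


class DestinationCache:
    """Hypothetical per-destination forwarding cache with LRU eviction."""

    def __init__(self, slow_lookup, max_entries=1024):
        self.slow_lookup = slow_lookup  # full (recursive) routing table lookup
        self.max_entries = max_entries
        self.entries = OrderedDict()    # destination -> forwarding result
        self.hits = 0
        self.misses = 0

    def lookup(self, destination):
        if destination in self.entries:
            self.hits += 1
            self.entries.move_to_end(destination)  # mark as recently used
            return self.entries[destination]
        self.misses += 1
        result = self.slow_lookup(destination)
        self.entries[destination] = result
        if len(self.entries) > self.max_entries:
            self.entries.popitem(last=False)       # drop least recently used
        return result

    def flush(self):
        """Cached results go stale whenever the routing table changes."""
        self.entries.clear()
```

The cache pays off only while the hit ratio stays high. Traffic spread across many destinations, or frequent routing changes that force a `flush()`, push most packets back onto the slow path.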

Packet Forwarding 101: Header Lookups

Whenever someone asks me about LISP, I answer, “it’s a nice idea, but cache-based forwarding never worked well.” Oldtimers familiar with the spectacular failures of fast switching and various incarnations of flow switching usually need no further explanation. Unfortunately, that lore is quickly dying out, so let’s start with the fundamentals: how does packet forwarding work?

Packet forwarding used by bridges and routers (or Layer-2/3 switches if you believe in marketing terminology) is just a particular case of statistical multiplexing – a mechanism where many communication streams share the network resources by slicing the data into packets that are sent across the network. The packets are usually forwarded independently; every one of them must contain enough information to be propagated by each intermediate device it encounters on its way across the network.

Video: Network Layer Addressing

After a brief excursion into ancient data-link-layer addressing ideas (that you can still find in numerous systems today) and LAN addressing, it’s time to focus on network-layer addressing, starting with “can we design protocols without network-layer addresses” (unfortunately, YES) and “should a network-layer address be tied to a node or to an interface” (as always, it depends).

For more details, watch the Network Layer Addressing video (part of the How Networks Really Work webinar).

Lesson Learned: The Way Forward

I tried to wrap up my Lessons Learned presentation on a positive note with some of the things you can do to avoid the traps and pitfalls I encountered in almost four decades of working in the networking industry:

- Get invited to architecture and design meetings when a new application project starts.

- Always try to figure out what the underlying actual business needs are.

- Just because you can doesn’t mean that you should.

- Keep it as simple as possible, but no simpler.

- Work with your peers and explain how networking works and why you face certain limitations.

- Humans are not perfect – automate as much as it makes sense, but no more.

Video: Local Area Network Addressing

In the Local Area Network Addressing video (part of the How Networks Really Work webinar) I covered numerous obscure LAN addressing details including:

- Why there’s no layer-2 address in Fibre Channel frames (because FC is routing, not bridging);

- Why the multicast bit is the lowest bit (0x01) in the first byte on Ethernet but the highest bit (0x80) on Token Ring or FDDI;

- How some NIC manufacturers never got the memo on what OUI really means.
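The multicast bit detail in the list above is easier to see in code. The sketch below assumes the commonly cited explanation: the group bit is the first address bit on the wire in all three technologies, but Ethernet transmits each byte least-significant bit first, while Token Ring and FDDI transmit it most-significant bit first. The function names are hypothetical.

```python
def reverse_bits(byte):
    """Reverse the bit order within a single byte (0x01 <-> 0x80)."""
    return int(f"{byte:08b}"[::-1], 2)


def to_noncanonical(mac):
    """Convert between Ethernet (canonical) and Token Ring/FDDI byte format.

    The conversion is its own inverse: applying it twice returns the input.
    """
    return bytes(reverse_bits(b) for b in mac)


def is_group_canonical(mac):
    """Ethernet notation: group bit is the lowest bit of the first byte."""
    return bool(mac[0] & 0x01)


def is_group_noncanonical(mac):
    """Token Ring/FDDI notation: group bit is the highest bit of the first byte."""
    return bool(mac[0] & 0x80)


ethernet_multicast = bytes.fromhex("01005e000001")
token_ring_format = to_noncanonical(ethernet_multicast)
print(token_ring_format.hex())  # 80007a000080
```

The same address therefore looks different depending on which notation a tool uses.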

Fast Failover: Marketing and Reality

I wanted to cover fast failover (at least the basics and Prefix Independent Convergence – PIC) in another live session of the How Networks Really Work webinar in 2021, but unfortunately I ran out of time.

As a teaser, you might want to watch the recording of the Fast Failover: Marketing and Reality presentation I gave at the Seventh RSNOG Conference.

Lesson Learned: Some Services Are Not Worth Delivering

Here’s one of the secrets to AWS’s unprecedented scale and financial success: they quickly figured out that some services are not worth delivering. Most everyone else believes in building snowflake single-customer solutions to solve imaginary problems, effectively losing money while doing so.

Video: Early Data-Link-Layer Addressing

After briefly covering the theoretical aspects of network addressing, it’s time to pay a brief visit to the early data-link-layer addressing solutions, from one address per datagram/frame (SDLC, HDLC), through an address field that is ignored (PPP), to no address at all on P2P links (SLIP).

Feedback: Business Aspects of Networking

Every other blue moon someone asks me to do a not-so-technical presentation at an event, and being a firm believer in frugality I turn most of them into live webinar sessions collected under the Business Aspects of Networking umbrella.

At least some networking engineers find that perspective useful. Here’s what Adrian Giacometti had to say about that webinar:

Big Picture: BFD, Non-Stop Forwarding, and Graceful Restart

We have school holidays this week, so I’m reposting wonderful comments that would otherwise be lost somewhere in the page margins. Today: Erik Auerswald’s excellent summary of BFD, NSF, and GR.

I’d suggest stepping back a bit and considering the bigger picture: What is BFD good for? What is GR/NSF/NSR/SSO good for?

BFD and GR/NSF/NSR/SSO have different goals: one enables quick fail over, the other prevents fail over. Combining both promises to be interesting.

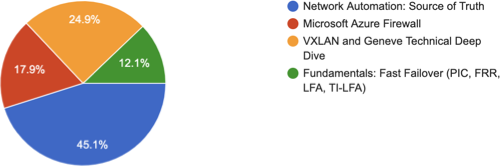

Feedback: How Networks Really Work

A few weeks ago I asked my subscribers which webinar they’d like to see in November (thanks a million to everyone who replied!). Not surprisingly, network automation got the top spot, but I was a bit sad to see my long-term pet project at the bottom of the list:

Graceful Restart and BFD

The whole High Availability Switching series started with a question along the lines of “does it make sense to run BFD together with Graceful Restart”. After Non-Stop Forwarding 101, Graceful Restart 101, and Graceful Restart and Convergence Speed we finally have enough information to answer that question.

TL&DR: Most probably not.

A more nuanced answer depends (as always) on a gazillion implementation details.