Going All Virtual with Virtual WAN Edge Routers

If you’re building a greenfield private cloud, you SHOULD consider using virtual network services appliances (firewalls, load balancers, IPS/IDS systems), removing the need for additional hard-to-scale hardware devices. But can we go a step further? Can we replace all networking hardware with x86 servers and virtual appliances?

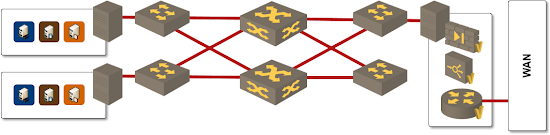

Of course we can’t. Server-based L2/L3 switching is still way too expensive; pizza-box-sized ToR switches are the way to go in small and medium private clouds (I don’t think you’ll find many private clouds that need more than the 2 Tbps of bandwidth that a pair of 10GE ToR switches from almost any vendor gives you) … but what about WAN edge routers?
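To put the 2 Tbps figure in perspective, here’s a back-of-the-envelope sketch. It assumes a hypothetical ToR switch with 48 x 10GE server ports and 4 x 40GE uplinks (not any specific vendor model), and counts bandwidth in both directions, the way switch datasheets usually quote it.

```python
# Rough ToR capacity check. The port layout is a hypothetical example,
# not a specific vendor model.
def switch_capacity_gbps(ports_10g: int, ports_40g: int, full_duplex: bool = True) -> int:
    """Aggregate switching capacity in Gbps (datasheets usually count both directions)."""
    one_way = ports_10g * 10 + ports_40g * 40
    return one_way * 2 if full_duplex else one_way


per_switch = switch_capacity_gbps(ports_10g=48, ports_40g=4)
pair = 2 * per_switch
print(f"per switch: {per_switch / 1000:.2f} Tbps, pair: {pair / 1000:.2f} Tbps")
```

With that assumed port layout, a pair of switches lands at roughly 2.5 Tbps, in the same ballpark as the 2 Tbps estimate.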

If your data center uses 1Gbps uplinks, and you’re a Cisco shop, I can’t see a good reason not to consider Cloud Services Router (CSR 1000V). You can buy a 1Gbps license with the latest software version and I’m positive you’ll get 1Gbps out of it unless you have heavy encryption needs.

Is that not enough? You might have to wait for the upcoming Vyatta 5600 vRouter that uses Intel DPDK and supposedly squeezes 10Gbps out of a single Xeon core.
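Why is 10Gbps per core a bold claim? A worst-case calculation helps: at 10GE line rate with 64-byte frames, every frame also occupies 20 bytes of preamble, start-of-frame delimiter and inter-frame gap on the wire. The sketch below assumes a 3.0 GHz core (a hypothetical value) and ignores larger packets, which are much easier to forward at line rate.

```python
# Back-of-the-envelope budget for forwarding 10 Gbps of minimum-size frames
# on one CPU core. The 3.0 GHz clock rate is an assumed example value.
ETHERNET_OVERHEAD_BYTES = 20  # preamble + SFD (8 bytes) + inter-frame gap (12 bytes)


def packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Line-rate packet rate for a given frame size (frame size includes the FCS)."""
    return link_bps / ((frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8)


def cycles_per_packet(core_hz: float, pps: float) -> float:
    """CPU cycles available per packet if one core handles the whole stream."""
    return core_hz / pps


pps = packets_per_second(10e9, 64)
budget = cycles_per_packet(3.0e9, pps)
print(f"{pps / 1e6:.2f} Mpps, about {budget:.0f} CPU cycles per packet")
```

That works out to roughly 14.88 million packets per second and about 200 cycles per packet at the assumed clock rate. That budget is why a kernel-bypass packet-processing framework like DPDK matters here.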

Connecting to the outside world

Most servers have a spare 1Gb port or two. Plug Internet uplinks into those ports and connect the uplink NIC straight to the router VM using hypervisor bypass (PCI passthrough).
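The post doesn’t name a hypervisor. Assuming a Linux/KVM host managed by libvirt (an assumption), the uplink NIC is handed to the router VM with a `<hostdev>` element in the VM’s domain XML. This minimal sketch builds that element from the NIC’s PCI address; `0000:03:00.0` is a hypothetical address (`lspci -D` shows the real one), and `hostdev_xml` is a hypothetical helper name.

```python
import re
import xml.etree.ElementTree as ET

# Matches a Linux PCI address such as "0000:03:00.0" (domain:bus:slot.function).
PCI_ADDR = re.compile(r"^([0-9a-fA-F]{4}):([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-7])$")


def hostdev_xml(pci_address: str) -> str:
    """Build a libvirt <hostdev> element that passes a PCI NIC through to a VM."""
    m = PCI_ADDR.match(pci_address)
    if m is None:
        raise ValueError(f"not a PCI address: {pci_address!r}")
    domain, bus, slot, function = m.groups()
    # managed="yes" asks libvirt to detach the NIC from the host before the VM
    # starts and reattach it to the host after the VM exits.
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", domain=f"0x{domain}", bus=f"0x{bus}",
                  slot=f"0x{slot}", function=f"0x{function}")
    return ET.tostring(hostdev, encoding="unicode")


print(hostdev_xml("0000:03:00.0"))  # hypothetical uplink NIC address
```

You’d then add the element to the router VM’s definition, for example with `virsh edit` or `virsh attach-device`. Other hypervisors (VMware calls it DirectPath I/O) have their own equivalents.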

I know it’s a psychologically scary idea, but is there a technical reason why this approach wouldn’t be as secure as a dedicated hardware router?
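On a Linux/KVM host (again an assumption), part of the answer is the IOMMU: with PCI passthrough the NIC performs DMA directly into the router VM’s memory, and the IOMMU is what keeps that DMA confined to the VM. Linux exposes the isolation boundary as IOMMU groups under `/sys/bus/pci/devices/<address>/iommu_group`, and VFIO generally requires every device in a group to be handed over together. This minimal sketch (hypothetical helper name, hypothetical PCI address) checks whether the uplink NIC sits alone in its group.

```python
import os
from typing import List, Optional


def iommu_group_peers(pci_address: str, sysfs_root: str = "/sys") -> Optional[List[str]]:
    """Return the other PCI devices sharing the NIC's IOMMU group.

    Returns None if the device or its iommu_group link is missing, for
    example because the IOMMU is disabled on this host.
    """
    group_link = os.path.join(sysfs_root, "bus", "pci", "devices", pci_address, "iommu_group")
    if not os.path.exists(group_link):
        return None
    # Each entry in <group>/devices is a PCI address belonging to the group.
    members = os.listdir(os.path.join(group_link, "devices"))
    return sorted(dev for dev in members if dev != pci_address)


peers = iommu_group_peers("0000:03:00.0")  # hypothetical uplink NIC address
if peers is None:
    print("no IOMMU group found (IOMMU disabled or device missing)")
elif peers:
    print("NIC shares its IOMMU group with:", ", ".join(peers))
else:
    print("NIC is alone in its IOMMU group")
```

A NIC that shares its group with unrelated devices (or a host with no IOMMU groups at all) is a hint that the isolation isn’t what you’d expect from a dedicated router.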

Why Would You Do It?

There are a few reasons to go down the all-virtual path:

- Reduced sparing/maintenance requirements – you need hardware maintenance for ToR switches and servers, not for dedicated hardware appliances or routers;

- Increased flexibility – you can deploy the virtual network appliances or routers on any server. It’s also easier to replace a failed server (you probably have a spare server already running, don’t you?) than it is to replace a failed router … and there’s almost no racking-and-stacking if a blade server fails;

- If you believe in distributed storage solutions (Nutanix or VMware VSAN), you need only two hardware components in your data center: servers with local storage and ToR switches. How cool is that?

I’m positive you’ll find a few other reasons. Share them in the comments.

Need More Information?

Check out my cloud infrastructure resources and register for the Designing Private Cloud Infrastructure webinar.

I can also help you design a similar solution through one or more virtual meetings or an on-site workshop.

Zero watts is a lot cheaper than any Intel server.

So far, it has been better for me to rely on my network team to maintain network hardware than to transfer responsibility to another team.

Steven Iveson

You _should_ consider a dedicated cluster (if the size of the cloud warrants it) because there's a significant difference between network services workloads and traditional server workloads, potentially requiring a different virtualization ratio or server configuration.

Now whether it can be done on x86 hardware or not really just comes down to bandwidth needs.

There are some interesting devices out there now, like the Pluribus server/switch thing, which kind of blurs the lines by integrating a wire-speed backplane/switch with actual server hardware and making it open and extensible. Could be a great NFV platform.

Steven Iveson

If you can have an honest discussion (w/o the turf wars), our datacenters would shrink dramatically along with the operational overhead.

The chance of a large-scale common-mode failure is too big a risk for many organisations that rely on the network as a critical service.

Imagine the virtualised edge router or edge firewall encountering a hypervisor bug that would bring down not just the virtualised servers but the whole network.

I can think of several such bugs: VMware's e1000 crash under high load, VMXNET3's inability to initiate PPTP tunnels, and the Win2k12R2 purple screen of death (which doesn't mean it won't happen with other new network operating systems), to name just a few.

One thing I haven't considered is the security consequence of the PCI-passthrough method. Does the hypervisor still have some kind of wedge in there? We should definitely talk about it!