Long-Distance vMotion, Stretched HA Clusters and Business Needs

During a recent vMotion-over-VXLAN discussion Chris Saunders made a very good point: “Folks should be asking a better question, like: Can I use VXLAN and vMotion together to meet my business requirements.”

Yeah, it’s always worth exploring the actual business needs.

Based on a true story ...

A while ago I was sitting in a roomful of extremely intelligent engineers working for a large data center company. Unfortunately they had been listening to the wrong group of virtualization consultants and ended up with the picture-perfect disaster-in-waiting: two data centers bridged together to support a stretched VMware HA cluster.

During the discussion I tried not to be the prejudiced, grumpy L3 guru that I’m known to be (at least in vendor marketing circles) and focused on figuring out the actual business needs that triggered that oh-so-popular design.

Q: “So what loads would you typically vMotion between the data centers?”

A: “We don’t use long-distance vMotion, that wouldn’t work well… the VM would have to access the disk data residing on the LUN in the other data center”

Q: “So why do you have a stretched HA cluster?”

A: “It’s purely for disaster recovery – when one data center fails, the VMs of the customers (or applications) that pay for disaster recovery get restarted in the second data center.”

Q: “And how do you prevent HA or DRS from accidentally moving a VM to the other data center?”

A: “Let’s see…”

Q: “OK, so when the first data center fails, everything gets automatically restarted in the second data center. Was that your goal?”

A: (from the storage admin) “Actually, I have to change the primary disk array first – I wouldn’t want a split-brain situation to destroy all the data, so the disk array failover is controlled by the operator”

Q: “Let’s see – you have a network infrastructure that exposes you to significant risk, and all you wanted to have is the ability to restart the VMs of some customers in the other data center using an operator-triggered process?”

A: “Actually, yes”

Q: “And is the move back to the original data center automatic after it gets back online?”

A: “No, that would be too risky”

Q: “So the VMs in subnet X would never be active in both data centers at the same time?”

A: “No.”

Q: “And so it would be OK if you moved subnet X from DC-A to DC-B during the disaster recovery process?”

A: “Yeah, that could work…”

Q: “OK, one more question – how quickly do you have to perform the disaster recovery?”

A: “Well, we’d have to look into our contracts ...”

Q: “But what would the usual contractual time be?”

A: “Somewhere around four hours”

Q: “Let’s summarize – you need a disaster recovery process that has to complete in four hours and is triggered manually. Why don’t you reconfigure the data center switches at the same time to move the IP subnets from the failed data center to the backup data center during the disaster recovery process? After all, you have switches from vendor (C|J|A|D|...) that could be reconfigured from a DR script using NETCONF.”

A: (from the network admin) “Yeah, that’s probably a good idea.”
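To make the "reconfigure the switches from a DR script" idea a bit more concrete, here is a minimal sketch of what such a script could look like. It is an illustration under several assumptions, not something taken from the discussion above: it assumes the switches expose the standard IETF `ietf-interfaces` and `ietf-ip` YANG models over NETCONF (many platforms use vendor-specific models instead), that the gateway interface for each subnet is already pre-provisioned in the backup data center, and that the third-party `ncclient` library is used to talk to the devices. The interface names, host names and function names are all hypothetical.

```python
import ipaddress
import xml.etree.ElementTree as ET

# XML namespaces: NETCONF base (RFC 6241), ietf-interfaces, ietf-ip.
NC = "urn:ietf:params:xml:ns:netconf:base:1.0"
IF = "urn:ietf:params:xml:ns:yang:ietf-interfaces"
IP = "urn:ietf:params:xml:ns:yang:ietf-ip"


def gateway_payload(interface: str, gateway: str, enabled: bool) -> str:
    """Build an edit-config body that enables or disables one subnet gateway.

    `gateway` is "address/prefix-length", for example "192.0.2.1/24".
    Assumes the interface already exists on the switch (no `type` leaf is sent).
    """
    gw = ipaddress.IPv4Interface(gateway)  # raises ValueError on bad input
    config = ET.Element(f"{{{NC}}}config")
    interfaces = ET.SubElement(config, f"{{{IF}}}interfaces")
    intf = ET.SubElement(interfaces, f"{{{IF}}}interface")
    ET.SubElement(intf, f"{{{IF}}}name").text = interface
    ET.SubElement(intf, f"{{{IF}}}enabled").text = "true" if enabled else "false"
    ipv4 = ET.SubElement(intf, f"{{{IP}}}ipv4")
    address = ET.SubElement(ipv4, f"{{{IP}}}address")
    ET.SubElement(address, f"{{{IP}}}ip").text = str(gw.ip)
    ET.SubElement(address, f"{{{IP}}}prefix-length").text = str(gw.network.prefixlen)
    return ET.tostring(config, encoding="unicode")


def failover_plan(subnets, failed_switch, backup_switch):
    """Return ordered (switch, payload) steps for moving subnets to the backup site.

    Gateways are shut in the failed data center first (best effort, the site
    may be unreachable) so a subnet is never active in both data centers.
    """
    steps = []
    for interface, gateway in subnets:
        steps.append((failed_switch, gateway_payload(interface, gateway, enabled=False)))
    for interface, gateway in subnets:
        steps.append((backup_switch, gateway_payload(interface, gateway, enabled=True)))
    return steps


def push(host: str, payload: str, username: str, password: str) -> None:
    """Send one payload to a switch. Requires the third-party ncclient package.

    Assumption: the device accepts edits to the running datastore; platforms
    with a candidate datastore need target="candidate" followed by a commit.
    """
    from ncclient import manager  # imported lazily so the planner runs without it

    with manager.connect(host=host, port=830, username=username, password=password) as m:
        m.edit_config(target="running", config=payload)


if __name__ == "__main__":
    # Hypothetical names: one VLAN interface acting as the gateway for subnet X.
    plan = failover_plan([("Vlan100", "192.0.2.1/24")], "sw1.dc-a.example", "sw1.dc-b.example")
    for switch, payload in plan:
        print(switch, payload)
```

The planning step is deliberately separate from the `push` step, so an operator can review the generated changes before anything is sent, which fits a DR process that is triggered manually anyway.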

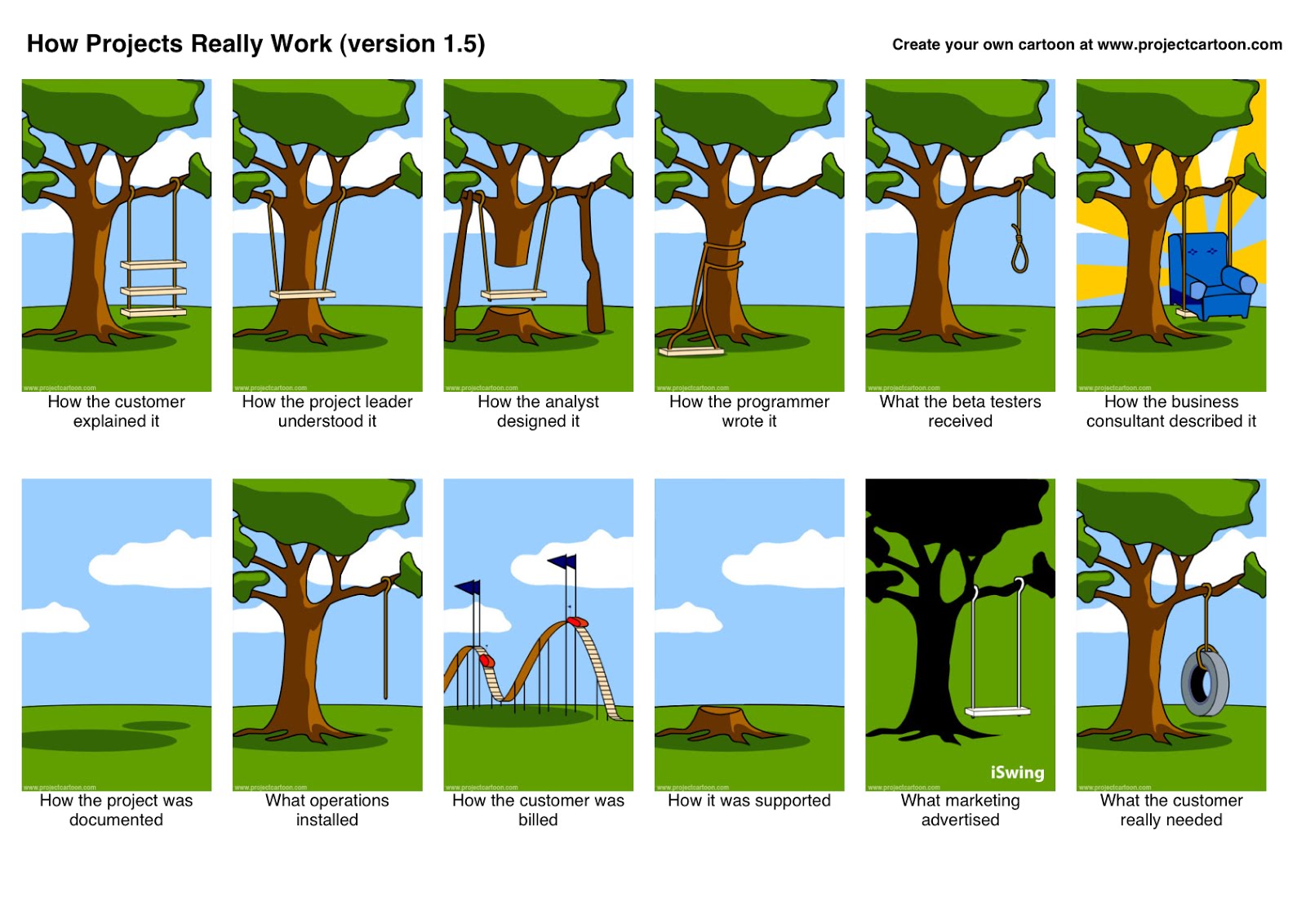

Does this picture look familiar (particularly the business consultant part)?

Source: www.projectcartoon.com

In such a situation, I think the only way is to focus not on automatic switching but on manual DR procedures (as Ivan described). The probability of a DC failure is low. Such situations don't happen as often as, say, a single device failure, so you can prepare clear procedures for manually switching to the second DC.

Also, if you must have L2 connectivity and can't convince the PM or sysadmins to use L3 or manual procedures, please read this document: http://www.juniper.net/us/en/local/pdf/implementation-guides/8010050-en.pdf. Please focus especially on Figure 7. For me it's the one and only way to implement L3 connectivity for a stretched L2 DC.

To be clear, as a network engineer I prefer clean L3 connectivity :)

Say you have a primary data center and a secondary data center a mile apart with plenty of dark fiber between the two.

It would be great if we could do a layer 3 interconnect, but is a layer 2 DCI still a bad idea here?

When exactly does a layer 2 DCI stop being acceptable?

If you think you need more than one failure domain (or availability zone), then you should be very careful with bridging (it usually makes sense to have more than one L2 domain within a single facility).

It solves the entire issue of spanning sites over the WAN and syncing massive "datasets", as the only state that gets synced is the state of the SLB fronting the hosts on the site.