… updated on Monday, May 20, 2024 17:58 +0200

VM-aware Networking Improves IaaS Cloud Scalability

In the “VMware vSwitch – the baseline of simplicity” post I described the simple layer-2 switches offered by most hypervisor vendors and the scalability challenges you face when trying to build large-scale solutions with them. You can solve at least one of the scalability issues: VM-aware networking solutions available from most data center networking vendors dynamically adjust the list of VLANs on server-to-switch links.

What’s the Problem?

Let’s briefly revisit the problem: vSwitches have almost no control plane. Thus, a vSwitch cannot tell the adjacent physical switches what VLANs it needs to support VMs connected to it. Lacking that information, you have to configure a wide range of VLANs on the server-facing ports on the physical switch to allow the free movement of VMs between the servers (hypervisor hosts).

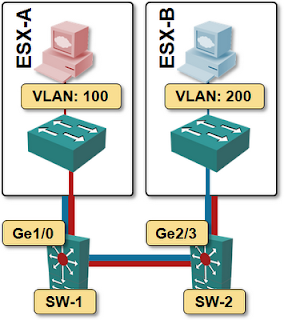

The following diagram illustrates the problem:

- Two tenants are running in a vSphere cluster (ESX-A and ESX-B).

- Red tenant is using VLAN 100; Blue tenant is using VLAN 200.

- With the VM distribution shown in the diagram, ESX-A needs access to VLAN 100; ESX-B needs access to VLAN 200.

- As the networking gear cannot predict how the VMs will move between vSphere hosts, you have to configure both VLANs on all server-facing ports (Ge1/0 on SW-1 and Ge2/3 on SW-2) as well as on all inter-switch links.

The list of VLANs configured on the server-facing ports of the access-layer switches thus commonly includes completely unnecessary VLANs.
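The mismatch described above can be sketched in a few lines of Python. This is only an illustration: the VM names are made up, and the assumption that ESX-A hangs off SW-1 Ge1/0 while ESX-B hangs off SW-2 Ge2/3 is mine (the text names the ports but not which host uses which).

```python
# Hypothetical model of the diagram; VM names and the host-to-port
# mapping are assumptions made for illustration only.
vm_placement = {
    "ESX-A": [("red-vm-1", 100)],   # (VM name, VLAN)
    "ESX-B": [("blue-vm-1", 200)],
}
host_uplink = {
    "ESX-A": ("SW-1", "Ge1/0"),
    "ESX-B": ("SW-2", "Ge2/3"),
}
# Both VLANs are configured on every server-facing port.
configured_vlans = {
    ("SW-1", "Ge1/0"): {100, 200},
    ("SW-2", "Ge2/3"): {100, 200},
}


def unnecessary_vlans(vm_placement, host_uplink, configured_vlans):
    """Return, per switch port, the configured VLANs no local VM uses."""
    result = {}
    for host, port in host_uplink.items():
        needed = {vlan for _vm, vlan in vm_placement.get(host, [])}
        result[port] = configured_vlans[port] - needed
    return result


extra = unnecessary_vlans(vm_placement, host_uplink, configured_vlans)
```

With this placement, each server-facing port carries exactly one VLAN its host does not need.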

Every VLAN is configured on every server-facing port

The wide range of VLANs configured on all server-facing ports causes indiscriminate flooding of broadcasts, multicasts and unknown unicasts to all the servers, even when the servers don’t need those packets (because the VLAN on which they’re flooded is not active on the server). The flooded packets increase the utilization of the server uplinks; processing (and dropping) them also increases the server CPU load.

The “Standard” Solution

VSI Discovery Protocol (VDP), part of Edge Virtual Bridging (EVB, 802.1Qbg), would solve that problem, but it’s not implemented in any virtual switch. Consequently, there’s no support in the physical switches, although HP and Force10 keep promising EVB support (HP has been doing so for more than a year).

The closest we’ve ever got to a shipping EVB-like product is Cisco’s VM-FEX. The Virtual Ethernet Module (VEM) running within the vSphere kernel uses a protocol similar to VDP to communicate its VLAN/interface needs to the UCS manager.

More people have seen Nessie than an EVB-compliant vSwitch

The Real-World Workarounds

Faced with the lack of EVB support (or any other similar control-plane protocol) in the vSwitches, the networking vendors implemented a variety of kludges. Some of them were implemented in the access-layer switches (Arista’s VM Tracer, Force10’s HyperLink, Brocade’s vCenter Integration), others in network management software (Juniper’s Junos Space Virtual Control and ALU’s OmniVista Virtual Machine Monitor).

In all cases, a VM-aware solution must first discover the network topology. Almost all solutions send CDP packets from access-layer switches and use CDP[1] listeners in the vSphere hosts to discover host-to-switch connectivity. The CDP information gathered by vSphere hosts is usually extracted from vCenter using VMware’s API (yes, you typically have to talk to the vCenter if you want to communicate with the VMware environment).
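A minimal sketch of the discovery step, assuming the CDP data has already been pulled out of vCenter into simple records. The `CdpHint` record, its field names, and the sample values are hypothetical and do not correspond to any VMware API object.

```python
from typing import NamedTuple


class CdpHint(NamedTuple):
    """One CDP neighbor record as seen by a hypervisor host (hypothetical shape)."""
    host: str    # vSphere host that heard the CDP packet
    nic: str     # physical NIC on that host
    switch: str  # device ID advertised by the access-layer switch
    port: str    # port ID advertised by the access-layer switch


def build_topology(hints):
    """Map each (switch, port) to the (host, nic) connected to it."""
    topology = {}
    for h in hints:
        key = (h.switch, h.port)
        if key in topology and topology[key] != (h.host, h.nic):
            # Two different NICs claim the same switch port: stale or bad data.
            raise ValueError(f"conflicting CDP data for {key}")
        topology[key] = (h.host, h.nic)
    return topology


# Sample records; the NIC names are assumptions.
hints = [
    CdpHint("ESX-A", "vmnic0", "SW-1", "Ge1/0"),
    CdpHint("ESX-B", "vmnic0", "SW-2", "Ge2/3"),
]
topology = build_topology(hints)
```

The resulting map is what the later steps need: given a host, find the switch port whose VLAN list has to change.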

Have you noticed I mentioned VMware API in the previous paragraph? Because no hypervisor vendor bothered to implement a standard protocol, the networking vendors have to implement a different solution for each hypervisor. Almost all of the VM-aware solutions support vSphere/vCenter, a few vendors claim they also support Xen, KVM, or Hyper-V, and I haven’t seen anyone supporting anything beyond the big four.

After the access-layer topology has been discovered, the VM-aware solutions track VM movements between hypervisor hosts and dynamically adjust the VLAN range on access-layer switch ports. Ideally, you’d combine that with MVRP in the network core to trim the VLANs further, but only a few vendors implemented MVRP (and supposedly, only a few customers are using it). QFabric is a shining (proprietary) exception: because its architecture mandates a single ingress lookup that should result in a list of egress ports, it also performs optimum VLAN flooding.
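The tracking logic could look roughly like the sketch below. It is not how any of the vendor products named above work internally; the `VlanTracker` class, its method names, and the add-before-remove ordering are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


class VlanTracker:
    """Track VM placement and derive per-port VLAN changes (illustrative only)."""

    def __init__(self, host_port):
        self.host_port = dict(host_port)   # host -> (switch, port)
        self.vm_location = {}              # vm -> (host, vlan)
        self.usage = defaultdict(Counter)  # host -> {vlan: number of VMs}

    def place(self, vm, host, vlan):
        """Record a VM start or move; return the port changes to apply."""
        old = self.vm_location.get(vm)
        if old == (host, vlan):
            return []                      # nothing changed
        changes = []
        # Add the VLAN on the destination port first so the VM never
        # lands on a port that does not carry its VLAN yet.
        if self.usage[host][vlan] == 0:
            changes.append(("add", self.host_port[host], vlan))
        self.usage[host][vlan] += 1
        self.vm_location[vm] = (host, vlan)
        # Remove the VLAN from the source port only when its last VM left.
        if old is not None:
            old_host, old_vlan = old
            self.usage[old_host][old_vlan] -= 1
            if self.usage[old_host][old_vlan] == 0:
                changes.append(("remove", self.host_port[old_host], old_vlan))
        return changes


tracker = VlanTracker({"ESX-A": ("SW-1", "Ge1/0"), "ESX-B": ("SW-2", "Ge2/3")})
start_red = tracker.place("red-vm-1", "ESX-A", 100)
start_blue = tracker.place("blue-vm-1", "ESX-B", 200)
move_red = tracker.place("red-vm-1", "ESX-B", 100)
```

In a real deployment the returned change list would be turned into switch configuration commands; that part is deliberately left out here.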

Does It Matter?

Without VM-aware networking, you must configure every VM-supporting VLAN on every server-facing port, reducing the whole data center network to a single broadcast domain (effectively a single VLAN from the scalability perspective). If your data center has just a few large VLANs, you probably don’t care (most hypervisor hosts have to see most of the flooded VLAN traffic anyway); if you have a large number of small VLANs, VM-aware networking makes perfect sense.
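To get a feel for the difference between the two cases, here is a back-of-the-envelope model. It assumes every VM lands on a uniformly random server, independently of all other VMs, and the server, VLAN, and VM counts are invented for illustration; real placement is rarely that random.

```python
def active_vlan_fraction(servers: int, vms_per_vlan: int) -> float:
    """Probability that a given server hosts at least one VM of a given VLAN,
    assuming each VM is placed on a uniformly random server."""
    return 1.0 - (1.0 - 1.0 / servers) ** vms_per_vlan


def flooded_vlans(servers: int, vlans: int, vms_per_vlan: int):
    """Return (VLANs flooded to a server without pruning,
    expected VLANs flooded to a server with per-port pruning)."""
    return vlans, vlans * active_vlan_fraction(servers, vms_per_vlan)


# Hypothetical scenarios: a few large VLANs versus many small ones.
few_large = flooded_vlans(servers=100, vlans=4, vms_per_vlan=500)
many_small = flooded_vlans(servers=100, vlans=1000, vms_per_vlan=10)

for name, (unpruned, pruned) in (("few large", few_large), ("many small", many_small)):
    print(f"{name}: {unpruned} VLANs unpruned, about {pruned:.1f} pruned")
```

Under these assumptions, pruning changes almost nothing in the first scenario (nearly every server needs every VLAN), while in the second each server needs only about a tenth of the configured VLANs.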

Using the rough estimates from RFC 5556 (section 2.6), implementing VM-aware networking moves us from around 1,000 end-hosts in a single bridged LAN to 100,000 end-hosts inside 1,000 VLANs. While I wouldn’t run 100,000 VMs in a purely bridged environment, the scalability improvements you can gain with VM-aware networking are definitely worth the investment.

[1] Sadly, that’s not a typo. VMware included a vendor-proprietary protocol in its low-cost license; you must pay extra for a standard protocol (LLDP).

Perhaps I'm missing something, but it seems that this problem has been long solved. Why reinvent this wheel? We had open standard VLAN pruning on CatOS 5.4, after all.

"brought to you by the same people who won't run LACP or even MAC-learning bridges"

...oh. Never mind.

The main problem in vSwitch-pSwitch integration is "how do I guess which VLANs to configure on the pSwitch access ports?" and there's no ideal solution for that (because vSwitches keep mum about their needs). After you know which VLANs you need on access ports, VTP/GVRP/MVRP does the rest.

Customer X requests a range of IPs for a new program. If the program fits in existing virtual-enabled subnets, fine. If not, when the server folks start their builds, they ping me and ask me to create a port-profile if it is missing. I install the VLANs on the access switches as part of building the VLAN on the Cisco 1KV. It takes five minutes, what's the big deal? Yes, I know Cisco and VMware are trying to edge out the network engineer (and also SAN?) requirement for their builds, but doesn't anyone remember entire campuses being wiped out by VLAN auto-config protocols?

Am I right in assuming you allow the VMs for a new application (that needs a new VLAN) to be deployed only on a limited range of servers, thus limiting the scope of the newly-created VLANs? If that's the case and if the number of VLANs running on any particular server is small, it doesn't make sense to worry about the mismatch between VLANs needed by the server and VLANs configured on the access switch.

If, however, VLANs span hundreds of servers, and you have a large number of sparsely populated VLANs with VMs randomly spread all over the place, then the dynamic VLAN adjustments make sense.

Ivan

You're absolutely right that the problem is the vSwitch "keeping mum"... But it's a big leap from:

"I won't tell the pSwitch what I need"

to:

"I need SNMP access to configure pSwitch ports for what I need"

Hey, why doesn't the vSwitch just *communicate* its needs?

Will's concerns about VLAN distribution schemes wiping out data centers are well founded... But those issues happened when the whole problem (and mistake) was owned by one administrative group. Do we really want vCenter reconfiguring the physical network? How's that an improvement over a far more limited auto-provisioning scheme that we can't get right?

In the case of a VMware-based virtualisation environment, is there a point in provisioning any individual VLAN beyond the individual vSphere cluster it is serving (which is limited to 32 hosts today)? If not, then the argument is weakened, is it not?

There are probably valid use cases in scale-out environments (you don't want all instances on the same cluster), there are some maintenance scenarios where you want to evacuate a complete cluster ... in most cases it's the "keep it simple" approach. Provision all VLANs on all server-facing ports and as long as everything works you don't have to touch the switches anymore.