Edge Virtual Bridging (EVB; 802.1Qbg) eases VLAN configuration pains

Challenge: If you want to deploy virtual machines belonging to different security zones within the same physical host, you have to isolate them. VLANs are the most common approach. If you want to migrate a running VM from one host to another while preserving its user sessions, you usually have to rely on bridging. The set of VLANs needed on a trunk link between the hypervisor host and the access switch is thus unpredictable (more information in my VMware Networking Deep Dive webinar).

Solution #1 (painful): Configure all possible VLANs on the trunk link. Stretched VLANs spanning the whole data center are an ideal ingredient of a major meltdown.

Solution #2 (proprietary): Buy access switches that can download VLAN information from vCenter (example: Arista with VM Tracer).

Solution #3 (proprietary/future standard): Use Cisco UCS system with VN-Tag (precursor to 802.1Qbh). UCS manager downloads VLAN information from vCenter and applies it to dynamic virtual ports connected to vNICs.

Solution #4 (future): Use Edge Virtual Bridging

The emerging Edge Virtual Bridging (EVB; 802.1Qbg) standard addresses numerous networking-related challenges introduced by server virtualization. Today we’ll focus on EVB’s easiest component: VM provisioning and Virtual Station Interface (VSI) Discovery and Configuration Protocol (VDP).

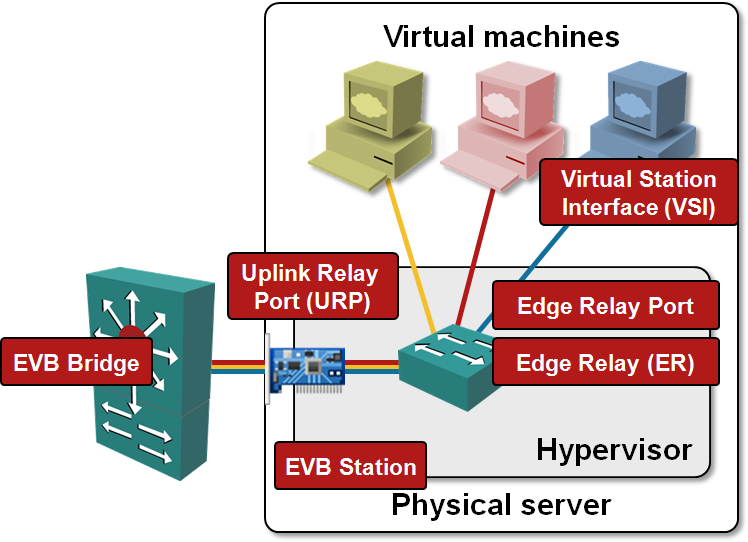

Before we can start the journey, you have to scrap all the vnetworking terminology you’ve acquired from VMware or anyone else and learn the “standard” terms. The diagram shows a familiar picture overlaid with weird acronyms.

Here’s the mapping between EVB acronyms and more familiar VMware-based terminology:

| EVB acronym | Familiar term |
|---|---|
| EVB | Edge Virtual Bridging (the 802.1Qbg standard) |
| EVB Station | EVB-capable hypervisor host |
| EVB Bridge | EVB-capable access (ToR/EoR) switch |
| Edge Relay (ER) | vSwitch |
| Uplink Relay Port (URP) | Physical NIC |
| Virtual Station Interface (VSI) | Virtual NIC |
| Edge Relay Port | Port on a vSwitch |

One of the most interesting parts of EVB is the VSI Discovery and Configuration Protocol (VDP). Using VDP, the EVB station (host) can inform the adjacent EVB Bridge (access switch) before a VM is deployed (started or moved). The host can also tell the switch which VLAN the VM needs and which MAC address (or set of MAC addresses) the VM uses. Blasting through the VLAN limits (4K VLANs allowed by 802.1Q), VDP supports a 4-byte Group ID, which can be mapped dynamically into different access VLANs on an as-needed basis (this is a recent addendum to 802.1Qbg and probably allows nice interworking with the I-SID field in PBB/SPB).
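To make the "mapped dynamically on an as-needed basis" idea a bit more tangible, here's a minimal Python sketch of how a single access switch could hand out locally significant VLANs to 4-byte Group IDs. It is purely illustrative: the `GroupVlanMapper` class, its method names and the VLAN pool are my assumptions, not something defined by 802.1Qbg or implemented by any vendor.

```python
class GroupVlanMapper:
    """Toy per-switch mapping of 4-byte Group IDs to local VLAN IDs (hypothetical)."""

    def __init__(self, vlan_pool):
        # Keep the pool sorted in descending order so pop() returns the lowest free VLAN.
        self._free = sorted(vlan_pool, reverse=True)
        self._vlan_of = {}  # group_id -> local VLAN ID
        self._users = {}    # group_id -> number of VSIs currently using it

    def attach(self, group_id):
        """A VSI in group_id appears on this switch; return the VLAN it should use."""
        if not 0 <= group_id < 2**32:
            raise ValueError("Group ID must fit in 4 bytes")
        if group_id not in self._vlan_of:
            if not self._free:
                raise RuntimeError("no free VLANs on this switch")
            # First VSI of this group on the switch: allocate a VLAN on demand.
            self._vlan_of[group_id] = self._free.pop()
            self._users[group_id] = 0
        self._users[group_id] += 1
        return self._vlan_of[group_id]

    def detach(self, group_id):
        """A VSI in group_id leaves; free the VLAN when the last one is gone."""
        self._users[group_id] -= 1
        if self._users[group_id] == 0:
            del self._users[group_id]
            self._free.append(self._vlan_of.pop(group_id))
            self._free.sort(reverse=True)
```

The point of the sketch is that the Group ID space (2^32 values) can be far larger than the number of VLANs any single switch needs at a given moment, because a VLAN is consumed only while at least one VM of that group sits behind the switch.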

VDP uses a two-step configuration process:

- Pre-associate phase, where the hypervisor host informs the access switch about its future needs. A hypervisor could use the pre-associate phase to book resources (VLANs) on the switch before a VM is dropped into its lap and reject VM migration if the adjacent switch has no resources.

- Associate phase, where the host activates the association between a VSI (virtual NIC) and a bridge port.

Obviously there’s also the De-associate message that is used to tell the EVB bridge that the resources used by the VM are no longer needed (it was moved to another host, disconnected from vSwitch/ER or shut down).
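The pre-associate / associate / de-associate sequence can be sketched as a tiny state table on the bridge side. This is a toy model under loud assumptions: `ToyEvbBridge`, its methods and the `max_vlans` limit are hypothetical names, no real VDP TLVs are encoded, and the rule that associate must follow a pre-associate is a simplification of mine, not a statement about the standard.

```python
from enum import Enum


class VsiState(Enum):
    PRE_ASSOCIATED = "pre-associated"
    ASSOCIATED = "associated"


class ToyEvbBridge:
    """Hypothetical access switch tracking VSIs announced by a hypervisor host."""

    def __init__(self, max_vlans):
        self.max_vlans = max_vlans  # assumed resource limit on this switch
        self.vsis = {}              # vsi_id -> (state, vlan, macs)

    def _vlans_in_use(self):
        return {vlan for _, vlan, _ in self.vsis.values()}

    def pre_associate(self, vsi_id, vlan, macs):
        """Book resources for a VSI; return False if the switch has none left."""
        in_use = self._vlans_in_use()
        if vlan not in in_use and len(in_use) >= self.max_vlans:
            return False  # the host could reject the VM migration at this point
        self.vsis[vsi_id] = (VsiState.PRE_ASSOCIATED, vlan, frozenset(macs))
        return True

    def associate(self, vsi_id):
        """Activate a previously booked VSI (toy rule: pre-associate comes first)."""
        if vsi_id not in self.vsis:
            return False
        _, vlan, macs = self.vsis[vsi_id]
        self.vsis[vsi_id] = (VsiState.ASSOCIATED, vlan, macs)
        return True

    def de_associate(self, vsi_id):
        """Release whatever the VSI was holding (VM moved, disconnected or shut down)."""
        self.vsis.pop(vsi_id, None)


bridge = ToyEvbBridge(max_vlans=1)
booked = bridge.pre_associate("vm-a-nic0", 10, ["00:50:56:00:00:01"])
refused = bridge.pre_associate("vm-b-nic0", 20, ["00:50:56:00:00:02"])
```

In this example the first booking succeeds and the second one is refused because the (artificially tiny) switch has run out of VLANs; after `bridge.de_associate("vm-a-nic0")` the second VM could be booked. That's the behavior that would allow a hypervisor to refuse a VM move before the VM lands on a host whose access switch can't serve it.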

Bad news

If the previous paragraphs sounded like a description of seventh heaven, here’s some bad news:

- Numerous vendors have “embraced” EVB and “expressed support”, but as far as I know, Force10 is the only one that has announced EVB support in an actual shipping product.

- VMware doesn’t seem to be interested ... and why should they be? However, most of the EVB functionality (at least the ER part and station-side VDP implementation) has to reside in the hypervisor/vSwitch.

- Open vSwitch seems to be doing a bit better; at least they’re discussing the implications of VEPA.

I hope I’m wrong. If that’s the case, please correct my errors in the comments ;)

More information

You’ll learn more about modern data center architectures in my Data Center 3.0 for Networking Engineers webinar. The details of VMware networking (including the limitations of vSwitch mentioned above) are described in the VMware Networking Deep Dive webinar. Both webinars are also part of the yearly subscription package.

VN-Tag-to-the-VM is available today in Cisco UCS via the use of VM-FEX technology - made up of Palo (P81E VIC) plus VN-Link (management plane integration between UCS Manager and vCenter). (Note: not all use of VN-Link involves Nexus 1000v. VM-FEX != Nexus 1000v)

Summary is that VM-FEX is a shipping product that directly addresses the "Challenge" at the beginning of the article. Since 802.1Qbh is based on Cisco's FEX technology and is moving along towards ratification very nicely, I think adding this 4th solution to your article would be useful to the reader.

Again, thanks for the article. Great info.

I'm a bit surprised you got to nearly the end before you even mentioned VEPA. I think all of these techniques potentially could collapse under the weight of their own complexity. Also, from a somewhat narrow networking perspective it all makes great sense, but from a broader perspective (i.e. server, storage, apps) it leaves a lot to be desired. IMHO, as virtualization densities grow, the hairpin traffic problem is going to undermine all of these efforts.

My take here: http://blog.vcider.com/2011/01/vepa/

http://www.enterprisenetworkingplanet.com/nethub/article.php/3933656

Furthermore, X8 "is expected to begin customer trials in October 2011." Futures, futures, futures :-E

http://investor.extremenetworks.com/releasedetail.cfm?ReleaseID=575708

How do you define the difference between EVB and VEPA? It's always been my understanding that EVB = the general term for everything VEPA, Port Extension, and other related technologies.